From vectors to graphs

Retrieval Augmented Generation (RAG) with LangChain

Meri Nova

Machine Learning Engineer

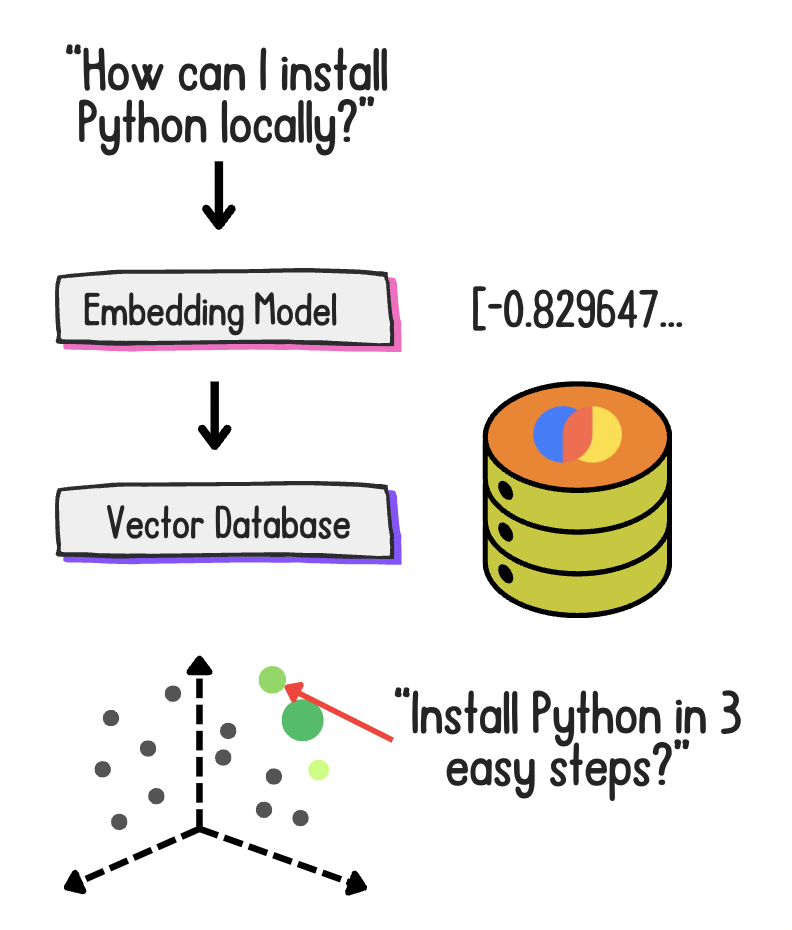

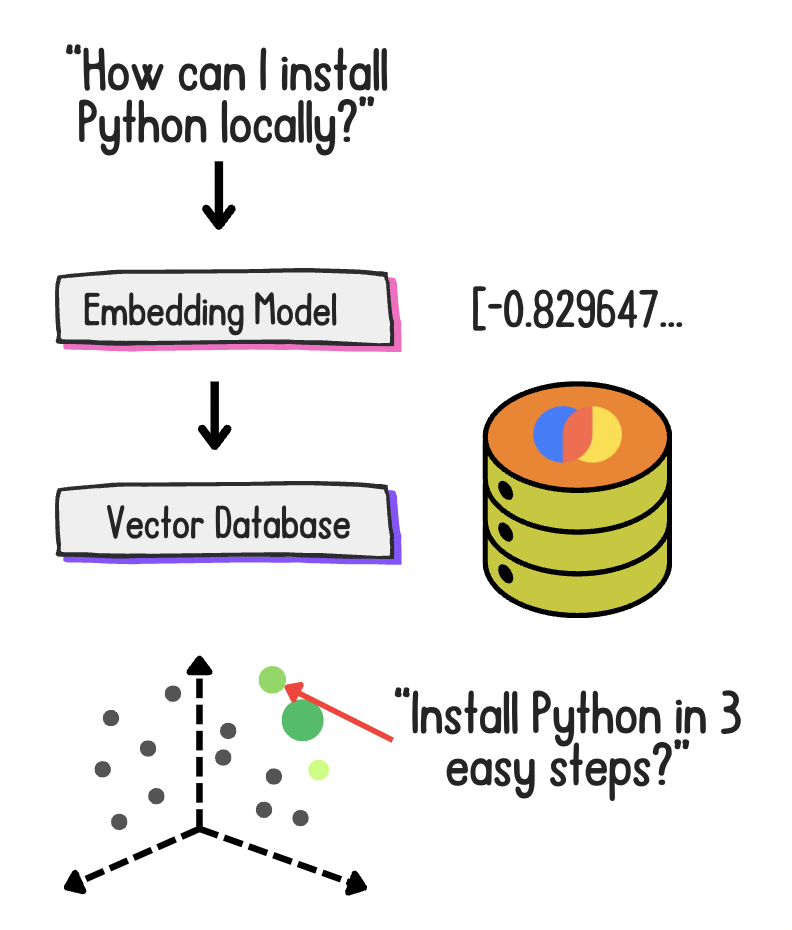

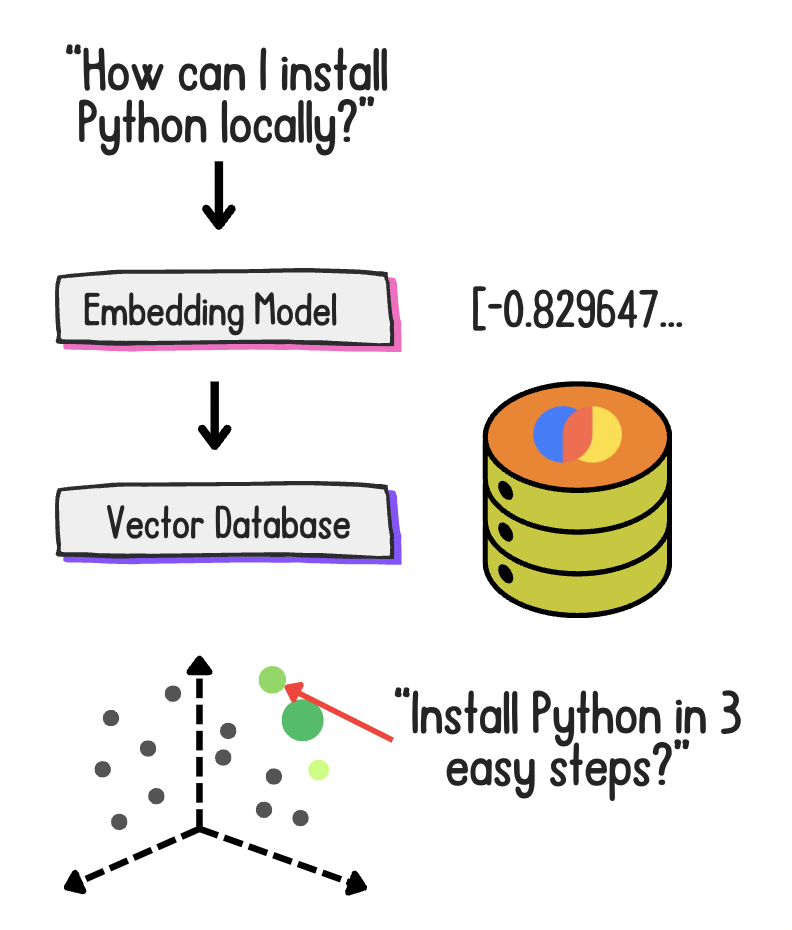

Vector RAG limitations

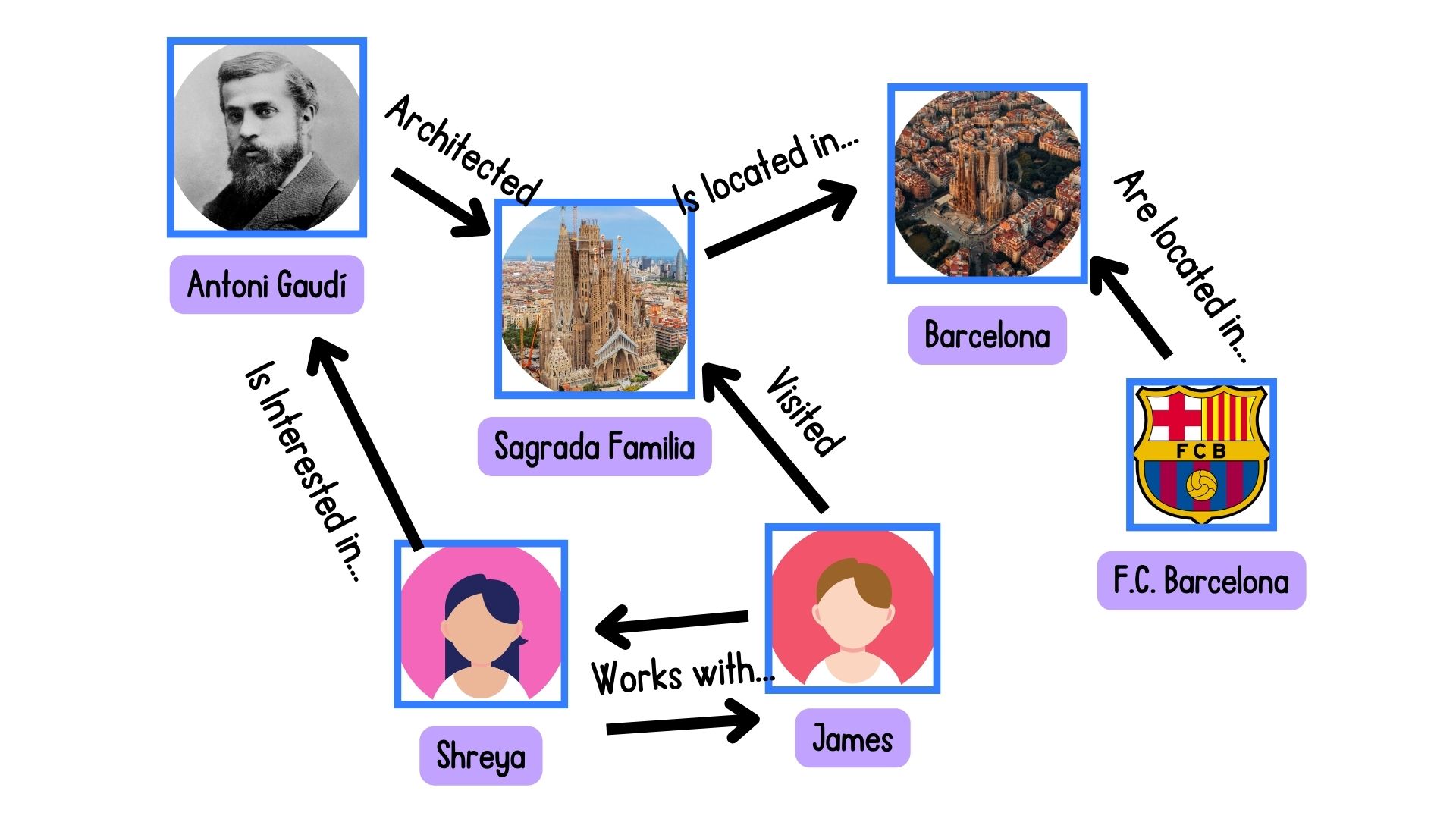

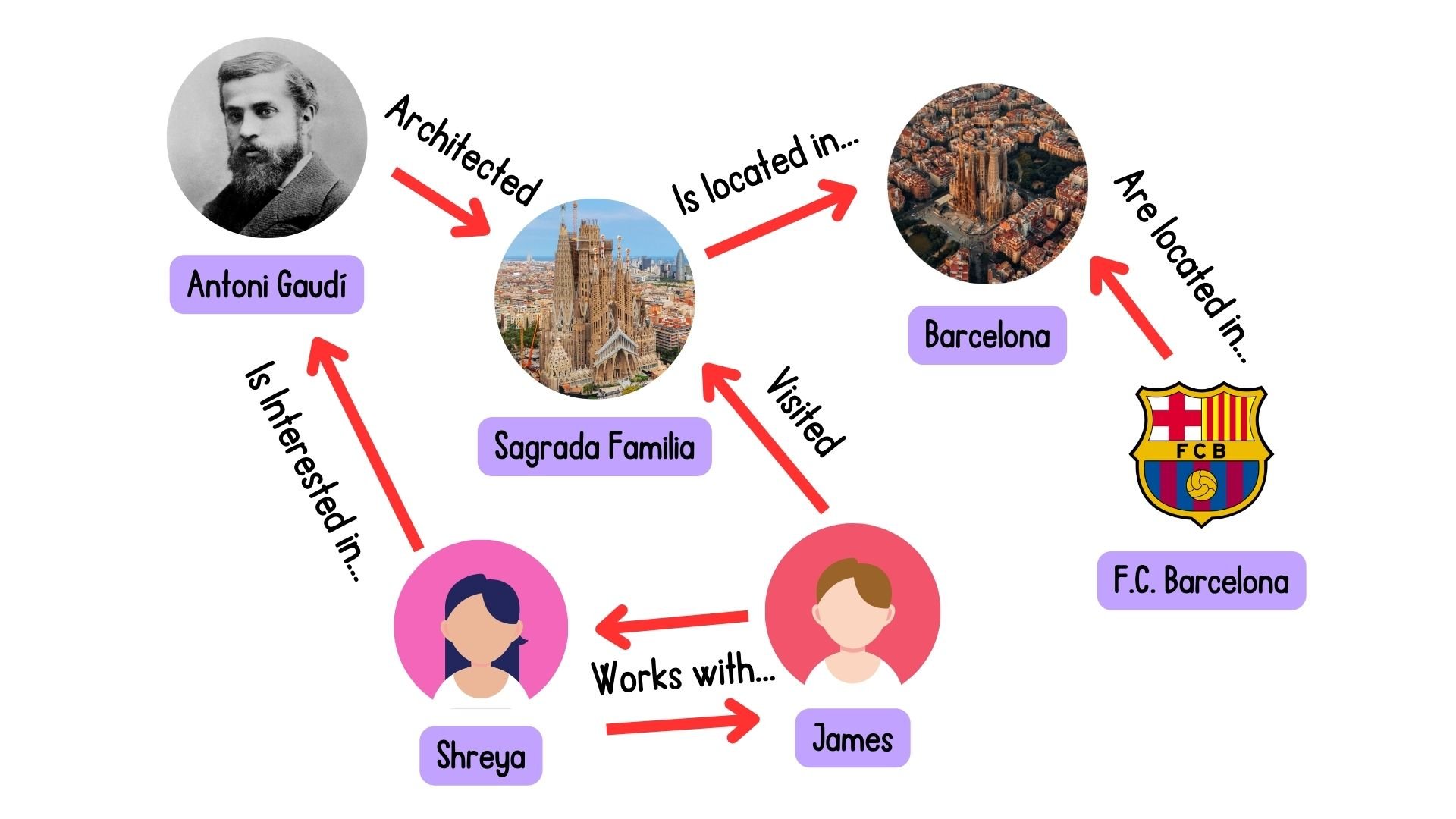

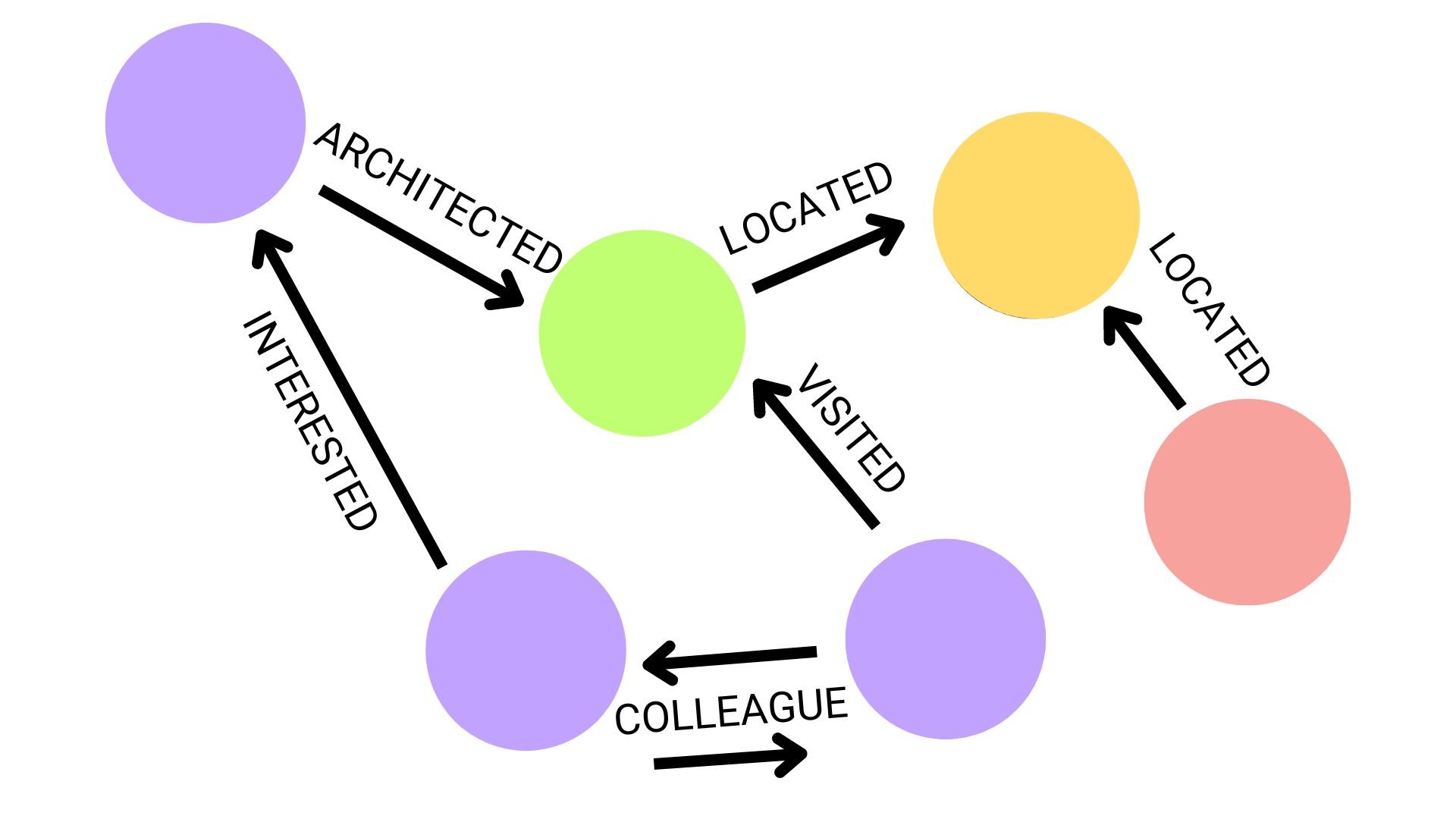
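A limitation often cited for vector RAG is that every chunk is scored against the query on its own. An answer that depends on a link between chunks (a "multi-hop" question) can therefore be missed. The minimal sketch below uses hand-made 3-dimensional vectors as hypothetical stand-ins for real embeddings. For a question like "Where is the company that built BERT headquartered?", top-1 retrieval returns the BERT chunk, while the chunk holding the headquarters fact ranks lower.

```python
import math

# Hypothetical toy "embeddings"; real ones come from an embedding model
chunks = {
    "LLMs are trained on large text corpora.": [0.9, 0.1, 0.0],
    "BERT was developed by Google.": [0.2, 0.8, 0.1],
    "Google is headquartered in Mountain View.": [0.1, 0.3, 0.9],
}

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query_vec, k=1):
    # Each chunk is scored independently; links between chunks are ignored
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, chunks[c]), reverse=True)
    return ranked[:k]

# Hypothetical embedding of "Where is the company that built BERT headquartered?"
query = [0.2, 0.8, 0.2]
print(top_k(query, k=1))
```

Raising `k` would eventually pull in the headquarters chunk, but nothing in the ranking itself connects "BERT" to "Google" to "Mountain View".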

Graph databases

Graph databases - nodes

Graph databases - edges
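In a property graph, nodes are entities with a label and optional properties, and edges are typed, directed relationships between two nodes. A minimal sketch in plain Python follows. The `Node`/`Edge` dataclasses, the `DEVELOPED` relationship type, and the `neighbors` helper are hypothetical illustrations, not a database API.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    id: str
    label: str                                   # node type, e.g. "Organization"
    properties: dict = field(default_factory=dict)

@dataclass
class Edge:
    source: str                                  # id of the start node
    target: str                                  # id of the end node
    type: str                                    # relationship type
    properties: dict = field(default_factory=dict)

nodes = {
    "Google": Node("Google", "Organization"),
    "BERT": Node("BERT", "Computational model", {"year": 2018}),
}
edges = [Edge("Google", "BERT", "DEVELOPED")]

def neighbors(node_id):
    """Return (relationship type, target node) pairs for outgoing edges."""
    return [(e.type, nodes[e.target]) for e in edges if e.source == node_id]

for rel, node in neighbors("Google"):
    print(f"Google -[{rel}]-> {node.id} ({node.label})")
```

Because edges are stored explicitly, answering "what did Google develop?" is a lookup along an edge rather than a similarity search.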

Neo4j graph databases

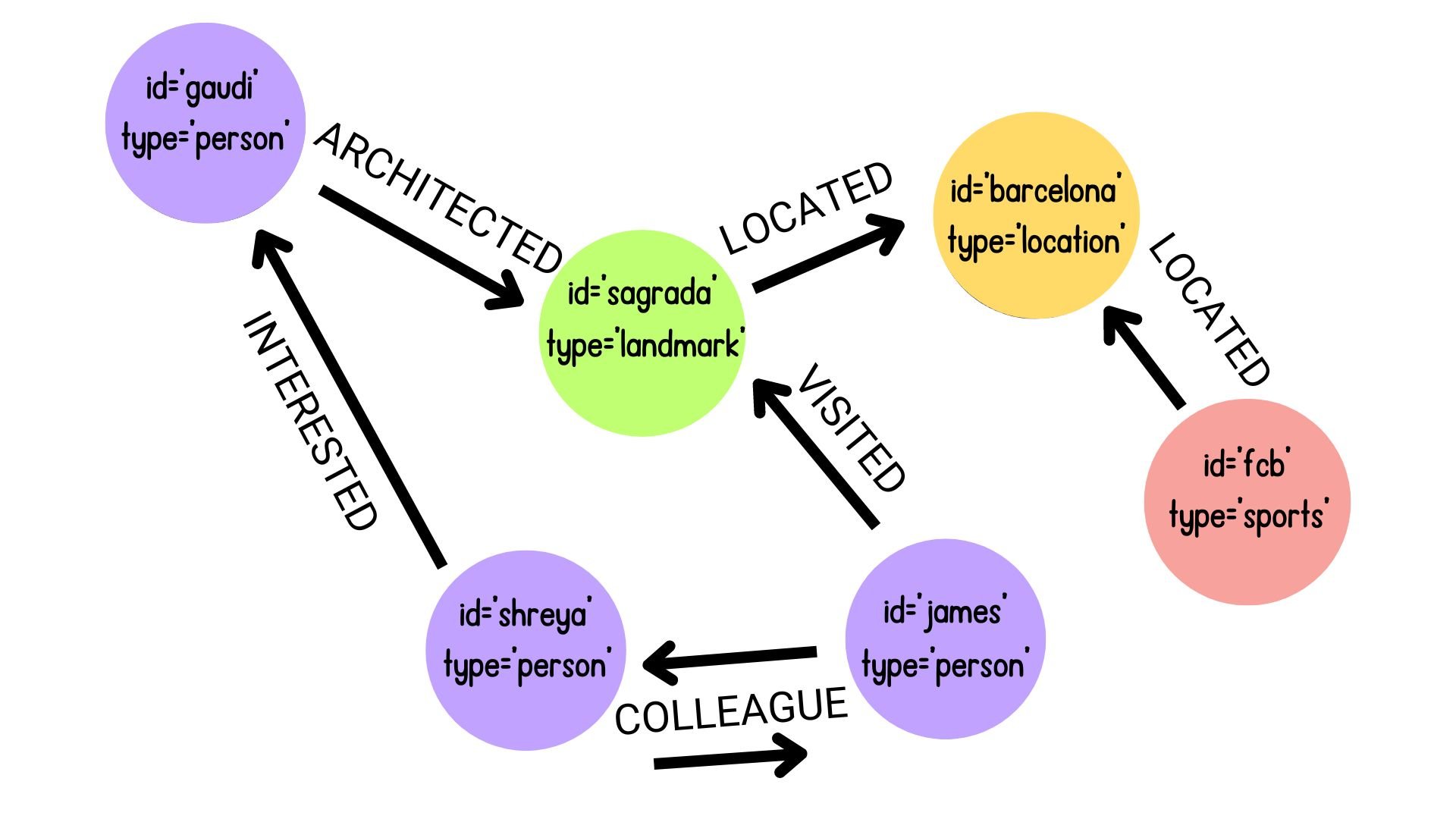
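Neo4j is queried with Cypher, where `MERGE` creates a node or relationship only if a matching one does not already exist. The sketch below builds parameterized Cypher statements for the kind of nodes and relationships extracted later in this section. The helper names are hypothetical, and the code only produces query strings; it does not connect to a database. Labels containing spaces, such as `Computational model`, must be backtick-quoted.

```python
def quote_label(label):
    # Backtick-quote a label or relationship type; a literal backtick is doubled
    return "`" + label.replace("`", "``") + "`"

def node_merge(node_id, label):
    """Cypher + parameters that create the node only if it is missing."""
    query = f"MERGE (n:{quote_label(label)} {{id: $id}})"
    return query, {"id": node_id}

def edge_merge(source_id, target_id, rel_type):
    """Cypher + parameters that connect two existing nodes by id."""
    query = (
        "MATCH (a {id: $source}), (b {id: $target}) "
        f"MERGE (a)-[:{quote_label(rel_type)}]->(b)"
    )
    return query, {"source": source_id, "target": target_id}

print(node_merge("Llm", "Computational model"))
print(edge_merge("Llm", "Language Generation", "CAPABLE_OF"))
```

In practice each query and its parameters would be sent through a Neo4j driver session; LangChain's `Neo4jGraph` class also offers an `add_graph_documents` method for loading graph documents directly. The unlabeled `MATCH` keeps the sketch short but cannot use a label index, so a real loader would match on labels.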

From graphics to graphs...

Loading and chunking Wikipedia pages

```python
from langchain_community.document_loaders import WikipediaLoader
from langchain_text_splitters import TokenTextSplitter

# Fetch Wikipedia pages matching the query as LangChain Documents
raw_documents = WikipediaLoader(query="large language model").load()

# Split into chunks of 100 tokens, with 20 tokens shared between neighboring chunks
text_splitter = TokenTextSplitter(chunk_size=100, chunk_overlap=20)
documents = text_splitter.split_documents(raw_documents[:3])
print(documents[0])
```

```
page_content='A large language model (LLM) is a computational model capable of...'
metadata={'title': 'Large language model',
          'summary': "A large language model (LLM) is...",
          'source': 'https://en.wikipedia.org/wiki/Large_language_model'}
```
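`TokenTextSplitter` measures chunks in tokens (it tokenizes with `tiktoken`) rather than characters, and consecutive chunks share `chunk_overlap` tokens. With `chunk_size=100` and `chunk_overlap=20`, each chunk starts 80 tokens after the previous one. The sliding-window idea can be sketched in plain Python, using integers as hypothetical stand-in token IDs; this illustrates the technique and is not the library's implementation.

```python
def split_with_overlap(tokens, chunk_size, chunk_overlap):
    """Slide a window of chunk_size tokens, advancing by chunk_size - chunk_overlap."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this window already reaches the last token
    return chunks

for chunk in split_with_overlap(list(range(10)), chunk_size=4, chunk_overlap=1):
    print(chunk)
# [0, 1, 2, 3]
# [3, 4, 5, 6]
# [6, 7, 8, 9]
```

The overlap means a sentence cut at a chunk boundary still appears whole in at least one neighboring chunk, at the cost of some duplicated tokens.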

From text to graphs!

```python
from langchain_openai import ChatOpenAI
from langchain_experimental.graph_transformers import LLMGraphTransformer

# temperature=0 reduces randomness in the extracted graph
llm = ChatOpenAI(api_key="...", temperature=0, model_name="gpt-4o-mini")

# Use the LLM to extract nodes and relationships from each document chunk
llm_transformer = LLMGraphTransformer(llm=llm)
graph_documents = llm_transformer.convert_to_graph_documents(documents)
print(graph_documents)
```

```
[GraphDocument(
    nodes=[
        Node(id='Llm', type='Computational model'),
        Node(id='Language Generation', type='Concept'),
        Node(id='Natural Language Processing Tasks', type='Concept'),
        Node(id='Llama Family', type='Computational model'),
        Node(id='Ibm', type='Organization'),
        ..., Node(id='Bert', type='Computational model')],
    relationships=[
        Relationship(source=Node(id='Llm', type='Computational model'),
                     target=Node(id='Language Generation', type='Concept'),
                     type='CAPABLE_OF'),
        ...])]
```
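Each extracted relationship is effectively a (source, type, target) triple, which is what lets graph retrieval follow connections that chunk-level similarity search misses. The minimal sketch below uses a hypothetical `facts_within` helper to collect every fact reachable from an entity within a given number of hops; those facts could then be passed to an LLM as context. The first triple mirrors the relationship printed above; the second is a hypothetical example.

```python
from collections import deque

# (source, type, target) triples. With the real output they could be built as:
# [(r.source.id, r.type, r.target.id) for d in graph_documents for r in d.relationships]
triples = [
    ("Llm", "CAPABLE_OF", "Language Generation"),
    ("Language Generation", "PART_OF", "Natural Language Processing Tasks"),  # hypothetical
]

def facts_within(start, hops):
    """Breadth-first walk over outgoing edges, collecting facts up to `hops` steps away."""
    seen, facts = {start}, []
    frontier = deque([(start, 0)])
    while frontier:
        node, depth = frontier.popleft()
        if depth == hops:
            continue  # do not expand beyond the hop limit
        for src, rel, dst in triples:
            if src == node:
                facts.append(f"{src} {rel} {dst}")
                if dst not in seen:
                    seen.add(dst)
                    frontier.append((dst, depth + 1))
    return facts

print(facts_within("Llm", hops=1))
print(facts_within("Llm", hops=2))
```

The hop limit controls how far the context spreads: one hop returns only direct facts about the entity, two hops also pulls in facts about its neighbors.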

Let's practice!