Ingesting Data

Introduction to Databricks SQL

Kevin Barlow

Data Manager

Motivation

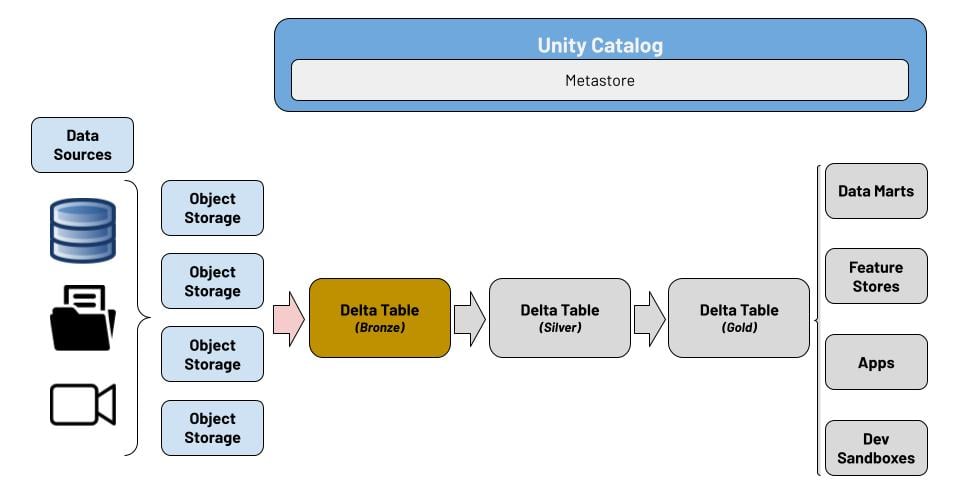

Creating the lakehouse

GUI-based options

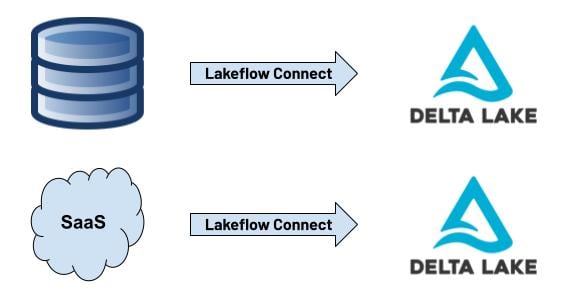

Lakeflow Connect

- Built-in connectors for ingesting data from:
  - Databases
  - SaaS applications
- Creates pipelines to keep data up to date

Data upload

- Manually upload your files
  - CSV, Parquet, etc.
- Quickly create new Delta tables
- Great for ad hoc data uploads

Bringing data into the lakehouse

COPY INTO

- Copies data from cloud object storage directly into Delta tables
- Better suited to more static datasets
- Runs natively in the SQL Editor

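```sql
-- Load Parquet files from cloud object storage into the Delta table my_table
COPY INTO my_table
FROM '/path/to/files'
FILEFORMAT = PARQUET
FORMAT_OPTIONS ('mergeSchema' = 'true')  -- merge the schemas of the source files
COPY_OPTIONS ('mergeSchema' = 'true');   -- allow the target table's schema to evolve
```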

Auto Loader

- Automatically ingests new data files from cloud storage
- Better suited to larger and changing datasets
- Used from SQL through Delta Live Tables pipelines

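```sql
-- Delta Live Tables SQL: incrementally ingest CSV files with Auto Loader
CREATE OR REFRESH STREAMING TABLE customers
AS SELECT *
FROM cloud_files("/path/to/files", "csv");
```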
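Both COPY INTO and Auto Loader skip files they have already loaded, so rerunning an ingestion only picks up new files. The toy Python sketch below illustrates that idea only; it is not Databricks code. The function `ingest_new_files`, the `loaded` set (a hypothetical stand-in for the ingestion state Databricks tracks for you), and the in-memory `table` list are all assumptions made for illustration.

```python
from pathlib import Path
import tempfile


def ingest_new_files(source_dir: Path, loaded: set[str], table: list[str]) -> list[str]:
    """Toy incremental load: append rows from CSV files not yet in `loaded`.

    `loaded` is a hypothetical stand-in for the ingestion state;
    `table` is an in-memory list standing in for the target table.
    Returns the names of the files ingested on this run.
    """
    newly_loaded = []
    for path in sorted(source_dir.glob("*.csv")):
        if path.name in loaded:
            continue  # ingested on an earlier run, so skip it
        rows = path.read_text().splitlines()[1:]  # drop the header row
        table.extend(rows)
        loaded.add(path.name)
        newly_loaded.append(path.name)
    return newly_loaded


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp)
        loaded, table = set(), []
        (src / "day1.csv").write_text("id,name\n1,Ana\n2,Ben\n")
        print(ingest_new_files(src, loaded, table))  # ['day1.csv']
        (src / "day2.csv").write_text("id,name\n3,Cy\n")
        print(ingest_new_files(src, loaded, table))  # ['day2.csv']
        print(ingest_new_files(src, loaded, table))  # [] (nothing new)
        print(table)  # ['1,Ana', '2,Ben', '3,Cy']
```

A real pipeline keeps this state durably (Auto Loader stores it in its checkpoint location), which is why rerunning after a failure does not duplicate rows.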

Let's practice!
