Tensors, layers and autoencoders

Introduction to Deep Learning with Keras

Miguel Esteban

Data Scientist & Founder

Accessing Keras layers

# Accessing the first layer of a Keras model
first_layer = model.layers[0]
# Printing the layer's input, output and weights
print(first_layer.input)
print(first_layer.output)
print(first_layer.weights)

<tf.Tensor 'dense_1_input:0' shape=(?, 3) dtype=float32>

<tf.Tensor 'dense_1/Relu:0' shape=(?, 2) dtype=float32>

[<tf.Variable 'dense_1/kernel:0' shape=(3, 2) dtype=float32_ref>,

<tf.Variable 'dense_1/bias:0' shape=(2,) dtype=float32_ref>]
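In the shapes above, the `?` is the batch dimension, which stays undefined until data is passed in. The kernel and bias shapes follow from the layer's 3 inputs and 2 neurons: every input connects to every neuron through one kernel entry, and each neuron has one bias. The short plain-Python sketch below counts the layer's trainable parameters. The shapes are copied by hand from the printed output and are not read from a live model.

```python
import math

# Shapes of the first layer's weights as printed above:
# a (3, 2) kernel and a (2,) bias
weight_shapes = [(3, 2), (2,)]

# Each weight tensor holds prod(shape) trainable numbers
n_params = sum(math.prod(shape) for shape in weight_shapes)
print(n_params)  # 3*2 + 2 = 8
```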

What are tensors?

# Defining a rank 2 tensor (2 dimensions)
T2 = [[1,2,3],
      [4,5,6],
      [7,8,9]]
# Defining a rank 3 tensor (3 dimensions)
T3 = [[[1,2,3], [4,5,6], [7,8,9]],
      [[10,11,12], [13,14,15], [16,17,18]],
      [[19,20,21], [22,23,24], [25,26,27]]]
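A tensor's rank is the number of nested levels (axes), not the number of elements. The sketch below converts the lists to NumPy arrays, which is an assumption here because the slide uses plain Python lists. NumPy's `ndim` and `shape` then make the rank easy to check:

```python
import numpy as np

# A rank 2 tensor: a 3 x 3 matrix
T2 = np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

# A rank 3 tensor: three stacked 3 x 3 matrices
T3 = np.array([[[1, 2, 3], [4, 5, 6], [7, 8, 9]],
               [[10, 11, 12], [13, 14, 15], [16, 17, 18]],
               [[19, 20, 21], [22, 23, 24], [25, 26, 27]]])

# ndim gives the rank (number of axes); shape gives the size of each axis
print(T2.ndim, T2.shape)  # 2 (3, 3)
print(T3.ndim, T3.shape)  # 3 (3, 3, 3)

# Flattening the outer level into 9 rows of 3 gives a rank 2 tensor again
print(T3.reshape(9, 3).ndim)  # 2
```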

# Import Keras backend
import tensorflow.keras.backend as K
# Get the input and output tensors of a model layer
inp = model.layers[0].input
out = model.layers[0].output
# Function that maps layer inputs to outputs
inp_to_out = K.function([inp], [out])
# We pass an input and get the output we'd get in that first layer
print(inp_to_out([X_train]))

# Outputs of the first layer per sample in X_train

[array([[0.7, 0],...,[0.1, 0.3]])]
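For the first layer, `inp_to_out` returns the Dense computation `relu(X @ kernel + bias)` applied to every row of the input at once. The NumPy sketch below uses made-up weights and data in place of the trained model and `X_train`. In Keras, the real weights would come from `model.layers[0].get_weights()`, which returns `[kernel, bias]`.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical weights with the same shapes as the first layer above
kernel = rng.normal(size=(3, 2))
bias = np.zeros(2)

# A made-up batch of 5 samples with 3 features each, standing in for X_train
X = rng.normal(size=(5, 3))

# Dense layer with ReLU: one row of 2 outputs per sample
out = np.maximum(X @ kernel + bias, 0.0)
print(out.shape)  # (5, 2)
```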

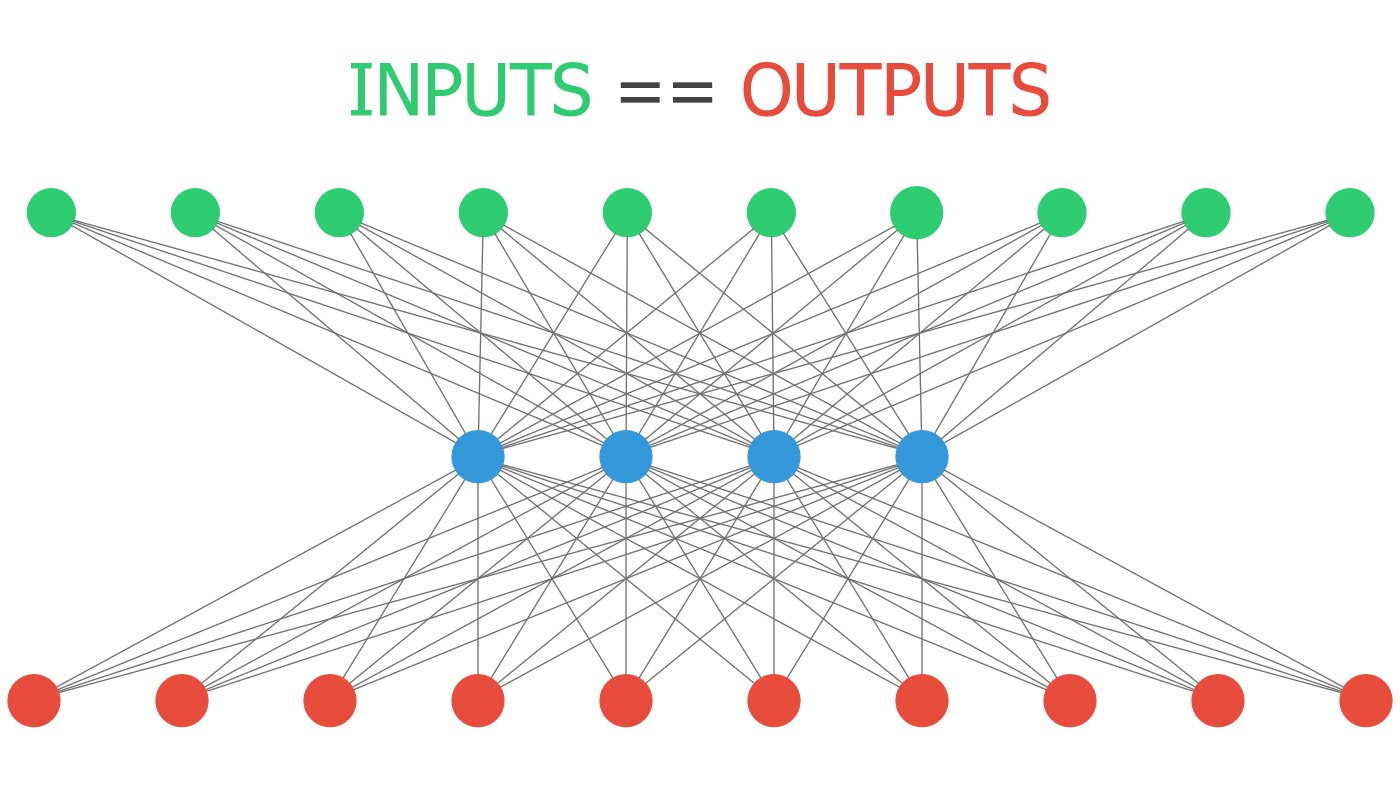

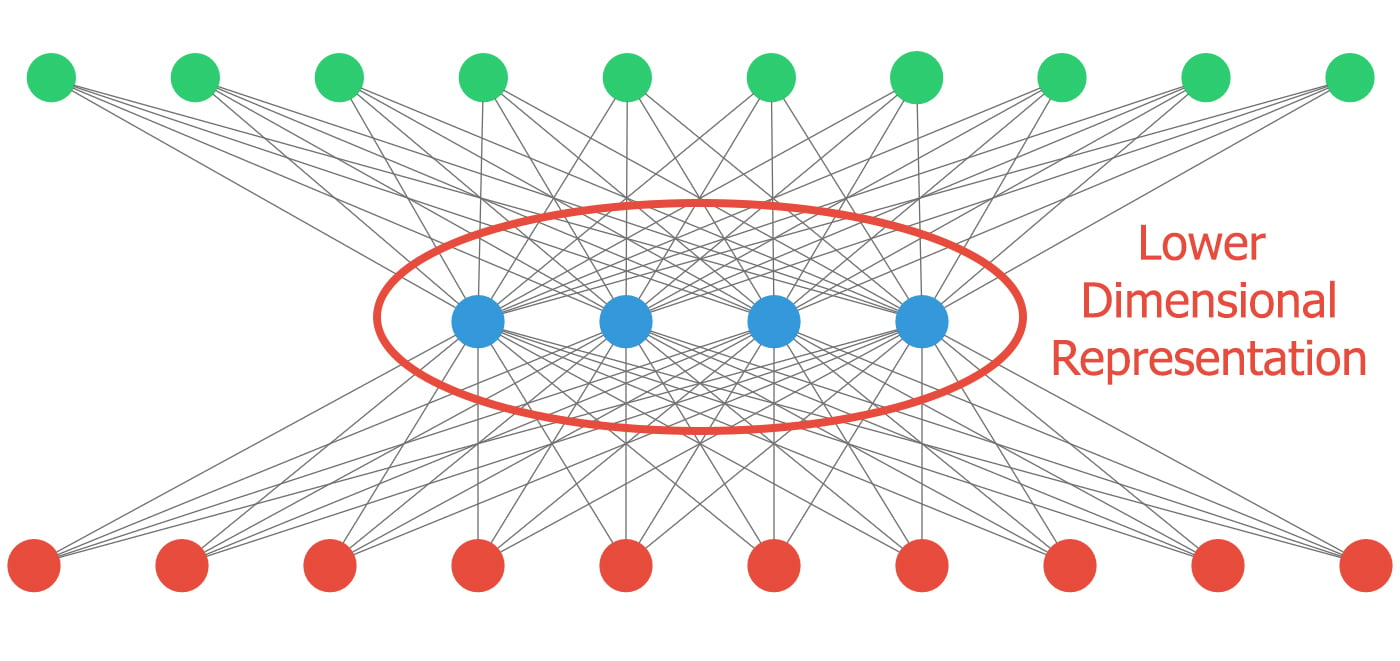

Autoencoders!

Autoencoder use cases

- Dimensionality reduction:
  - A lower-dimensional representation of our inputs.
- De-noising data:
  - If trained with clean data, irrelevant noise will be filtered out during reconstruction.
- Anomaly detection:
  - A poor reconstruction results when the model is fed inputs unlike those it was trained on.

- ...
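In practice, anomaly detection usually works by thresholding the reconstruction error. The NumPy sketch below uses made-up inputs and reconstructions. Measuring error as per-sample mean squared error and using a fixed threshold of 0.05 are both assumptions for illustration; neither is prescribed by the autoencoder itself.

```python
import numpy as np

def reconstruction_errors(X, X_hat):
    """Mean squared error between each input row and its reconstruction."""
    return np.mean((X - X_hat) ** 2, axis=1)

# Made-up inputs and reconstructions: the last sample is reconstructed poorly
X = np.array([[0.1, 0.9, 0.5],
              [0.2, 0.8, 0.4],
              [0.9, 0.1, 0.7]])
X_hat = np.array([[0.12, 0.88, 0.5],
                  [0.2, 0.79, 0.41],
                  [0.3, 0.6, 0.2]])

errors = reconstruction_errors(X, X_hat)

# Hypothetical threshold; it could instead be picked from the errors on
# normal validation data (e.g., a high percentile)
threshold = 0.05
is_anomaly = errors > threshold
print(is_anomaly)  # flags only the last sample
```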

Building a simple autoencoder

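# Import the model and layer classes
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense
# Instantiate a sequential model
autoencoder = Sequential()
# Add a hidden layer of 4 neurons and an input layer of 100
autoencoder.add(Dense(4, input_shape=(100,), activation='relu'))
# Add an output layer of 100 neurons
autoencoder.add(Dense(100, activation='sigmoid'))
# Compile your model with the appropriate loss
# (binary cross-entropy suits sigmoid outputs and inputs scaled to [0, 1])
autoencoder.compile(optimizer='adam', loss='binary_crossentropy')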
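The sigmoid output layer produces values in (0, 1), so binary cross-entropy is a natural reconstruction loss when the inputs are also scaled to [0, 1] (for example, pixel intensities divided by 255). The NumPy re-implementation below shows how the loss separates a close reconstruction from a poor one. It clips predictions to avoid `log(0)` in the same spirit as Keras, but it is an illustration, not Keras's exact code.

```python
import numpy as np

def binary_crossentropy(x, x_hat, eps=1e-7):
    """Per-sample binary cross-entropy, averaged over features."""
    x_hat = np.clip(x_hat, eps, 1 - eps)  # avoid log(0)
    return -np.mean(x * np.log(x_hat) + (1 - x) * np.log(1 - x_hat), axis=-1)

x = np.array([[0.0, 1.0, 1.0, 0.0]])       # inputs scaled to [0, 1]
good = np.array([[0.05, 0.95, 0.9, 0.1]])  # close reconstruction
bad = np.array([[0.6, 0.3, 0.5, 0.7]])     # poor reconstruction

# The poor reconstruction gets a much larger loss
print(binary_crossentropy(x, good), binary_crossentropy(x, bad))
```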

Breaking it into an encoder

# Building a separate model to encode inputs
encoder = Sequential()
encoder.add(autoencoder.layers[0])
# Predicting returns the four hidden layer neuron outputs
encoder.predict(X_test)

# Four numbers for each observation in X_test

array([[10.0234375, 5.833543, 18.90444, 9.20348], ...])
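The autoencoder is just its two layers applied in sequence. Splitting it therefore loses nothing: an encoder made of the first layer and a decoder made of the second (a hypothetical counterpart, not on the slide) compose back to the full model. The NumPy sketch below checks this with random weights in the 100 → 4 → 100 shapes and made-up data standing in for `X_test`.

```python
import numpy as np

rng = np.random.default_rng(42)

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical weights with the autoencoder's shapes: 100 -> 4 -> 100
W_enc, b_enc = rng.normal(scale=0.1, size=(100, 4)), np.zeros(4)
W_dec, b_dec = rng.normal(scale=0.1, size=(4, 100)), np.zeros(100)

def encode(X):
    """First layer only: the 4-number code for each sample."""
    return relu(X @ W_enc + b_enc)

def decode(code):
    """Second layer only: maps a 4-number code back to 100 values."""
    return sigmoid(code @ W_dec + b_dec)

def autoencoder_forward(X):
    """Both layers in one pass."""
    return sigmoid(relu(X @ W_enc + b_enc) @ W_dec + b_dec)

X_test = rng.uniform(size=(10, 100))  # made-up data in [0, 1]
codes = encode(X_test)
print(codes.shape)  # (10, 4)
print(np.allclose(decode(codes), autoencoder_forward(X_test)))  # True
```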

Let's experiment!
