Gradient boosted trees with XGBoost

Credit Risk Modeling in Python

Michael Crabtree

Data Scientist, Ford Motor Company

Decision trees

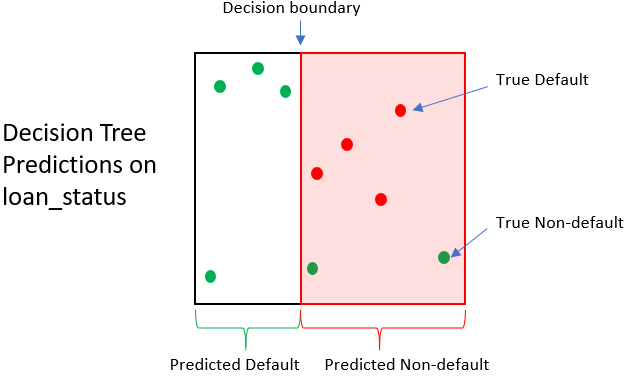

- Creates predictions similar to logistic regression

- Not structured like a regression

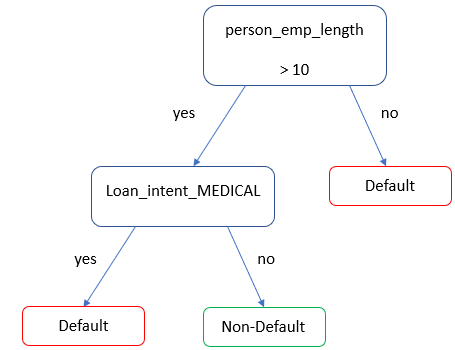

Decision trees for loan status

- Simple decision tree for predicting `loan_status` probability of default
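As a rough illustration of how a tree differs from a regression, a small tree can be written as nested `if` statements, with each leaf holding a probability of default. The feature names (`loan_int_rate`, `loan_percent_income`), the split thresholds, and the leaf probabilities below are hypothetical and chosen only for illustration; they are not taken from a fitted model.

```python
def tree_predict_proba(loan_int_rate, loan_percent_income):
    """Return a probability of default from a hand-written, hypothetical tree."""
    # Root split: hypothetical threshold on the interest rate (percent)
    if loan_int_rate < 12.0:
        # Low-rate branch: split on the share of income the loan consumes
        if loan_percent_income < 0.30:
            return 0.05
        return 0.35
    # High-rate branch: same second split, different leaf values
    if loan_percent_income < 0.30:
        return 0.40
    return 0.80


def tree_predict(loan_int_rate, loan_percent_income, threshold=0.5):
    """Turn the leaf probability into a loan_status of 1 (default) or 0."""
    proba = tree_predict_proba(loan_int_rate, loan_percent_income)
    return 1 if proba > threshold else 0


# Low rate, small loan relative to income
print(tree_predict_proba(9.5, 0.10))
# High rate, large loan relative to income
print(tree_predict(15.0, 0.45))
```

Unlike a logistic regression, there are no coefficients here: the prediction depends only on which leaf a loan falls into.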

Decision tree impact

| Loan | True loan status | Predicted loan status | Loan payoff value | Selling Value | Gain/Loss |
|---|---|---|---|---|---|
| 1 | 0 | 1 | $1,500 | $250 | -$1,250 |
| 2 | 0 | 1 | $1,200 | $250 | -$950 |
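The Gain/Loss column follows from simple arithmetic: when a non-default loan (true status 0) is predicted to default (1), the lender presumably receives the selling value instead of the payoff value. A minimal sketch of that calculation, using the two rows from the table; the function name `gain_loss` is an assumption made for illustration.

```python
def gain_loss(payoff_value, selling_value):
    """Difference between what the loan was sold for and what it would have paid."""
    return selling_value - payoff_value


# (loan id, true loan status, predicted loan status, payoff value, selling value)
loans = [
    (1, 0, 1, 1500, 250),
    (2, 0, 1, 1200, 250),
]

results = {}
for loan_id, true_status, pred_status, payoff, selling in loans:
    # Only false positives (predicted default, actually repaid) are counted here
    if true_status == 0 and pred_status == 1:
        results[loan_id] = gain_loss(payoff, selling)

total = sum(results.values())
print(results)  # {1: -1250, 2: -950}
print(total)    # -2200
```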

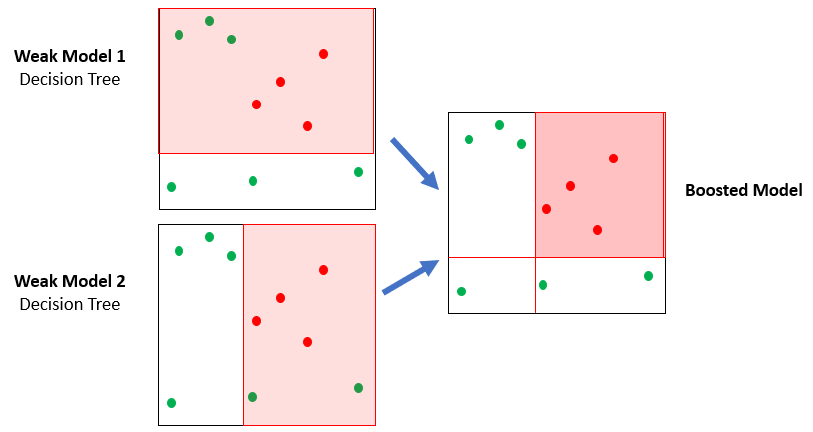

A forest of trees

- XGBoost combines many simple trees into an ensemble

- Each tree on its own is only slightly better than a coin toss
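To get a feel for why many weak trees can be useful together, consider a simplified model in which each tree is independently correct with probability 0.55 and the ensemble takes a majority vote. This is an assumption made for illustration only: gradient boosting builds trees sequentially, each one correcting the errors of the trees before it, rather than taking an independent vote. The sketch below computes the exact probability that the majority is right.

```python
from math import comb


def majority_vote_accuracy(n_trees, p_correct):
    """Probability that more than half of n independent voters are correct."""
    needed = n_trees // 2 + 1  # smallest strict majority
    return sum(
        comb(n_trees, k) * p_correct**k * (1 - p_correct) ** (n_trees - k)
        for k in range(needed, n_trees + 1)
    )


# Odd ensemble sizes avoid tied votes
for n in (1, 11, 101):
    print(n, round(majority_vote_accuracy(n, 0.55), 3))
```

Under these assumptions the accuracy of the vote rises as trees are added, even though no single tree improves.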

Creating and training trees

- Part of the `xgboost` Python package, called `xgb` here

- Trains with `.fit()` just like the logistic regression model

# Import the packages used below

import numpy as np

import xgboost as xgb

from sklearn.linear_model import LogisticRegression

# Create a logistic regression model

clf_logistic = LogisticRegression()

# Train the logistic regression

clf_logistic.fit(X_train, np.ravel(y_train))

# Create a gradient boosted tree model

clf_gbt = xgb.XGBClassifier()

# Train the gradient boosted tree

clf_gbt.fit(X_train, np.ravel(y_train))

Default predictions with XGBoost

- Predicts with both `.predict()` and `.predict_proba()`

  - `.predict_proba()` produces a value between `0` and `1`

  - `.predict()` produces a `1` or `0` for `loan_status`

# Predict probabilities of default

gbt_preds_prob = clf_gbt.predict_proba(X_test)

# Predict loan_status as a 1 or 0

gbt_preds = clf_gbt.predict(X_test)

# gbt_preds_prob

array([[0.059, 0.940], [0.121, 0.989]])

# gbt_preds

array([1, 1, 0...])
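For a binary target, each row of the `.predict_proba()` output holds the probability of class 0 (non-default) followed by the probability of class 1 (default), and the label from `.predict()` generally corresponds to thresholding the second column at 0.5. A minimal sketch of that relationship in plain Python, using hypothetical probabilities rather than real model output; the `threshold` parameter and the function name `to_loan_status` are assumptions added to show how a different cutoff could be applied.

```python
# Hypothetical rows of [P(non-default), P(default)], not real model output
preds_prob = [
    [0.06, 0.94],
    [0.45, 0.55],
    [0.88, 0.12],
]


def to_loan_status(prob_rows, threshold=0.5):
    """Label a loan 1 (default) when its probability of default exceeds the threshold."""
    return [1 if row[1] > threshold else 0 for row in prob_rows]


print(to_loan_status(preds_prob))                 # [1, 1, 0]
print(to_loan_status(preds_prob, threshold=0.6))  # [1, 0, 0]
```

Keeping the probabilities, instead of only the labels, leaves room to choose a cutoff other than 0.5 later.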

Hyperparameters of gradient boosted trees

- Hyperparameters: model parameters (settings) that cannot be learned from data

- Some common hyperparameters for gradient boosted trees

  - `learning_rate`: smaller values make each step more conservative

  - `max_depth`: sets how deep each tree can go, larger means more complex

xgb.XGBClassifier(learning_rate=0.2, max_depth=4)
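One way to see what "more conservative" means: in boosting, each new tree's output is scaled by `learning_rate` before being added to the running prediction. The toy below is a deliberate simplification, not XGBoost's algorithm. It assumes every "tree" predicts the current residual perfectly for a single target value, so that only the effect of the learning rate remains; the function name `boosted_predictions` is hypothetical.

```python
def boosted_predictions(target, learning_rate, n_rounds, start=0.0):
    """Track the running prediction when each round adds learning_rate * residual."""
    prediction = start
    history = [prediction]
    for _ in range(n_rounds):
        residual = target - prediction          # what an idealized tree would fit
        prediction += learning_rate * residual  # shrink the step before adding it
        history.append(prediction)
    return history


slow = boosted_predictions(target=1.0, learning_rate=0.2, n_rounds=3)
fast = boosted_predictions(target=1.0, learning_rate=0.8, n_rounds=3)
print([round(p, 3) for p in slow])  # [0.0, 0.2, 0.36, 0.488]
print([round(p, 3) for p in fast])  # [0.0, 0.8, 0.96, 0.992]
```

With the smaller learning rate each round closes less of the gap, so more rounds are needed to reach the same fit.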

Let's practice!
