Class imbalance in loan data

Credit Risk Modeling in Python

Michael Crabtree

Data Scientist, Ford Motor Company

Not enough defaults in the data

- The values of loan_status are the classes

  - Non-default: 0

  - Default: 1

y_train['loan_status'].value_counts()

| loan_status | Training Data Count | Percentage of Total |

|---|---|---|

| 0 | 13,798 | 78% |

| 1 | 3,877 | 22% |

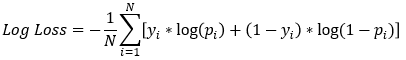
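The counts and percentages in the table can be reproduced with `value_counts()`. This is a minimal sketch, assuming a hypothetical stand-in for `y_train` built here from the table's counts; the real frame would come from the course's train/test split.

```python
import pandas as pd

# Hypothetical stand-in for y_train, built from the class counts in the table
y_train = pd.DataFrame({'loan_status': [0] * 13798 + [1] * 3877})

# Absolute number of loans in each class
counts = y_train['loan_status'].value_counts()

# Share of each class; normalize=True returns fractions that sum to 1
shares = y_train['loan_status'].value_counts(normalize=True)

# Combine both views into one summary table
summary = pd.DataFrame({'count': counts, 'percent': (shares * 100).round(1)})
print(summary)
```

Roughly one loan in five is a default, which is the imbalance the rest of this section deals with.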

Model loss function

- Gradient Boosted Trees in xgboost use a loss function of log-loss

- The goal is to minimize this value

| True loan status | Predicted probability of default | Log Loss |

|---|---|---|

| 1 | 0.1 | 2.3 |

| 0 | 0.9 | 2.3 |

- An inaccurately predicted default has a more negative financial impact than an inaccurately predicted non-default
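The log-loss values in the table follow from the per-observation formula -[y·ln(p) + (1 - y)·ln(1 - p)], where p is read here as the predicted probability of default (an assumption about the table's second column). A minimal sketch using only the standard library; the helper name `single_log_loss` is made up for illustration and is not part of xgboost.

```python
import math

def single_log_loss(y_true, p_default):
    """Log-loss for one loan; p_default is the predicted probability of default."""
    return -(y_true * math.log(p_default) + (1 - y_true) * math.log(1 - p_default))

# A true default that the model gave only a 10% chance of defaulting
missed_default = single_log_loss(1, 0.1)

# A true non-default that the model gave a 90% chance of defaulting
false_alarm = single_log_loss(0, 0.9)

print(round(missed_default, 1), round(false_alarm, 1))
```

Both mistakes are equally far from the truth, so the loss function scores them the same.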

The cost of imbalance

- A false negative (default predicted as non-default) is much more costly

| Person | Loan Amount | Potential Profit | Predicted Status | Actual Status | Losses |

|---|---|---|---|---|---|

| A | $1,000 | $10 | Default | Non-Default | -$10 |

| B | $1,000 | $10 | Non-Default | Default | -$1,000 |

- Log-loss for the model is the same for both, but our actual losses are not
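To make the asymmetry concrete, the two rows of the table can be tallied directly. This is a small sketch with the table's figures hard-coded. The helper `financial_loss` is hypothetical, and it encodes the simplification used on this slide: a missed default loses the full loan amount, while a wrongly rejected loan loses only the potential profit.

```python
# Each tuple: (person, loan_amount, potential_profit, predicted, actual)
loans = [
    ('A', 1000, 10, 'Default', 'Non-Default'),
    ('B', 1000, 10, 'Non-Default', 'Default'),
]

def financial_loss(loan_amount, potential_profit, predicted, actual):
    """Hypothetical cost rule taken from the slide's simplified example."""
    if predicted == actual:
        return 0                  # correct prediction, nothing lost
    if actual == 'Default':
        return loan_amount        # false negative: the loan is not repaid
    return potential_profit       # false positive: a good loan is turned away

losses = {
    person: financial_loss(amount, profit, predicted, actual)
    for person, amount, profit, predicted, actual in loans
}
print(losses)
```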

Causes of imbalance

- Data problems:

  - Credit data was not sampled correctly

  - Data storage problems

- Business processes:

  - Measures already in place to not accept probable defaults

  - Probable defaults are quickly sold to other firms

- Behavioral factors:

  - Normally, people do not default on their loans

  - The less often they default, the higher their credit rating

Dealing with class imbalance

- Several ways to deal with class imbalance in data

| Method | Pros | Cons |

|---|---|---|

| Gather more data | Increases number of defaults | Percentage of defaults may not change |

| Penalize models | Increases recall for defaults | Model requires more tuning and maintenance |

| Sample data differently | Least technical adjustment | Fewer defaults in data |

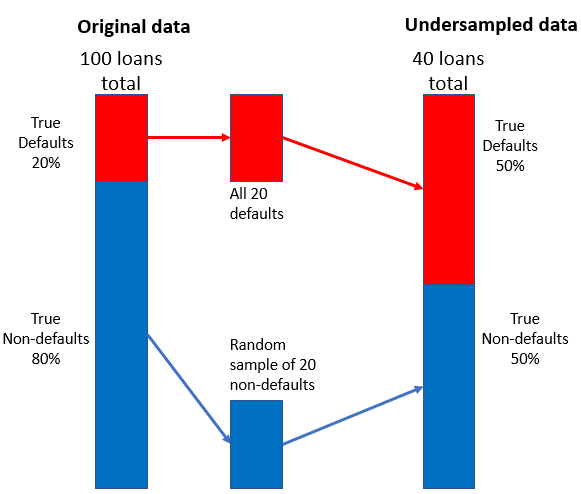
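One common way to penalize a model is to weight the minority class more heavily. In xgboost, the `scale_pos_weight` parameter of `XGBClassifier` serves this purpose, and a typical starting value is the ratio of negative to positive examples. The sketch below only computes that ratio from the training counts shown earlier. Whether this starting value suits a given portfolio is an assumption to check through tuning.

```python
# Class counts from the training data table
count_nondefault = 13798
count_default = 3877

# Ratio of non-defaults to defaults, a common starting point for the weight
scale_pos_weight = count_nondefault / count_default
print(round(scale_pos_weight, 2))

# The value could then be passed to the model, for example:
# xgb.XGBClassifier(scale_pos_weight=scale_pos_weight)
```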

Undersampling strategy

- Combine a smaller random sample of non-defaults with all of the defaults

Combining the split data sets

- The training features and labels (X_train and y_train) must be put back together

- Create two new sets based on actual loan_status

# Concat the training sets

X_y_train = pd.concat([X_train.reset_index(drop = True),

y_train.reset_index(drop = True)], axis = 1)

# Get the counts of defaults and non-defaults

count_nondefault, count_default = X_y_train['loan_status'].value_counts()

# Separate nondefaults and defaults

nondefaults = X_y_train[X_y_train['loan_status'] == 0]

defaults = X_y_train[X_y_train['loan_status'] == 1]

Undersampling the non-defaults

- Randomly sample the data set of non-defaults

- Concatenate with data set of defaults

# Undersample the non-defaults using sample() in pandas

nondefaults_under = nondefaults.sample(count_default)

# Concat the undersampled non-defaults with the defaults

X_y_train_under = pd.concat([nondefaults_under.reset_index(drop = True),

defaults.reset_index(drop = True)], axis=0)
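The steps above can be sketched end to end on a small synthetic data set. The column name `loan_amnt`, the sample sizes, and the `random_state` value are made up for illustration; only `loan_status` comes from the slides. The sketch also looks up the class counts by label instead of unpacking `value_counts()` by position, which avoids depending on which class happens to be larger.

```python
import pandas as pd

# Synthetic training data: 8 non-defaults (0) and 2 defaults (1)
X_train = pd.DataFrame({'loan_amnt': range(1000, 11000, 1000)})
y_train = pd.DataFrame({'loan_status': [0, 0, 0, 0, 1, 0, 0, 1, 0, 0]})

# Put features and labels back together, side by side
X_y_train = pd.concat([X_train.reset_index(drop=True),
                       y_train.reset_index(drop=True)], axis=1)

# Look up the counts by class label
counts = X_y_train['loan_status'].value_counts()
count_nondefault, count_default = counts.loc[0], counts.loc[1]

# Separate non-defaults and defaults
nondefaults = X_y_train[X_y_train['loan_status'] == 0]
defaults = X_y_train[X_y_train['loan_status'] == 1]

# Draw as many non-defaults as there are defaults (seeded for repeatability)
nondefaults_under = nondefaults.sample(count_default, random_state=0)

# Stack the two groups; ignore_index gives the result a clean 0..n-1 index
X_y_train_under = pd.concat([nondefaults_under, defaults],
                            axis=0, ignore_index=True)

print(X_y_train_under['loan_status'].value_counts())
```

After undersampling, both classes have the same number of rows, at the cost of discarding most of the non-default records.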

Let's practice!
