Evaluate models with Accelerator

Efficient AI Model Training with PyTorch

Dennis Lee

Data Engineer

Why put a model in evaluation mode?

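- Training mode enables layers that behave differently during training

  - Dropout: randomly sets a fraction of activations to zero

  - Batch normalization: normalizes activations using the current batch's statistics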

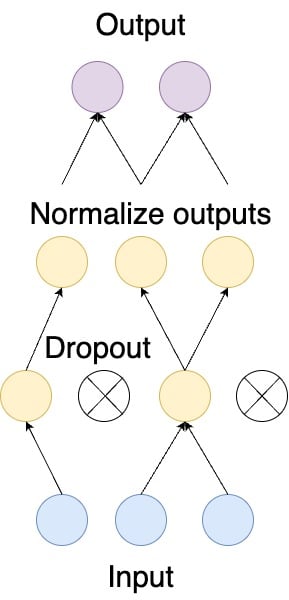

Dropout and batch normalization

- Evaluation mode turns dropout off and makes batch normalization use its stored running statistics

- model.eval() activates evaluation mode

Dropout and batch normalization

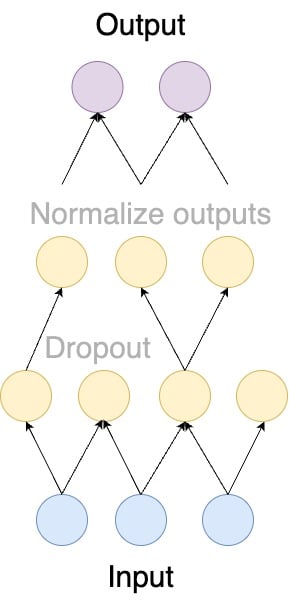

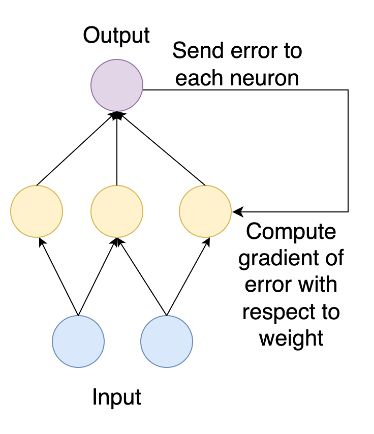
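The difference between the two modes can be checked on toy layers. The following is a minimal sketch, assuming only that PyTorch is installed; the layer sizes, the dropout probability, and the random inputs are illustrative choices, not values from the course.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Dropout: in training mode each element is zeroed with probability p,
# and the surviving elements are scaled by 1 / (1 - p).
dropout = nn.Dropout(p=0.5)
x = torch.ones(1000)

dropout.train()
train_out = dropout(x)
zero_fraction = (train_out == 0).float().mean().item()

dropout.eval()
eval_out = dropout(x)  # dropout is the identity in evaluation mode

# Batch normalization: training mode normalizes with the current batch's
# statistics and updates the running estimates; evaluation mode uses the
# stored running estimates and leaves them unchanged.
batch_norm = nn.BatchNorm1d(3)
batch = torch.randn(8, 3) * 5 + 10

batch_norm.train()
batch_norm(batch)
running_mean_after_train = batch_norm.running_mean.clone()

batch_norm.eval()
batch_norm(batch)
running_mean_after_eval = batch_norm.running_mean.clone()

print(f"fraction zeroed in training mode: {zero_fraction:.2f}")
print(f"dropout is identity in eval mode: {torch.equal(eval_out, x)}")
print("running mean unchanged in eval mode: "
      f"{torch.equal(running_mean_after_train, running_mean_after_eval)}")
```

Because dropout is random and batch normalization depends on the batch, leaving a model in training mode during evaluation would make the reported metrics noisy and batch-dependent.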

Disable gradients with torch.no_grad()

- Training requires gradient computation

- torch.no_grad() disables gradient computation

- Call both model.eval() and torch.no_grad():

    model.eval()
    with torch.no_grad():
        outputs = model(**inputs)

Computing gradients in backpropagation
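The two calls do different jobs, which is why both are needed. Here is a small sketch on a toy linear layer (the layer and input shapes are assumptions for illustration): model.eval() only switches layer behavior, while torch.no_grad() is what stops autograd from recording operations.

```python
import torch
from torch import nn

model = nn.Linear(4, 2)   # toy model, for illustration only
inputs = torch.randn(3, 4)

# model.eval() alone does not disable gradient tracking.
model.eval()
outputs_eval_only = model(inputs)

# Inside torch.no_grad(), no computation graph is built.
with torch.no_grad():
    outputs_no_grad = model(inputs)

print(outputs_eval_only.requires_grad)  # gradients are still tracked
print(outputs_no_grad.requires_grad)    # gradients are not tracked
print(outputs_no_grad.grad_fn)          # no graph node is attached
```

Skipping the graph saves memory and time during evaluation, since no backward pass will be run.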

Prepare a validation dataset

- Load the validation split of the MRPC dataset

    from datasets import load_dataset

    validation_dataset = load_dataset("glue", "mrpc", split="validation")

- Tokenize the validation dataset

    def encode(examples):
        # Tokenize sentence pairs; truncate and pad each pair to a fixed length
        return tokenizer(examples["sentence1"],
                         examples["sentence2"],
                         truncation=True,
                         padding="max_length")

    validation_dataset = validation_dataset.map(encode, batched=True)
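To make the effect of truncation and padding concrete, the sketch below uses a deliberately simplified stand-in for a tokenizer. Everything in it is hypothetical: `toy_encode`, `MAX_LENGTH`, `PAD_ID`, and the whitespace-based "token ids" are illustrative only and do not reflect how a real Hugging Face tokenizer assigns ids or truncates sentence pairs. It only shows the shape of a batched call: a dictionary of lists goes in, and fixed-length `input_ids` and `attention_mask` lists come out.

```python
MAX_LENGTH = 8  # hypothetical fixed length; real tokenizers use the model's limit
PAD_ID = 0      # hypothetical padding id


def toy_encode(examples):
    """Mimic the output shape of a batched tokenizer call (not a real tokenizer)."""
    input_ids, attention_mask = [], []
    for first, second in zip(examples["sentence1"], examples["sentence2"]):
        tokens = first.split() + ["[SEP]"] + second.split()
        # Stand-in ids: word length plus one, so no token collides with PAD_ID.
        ids = [len(token) + 1 for token in tokens][:MAX_LENGTH]  # truncation
        padding = MAX_LENGTH - len(ids)                          # pad to fixed length
        input_ids.append(ids + [PAD_ID] * padding)
        attention_mask.append([1] * len(ids) + [0] * padding)
    return {"input_ids": input_ids, "attention_mask": attention_mask}


# With batched=True, the mapped function receives columns as lists like this.
batch = {
    "sentence1": ["Hello there", "A very long sentence that keeps going on and on ."],
    "sentence2": ["Hi", "It is long ."],
}
encoded = toy_encode(batch)

for ids, mask in zip(encoded["input_ids"], encoded["attention_mask"]):
    print(ids, mask)
```

The short pair is padded and its attention mask marks the padding with zeros; the long pair is cut to the fixed length. Fixed-length rows are what allow examples to be stacked into tensors by a dataloader.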

Life of an epoch: training and evaluation loops

- For each epoch, iterate over the train and validation datasets

- First run the model in training mode

- Then run the model in evaluation mode and log metrics after evaluation

    for epoch in range(num_epochs):
        model.train()
        for step, batch in enumerate(train_dataloader):
            # Perform training step
            ...
        model.eval()
        for step, batch in enumerate(eval_dataloader):
            # Perform evaluation step
            ...
        # Log evaluation metrics
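The skeleton above can be filled in with a toy problem to see the mode switches in action. This is a minimal plain-PyTorch sketch without Accelerator; the synthetic data, the small network, the optimizer settings, and the `history` list are all assumptions made for illustration.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Synthetic binary classification data (illustrative only).
features = torch.randn(64, 4)
labels = (features.sum(dim=1) > 0).long()
train_dataloader = DataLoader(TensorDataset(features[:48], labels[:48]), batch_size=16)
eval_dataloader = DataLoader(TensorDataset(features[48:], labels[48:]), batch_size=16)

model = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Dropout(0.1), nn.Linear(8, 2))
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
loss_fn = nn.CrossEntropyLoss()

num_epochs = 3
history = []
for epoch in range(num_epochs):
    # Training phase: dropout active, gradients computed.
    model.train()
    for inputs, targets in train_dataloader:
        optimizer.zero_grad()
        loss = loss_fn(model(inputs), targets)
        loss.backward()
        optimizer.step()

    # Evaluation phase: dropout off, no gradients.
    model.eval()
    correct, total = 0, 0
    for inputs, targets in eval_dataloader:
        with torch.no_grad():
            predictions = model(inputs).argmax(dim=-1)
        correct += (predictions == targets).sum().item()
        total += targets.numel()

    # Record evaluation metrics once per epoch.
    history.append({"epoch": epoch, "accuracy": correct / total})

print(history)
```

Note that model.train() is called again at the start of every epoch, because the model is left in evaluation mode at the end of the previous one.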

Inside the evaluation loop

    import evaluate
    import torch

    metric = evaluate.load("glue", "mrpc")
    model.eval()
    for step, batch in enumerate(eval_dataloader):
        with torch.no_grad():
            outputs = model(**batch)
        # Pick the highest-scoring class for each example
        predictions = outputs.logits.argmax(dim=-1)
        # Collect predictions and labels from all processes
        predictions, references = accelerator.gather_for_metrics((predictions, batch["labels"]))
        metric.add_batch(predictions=predictions, references=references)
    eval_metric = metric.compute()
    print(f"Eval metrics: \n{eval_metric}")

Eval metrics:

{'accuracy': 0.81, 'f1': 0.77}
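To show what accumulating batches and then computing the metric amounts to, here is a hand-rolled sketch in plain PyTorch. It does not use the evaluate library or Accelerator; the logits and labels are made-up values, and F1 is computed for the positive class (label 1), which is assumed here to match the binary F1 reported for MRPC.

```python
import torch

# Two made-up batches of (logits, labels); values are illustrative only.
batches = [
    (torch.tensor([[0.1, 0.9], [0.8, 0.2], [0.3, 0.7]]), torch.tensor([1, 0, 0])),
    (torch.tensor([[0.2, 0.8], [0.6, 0.4], [0.1, 0.9]]), torch.tensor([1, 1, 1])),
]

all_predictions, all_references = [], []
for logits, labels in batches:
    # The predicted class is the index of the largest logit.
    all_predictions.append(logits.argmax(dim=-1))
    all_references.append(labels)

# Metrics are computed once, over everything accumulated.
predictions = torch.cat(all_predictions)
references = torch.cat(all_references)

accuracy = (predictions == references).float().mean().item()

true_positives = ((predictions == 1) & (references == 1)).sum().item()
false_positives = ((predictions == 1) & (references == 0)).sum().item()
false_negatives = ((predictions == 0) & (references == 1)).sum().item()
precision = true_positives / (true_positives + false_positives)
recall = true_positives / (true_positives + false_negatives)
f1 = 2 * precision * recall / (precision + recall)

print({"accuracy": round(accuracy, 2), "f1": round(f1, 2)})
```

Computing the metric over the accumulated predictions, rather than averaging per-batch scores, matters for F1 in particular, since F1 is not a simple mean over batches.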

Log metrics after evaluation

- Experiment tracking tools record metrics across training runs; examples are TensorBoard and MLflow

- log_with="all": use all available experiment tracking tools

- .init_trackers(): initialize tracking tools

- .log(): track accuracy, f1, and epoch

- .end_training(): finish tracking

    from accelerate import Accelerator

    accelerator = Accelerator(project_dir=".", log_with="all")
    accelerator.init_trackers("my_project")
    for epoch in range(num_epochs):
        # Training loop is here
        # Evaluation loop is here
        accelerator.log({"accuracy": eval_metric["accuracy"],
                         "f1": eval_metric["f1"]}, step=epoch)
    accelerator.end_training()

Let's practice!
