Preprocess images and audio for training

Efficient AI Model Training with PyTorch

Dennis Lee

Data Engineer

Preparing images and audio

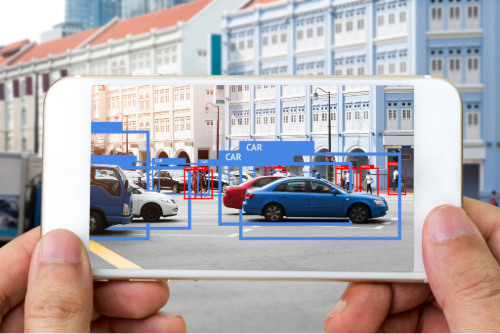

Image application

- Image classification to identify objects

- Data sharding

Audio application

- Provide voice commands

- Example: "Turn down the volume"

Manipulating a sample image dataset

print(dataset)

Dataset({

features: ['img', 'label'],

num_rows: 1000

})

print(dataset[0]["img"])

<PIL.JpegImagePlugin.JpegImageFile image mode=RGB size=720x480>

Standardize the image format

- Format images: width, height

- Standardize pixel values: mean, standard deviation

AutoImageProcessor loads all preprocessing steps

from transformers import AutoImageProcessor

model = "microsoft/swin-tiny-patch4-window7-224"
image_processor = AutoImageProcessor.from_pretrained(model)

Standardize the image format

dataset = dataset.map(
    lambda examples: {
        "pixel_values": [
            image_processor(image, return_tensors="pt").pixel_values
            for image in examples["img"]
        ]
    },
    batched=True,
)
print(dataset)

Dataset({

features: ['img', 'label', 'pixel_values'],

num_rows: 1000

})
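To make the two standardization steps concrete (fixed width and height, then per-channel mean and standard deviation), here is a minimal NumPy sketch. It is not the processor's actual implementation. The 224x224 target size and the ImageNet-style statistics are assumptions chosen for illustration, and nearest-neighbor resizing is a simplification of whatever interpolation the real processor is configured to use.

```python
import numpy as np

# Assumed values for illustration: a 224x224 target size and ImageNet-style
# channel statistics. The real values come from the processor's configuration.
TARGET_SIZE = (224, 224)
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def resize_nearest(image, size):
    """Resize an (H, W, C) array to size=(height, width) by nearest-neighbor sampling."""
    height, width = image.shape[:2]
    rows = np.arange(size[0]) * height // size[0]
    cols = np.arange(size[1]) * width // size[1]
    return image[rows][:, cols]


def standardize(image):
    """Return a (C, H, W) float32 array with resized, rescaled, normalized pixels."""
    resized = resize_nearest(image, TARGET_SIZE)
    scaled = resized.astype(np.float32) / 255.0  # pixel values in [0, 1]
    normalized = (scaled - MEAN) / STD           # per-channel mean and std
    return normalized.transpose(2, 0, 1)         # channels first, as PyTorch expects


# A random stand-in for a 720x480 RGB image (width x height), stored as (H, W, C).
fake_image = np.random.default_rng(0).integers(0, 256, size=(480, 720, 3), dtype=np.uint8)
pixel_values = standardize(fake_image)
print(pixel_values.shape)  # (3, 224, 224)
```

Every image leaves this function with the same shape and a comparable value range, which is what lets a model consume them in fixed-size batches.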

Manipulating a sample audio dataset

print(dataset)

DatasetDict({ train: Dataset({features: ['file', 'audio','label'], num_rows: 1000 }), ... })

Standardize the audio format

- Standardize number of samples

- Sampling rate: Number of samples per second

- Max duration: Number of seconds of audio

sampling_rate = 16000  # 16 kHz
max_duration = 1  # 1 second
max_length = sampling_rate * max_duration
print(f"max_length = {max_length:,} samples")

max_length = 16,000 samples
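To show what a fixed `max_length` means for individual clips, here is a minimal pure-Python sketch. The helper `fit_to_length` is hypothetical, not a library function. The padding branch is an assumption added for illustration; the slides themselves only cap the length.

```python
def fit_to_length(samples, max_length, pad_value=0.0):
    """Truncate a list of samples to max_length, or right-pad it with pad_value."""
    if len(samples) >= max_length:
        return samples[:max_length]
    # Assumption for illustration: shorter clips are padded with silence.
    return samples + [pad_value] * (max_length - len(samples))


sampling_rate = 16000  # 16 kHz
max_duration = 1       # 1 second
max_length = sampling_rate * max_duration

long_clip = [0.1] * 24000   # 1.5 seconds of audio
short_clip = [0.1] * 8000   # 0.5 seconds of audio

print(len(fit_to_length(long_clip, max_length)))   # 16000
print(len(fit_to_length(short_clip, max_length)))  # 16000
```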

Standardize the audio format

from transformers import AutoFeatureExtractor

model = "facebook/wav2vec2-base"
feature_extractor = AutoFeatureExtractor.from_pretrained(model)

def preprocess_function(split_data):
    # Collect the raw waveform of every example in the batch
    audio_arrays = [x["array"] for x in split_data["audio"]]
    # Cut each waveform to at most max_duration seconds of samples
    inputs = feature_extractor(
        audio_arrays,
        sampling_rate=feature_extractor.sampling_rate,
        max_length=int(feature_extractor.sampling_rate * max_duration),
        truncation=True,
    )
    return inputs

Apply the preprocessing function

- Map the preprocess_function to the dataset

- remove_columns: remove audio and file columns

- batched: process dataset examples in batches

dataset = dataset["train"].map(
    preprocess_function,
    remove_columns=["audio", "file"],
    batched=True,
)
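To illustrate what `batched` and `remove_columns` mean, here is a toy pure-Python stand-in for a batched map. It is a simplified sketch, not the library's implementation. The names `map_batched` and `add_length` and the sample rows are hypothetical.

```python
def map_batched(rows, function, batch_size=2, remove_columns=()):
    """Toy stand-in for a batched map over a list of row dictionaries."""
    output = []
    for start in range(0, len(rows), batch_size):
        chunk = rows[start:start + batch_size]
        # A batched function receives one dict of column -> list of values.
        batch = {column: [row[column] for row in chunk] for column in chunk[0]}
        new_columns = function(batch)
        for i, row in enumerate(chunk):
            # Drop the removed columns, then attach the newly computed ones.
            kept = {k: v for k, v in row.items() if k not in remove_columns}
            kept.update({k: values[i] for k, values in new_columns.items()})
            output.append(kept)
    return output


def add_length(batch):
    # Hypothetical preprocessing step: record how many samples each clip has.
    return {"num_samples": [len(audio["array"]) for audio in batch["audio"]]}


rows = [
    {"file": "a.wav", "audio": {"array": [0.0, 0.1, 0.2]}, "label": 0},
    {"file": "b.wav", "audio": {"array": [0.3, 0.4]}, "label": 1},
    {"file": "c.wav", "audio": {"array": [0.5]}, "label": 0},
]
result = map_batched(rows, add_length, remove_columns=("audio", "file"))
print(result[0])  # {'label': 0, 'num_samples': 3}
```

The function sees whole columns at once rather than one example at a time, which is why the preprocessing function iterates over a list of audio entries.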

Apply the preprocessing function

print(dataset)

Dataset({
    features: ['label', 'input_values'],
    num_rows: 1000
})

Prepare data for distributed training

- DataLoader: prepare the data for loading and iterating during training

- accelerator.prepare(): place the data on CPUs or GPUs based on availability

- Data sharding: each GPU processes a subset of training data, like sharing slices of pizza

accelerator.prepare() works with PyTorch DataLoaders (torch.utils.data.DataLoader)

from accelerate import Accelerator
from torch.utils.data import DataLoader

# Batch and shuffle the preprocessed dataset
dataloader = DataLoader(dataset, batch_size=32, shuffle=True)

# Let Accelerate place the data on the available devices
accelerator = Accelerator()
dataloader = accelerator.prepare(dataloader)
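The pizza-slice idea behind data sharding can be sketched in a few lines of plain Python. This is only an illustration of splitting examples across processes. It is not how Accelerate distributes data internally, and the helper `shard_indices` and the process count are hypothetical.

```python
def shard_indices(num_examples, num_processes, process_index):
    """Return the example indices handled by one process (round-robin split)."""
    return list(range(process_index, num_examples, num_processes))


num_examples = 10
num_processes = 4  # hypothetical number of GPUs

shards = [
    shard_indices(num_examples, num_processes, rank)
    for rank in range(num_processes)
]
for rank, shard in enumerate(shards):
    print(f"process {rank}: {shard}")
# process 0: [0, 4, 8]
# process 1: [1, 5, 9]
# process 2: [2, 6]
# process 3: [3, 7]
```

Each example lands in exactly one shard, so the processes together cover the dataset once per epoch without duplicating work.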

Let's practice!
