Fine-tune models with Trainer

Efficient AI Model Training with PyTorch

Dennis Lee

Data Engineer

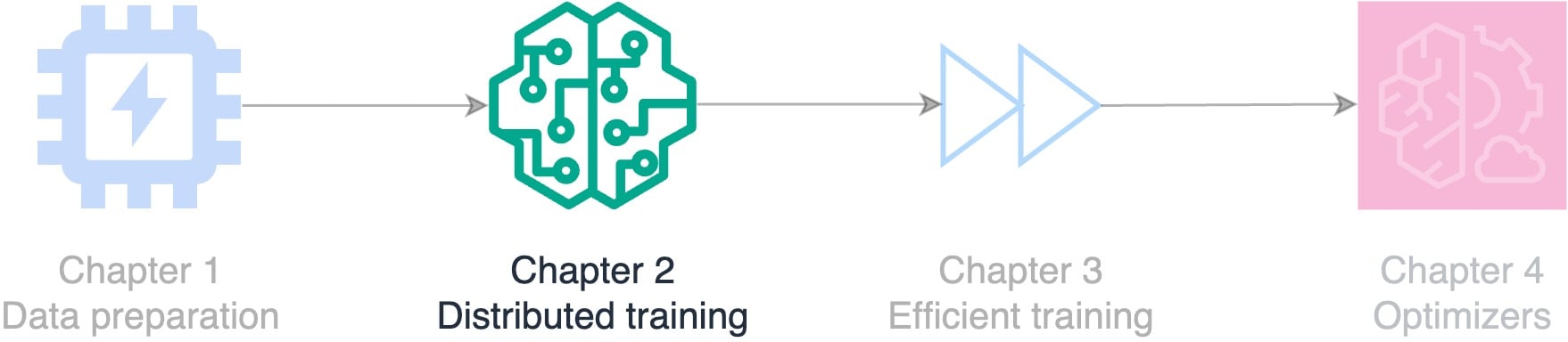

Data preparation

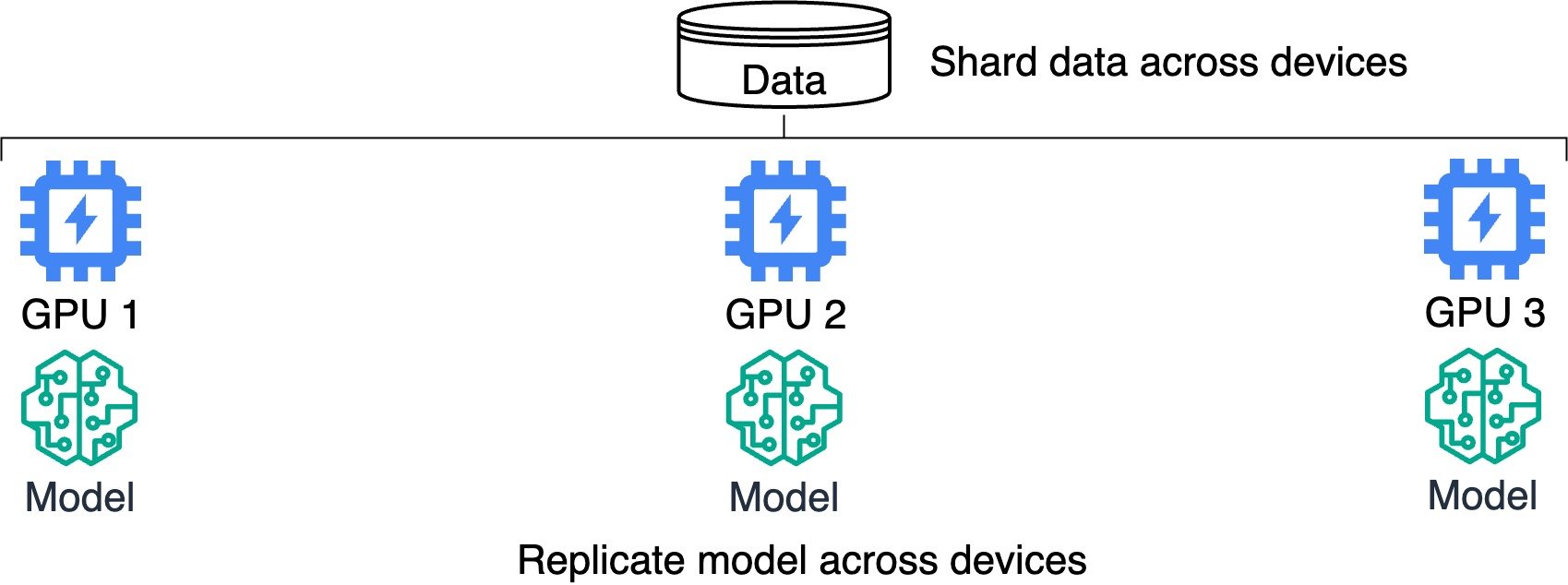

Distributed training

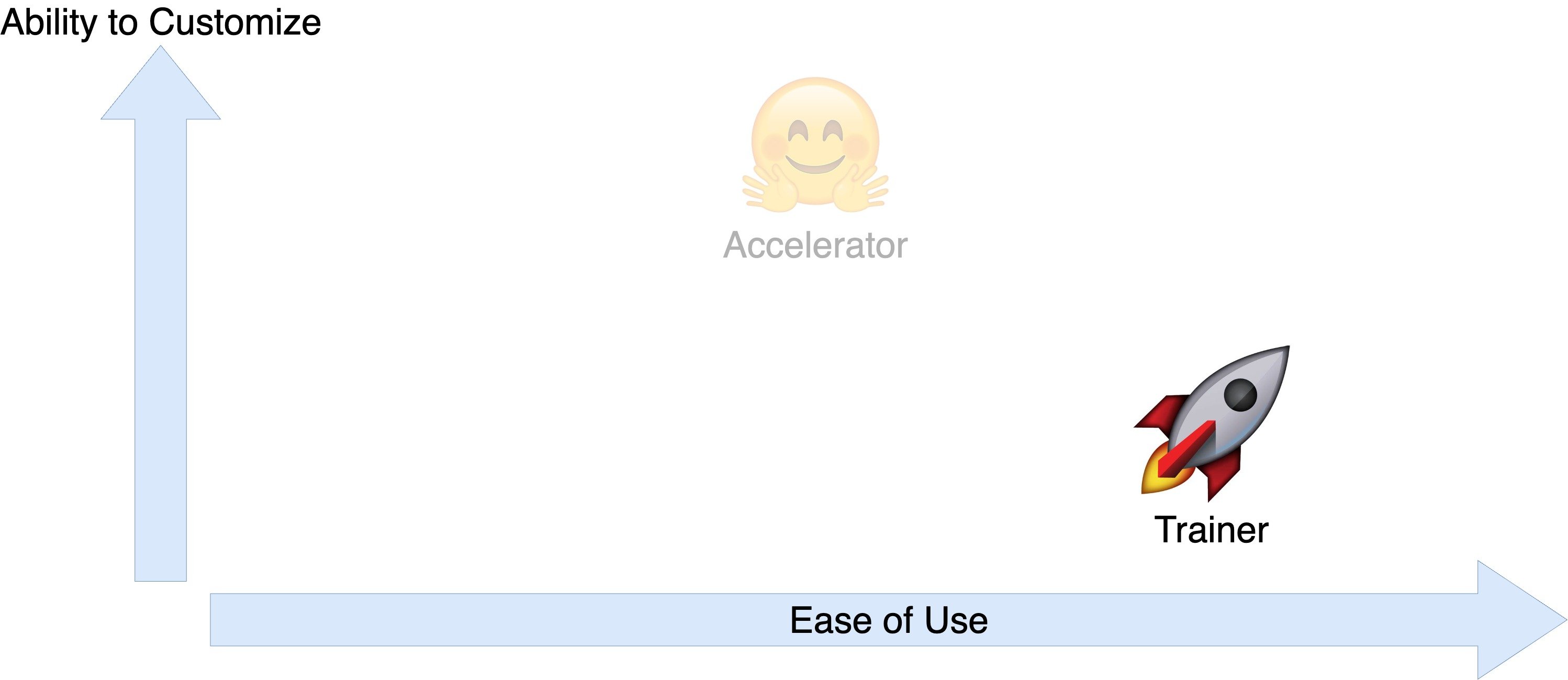

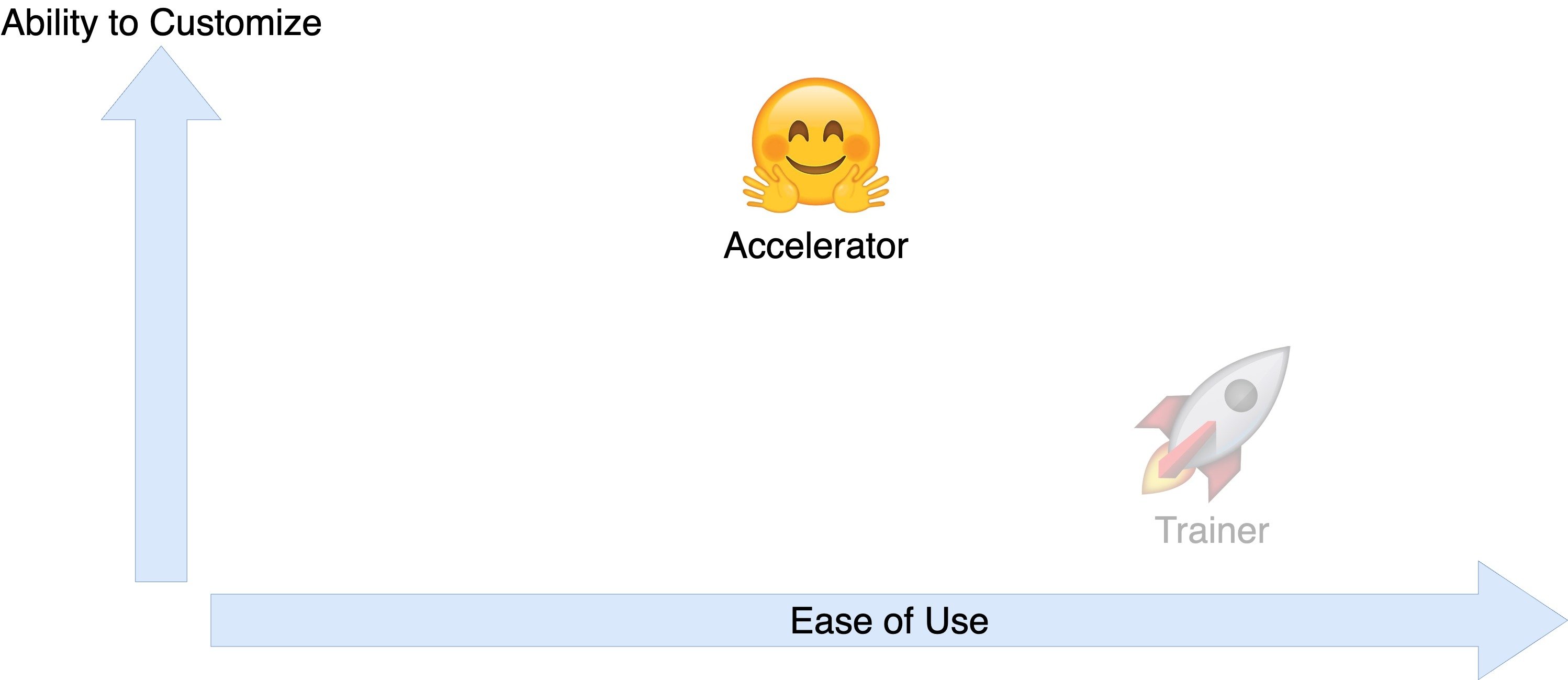

Trainer and Accelerator


Turbocharge training with Trainer

Trainer library

from transformers import Trainer

- Run model on each device in parallel

- Speed up training, like assembly lines

- Review inputs: dataset, model, metrics

- Develop sentiment analysis for e-commerce
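Running the model on each device in parallel means each device trains on its own slice of the dataset. The sketch below shows one way to split the indices, loosely modeled on PyTorch's DistributedSampler with shuffling turned off. shard_indices is a hypothetical helper; Trainer takes care of this split itself when launched on several devices.

```python
import math


def shard_indices(num_samples: int, num_devices: int, rank: int) -> list[int]:
    """Indices that device `rank` processes in one epoch (unshuffled sketch)."""
    per_device = math.ceil(num_samples / num_devices)
    total = per_device * num_devices
    # Wrap around to the start of the dataset so every device gets the same count.
    padded = [i % num_samples for i in range(total)]
    # Each device takes every num_devices-th index, starting at its own rank.
    return padded[rank:total:num_devices]


if __name__ == "__main__":
    # 10 examples across 4 devices: two indices are reused to even out the split.
    for rank in range(4):
        print(rank, shard_indices(10, 4, rank))
```

With 10 reviews and 4 devices, device 2 gets [2, 6, 0] and device 3 gets [3, 7, 1]. Indices 0 and 1 are reused so that every device runs the same number of steps.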

Product review sentiment dataset

print(dataset)

DatasetDict({
    train: Dataset({
        features: ['Text', 'Label'],
        num_rows: 1000
    }), ...})

print(f'"{dataset["train"]["Text"][0]}": {dataset["train"]["Label"][0]}')

"I love this product!": positive

Convert labels to integers

def map_labels(example):
    if example["Label"] == "negative":
        return {"labels": 0}
    else:
        return {"labels": 1}

dataset = dataset.map(map_labels)
print(f'First label: {dataset["train"]["labels"][0]}')

First label: 1
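map_labels sends every label other than "negative" to 1, so a typo or an unexpected extra class would silently become positive. A stricter variant, sketched here with a hypothetical LABEL2ID dictionary and a made-up second review, raises an error instead. It can be passed to dataset.map in the same way.

```python
# Hypothetical explicit label vocabulary for this dataset.
LABEL2ID = {"negative": 0, "positive": 1}
ID2LABEL = {idx: label for label, idx in LABEL2ID.items()}


def map_labels_strict(example: dict) -> dict:
    """Map a string label to an integer id, failing loudly on unknown labels."""
    label = example["Label"]
    if label not in LABEL2ID:
        raise ValueError(f"Unexpected label: {label!r}")
    return {"labels": LABEL2ID[label]}


if __name__ == "__main__":
    rows = [
        {"Text": "I love this product!", "Label": "positive"},
        {"Text": "Broke after one day.", "Label": "negative"},  # made-up example
    ]
    print([map_labels_strict(row)["labels"] for row in rows])  # [1, 0]
```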

Define the tokenizer and model

- Load pre-trained model and tokenizer:

from transformers import AutoModelForSequenceClassification, AutoTokenizer

model = AutoModelForSequenceClassification.from_pretrained("distilbert-base-uncased", num_labels=2)
tokenizer = AutoTokenizer.from_pretrained("distilbert-base-uncased")

- Apply the tokenizer to the Text field:

def encode(examples):
    # Pad every review to the tokenizer's maximum length (512 tokens for DistilBERT)
    # and truncate anything longer
    return tokenizer(examples["Text"], padding="max_length", truncation=True, return_tensors="pt")

dataset = dataset.map(encode, batched=True)
print(f'The first tokenized review is {dataset["train"]["input_ids"][0]}.')

The first tokenized review is [101, 1045, 2293, 2023, 4031, 999, 102].
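padding="max_length" and truncation=True force every review to the same length so that reviews can be batched together. The sketch below imitates both steps on the token IDs shown above, using small made-up lengths instead of DistilBERT's 512. pad_and_truncate is hypothetical and assumes the IDs already start with [CLS] (101) and end with [SEP] (102), with [PAD] as 0.

```python
PAD_ID = 0  # [PAD] id in the uncased BERT/DistilBERT vocabulary


def pad_and_truncate(input_ids: list[int], max_length: int) -> dict:
    """Simplified illustration of padding="max_length" plus truncation=True."""
    if len(input_ids) > max_length:
        # Keep the final [SEP] token when cutting the sequence short.
        input_ids = input_ids[: max_length - 1] + [input_ids[-1]]
    # 1 marks real tokens, 0 marks padding the model should ignore.
    attention_mask = [1] * len(input_ids)
    padding = max_length - len(input_ids)
    return {
        "input_ids": input_ids + [PAD_ID] * padding,
        "attention_mask": attention_mask + [0] * padding,
    }


if __name__ == "__main__":
    ids = [101, 1045, 2293, 2023, 4031, 999, 102]  # "I love this product!"
    print(pad_and_truncate(ids, 10))
    print(pad_and_truncate(ids, 5))  # {'input_ids': [101, 1045, 2293, 2023, 102], 'attention_mask': [1, 1, 1, 1, 1]}
```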

Define evaluation metrics

import evaluate
import numpy as np

def compute_metrics(eval_predictions):
    load_accuracy = evaluate.load("accuracy")
    load_f1 = evaluate.load("f1")
    # Trainer passes the evaluation logits and the true labels
    logits, labels = eval_predictions
    # The predicted class is the index of the largest logit
    predictions = np.argmax(logits, axis=-1)
    accuracy = load_accuracy.compute(predictions=predictions, references=labels)["accuracy"]
    f1 = load_f1.compute(predictions=predictions, references=labels)["f1"]
    return {"accuracy": accuracy, "f1": f1}
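compute_metrics turns logits into predicted classes with argmax and then scores them. For intuition, here is a NumPy-only sketch of the same two numbers, treating label 1 (positive) as the positive class. accuracy_and_f1 is a hypothetical helper, not part of evaluate, and the logits and labels are made up.

```python
import numpy as np


def accuracy_and_f1(logits: np.ndarray, labels: np.ndarray) -> dict:
    """Binary accuracy and F1 (positive class = 1) computed directly from logits."""
    predictions = np.argmax(logits, axis=-1)
    accuracy = float(np.mean(predictions == labels))
    tp = int(np.sum((predictions == 1) & (labels == 1)))  # true positives
    fp = int(np.sum((predictions == 1) & (labels == 0)))  # false positives
    fn = int(np.sum((predictions == 0) & (labels == 1)))  # false negatives
    # F1 = 2TP / (2TP + FP + FN); this sketch returns 0.0 when the denominator is 0.
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return {"accuracy": accuracy, "f1": f1}


if __name__ == "__main__":
    logits = np.array([[2.0, 1.0], [0.0, 3.0], [1.0, 0.0], [0.0, 1.0]])
    labels = np.array([0, 1, 1, 1])
    print(accuracy_and_f1(logits, labels))  # {'accuracy': 0.75, 'f1': 0.8}
```

One positive review is predicted as negative, so accuracy is 3/4 and F1 is 2·2 / (2·2 + 0 + 1) = 0.8.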

Training arguments

- output_dir: where to save the model
- Specify hyperparameters (e.g., learning_rate and weight_decay)
- save_strategy: save a checkpoint after each epoch
- evaluation_strategy: evaluate metrics after each epoch

from transformers import TrainingArguments

training_args = TrainingArguments(
    output_dir="output_folder",
    learning_rate=2e-5,
    per_device_train_batch_size=16,
    per_device_eval_batch_size=16,
    num_train_epochs=2,
    weight_decay=0.01,
    save_strategy="epoch",
    evaluation_strategy="epoch",  # named eval_strategy in newer transformers releases
)
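These arguments determine how many optimizer steps each epoch takes, and Trainer names each checkpoint folder after the global step at which it was saved (output_dir/checkpoint-<step>). A back-of-the-envelope sketch, assuming no gradient accumulation, the default of keeping the last smaller batch, and the 1000-row training split shown earlier. epoch_checkpoints is a hypothetical helper.

```python
import math
import os


def epoch_checkpoints(num_rows: int, per_device_batch_size: int, num_devices: int,
                      num_epochs: int, output_dir: str) -> tuple[int, list[str]]:
    """Estimate steps per epoch and the folders written by save_strategy="epoch"."""
    # Each device sees an equal share of the rows per epoch (padded up if uneven).
    rows_per_device = math.ceil(num_rows / num_devices)
    steps_per_epoch = math.ceil(rows_per_device / per_device_batch_size)
    # One checkpoint per epoch, named after the global step at the end of that epoch.
    checkpoints = [
        os.path.join(output_dir, f"checkpoint-{steps_per_epoch * epoch}")
        for epoch in range(1, num_epochs + 1)
    ]
    return steps_per_epoch, checkpoints


if __name__ == "__main__":
    # 1000 training rows, batch size 16, 2 epochs, a single CPU.
    steps, checkpoints = epoch_checkpoints(1000, 16, 1, 2, "output_folder")
    print(steps)  # 63
    print([os.path.basename(path) for path in checkpoints])  # ['checkpoint-63', 'checkpoint-126']
    # Four devices each process 16 rows per step, i.e. 64 rows per step in total.
    print(epoch_checkpoints(1000, 16, 4, 2, "output_folder")[0])  # 16
```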

Setting up Trainer

from transformers import Trainer

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=dataset["train"],
    eval_dataset=dataset["validation"],
    compute_metrics=compute_metrics,
)
trainer.train()

{'epoch': 1.0, 'eval_loss': 0.79, 'eval_accuracy': 0.00, 'eval_f1': 0.00}

{'epoch': 2.0, 'eval_loss': 0.65, 'eval_accuracy': 0.11, 'eval_f1': 0.15}

print(trainer.args.device)

cpu

Running sentiment analysis for e-commerce

sample_review = "This product is amazing!"
input_ids = tokenizer.encode(sample_review, return_tensors='pt')
print(f"Tokenized review: {input_ids}")

Tokenized review: tensor([[ 101, 2023, 4031, 2003, 6429, 999, 102 ]])


import torch

# Inference only: no gradients are needed
with torch.no_grad():
    output = model(input_ids)

print(f"Output logits: {output.logits}")

Output logits: tensor([[ -0.0538, 0.1300 ]])

predicted_label = torch.argmax(output.logits, dim=1).item()

print(f"Predicted label: {predicted_label}")

Predicted label: 1

sentiment = "Negative" if predicted_label == 0 else "Positive"

print(f'The sentiment of the product review is "{sentiment}."')

The sentiment of the product review is "Positive."
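argmax picks the larger logit, but the logits alone do not say how confident the model is. Softmax turns them into probabilities without changing which class wins. A sketch with a hypothetical logits_to_sentiment helper, applied to the logits printed above:

```python
import math

# Hypothetical id-to-name mapping, matching the Negative/Positive convention above.
ID2SENTIMENT = {0: "Negative", 1: "Positive"}


def logits_to_sentiment(logits: list[float]) -> tuple[str, float]:
    """Turn raw classifier logits into a label and its softmax probability."""
    # Subtract the largest logit before exponentiating for numerical stability.
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    best = max(range(len(probs)), key=probs.__getitem__)
    return ID2SENTIMENT[best], probs[best]


if __name__ == "__main__":
    label, prob = logits_to_sentiment([-0.0538, 0.1300])
    print(f"{label} ({prob:.3f})")  # Positive (0.546)
```

A probability this close to 0.5 means the model is only slightly more confident in Positive than in Negative for this review.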

Checkpoints with Trainer

- Resume from the latest checkpoint, like pausing a movie

trainer.train(resume_from_checkpoint=True)

{'epoch': 3.0, 'eval_loss': 0.29, 'eval_accuracy': 0.37, 'eval_f1': 0.51}

{'epoch': 4.0, 'eval_loss': 0.23, 'eval_accuracy': 0.46, 'eval_f1': 0.58}

- Resume from a specific checkpoint saved in the output directory

trainer.train(resume_from_checkpoint="model/checkpoint-1000")
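With resume_from_checkpoint=True, Trainer looks for the newest checkpoint-<step> folder in output_dir (transformers exposes this lookup as get_last_checkpoint in transformers.trainer_utils). A standard-library sketch of the same idea, using a hypothetical latest_checkpoint helper, shows why step numbers must be compared as integers:

```python
import os
import re
import tempfile
from typing import Optional

_CHECKPOINT_RE = re.compile(r"^checkpoint-(\d+)$")


def latest_checkpoint(output_dir: str) -> Optional[str]:
    """Return the checkpoint-<step> subfolder with the highest step, or None."""
    found = []
    for name in os.listdir(output_dir):
        match = _CHECKPOINT_RE.match(name)
        # Only count directories whose name ends in a step number.
        if match and os.path.isdir(os.path.join(output_dir, name)):
            found.append((int(match.group(1)), name))
    if not found:
        return None
    # Compare numerically: a plain string sort would rank "checkpoint-63" above "checkpoint-126".
    return os.path.join(output_dir, max(found)[1])


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for step in (63, 126):
            os.makedirs(os.path.join(tmp, f"checkpoint-{step}"))
        print(os.path.basename(latest_checkpoint(tmp)))  # checkpoint-126
```

The returned path could then be passed explicitly as resume_from_checkpoint.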
