Gradient accumulation

Efficient AI Model Training with PyTorch

Dennis Lee

Data Engineer

Distributed training

Efficient training

Improving training efficiency


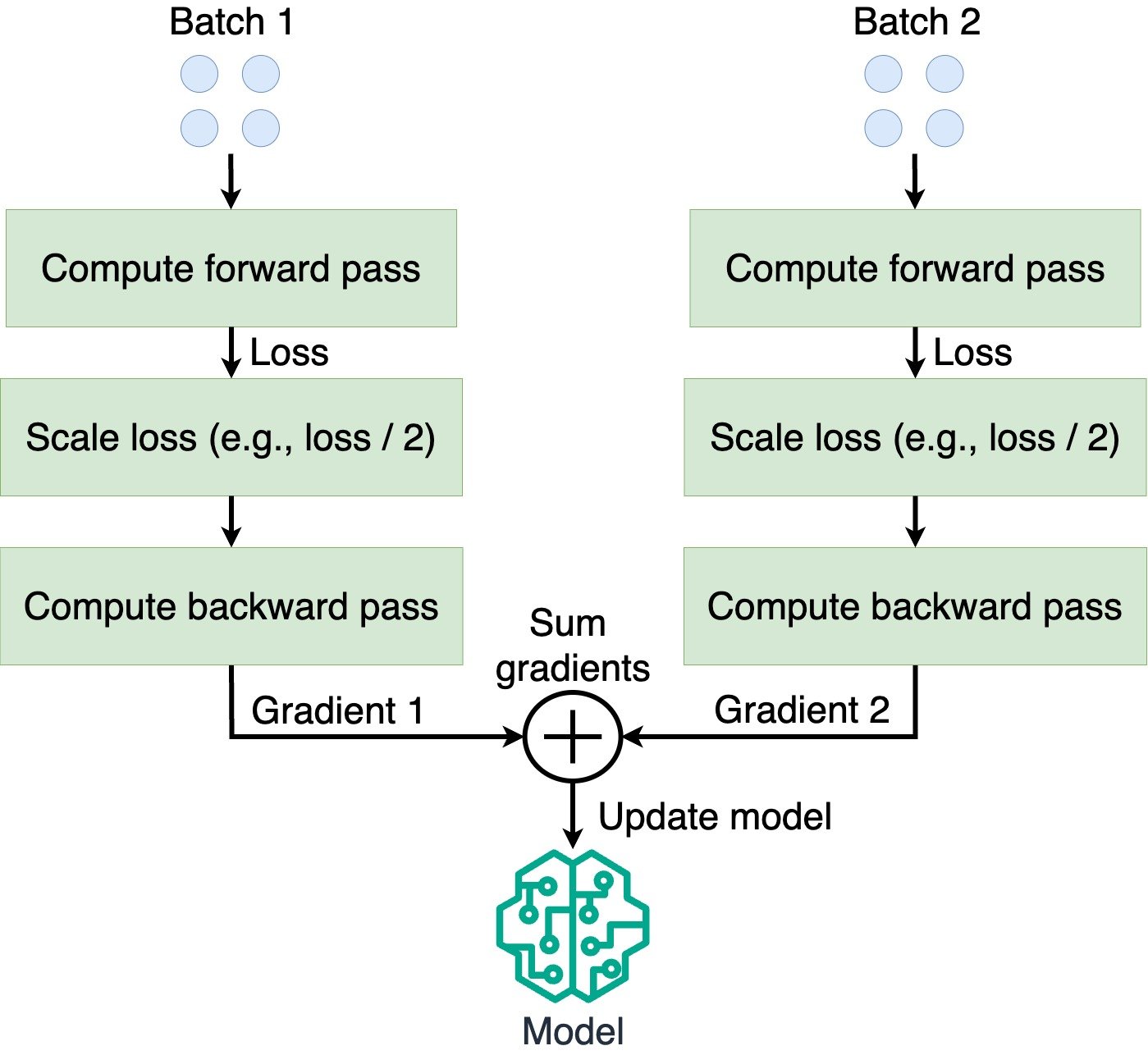

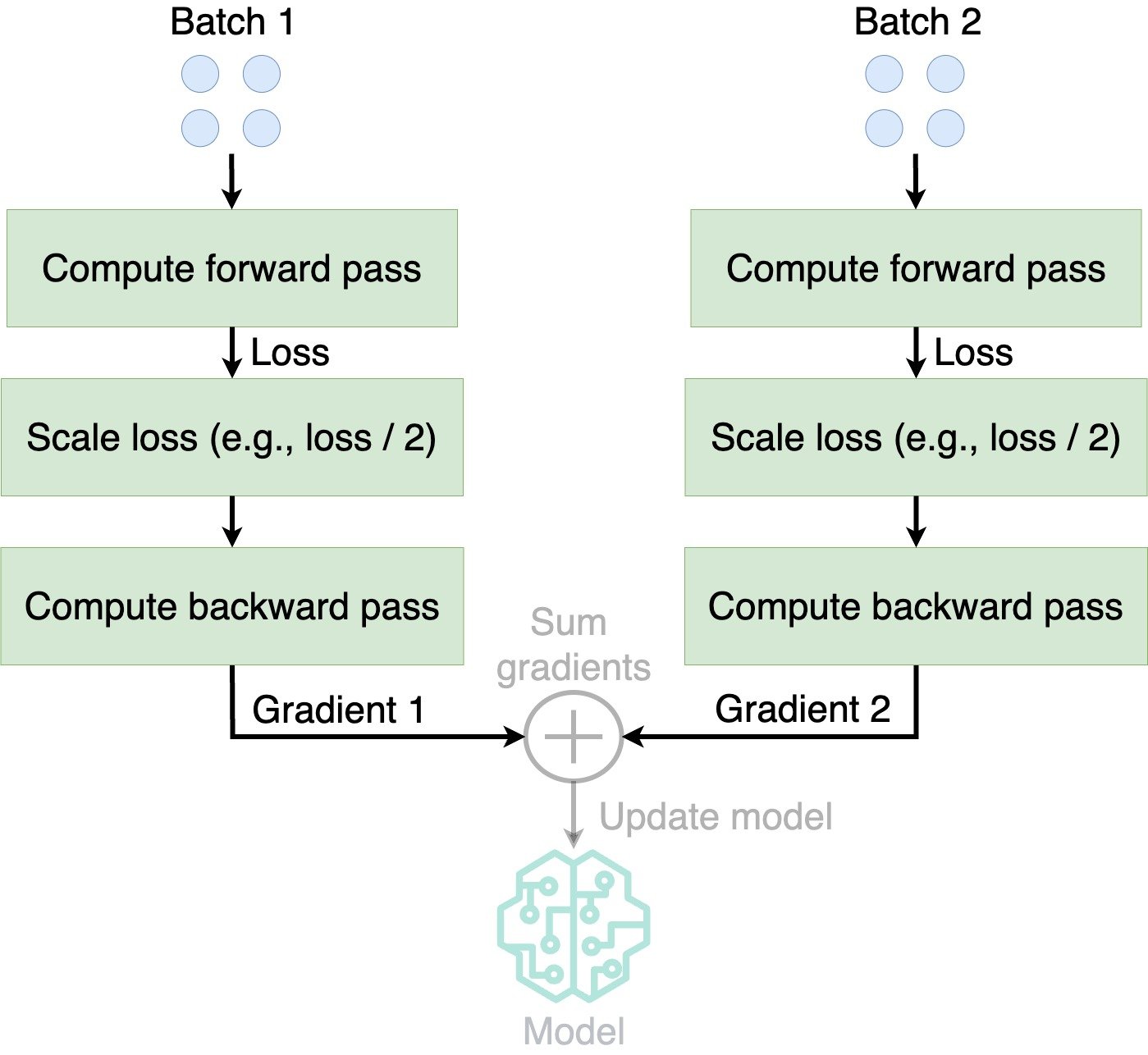

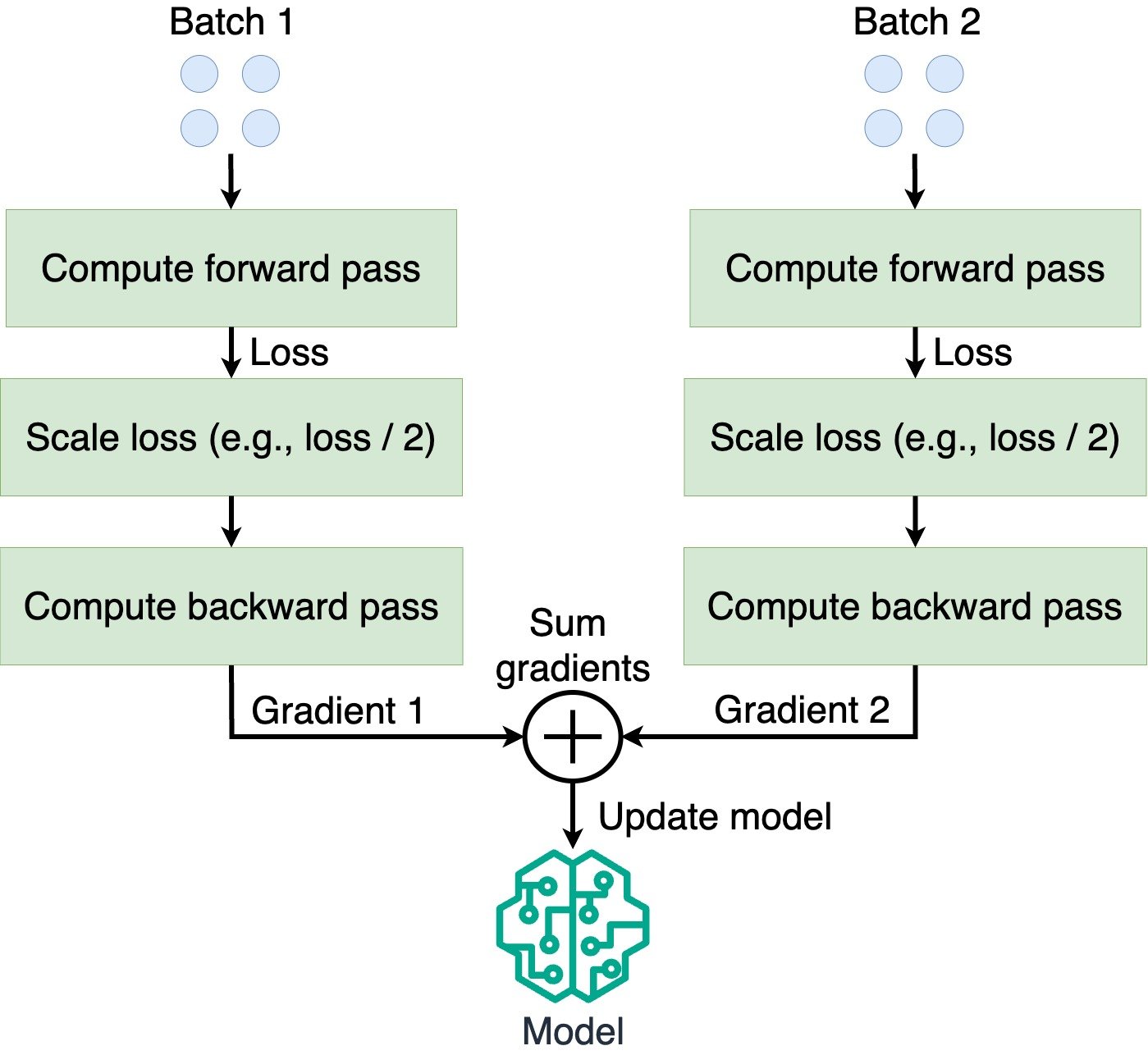

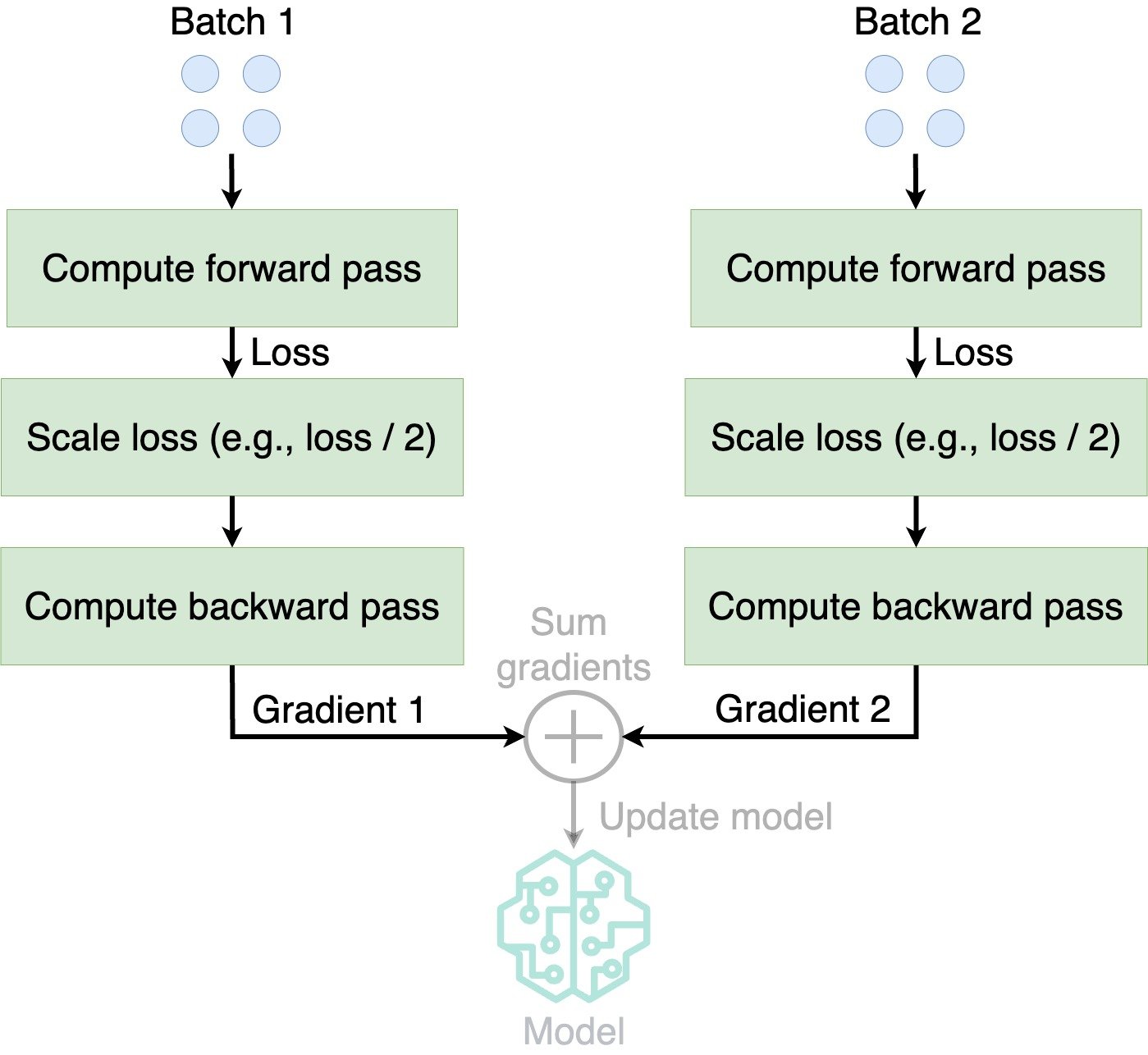

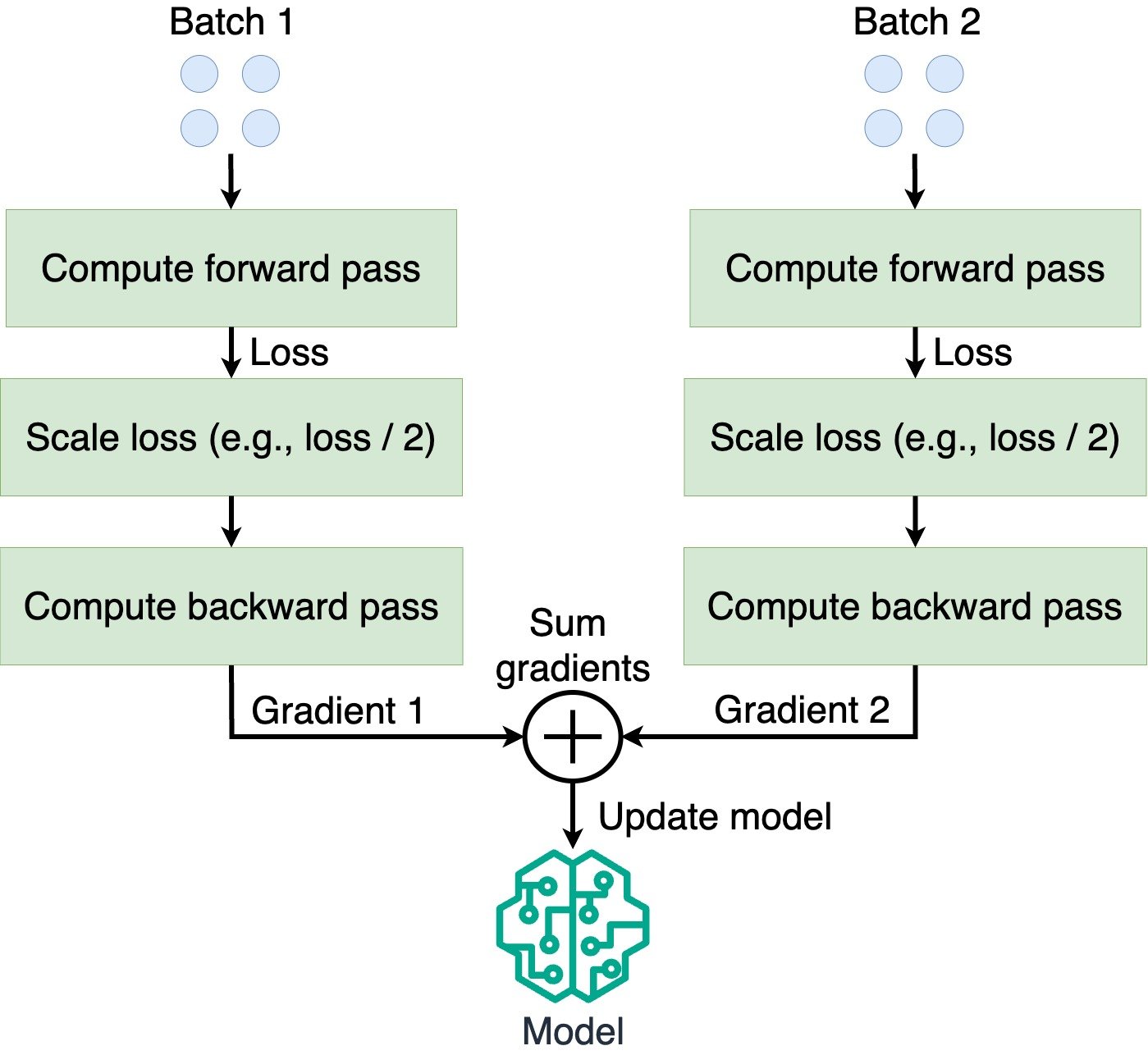

Gradient accumulation improves memory efficiency

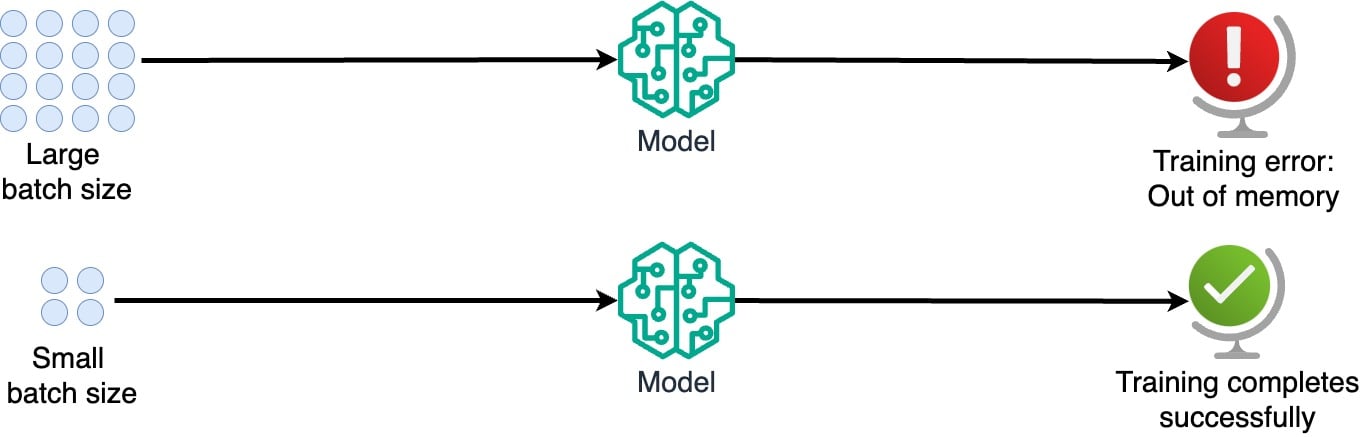

The problem with large batch sizes

- Large batch sizes: Robust gradient estimates for quicker learning

- GPU memory constrains batch sizes

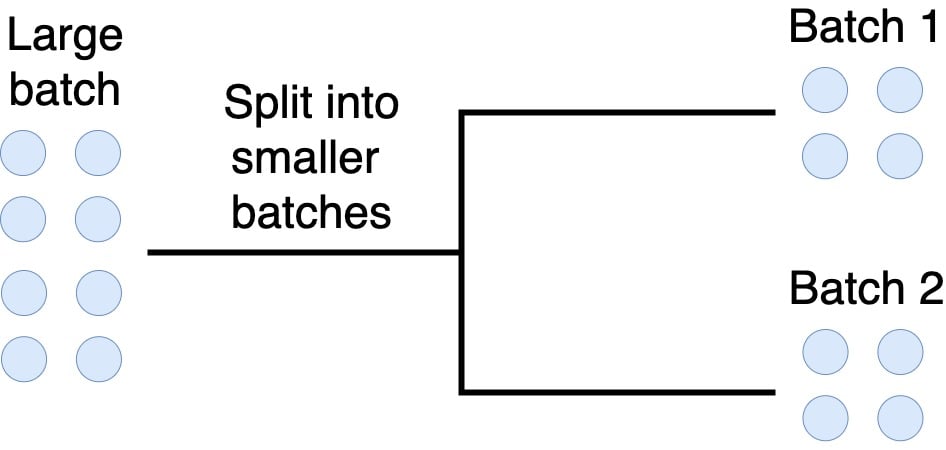

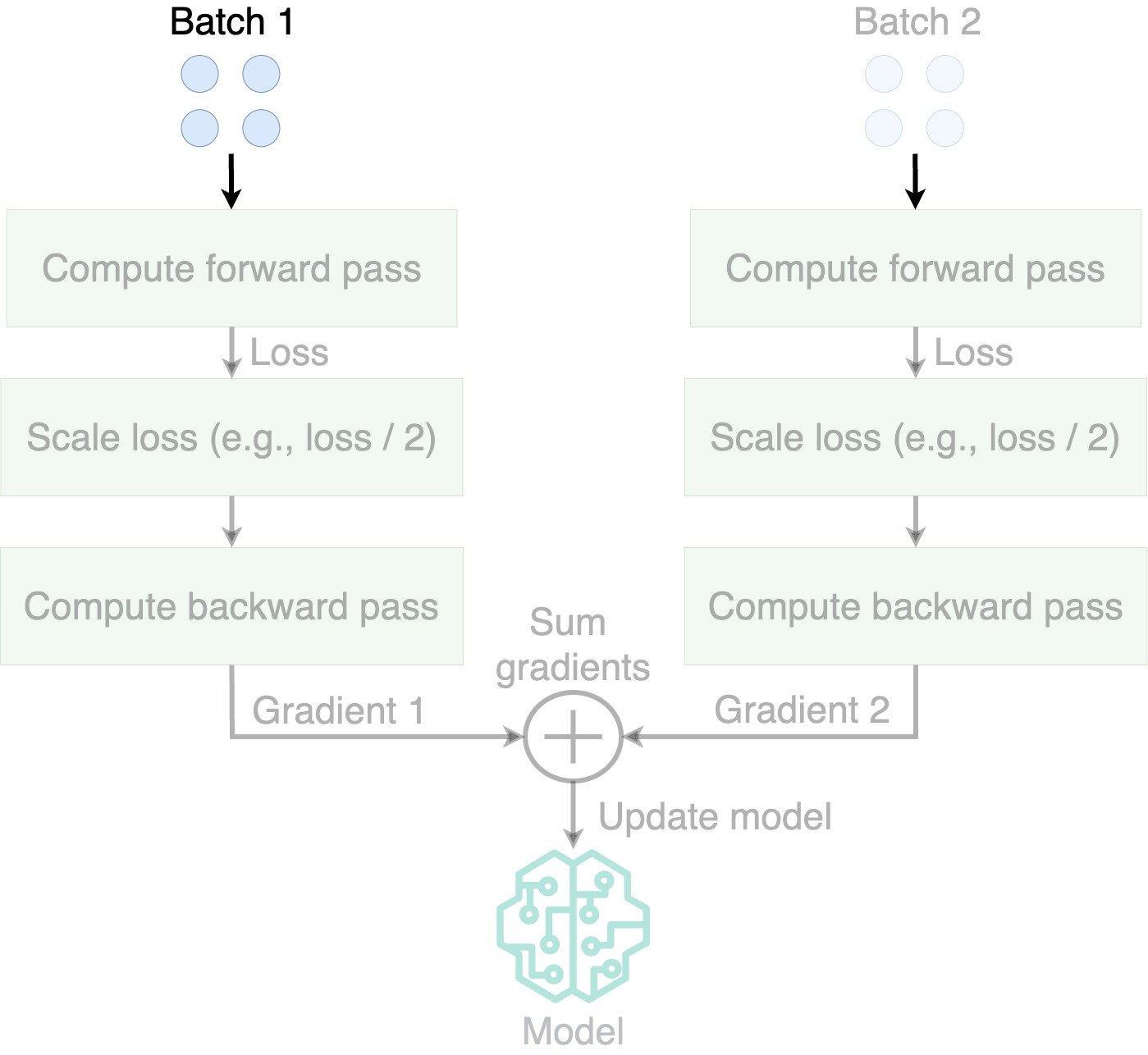

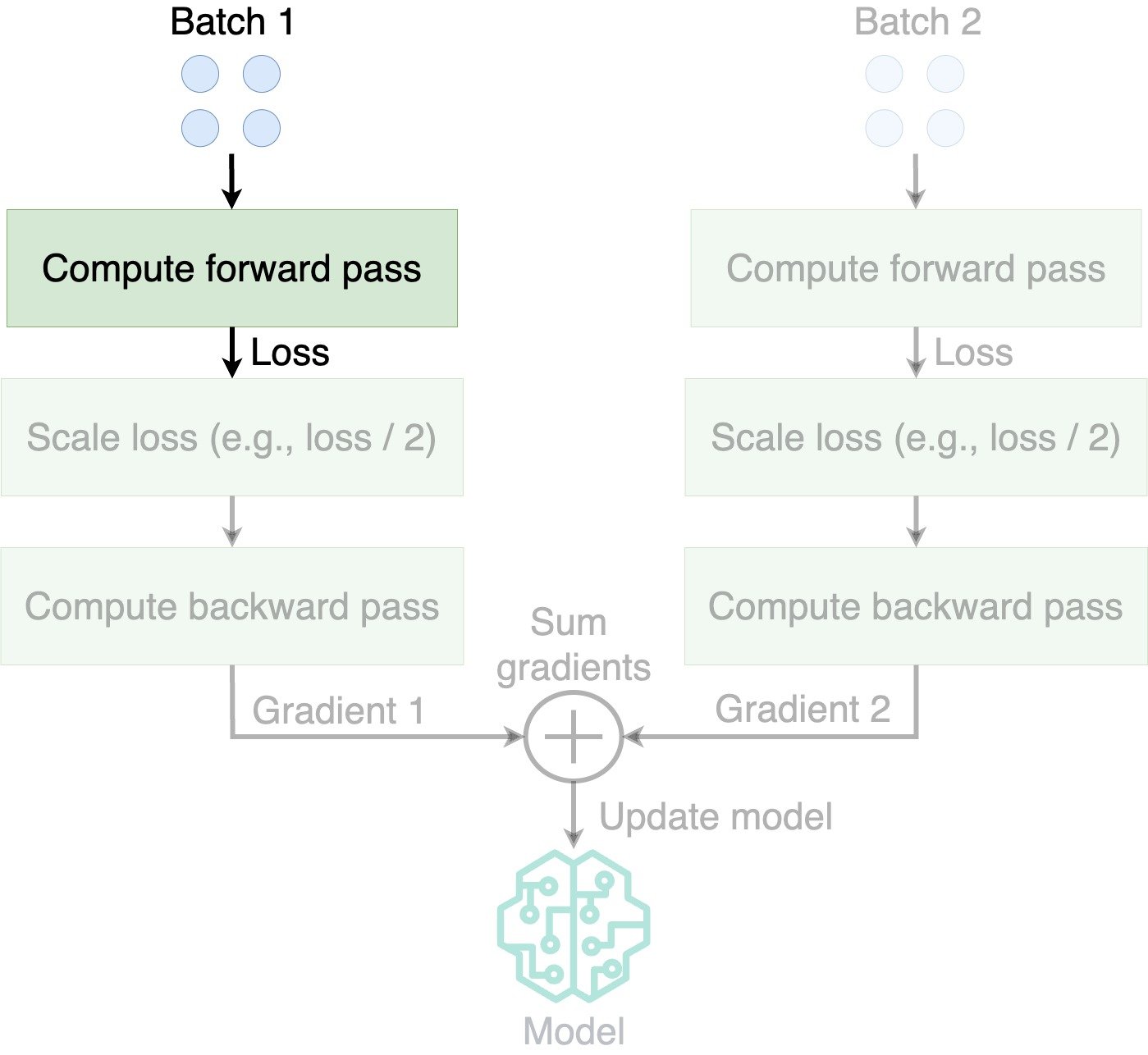

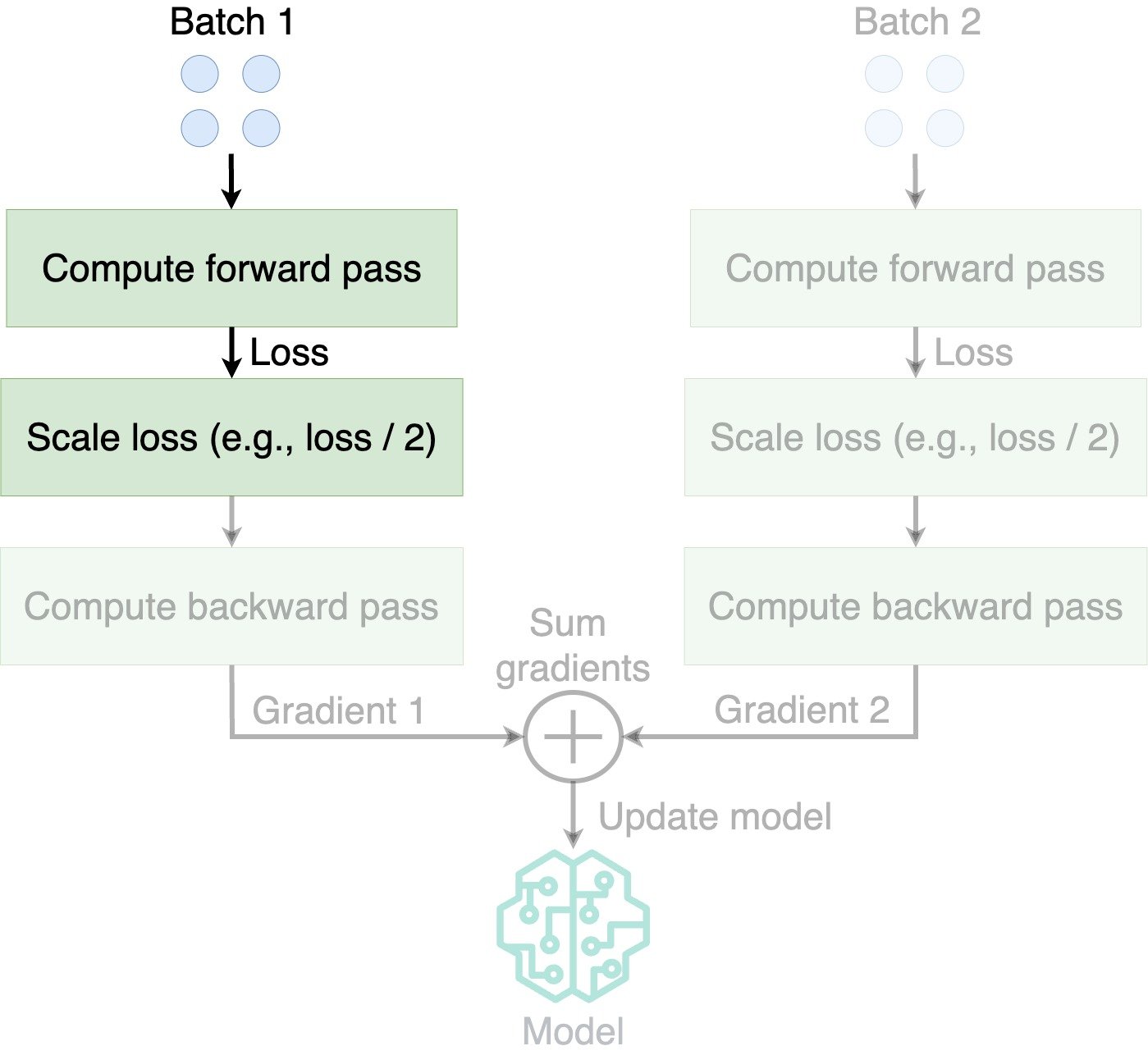

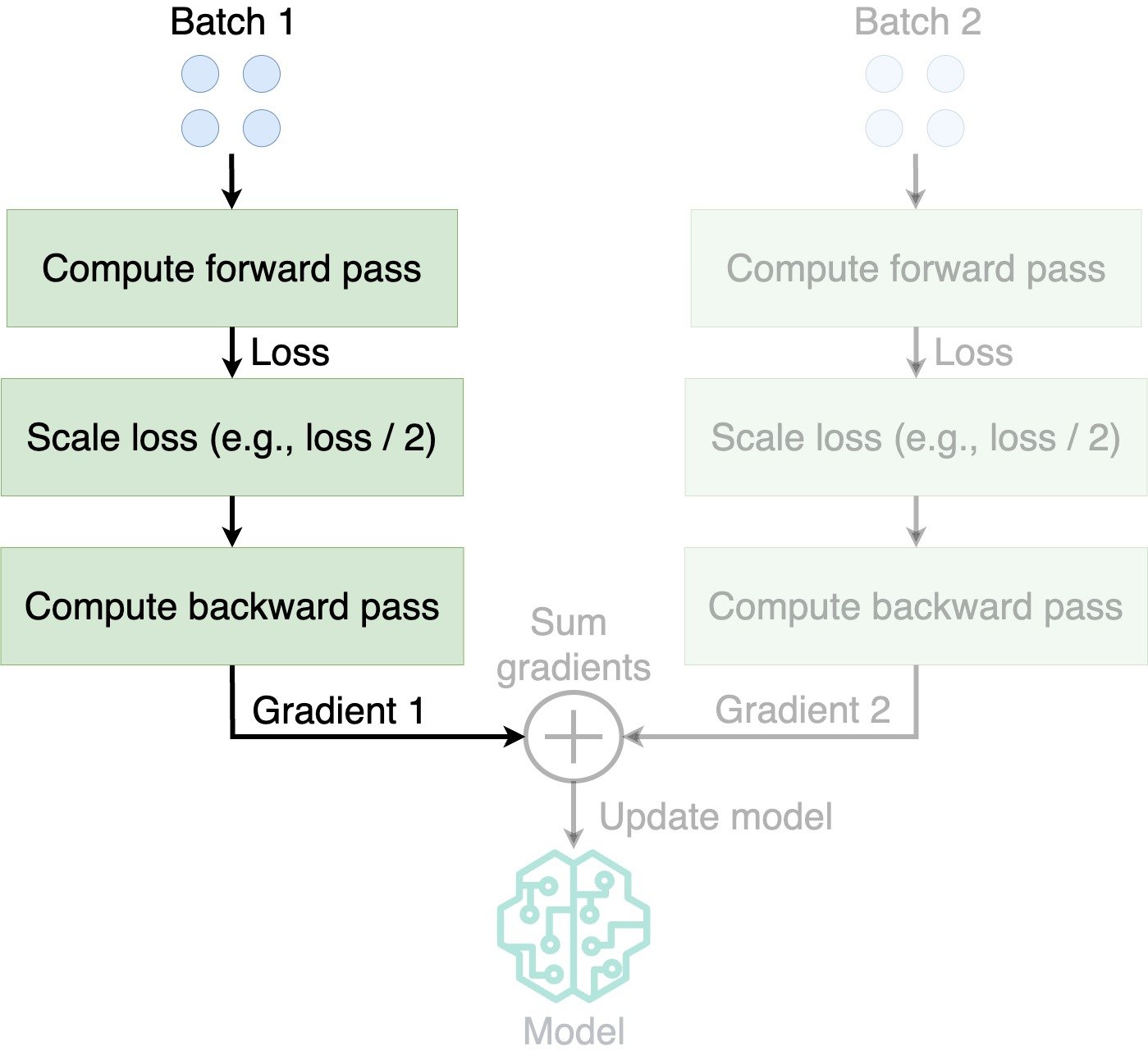

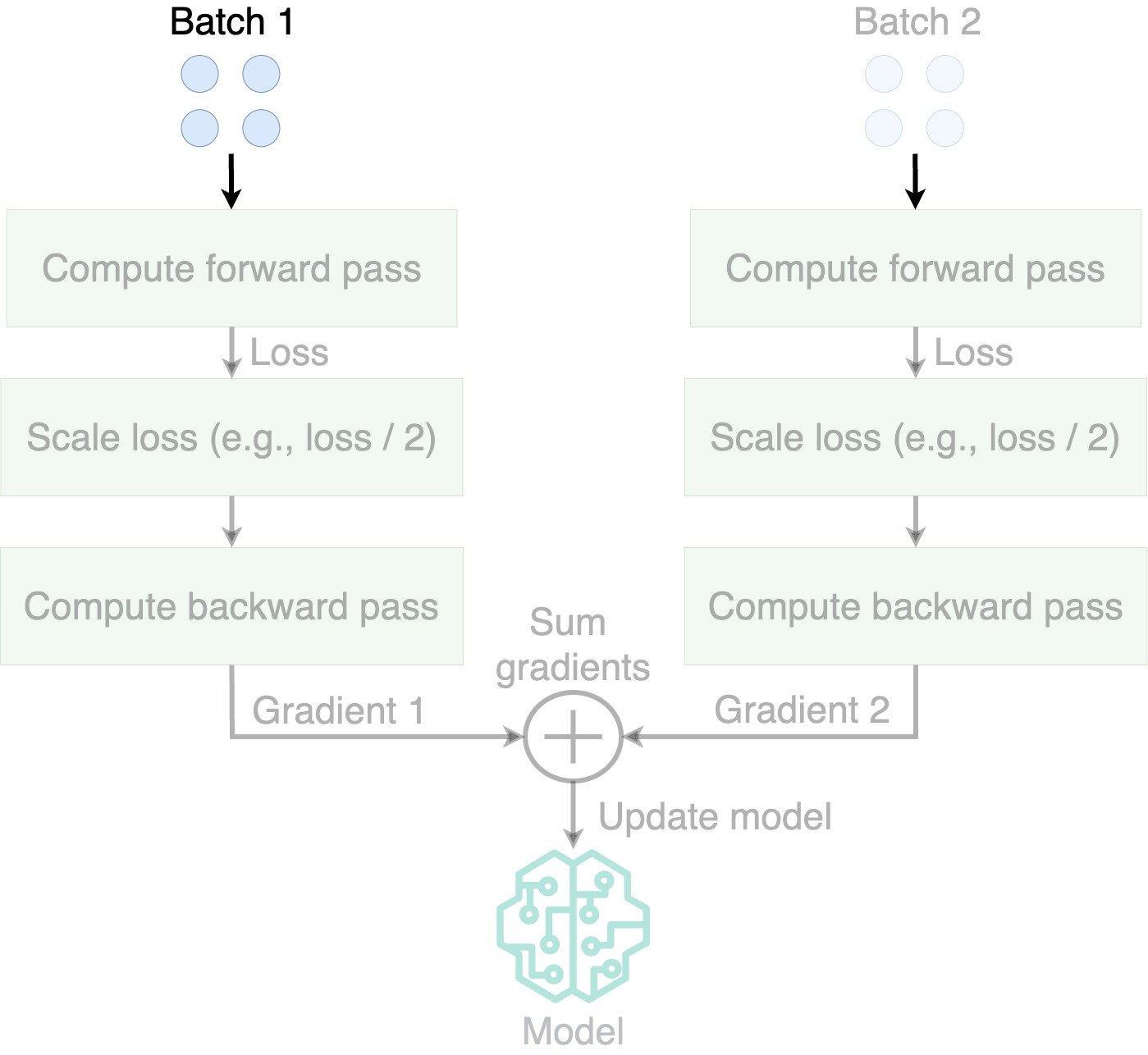

How does gradient accumulation work?

- Gradient accumulation: Sum gradients over smaller batches

- Effectively train the model on a large batch

- Update model parameters after summing gradients
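
The bullets above can be checked numerically. The following is a minimal sketch in plain Python (no PyTorch), assuming a hypothetical one-parameter model `y = w * x` with a mean-squared-error loss; the data values and the helper name `mse_grad` are made up for illustration. It shows that summing scaled gradients over equally sized small batches gives the same gradient as one large batch.

```python
import math


def mse_grad(w, xs, ys):
    """Gradient of mean((w * x - y) ** 2) with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


# Hypothetical toy data and starting weight
w = 0.5
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

# Gradient computed on the full batch of four examples
full_batch_grad = mse_grad(w, xs, ys)

# Same four examples processed as two smaller batches of two
micro_batch_size = 2
steps = len(xs) // micro_batch_size
accumulated_grad = 0.0
for i in range(steps):
    lo, hi = i * micro_batch_size, (i + 1) * micro_batch_size
    # Dividing by the number of steps turns the running sum into an average
    accumulated_grad += mse_grad(w, xs[lo:hi], ys[lo:hi]) / steps

print(full_batch_grad, accumulated_grad)
```

The match is exact here because every small batch has the same size; with unequal batch sizes, the scaled sum would only approximate the full-batch gradient.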

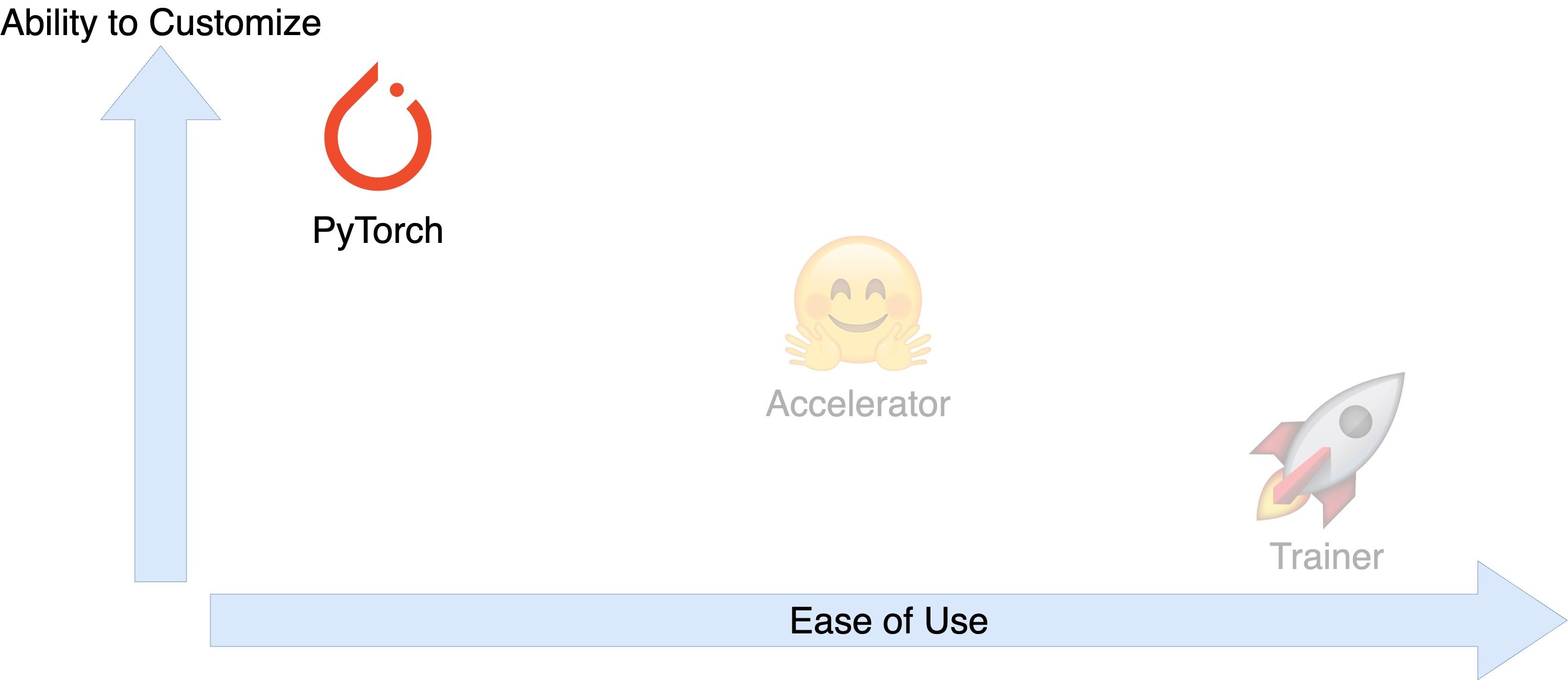

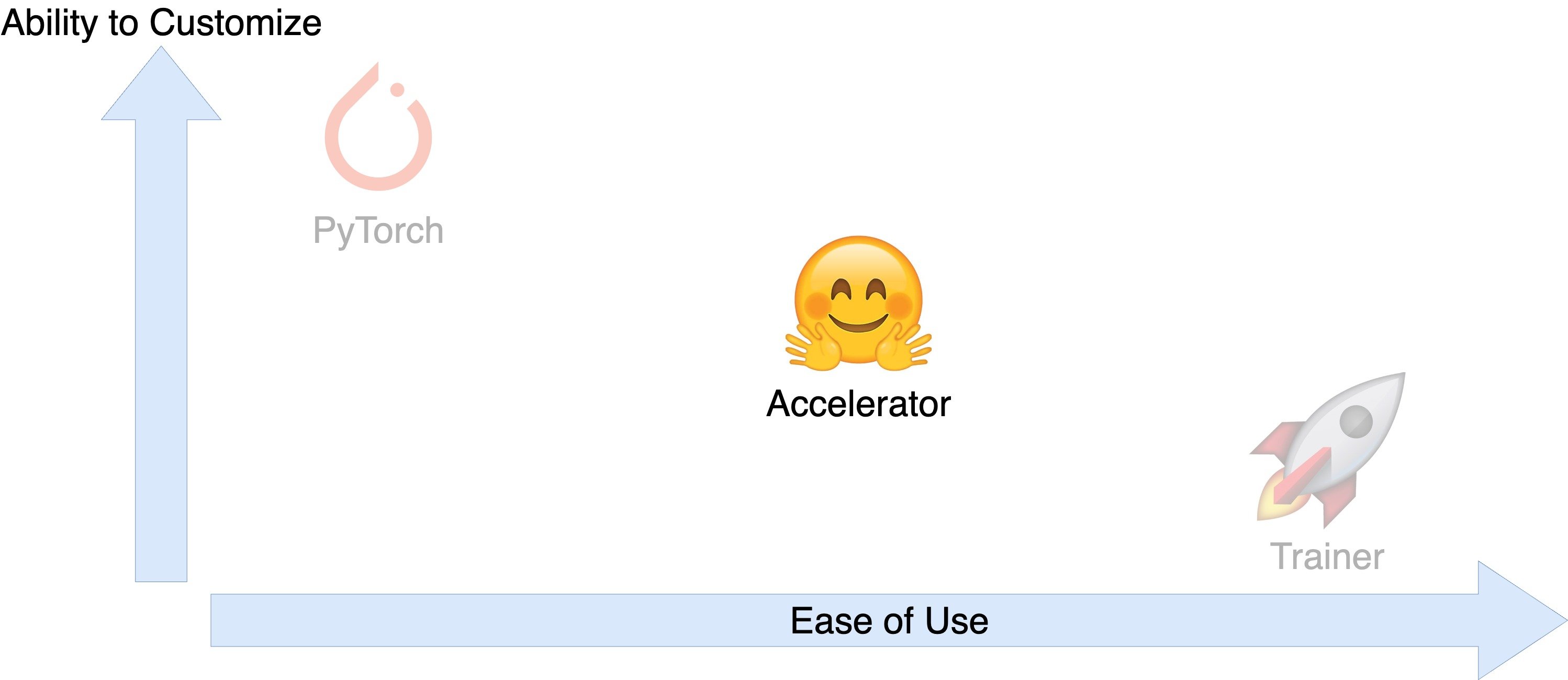

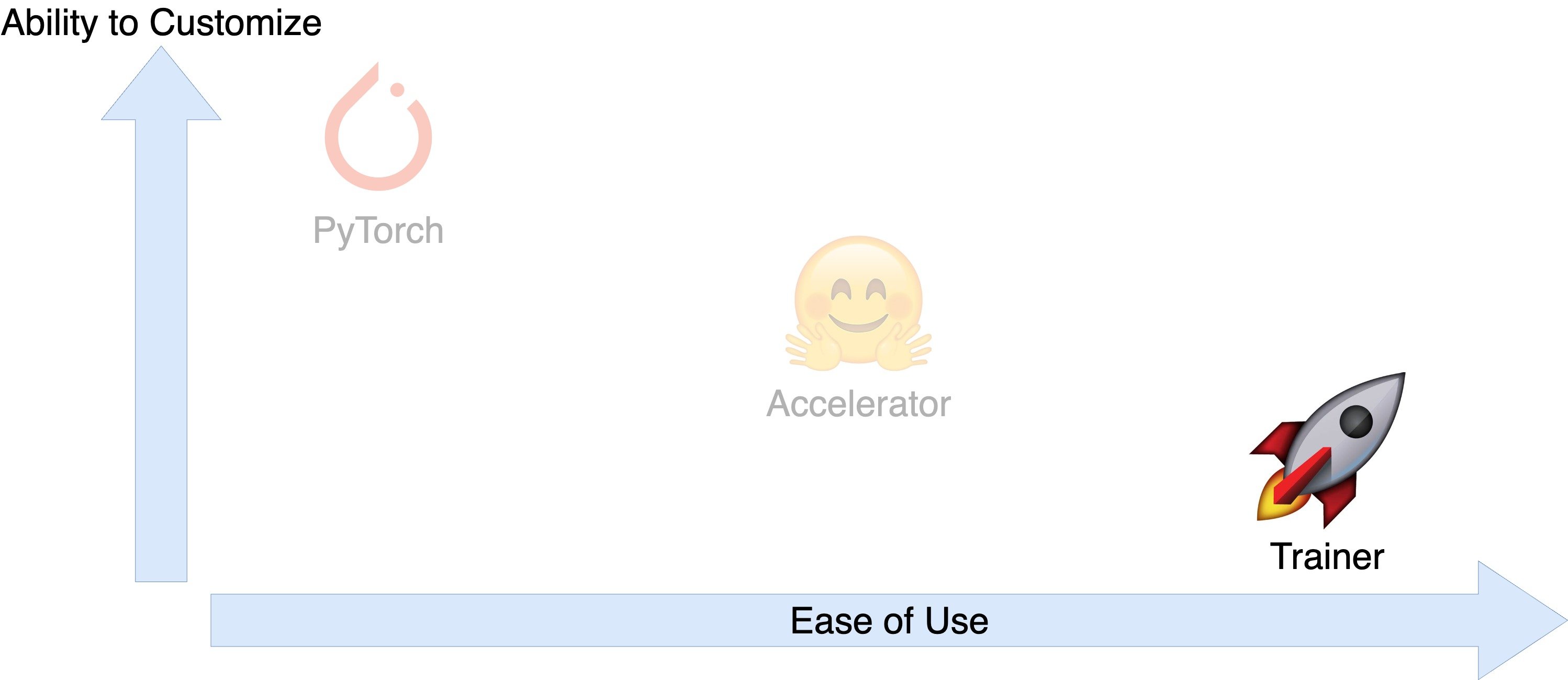

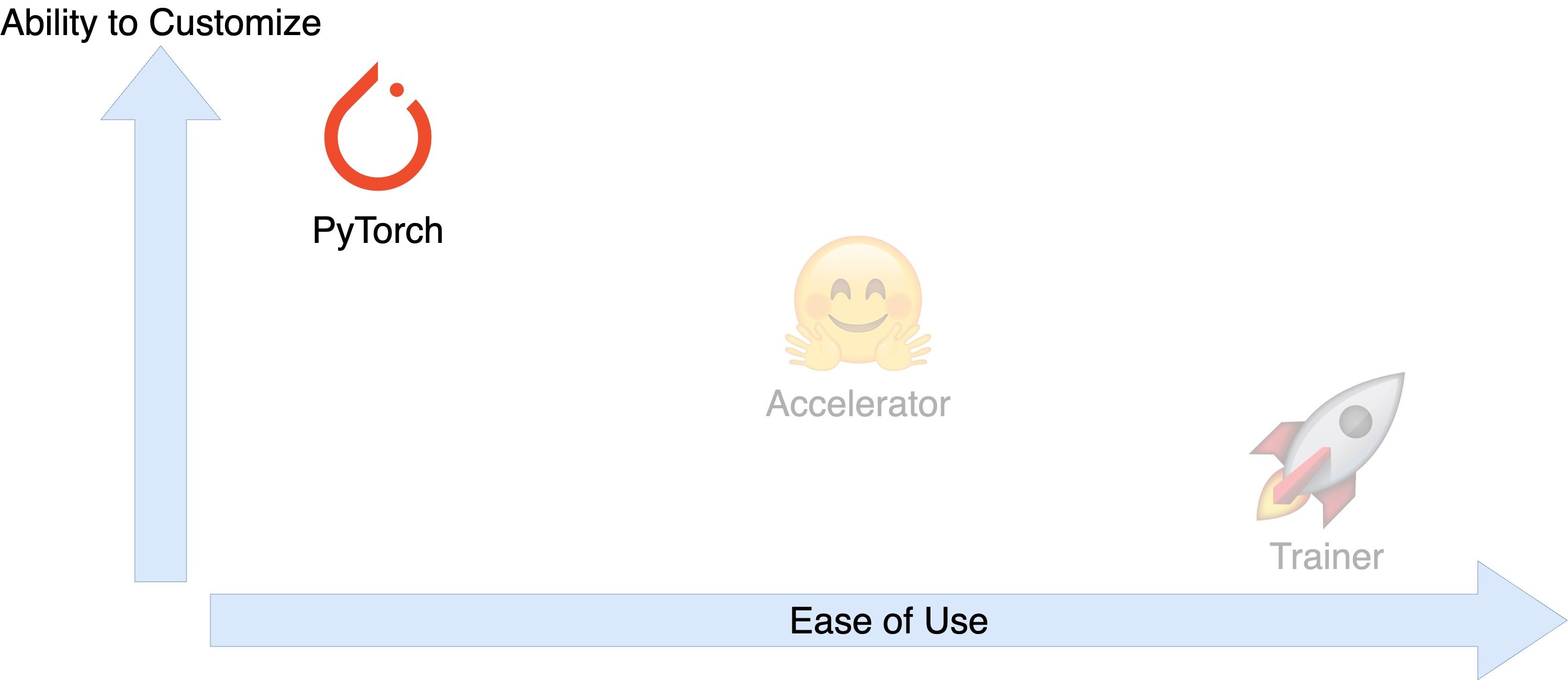

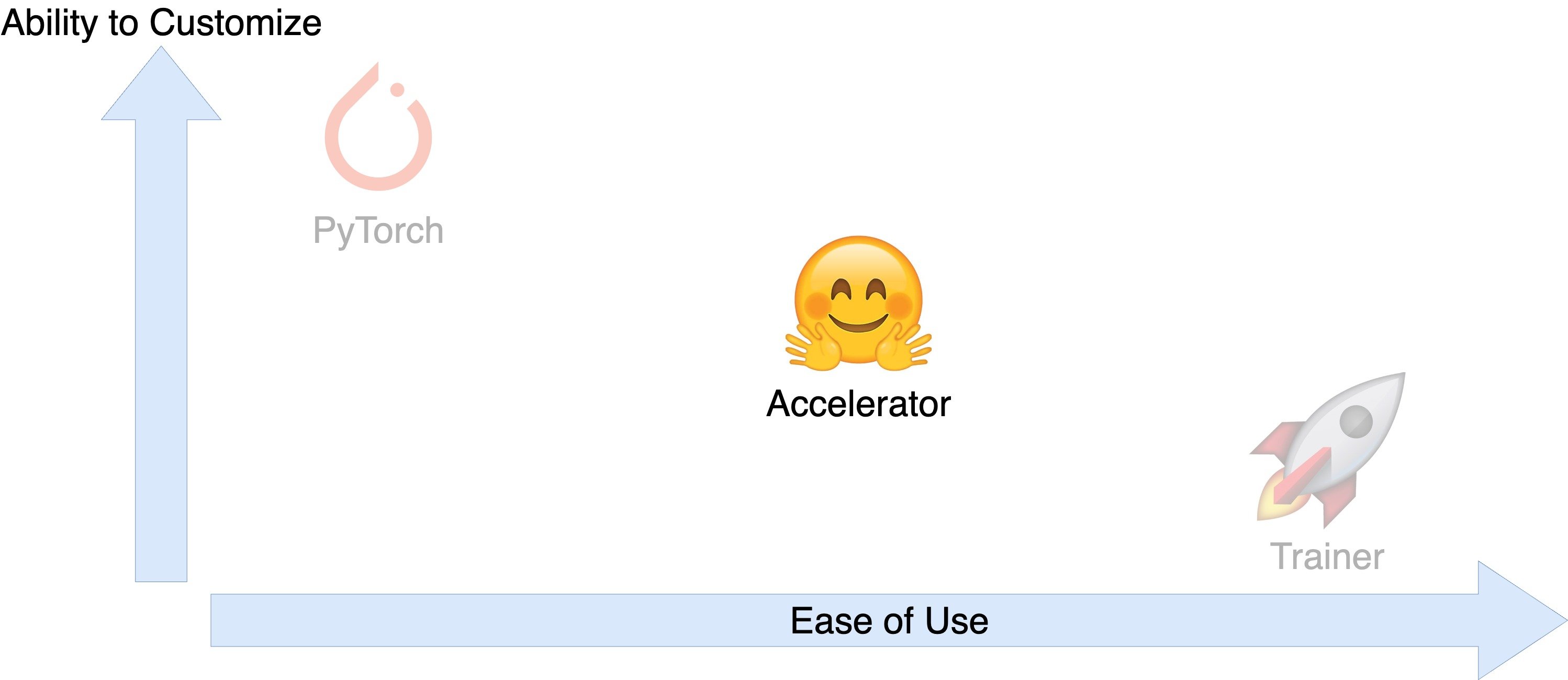

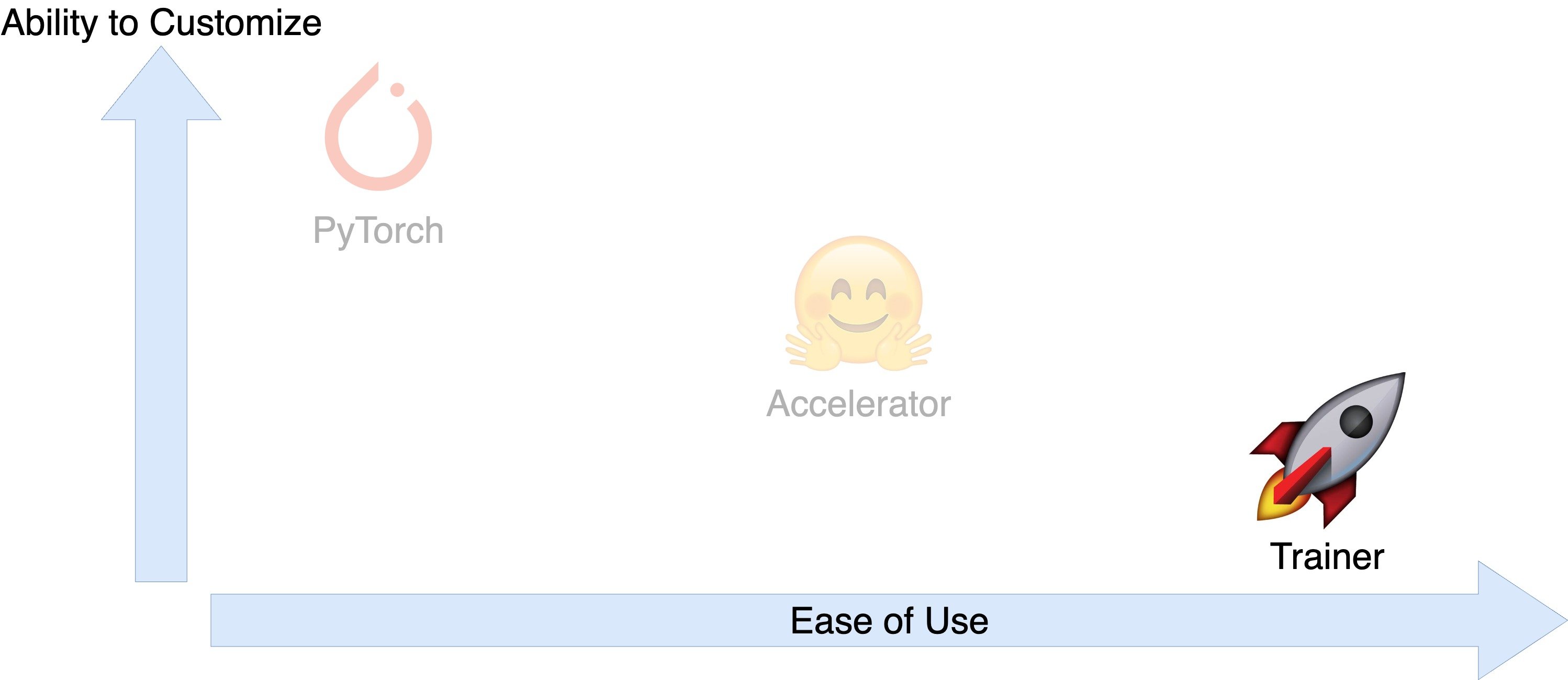

PyTorch, Accelerator, and Trainer


Gradient accumulation with PyTorch

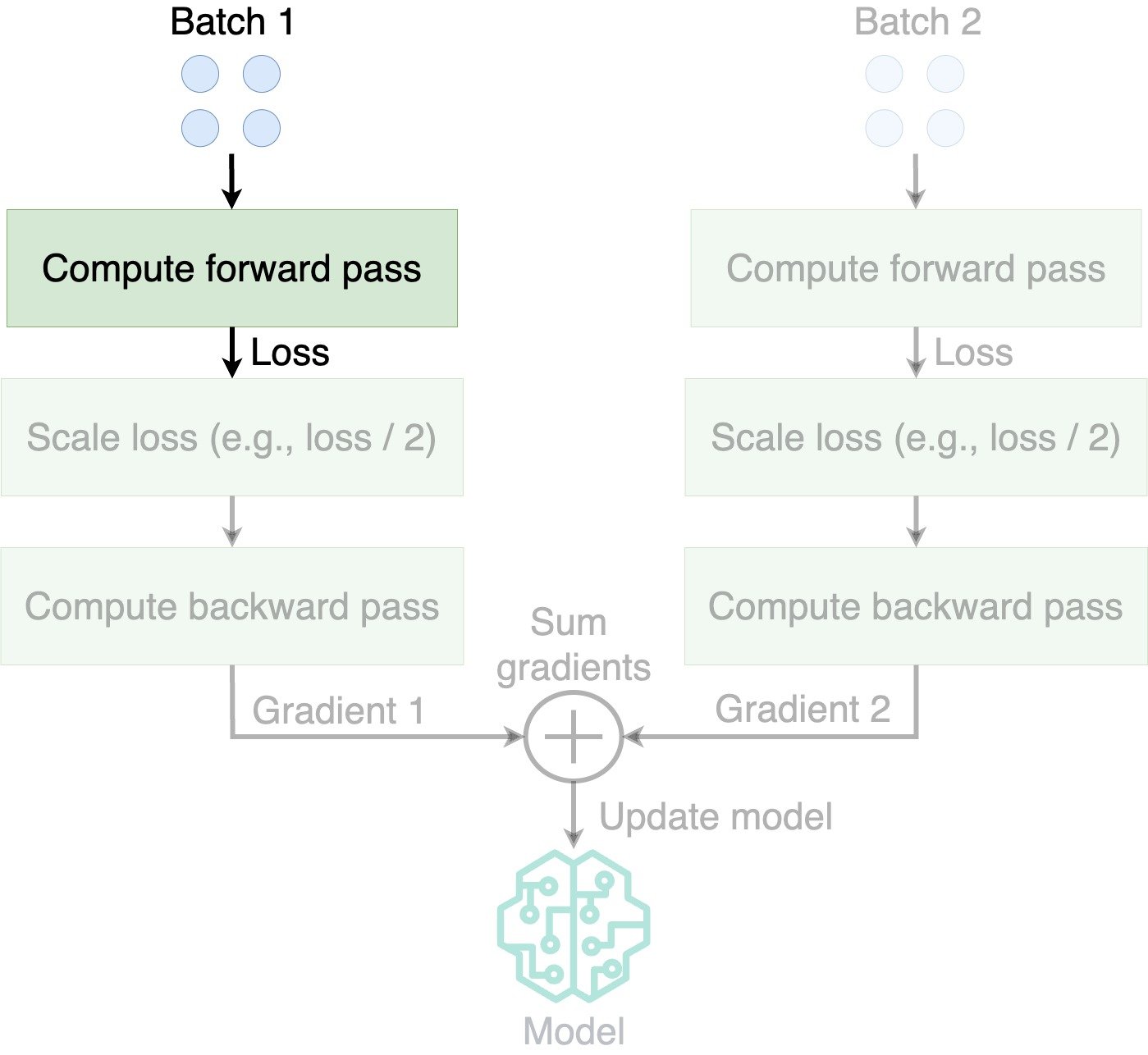

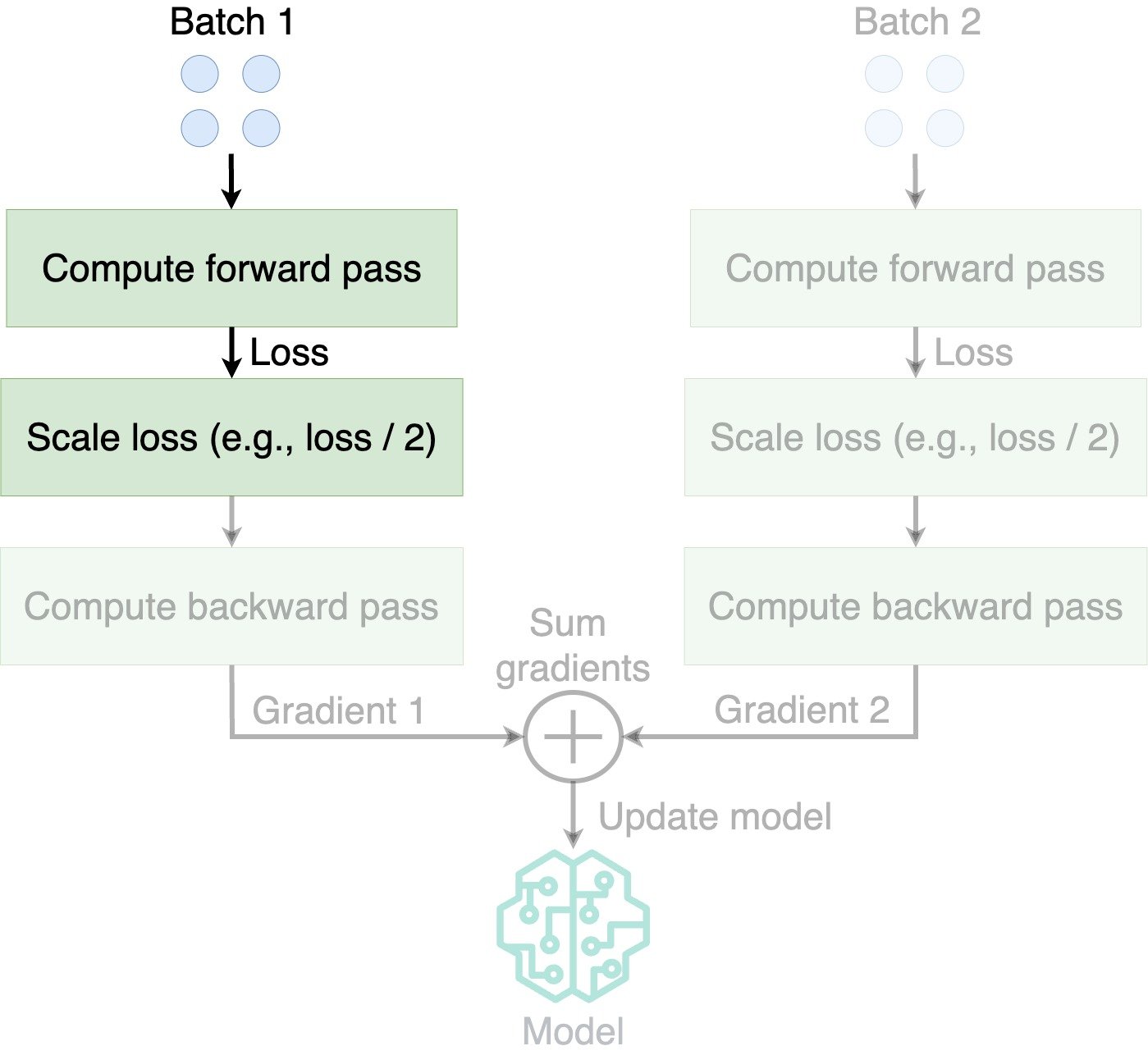

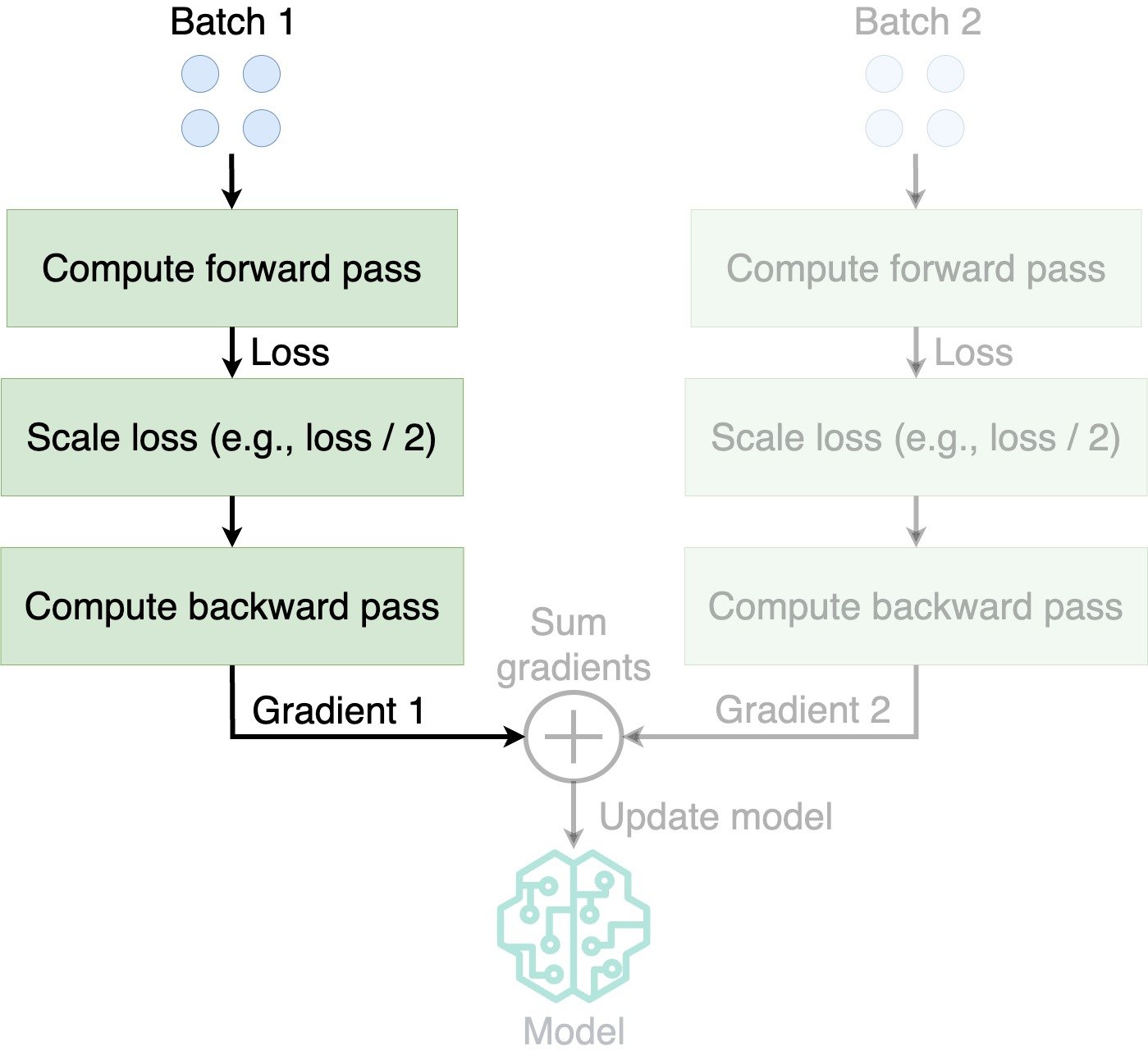

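```python
for index, batch in enumerate(dataloader):
    inputs, targets = batch["input_ids"], batch["labels"]
    inputs, targets = inputs.to(device), targets.to(device)
    outputs = model(inputs, labels=targets)
    loss = outputs.loss
    # Scale the loss so the summed gradients average over the accumulated batches
    loss = loss / gradient_accumulation_steps
    # backward() adds to the stored gradients until zero_grad() is called
    loss.backward()
    # Update parameters once every gradient_accumulation_steps batches
    if (index + 1) % gradient_accumulation_steps == 0:
        optimizer.step()
        lr_scheduler.step()
        optimizer.zero_grad()
```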
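
One detail the loop above leaves open is what happens when the number of batches is not a multiple of `gradient_accumulation_steps`: the gradients from the last few batches are never applied. The sketch below, in plain Python, lists the batch indices that trigger an update. The helper `is_update_step` and the extra "last batch" condition are assumptions added for illustration, not part of the original loop.

```python
def is_update_step(index, num_batches, gradient_accumulation_steps):
    """Return True when the optimizer should step after batch `index` (0-based)."""
    # Condition from the training loop: a full accumulation window has finished
    window_full = (index + 1) % gradient_accumulation_steps == 0
    # Assumption: also step on the final batch so leftover gradients are applied
    last_batch = (index + 1) == num_batches
    return window_full or last_batch


num_batches = 7
gradient_accumulation_steps = 3
update_indices = [
    i for i in range(num_batches)
    if is_update_step(i, num_batches, gradient_accumulation_steps)
]
print(update_indices)  # [2, 5, 6]
```

With 7 batches and 3 accumulation steps, the final update uses gradients from a single batch whose loss was still divided by 3, so that update is smaller than the others. Whether to apply or drop it is a design choice.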

From PyTorch to Accelerator

Gradient accumulation with Accelerator

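```python
from accelerate import Accelerator

accelerator = Accelerator(gradient_accumulation_steps=2)
# Assumption: the objects are wrapped with prepare() so that Accelerator can
# manage device placement and skip optimizer steps while accumulating
model, optimizer, dataloader, lr_scheduler = accelerator.prepare(
    model, optimizer, dataloader, lr_scheduler
)

for index, batch in enumerate(dataloader):
    # Inside accumulate(), loss scaling and step timing are handled for us
    with accelerator.accumulate(model):
        inputs, targets = batch["input_ids"], batch["labels"]
        outputs = model(inputs, labels=targets)
        loss = outputs.loss
        accelerator.backward(loss)
        optimizer.step()
        lr_scheduler.step()
        optimizer.zero_grad()
```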

From Accelerator to Trainer

Gradient accumulation with Trainer

```python
from transformers import Trainer, TrainingArguments

training_args = TrainingArguments(
    output_dir="./results",
    evaluation_strategy="epoch",  # named eval_strategy in recent transformers releases
    gradient_accumulation_steps=2,
)
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=dataset["train"],
    eval_dataset=dataset["validation"],
    compute_metrics=compute_metrics,
)
trainer.train()
```

{'epoch': 1.0, 'eval_loss': 0.73, 'eval_accuracy': 0.03, 'eval_f1': 0.05}

{'epoch': 2.0, 'eval_loss': 0.68, 'eval_accuracy': 0.19, 'eval_f1': 0.25}
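
To relate `gradient_accumulation_steps=2` to the batch size the model effectively trains on, here is a small arithmetic sketch. The helper `effective_batch_size` is hypothetical, and the per-device batch size of 8 is an assumption (it should match the `TrainingArguments` default for `per_device_train_batch_size`, but check your own configuration).

```python
def effective_batch_size(per_device_batch_size, gradient_accumulation_steps, num_devices=1):
    """Number of examples that contribute to each optimizer update."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices


# Assumed per-device batch size of 8 with the accumulation setting used above
print(effective_batch_size(8, 2))                 # 16
print(effective_batch_size(8, 2, num_devices=4))  # 64
```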

Let's practice!
