Gradient checkpointing and local SGD

Efficient AI Model Training with PyTorch

Dennis Lee

Data Engineer

Improving training efficiency

Gradient checkpointing improves memory efficiency

Local SGD addresses communication efficiency

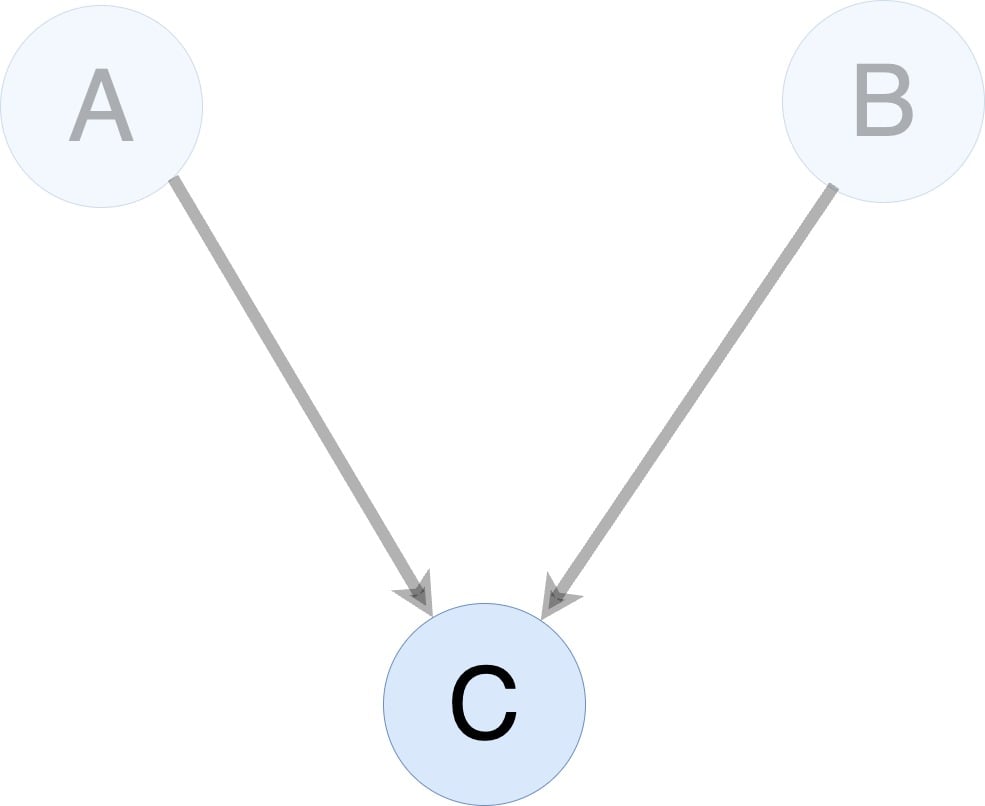

What is gradient checkpointing?

- Gradient checkpointing: reduce memory by selecting which activations to save

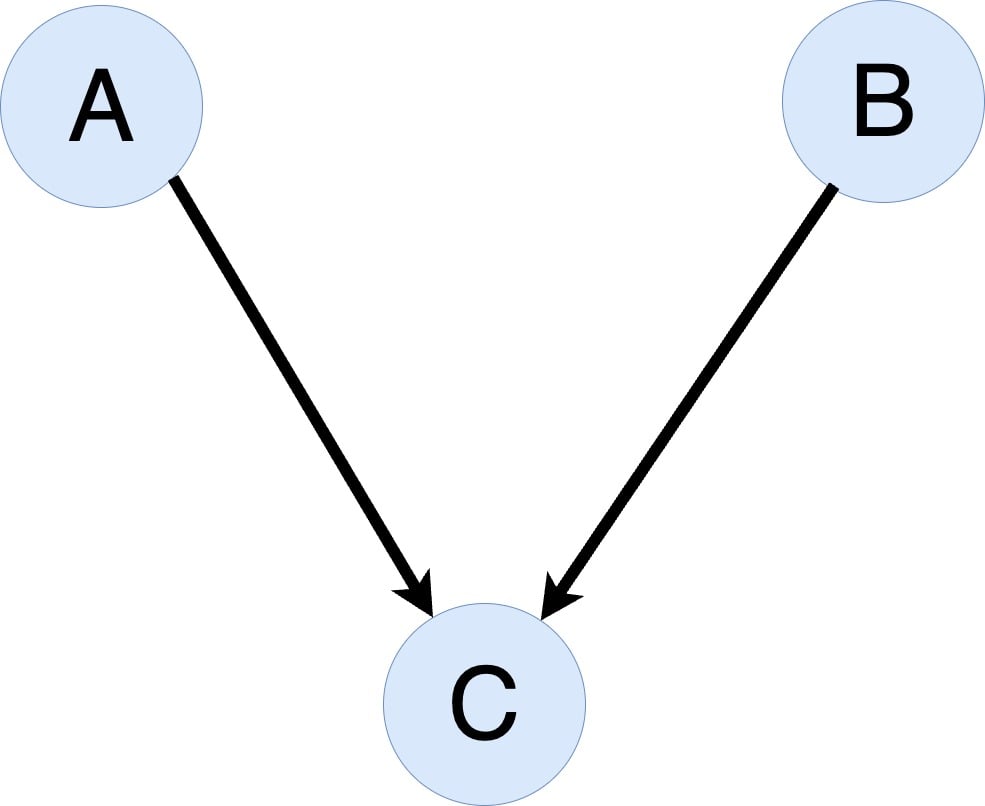

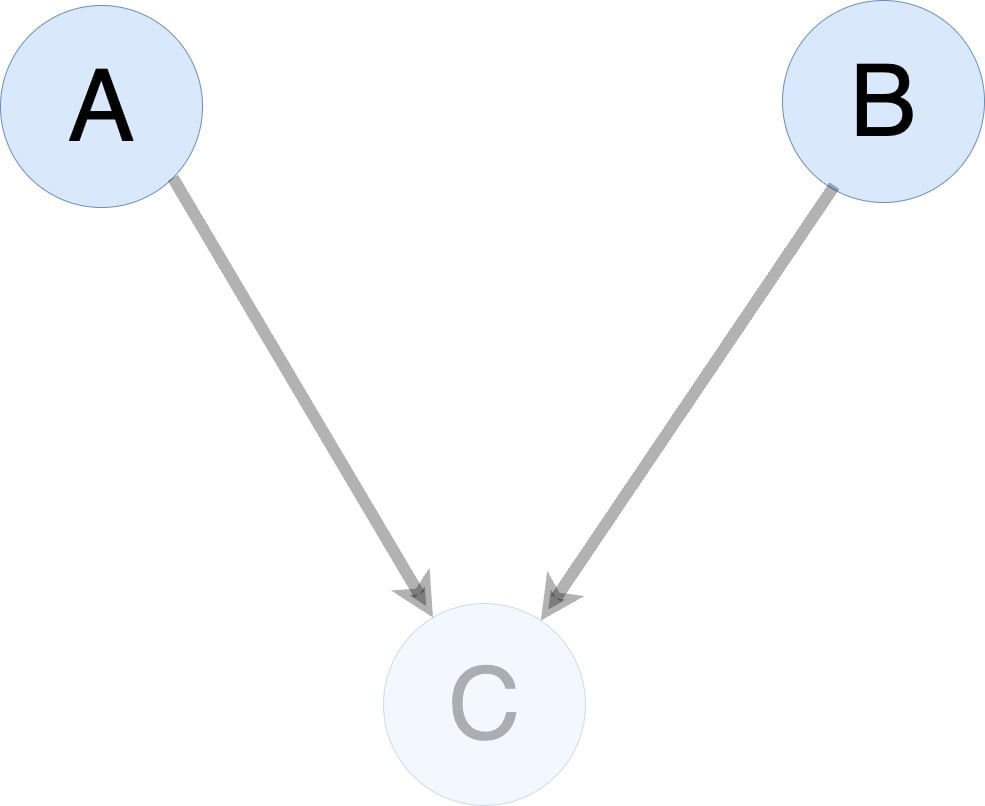

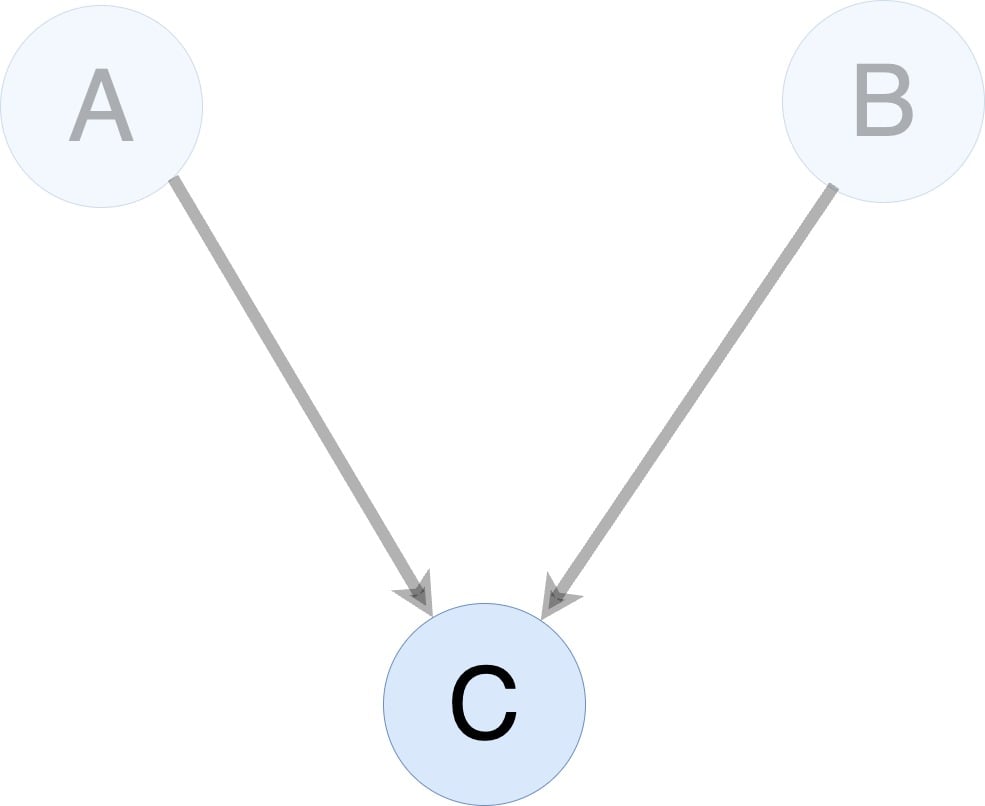

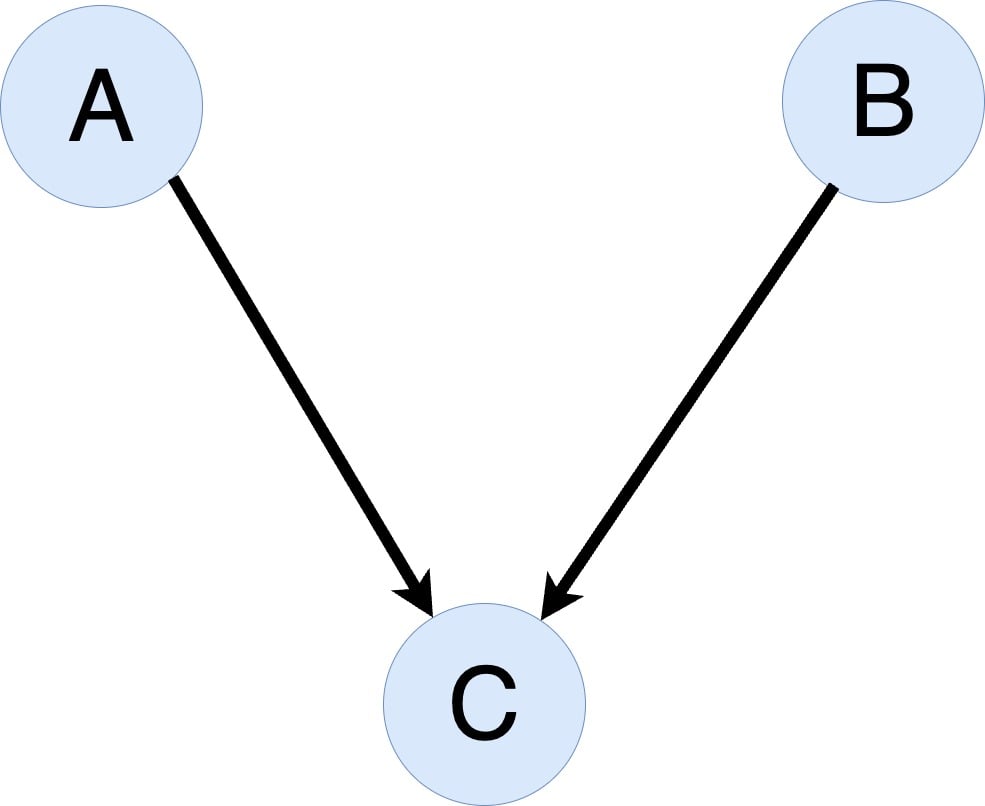

- Example: compute A + B = C

What is gradient checkpointing?

- Gradient checkpointing: reduce memory by selecting which activations to save

- Example: compute A + B = C

- First compute A, B, then compute C

What is gradient checkpointing?

- Gradient checkpointing: reduce memory by selecting which activations to save

- Example: compute A + B = C

- First compute A, B, then compute C

- A, B not needed for rest of forward pass

- Should we save or remove A and B?

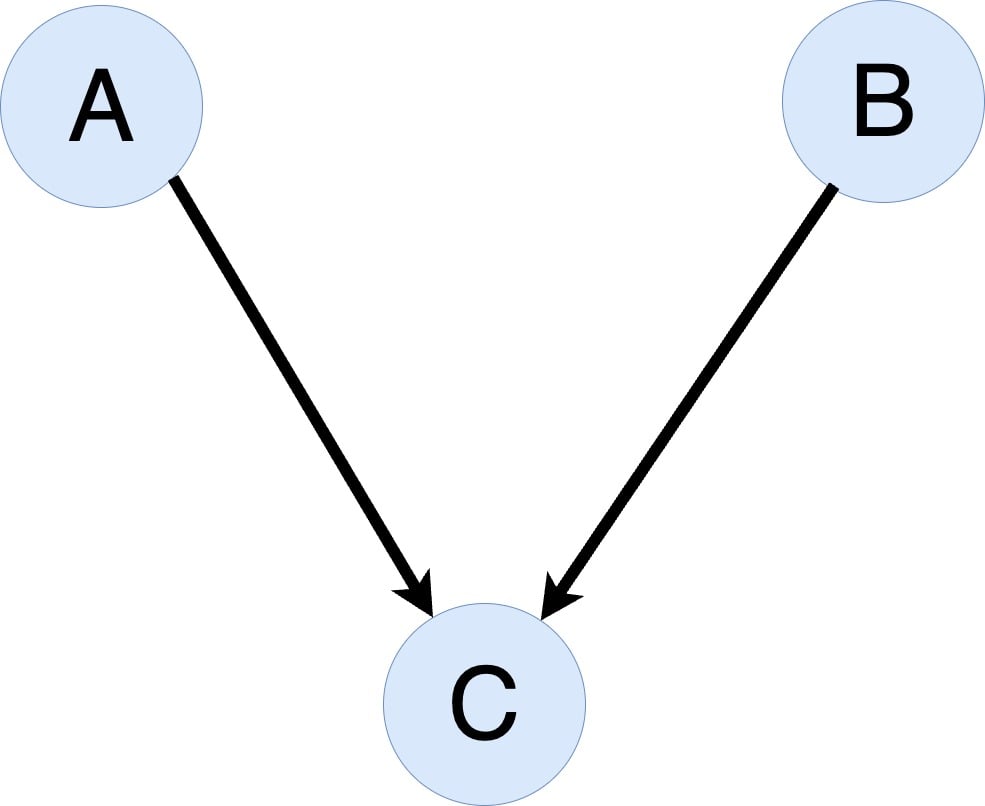

What is gradient checkpointing?

- Gradient checkpointing: reduce memory by selecting which activations to save

- Example: compute A + B = C

- First compute A, B, then compute C

- A, B not needed for rest of forward pass

- Should we save or remove A and B?

- No gradient checkpointing: save A, B

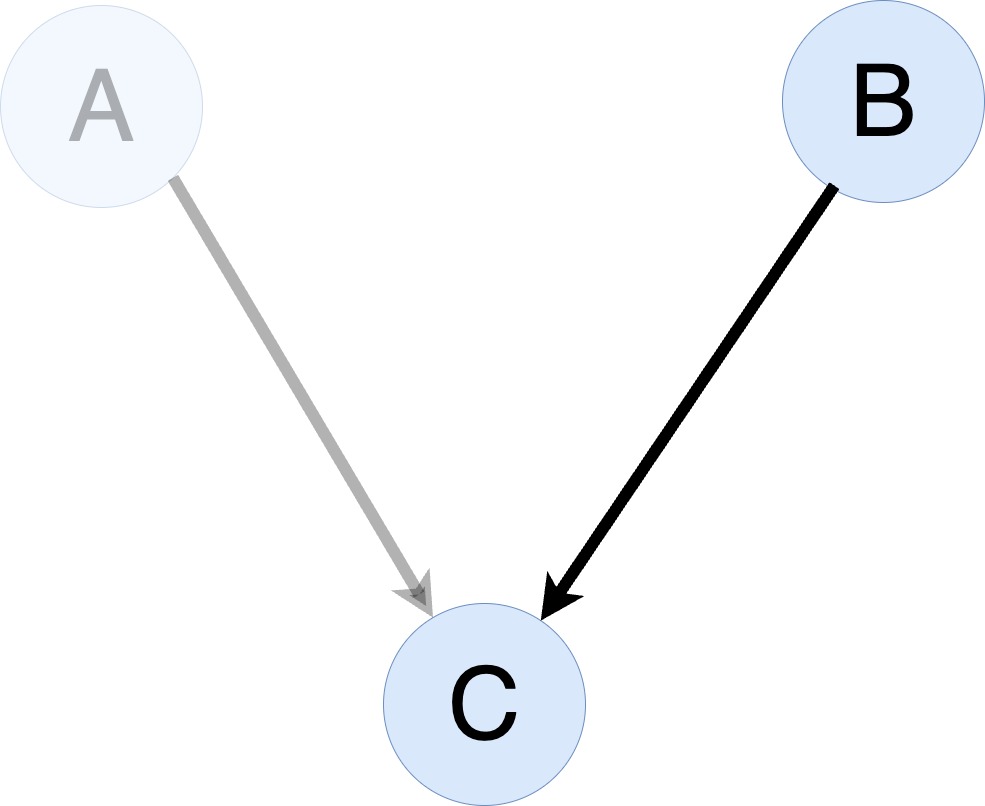

What is gradient checkpointing?

- Gradient checkpointing: reduce memory by selecting which activations to save

- Example: compute A + B = C

- First compute A, B, then compute C

- A, B not needed for rest of forward pass

- Should we save or remove A and B?

- No gradient checkpointing: save A, B

- Gradient checkpointing: remove A, B

What is gradient checkpointing?

- Gradient checkpointing: reduce memory by selecting which activations to save

- Example: compute A + B = C

- First compute A, B, then compute C

- A, B not needed for rest of forward pass

- Should we save or remove A and B?

- No gradient checkpointing: save A, B

- Gradient checkpointing: remove A, B

- Recompute A, B during backward pass

What is gradient checkpointing?

- Gradient checkpointing: reduce memory by selecting which activations to save

- Example: compute A + B = C

- First compute A, B, then compute C

- A, B not needed for rest of forward pass

- Should we save or remove A and B?

- No gradient checkpointing: save A, B

- Gradient checkpointing: remove A, B

- Recompute A, B during backward pass

- If B is expensive to recompute, save it

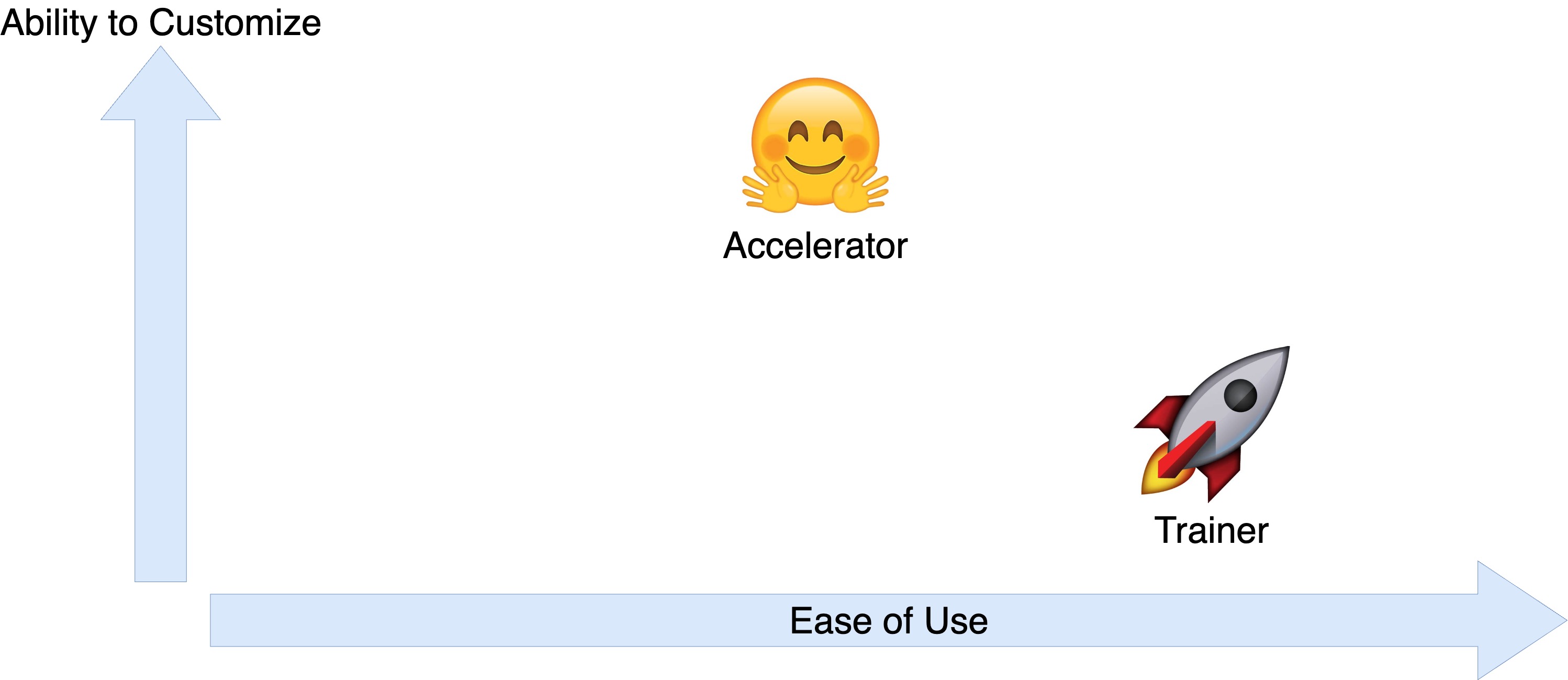

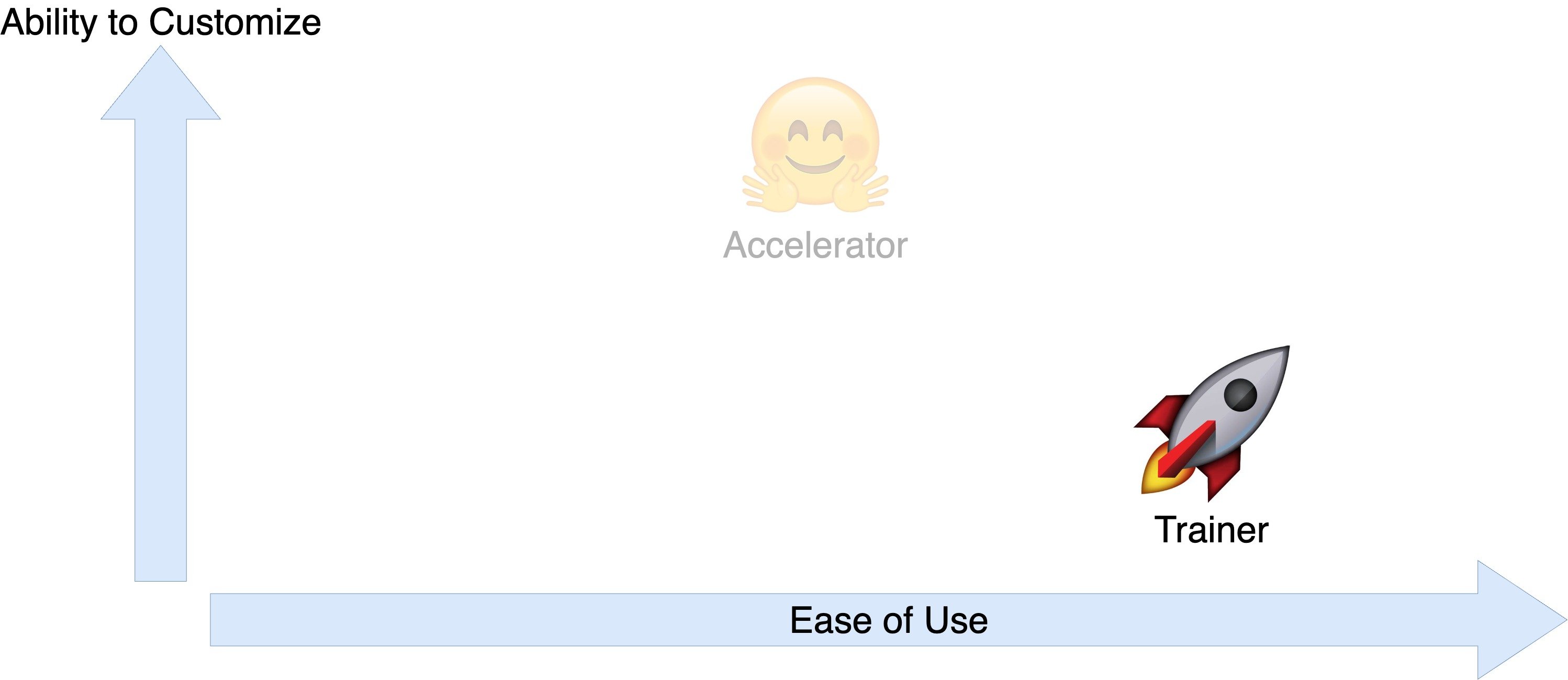

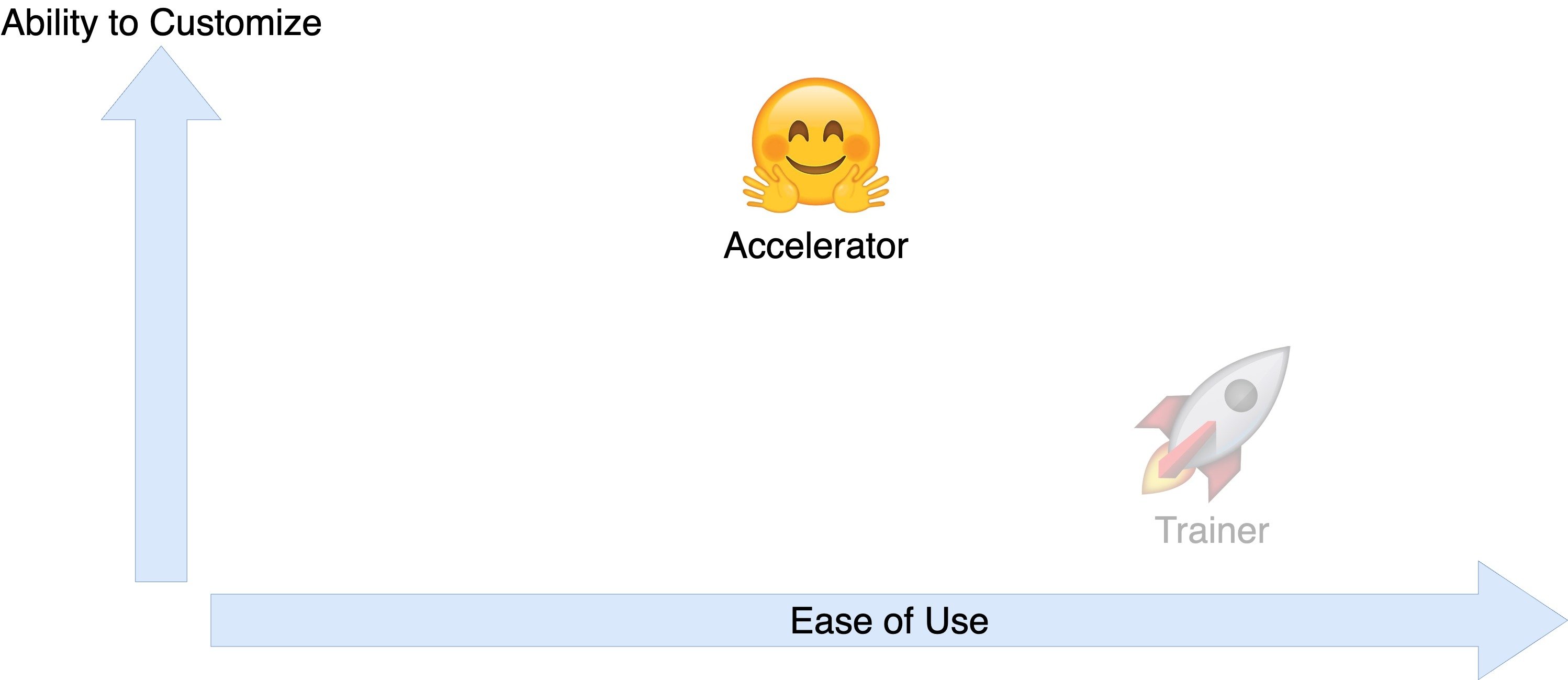
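The save-or-recompute trade-off above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not how PyTorch implements checkpointing: the toy `tanh` layers, the helper names (`layer`, `layer_backward`, `grads_saving_everything`, `grads_with_checkpoints`), and the `segment` parameter are all invented for the example. The checkpointed version keeps one activation per segment and recomputes the others during the backward pass.

```python
import math


def layer(x, w):
    """One toy layer: y = tanh(w * x)."""
    return math.tanh(w * x)


def layer_backward(x, w, grad_out):
    """Gradients of tanh(w * x) w.r.t. x and w; requires the layer input x."""
    y = math.tanh(w * x)
    d = (1.0 - y * y) * grad_out
    return d * w, d * x


def grads_saving_everything(x, weights):
    """No checkpointing: keep every layer input for the backward pass."""
    saved, h = [], x
    for w in weights:
        saved.append(h)
        h = layer(h, w)
    grad, grads_w = 1.0, [0.0] * len(weights)
    for i in reversed(range(len(weights))):
        grad, grads_w[i] = layer_backward(saved[i], weights[i], grad)
    return grads_w, len(saved)


def grads_with_checkpoints(x, weights, segment):
    """Checkpointing: keep one input per segment, recompute the rest."""
    checkpoints, h = {}, x
    for i, w in enumerate(weights):
        if i % segment == 0:
            checkpoints[i] = h  # the only activations kept after the forward pass
        h = layer(h, w)
    grad, grads_w, peak = 1.0, [0.0] * len(weights), len(checkpoints)
    for start in sorted(checkpoints, reverse=True):
        end = min(start + segment, len(weights))
        # Recompute this segment's layer inputs from its checkpoint.
        inputs, h = [], checkpoints[start]
        for i in range(start, end):
            inputs.append(h)
            h = layer(h, weights[i])
        # inputs[0] is the checkpoint itself, so do not count it twice.
        peak = max(peak, len(checkpoints) + len(inputs) - 1)
        for i in reversed(range(start, end)):
            grad, grads_w[i] = layer_backward(inputs[i - start], weights[i], grad)
    return grads_w, peak


weights = [0.5 + 0.1 * i for i in range(16)]
full_grads, full_saved = grads_saving_everything(0.3, weights)
ckpt_grads, ckpt_peak = grads_with_checkpoints(0.3, weights, segment=4)
print(full_saved, ckpt_peak)  # prints: 16 7
```

Both functions return the same gradients. The checkpointed one holds fewer activations at a time, at the cost of running each segment's forward computation a second time.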

Trainer and Accelerator

Gradient checkpointing with Trainer

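    from transformers import Trainer, TrainingArguments

    # gradient_checkpointing=True recomputes activations during the backward pass
    training_args = TrainingArguments(output_dir="./results",
                                      evaluation_strategy="epoch",
                                      gradient_accumulation_steps=4,
                                      gradient_checkpointing=True)
    # model, dataset, and compute_metrics are assumed to be defined earlier
    trainer = Trainer(model=model,
                      args=training_args,
                      train_dataset=dataset["train"],
                      eval_dataset=dataset["validation"],
                      compute_metrics=compute_metrics)
    trainer.train()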

{'epoch': 1.0, 'eval_loss': 0.73, 'eval_accuracy': 0.03, 'eval_f1': 0.05}

From Trainer to Accelerator

Gradient checkpointing with Accelerator

    from accelerate import Accelerator

    # model, optimizer, dataloader, and lr_scheduler are assumed to be defined earlier
    accelerator = Accelerator(gradient_accumulation_steps=2)
    # Enable gradient checkpointing on the model before training
    model.gradient_checkpointing_enable()
    for index, batch in enumerate(dataloader):
        with accelerator.accumulate(model):
            inputs, targets = batch["input_ids"], batch["labels"]
            outputs = model(inputs, labels=targets)
            loss = outputs.loss
            accelerator.backward(loss)
            optimizer.step()
            lr_scheduler.step()
            optimizer.zero_grad()
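The loop above also relies on gradient accumulation (`gradient_accumulation_steps=2`). As a rough sketch of why that works, the plain-Python example below compares the gradient of one full batch with the sum of gradients from two equally sized micro-batches, each scaled by `1 / accumulation_steps`. The one-parameter linear model, the data, and the helper `mse_grad` are assumptions made for illustration and are not part of Accelerate.

```python
import math


def mse_grad(w, xs, ys):
    """Gradient of mean((w * x - y) ** 2) with respect to w."""
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


# Hypothetical data for a one-parameter model y = w * x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.1, 5.9, 8.2]
w = 0.5
accumulation_steps = 2

# Gradient computed on the whole batch at once.
full_batch_grad = mse_grad(w, xs, ys)

# Same gradient built up from micro-batches, scaling each contribution.
accumulated_grad = 0.0
micro_size = len(xs) // accumulation_steps
for step in range(accumulation_steps):
    lo, hi = step * micro_size, (step + 1) * micro_size
    accumulated_grad += mse_grad(w, xs[lo:hi], ys[lo:hi]) / accumulation_steps

print(math.isclose(full_batch_grad, accumulated_grad))  # prints: True
```

With equally sized micro-batches the two gradients agree, so the optimizer step after accumulation matches a step on the larger batch while only one micro-batch is held in memory at a time.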

Local SGD improves communication efficiency

What is local SGD?

- Each device computes gradients in parallel

- Gradient synchronization: Driver node updates model parameters on each device

- Local SGD: reduce the frequency of synchronization; each device takes several local steps, then model parameters are averaged across devices
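The idea in the bullets above can be simulated without any distributed setup. The sketch below is a toy model under stated assumptions, not Accelerate's implementation: two simulated "devices" each hold a data shard for a one-parameter model `y = w * x`, and the helper names (`grad`, `train`) and the `sync_every` parameter are invented for the example. Setting `sync_every=1` synchronizes after every step; a larger value lets each device take several local steps before parameters are averaged.

```python
def grad(w, shard):
    """Gradient of the mean squared error of y = w * x on one data shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)


def train(shards, steps, lr, sync_every):
    """Each worker takes SGD steps on its own shard; workers average
    their parameters every `sync_every` steps."""
    workers = [0.0] * len(shards)  # one parameter copy per simulated device
    sync_rounds = 0
    for step in range(1, steps + 1):
        # Local update on every device, using only its own shard.
        workers = [w - lr * grad(w, s) for w, s in zip(workers, shards)]
        if step % sync_every == 0:
            # Communication round: average parameters across devices.
            mean = sum(workers) / len(workers)
            workers = [mean] * len(workers)
            sync_rounds += 1
    return sum(workers) / len(workers), sync_rounds


# Hypothetical shards drawn from y = 3 * x.
shards = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0), (4.0, 12.0)]]

w_sync, rounds_sync = train(shards, steps=64, lr=0.02, sync_every=1)
w_local, rounds_local = train(shards, steps=64, lr=0.02, sync_every=8)
print(rounds_sync, rounds_local)  # prints: 64 8
```

In this toy setting both runs end close to `w = 3`, but the local SGD run uses one eighth of the communication rounds. How much accuracy is traded for less communication on a real model depends on the data and the synchronization interval.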

Local SGD with Accelerator

    from accelerate.local_sgd import LocalSGD

    # accelerator, model, optimizer, dataloader, and lr_scheduler are assumed to be defined earlier
    # Synchronize model parameters every 8 steps instead of every step
    with LocalSGD(accelerator=accelerator, model=model, local_sgd_steps=8,
                  enabled=True) as local_sgd:
        for index, batch in enumerate(dataloader):
            with accelerator.accumulate(model):
                inputs, targets = batch["input_ids"], batch["labels"]
                outputs = model(inputs, labels=targets)
                loss = outputs.loss
                accelerator.backward(loss)
                optimizer.step()
                lr_scheduler.step()
                optimizer.zero_grad()
                # Count this step toward the next synchronization
                local_sgd.step()

Let's practice!
