Train models with Accelerator

Efficient AI Model Training with PyTorch

Dennis Lee

Data Engineer

Trainer and Accelerator

Custom training loops

- Trainer: no custom training loops

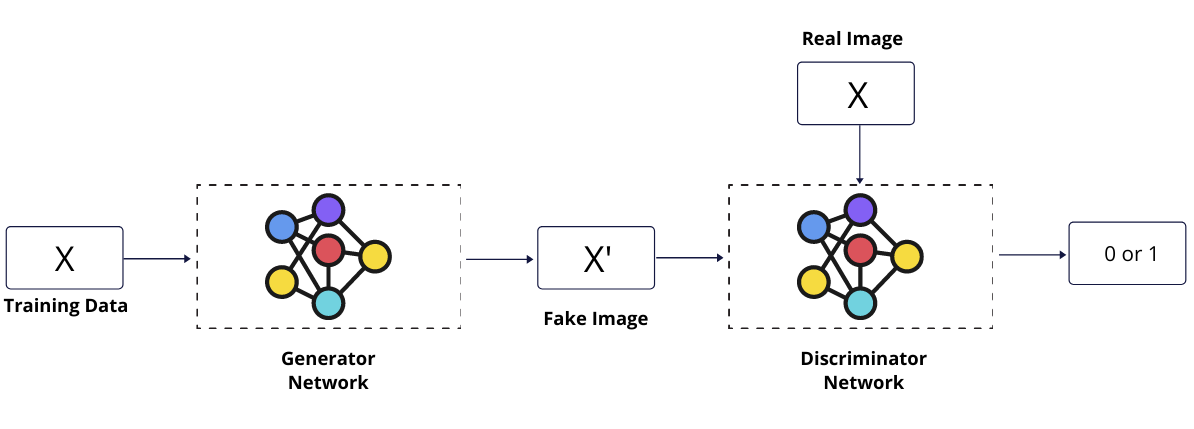

- Some advanced tasks in generative AI require two networks

1 https://www.aitude.com/basics-of-generative-adversarial-network-model/
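The two-network case can be sketched as a custom loop that alternates updates between a generator and a discriminator, each with its own optimizer. This is a minimal illustration only: the tiny networks, the random "real" data, and all names below are hypothetical and do not come from the course material.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical toy networks: the generator maps noise to 2-D samples,
# the discriminator scores samples as real (1) or fake (0).
generator = nn.Sequential(nn.Linear(4, 8), nn.ReLU(), nn.Linear(8, 2))
discriminator = nn.Sequential(nn.Linear(2, 8), nn.ReLU(), nn.Linear(8, 1))
g_optimizer = torch.optim.Adam(generator.parameters(), lr=1e-3)
d_optimizer = torch.optim.Adam(discriminator.parameters(), lr=1e-3)
loss_fn = nn.BCEWithLogitsLoss()

real_data = torch.randn(16, 2) + 3.0  # stand-in for real samples
ones, zeros = torch.ones(16, 1), torch.zeros(16, 1)

for step in range(10):
    # Discriminator update: real samples labelled 1, generated samples labelled 0.
    d_optimizer.zero_grad()
    fake_data = generator(torch.randn(16, 4)).detach()  # no generator gradients here
    d_loss = loss_fn(discriminator(real_data), ones) + loss_fn(discriminator(fake_data), zeros)
    d_loss.backward()
    d_optimizer.step()

    # Generator update: try to make the discriminator output 1 for generated samples.
    g_optimizer.zero_grad()
    g_loss = loss_fn(discriminator(generator(torch.randn(16, 4))), ones)
    g_loss.backward()
    g_optimizer.step()
```

Two optimizers stepping at different points in the same iteration is the kind of control flow that calls for a hand-written loop.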


Modifying a basic training loop

for batch in dataloader:
    optimizer.zero_grad()
    inputs, targets = batch
    inputs = inputs.to(device)
    targets = targets.to(device)
    # Pass the labels so the model returns a loss
    outputs = model(inputs, labels=targets)
    loss = outputs.loss
    loss.backward()
    optimizer.step()
    scheduler.step()

- Zero the gradients

- Move data to a specified device: .to(device)

- Perform forward pass

- Compute cross-entropy loss

- Compute gradients in a backward pass

- Update model parameters, learning rate
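The steps above can be run end to end on a toy problem. The sketch below is an assumption-laden stand-in: it swaps the pre-trained transformer for a small linear classifier, uses random data, computes cross-entropy with nn.CrossEntropyLoss instead of reading outputs.loss, and uses a constant LambdaLR as a placeholder scheduler.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical toy data: 64 samples, 8 features, 2 classes.
features = torch.randn(64, 8)
labels = (features.sum(dim=1) > 0).long()
dataloader = DataLoader(TensorDataset(features, labels), batch_size=16)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = nn.Linear(8, 2).to(device)
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)
# Placeholder scheduler that keeps the learning rate constant.
scheduler = torch.optim.lr_scheduler.LambdaLR(optimizer, lambda step: 1.0)
loss_fn = nn.CrossEntropyLoss()

losses = []
for epoch in range(5):
    for batch in dataloader:
        optimizer.zero_grad()              # zero the gradients
        inputs, targets = batch
        inputs = inputs.to(device)         # move data to the device
        targets = targets.to(device)
        outputs = model(inputs)            # forward pass
        loss = loss_fn(outputs, targets)   # cross-entropy loss
        loss.backward()                    # backward pass
        optimizer.step()                   # update parameters
        scheduler.step()                   # update learning rate
        losses.append(loss.item())
```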

Create an Accelerator object

Accelerator provides an interface for distributed training

from accelerate import Accelerator

accelerator = Accelerator(device_placement=True)

device_placement (bool, default True): handle device placement automatically

Define the model and optimizer

- Load a pre-trained model

from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained(
    "distilbert-base-cased", return_dict=True
)

- Optimize model parameters with Adam

from torch.optim import Adam

optimizer = Adam(params=model.parameters(), lr=2e-5)

Define the scheduler

from transformers import get_linear_schedule_with_warmup

lr_scheduler = get_linear_schedule_with_warmup(
    optimizer=optimizer,
    num_warmup_steps=num_warmup_steps,
    num_training_steps=num_training_steps,
)

- optimizer (obj): PyTorch optimizer, like Adam

- num_warmup_steps (int): steps to linearly increase lr, set to int(num_training_steps * 0.1)

- num_training_steps (int): total training steps, set to len(train_dataloader) * num_epochs
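To see what these two step counts do, the shape of a linear warmup and decay schedule can be reproduced in plain Python. This is a sketch of the schedule's shape, not the library's code: the helper name linear_warmup_multiplier and the value of batches_per_epoch are hypothetical.

```python
def linear_warmup_multiplier(step, num_warmup_steps, num_training_steps):
    """Factor applied to the base learning rate at a given step."""
    if step < num_warmup_steps:
        # Warmup: rise linearly from 0 to 1.
        return step / max(1, num_warmup_steps)
    # Decay: fall linearly from 1 to 0 by the last training step.
    remaining = num_training_steps - step
    return max(0.0, remaining / max(1, num_training_steps - num_warmup_steps))


num_epochs = 3
batches_per_epoch = 100  # hypothetical stand-in for len(train_dataloader)
num_training_steps = batches_per_epoch * num_epochs
num_warmup_steps = int(num_training_steps * 0.1)

base_lr = 2e-5
lrs = [
    base_lr * linear_warmup_multiplier(step, num_warmup_steps, num_training_steps)
    for step in range(num_training_steps + 1)
]
```

With these values the learning rate starts at zero, peaks at base_lr once warmup ends, and returns to zero at the final step.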

Prepare the model for efficient training

- The prepare method handles device placement

model, optimizer, dataloader, lr_scheduler = accelerator.prepare(
    model, optimizer, dataloader, lr_scheduler
)

Building a training loop with Accelerator

for batch in dataloader:
    optimizer.zero_grad()
    inputs, targets = batch
    inputs = inputs.to(device)
    targets = targets.to(device)

- Zero the gradients

- Previously moved data to the device

Building a training loop with Accelerator

for batch in dataloader:
    optimizer.zero_grad()
    inputs, targets = batch

- Zero the gradients

- Previously moved data to the device

- Remove lines that manually move data

Building a training loop with Accelerator

for batch in dataloader:
    optimizer.zero_grad()
    inputs, targets = batch
    # Pass the labels so the model returns a loss
    outputs = model(inputs, labels=targets)
    loss = outputs.loss
    loss.backward()

- Zero the gradients

- Previously moved data to the device

- Remove lines that manually move data

- Perform a forward pass

- Compute cross-entropy loss and gradients

Building a training loop with Accelerator

for batch in dataloader:
    optimizer.zero_grad()
    inputs, targets = batch
    # Pass the labels so the model returns a loss
    outputs = model(inputs, labels=targets)
    loss = outputs.loss
    accelerator.backward(loss)
    optimizer.step()
    lr_scheduler.step()

- Zero the gradients

- Previously moved data to the device

- Remove lines that manually move data

- Perform a forward pass

- Compute cross-entropy loss and gradients

- Replace loss.backward with accelerator.backward

- Update model parameters, learning rate

Summary of changes

Before Accelerator

- Need to manually move data to devices

inputs.to(device)
targets.to(device)

- Compute gradients with loss.backward()

After Accelerator

- Automatic device placement and data parallelism

accelerator.prepare(model)
accelerator.prepare(dataloader)

- Handle gradient synchronization with accelerator.backward(loss)

- Customizable loop

- User-friendly, hardware-agnostic, scalable, and maintainable

Let's practice!
