Prepare models with AutoModel and Accelerator

Efficient AI Model Training with PyTorch

Dennis Lee

Data Engineer

Meet your instructor!

- Data engineer

- Data scientist

- Ph.D. in Electrical Engineering

Excited to share best practices!

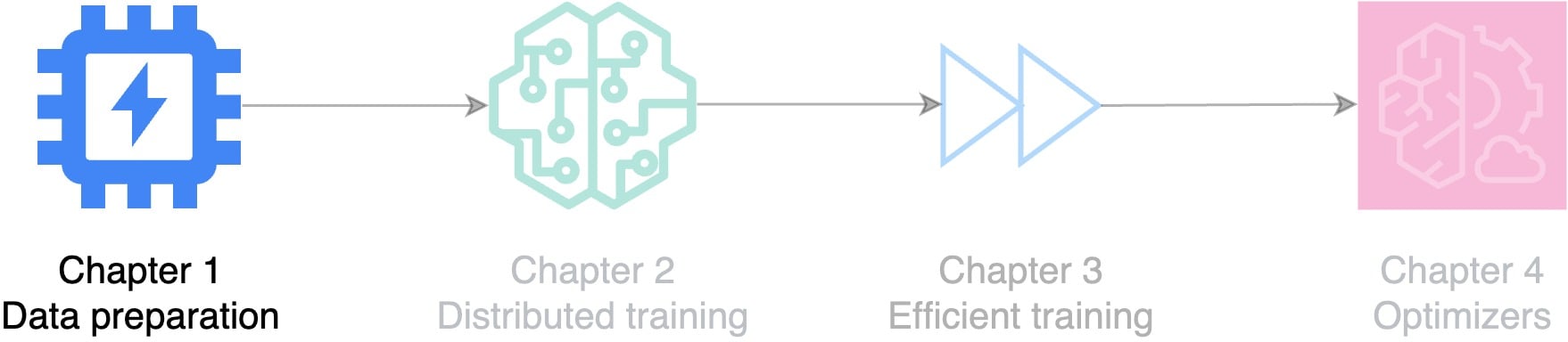

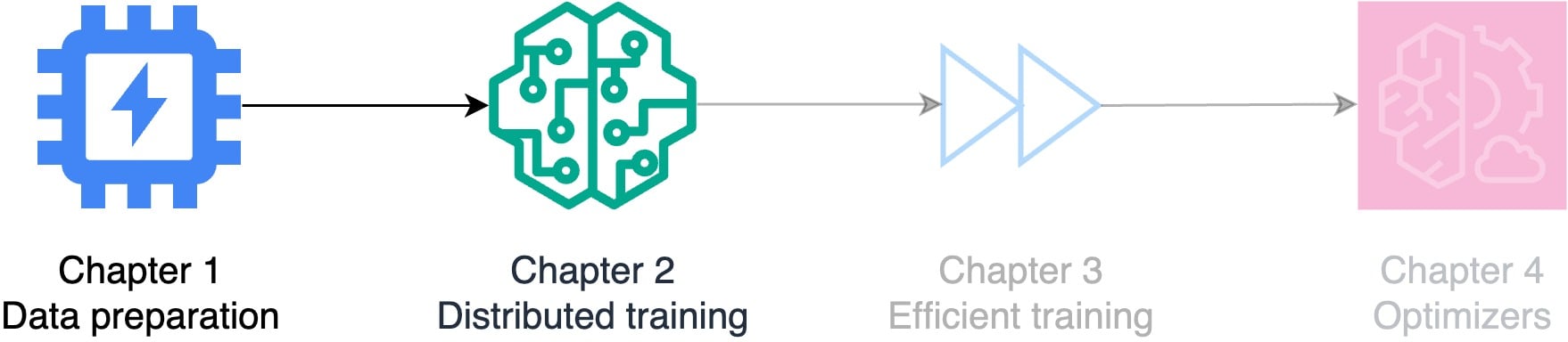

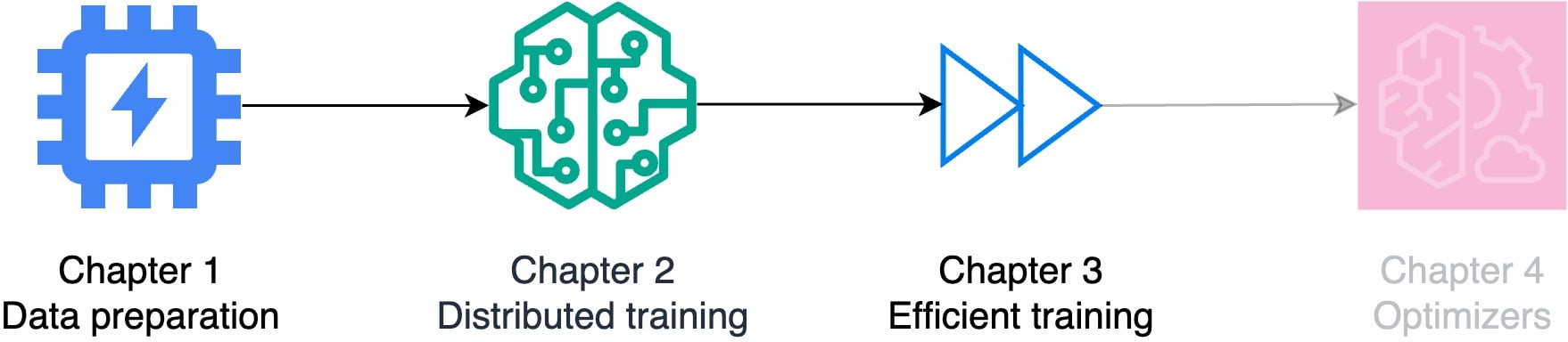

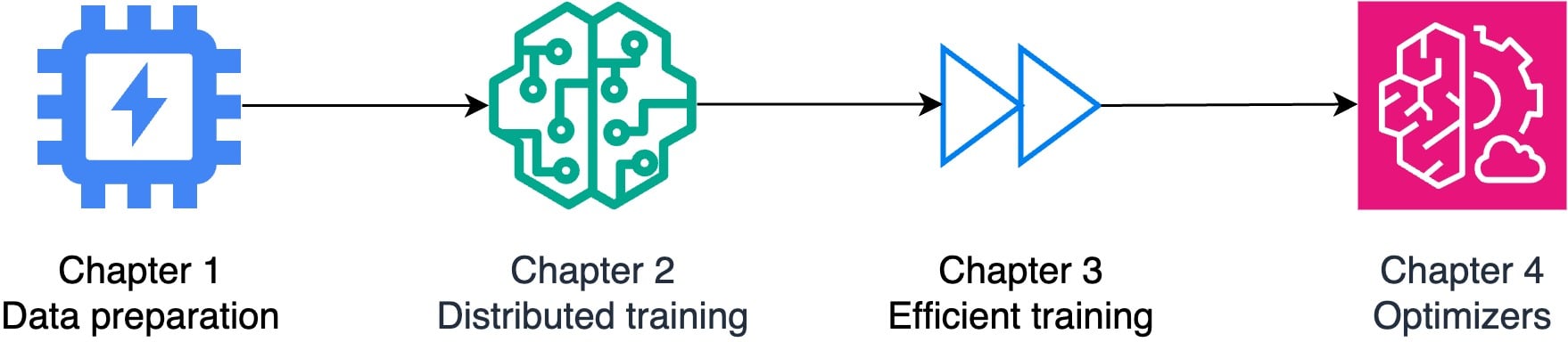

Our roadmap to efficient AI training

- Distributed AI model training

- Goal: reduce training times for large language models

- Data preparation: placing data on multiple devices

- Distributed training: scaling training to multiple devices

- Efficient training: optimizing available devices

- Optimizers: accelerating training

CPUs vs GPUs

CPUs

- Most laptops have CPUs

- Designed for general purpose computing

- Better control flow

GPUs

- GPUs can train large models

- Specialize in highly parallel computing

- Excel at matrix operations
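The comparison above can be made concrete with a minimal sketch, assuming PyTorch is installed. It selects a GPU when one is visible and otherwise falls back to the CPU, then runs a matrix multiplication on that device. The matrix size of 512 is an arbitrary choice for illustration.

```python
import torch

# Use a GPU when PyTorch can see one; otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# A matrix multiplication: the kind of highly parallel work GPUs excel at.
# The 512 x 512 size is an arbitrary illustrative choice.
a = torch.randn(512, 512, device=device)
b = torch.randn(512, 512, device=device)
c = a @ b

print(device, c.shape)
```

The same code runs unchanged on either device type, which is the property that later device-placement tooling builds on.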

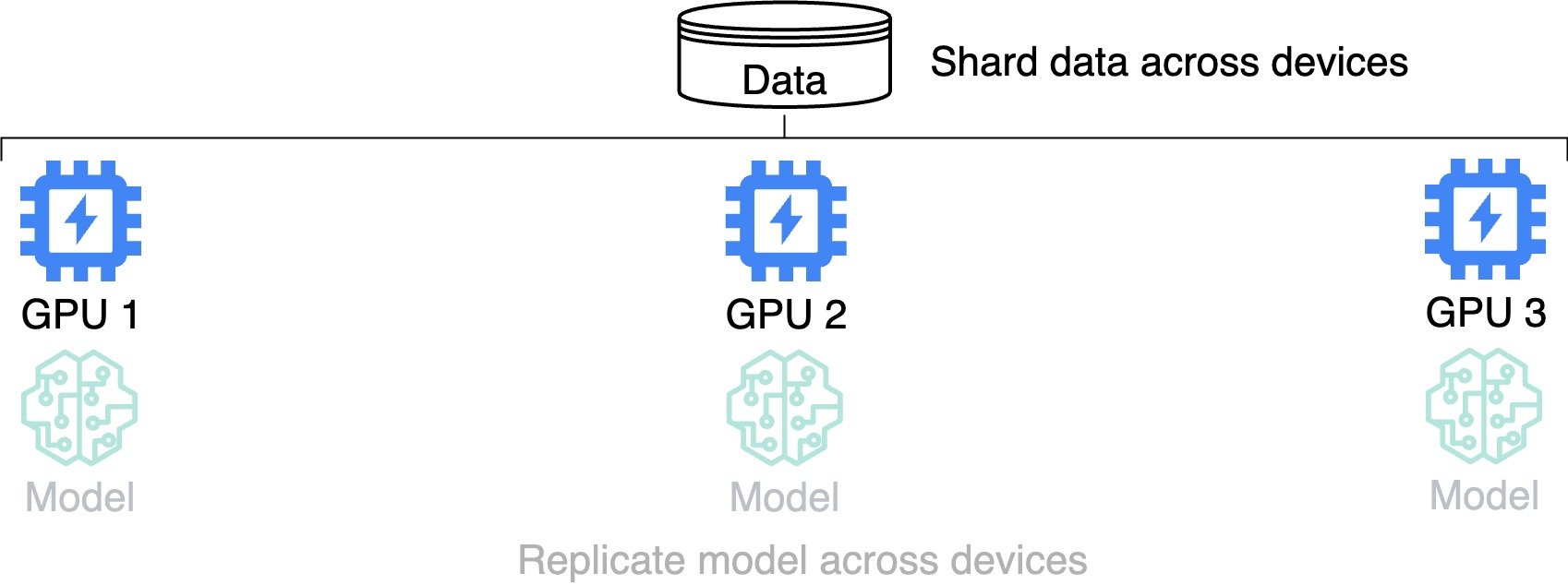

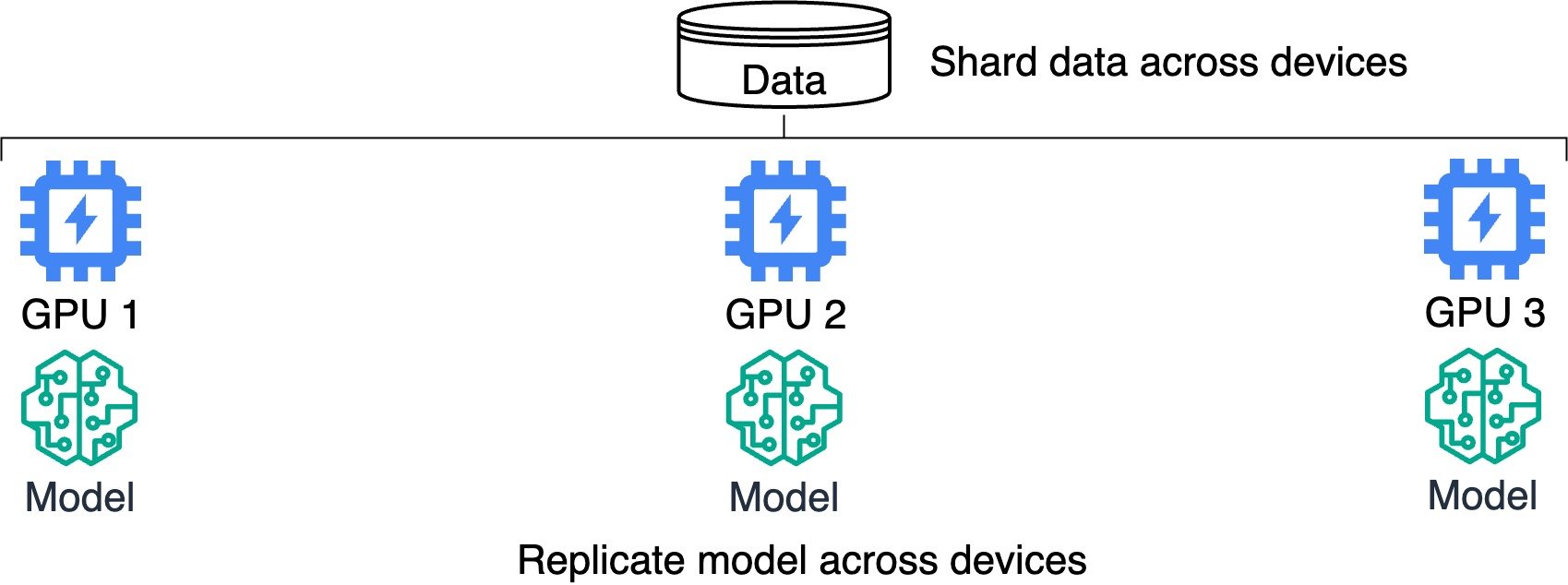

Distributed training

- Data sharding: each device processes a subset of data in parallel

- Model replication: each device holds a copy of the model and performs forward/backward passes on its own shard

- Gradient aggregation: designated device aggregates gradients

- Parameter synchronization: designated device shares updated parameters
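The four steps above can be sketched as a toy simulation in plain Python, with no framework involved. This is an illustration under stated assumptions, not how PyTorch implements it: the "devices" are just list entries, the model is a single weight `w` in `y = w * x`, and the helper names (`shard`, `local_gradient`, `training_step`) are hypothetical.

```python
# Toy simulation of one data-parallel training step.
# Model: y = w * x, loss: mean squared error. All names are illustrative.

def shard(data, num_devices):
    """Data sharding: one equal-sized, contiguous subset per device.
    Any remainder is dropped to keep the sketch simple."""
    size = len(data) // num_devices
    return [data[i * size:(i + 1) * size] for i in range(num_devices)]

def local_gradient(w, samples):
    """Forward/backward pass on one replica: d/dw of mean((w*x - y)^2)."""
    return sum(2 * (w * x - y) * x for x, y in samples) / len(samples)

def training_step(w, data, num_devices=2, lr=0.01):
    shards = shard(data, num_devices)
    replicas = [w] * num_devices                  # model replication
    grads = [local_gradient(w_i, s)               # per-device passes
             for w_i, s in zip(replicas, shards)]
    avg_grad = sum(grads) / num_devices           # gradient aggregation
    new_w = w - lr * avg_grad                     # update on the aggregate
    return [new_w] * num_devices                  # parameter synchronization

data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]  # samples of y = 2x
replicas = training_step(w=0.0, data=data)
print(replicas)
```

Because the shards have equal size, averaging the per-device gradients gives the same value as the gradient over the full batch, so every replica ends the step with identical parameters.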

Effortless efficiency: leveraging pre-trained models

- Leverage pre-trained Transformer models

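- Initialize model parameters by calling `AutoModelForSequenceClassification.from_pretrained()`

- Display the configuration

```python
from transformers import AutoModelForSequenceClassification

# Assumption: the slide does not show how model_name is set. A DistilBERT
# checkpoint such as "distilbert-base-uncased" is consistent with the output below.
model_name = "distilbert-base-uncased"

# Load the pre-trained weights and print the model configuration
model = AutoModelForSequenceClassification.from_pretrained(model_name)
print(model.config)
```

```
DistilBertConfig {
  "architectures": ["DistilBertForMaskedLM"],
  "dim": 768,
  "dropout": 0.1,
  "hidden_dim": 3072,
  ...
```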

Device placement with Accelerator

- A Hugging Face class 🤗

- `Accelerator` detects which devices are available on our computer

- Automate device placement and data parallelism: `accelerator.prepare()`

- Place the model (with type `torch.nn.Module`) on the first available GPU

- Defaults to the CPU if no GPU is found

```python
from accelerate import Accelerator

# Detect the available devices
accelerator = Accelerator()

# Move the model to the selected device
model = accelerator.prepare(model)
print(accelerator.device)
```

```
cpu
```

Let's practice!
