Balanced training with AdamW

Efficient AI Model Training with PyTorch

Dennis Lee

Data Engineer

Efficient training

Optimizers for training efficiency

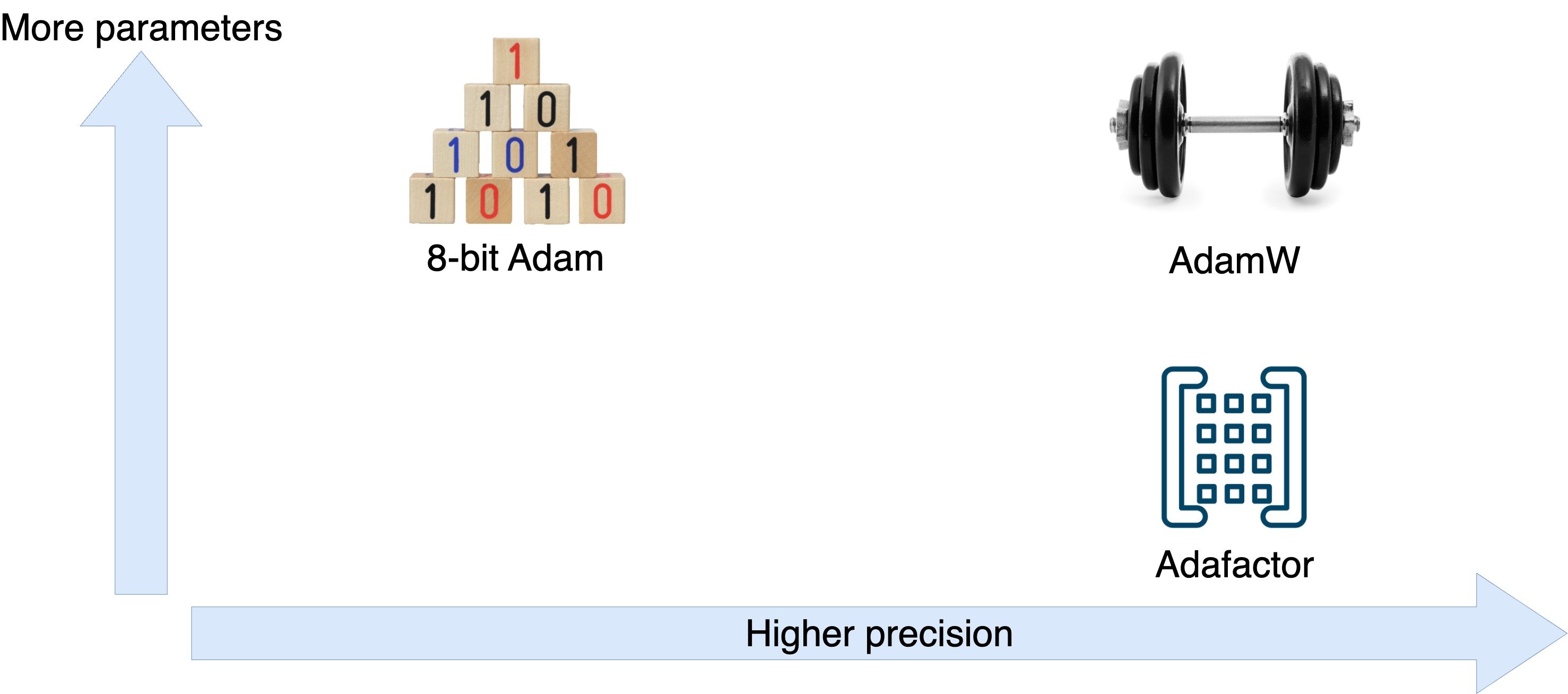

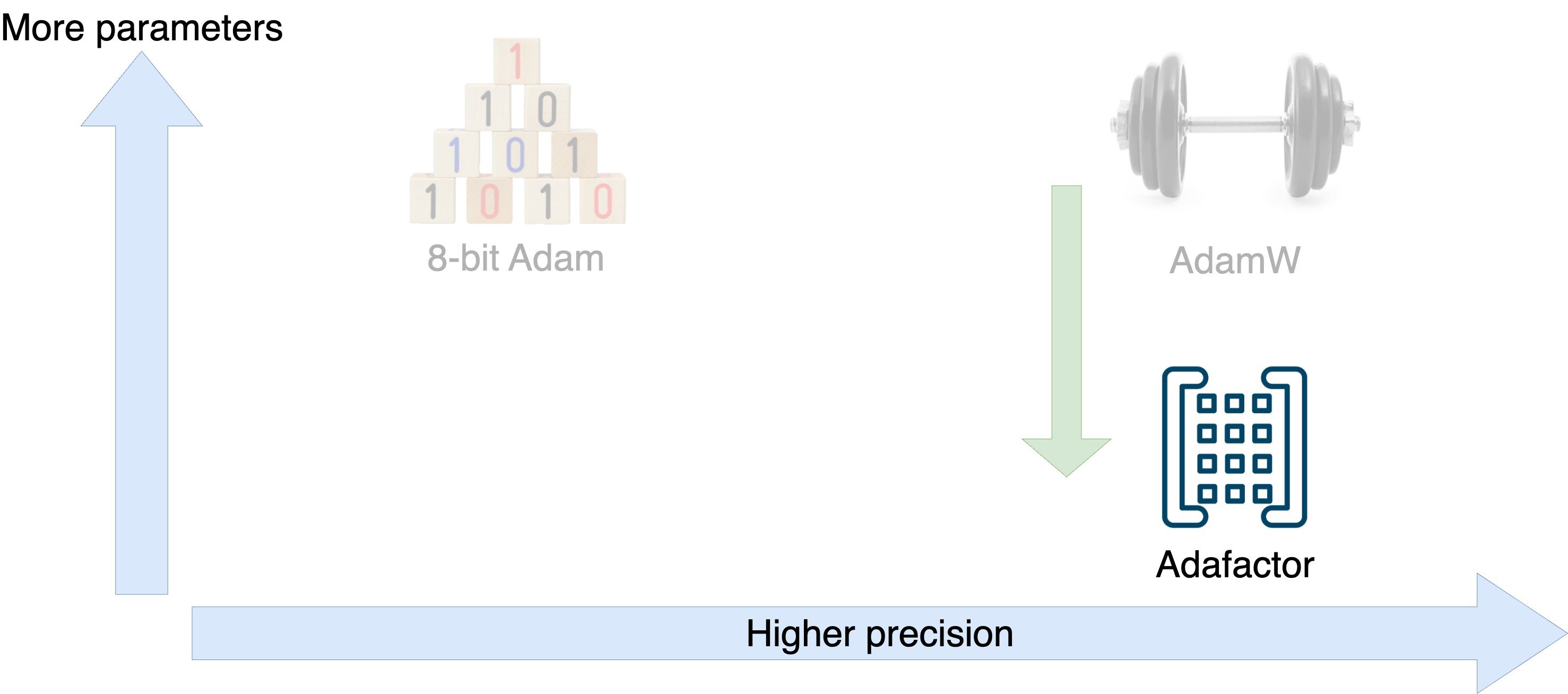

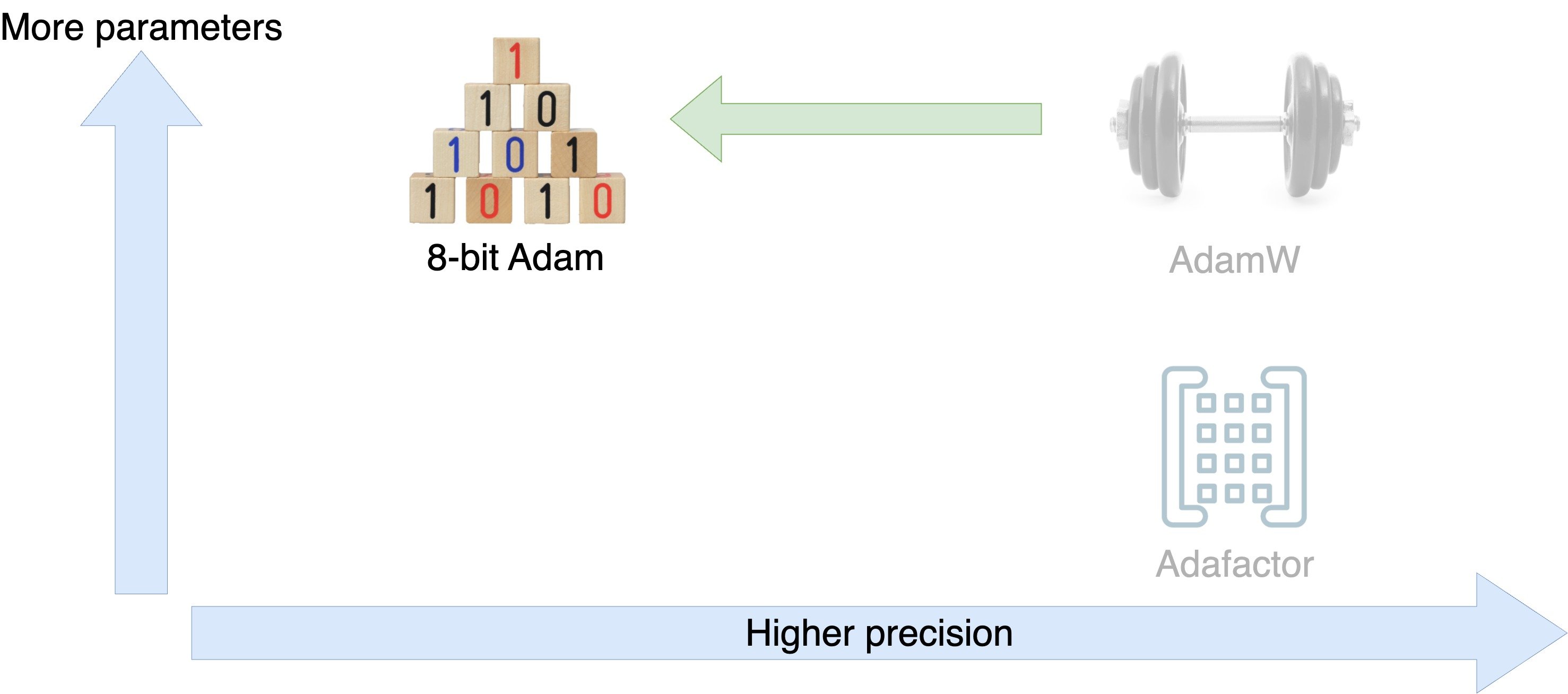

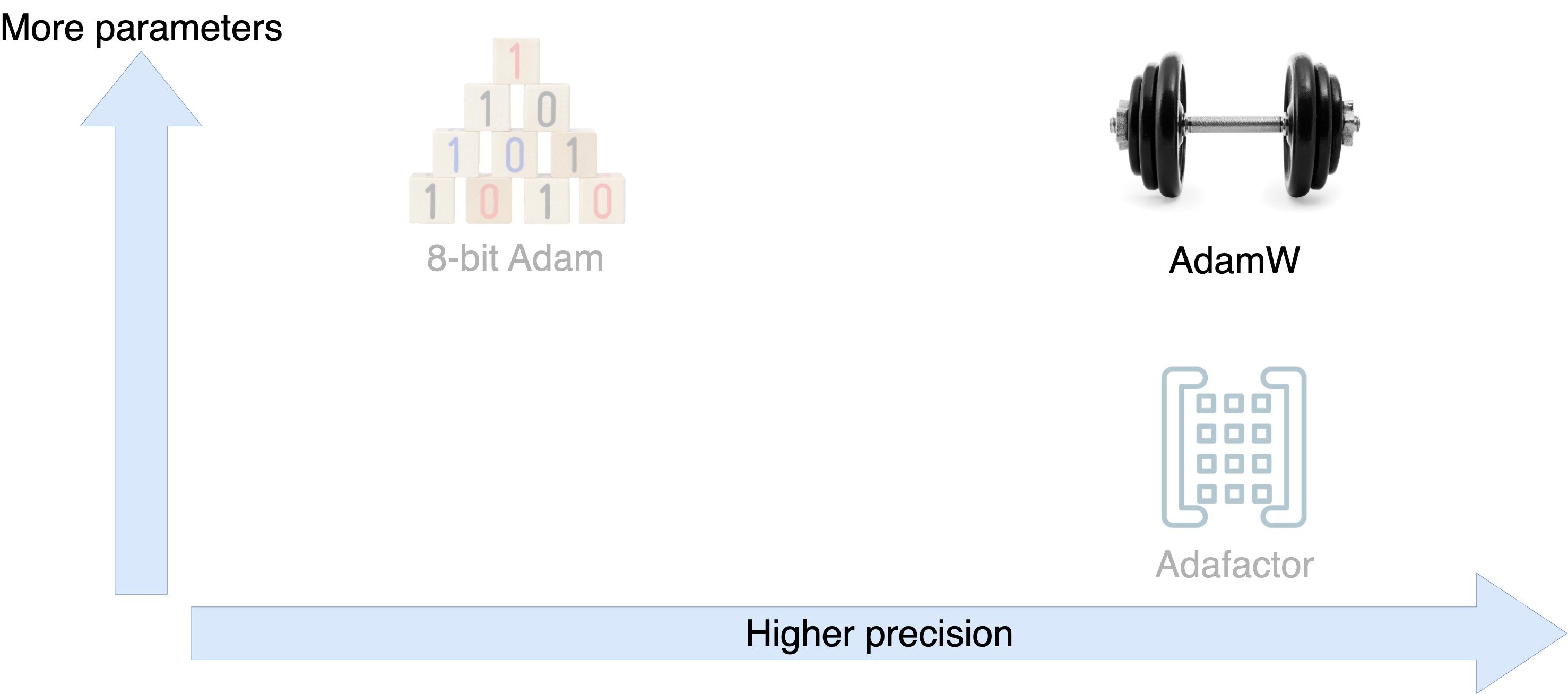

Optimizer tradeoffs

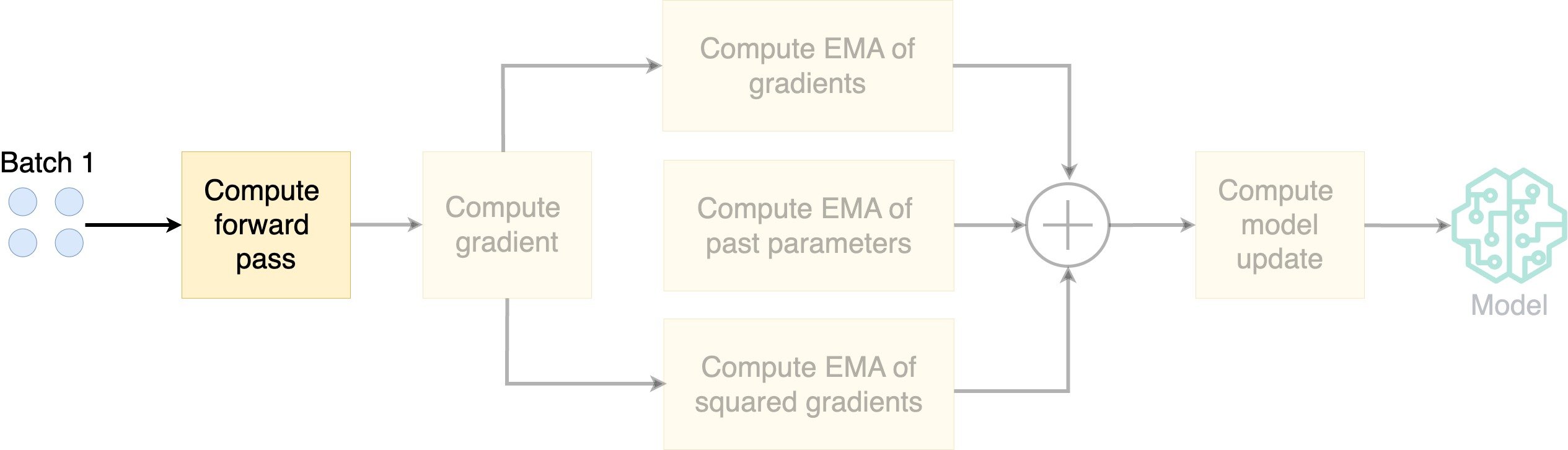

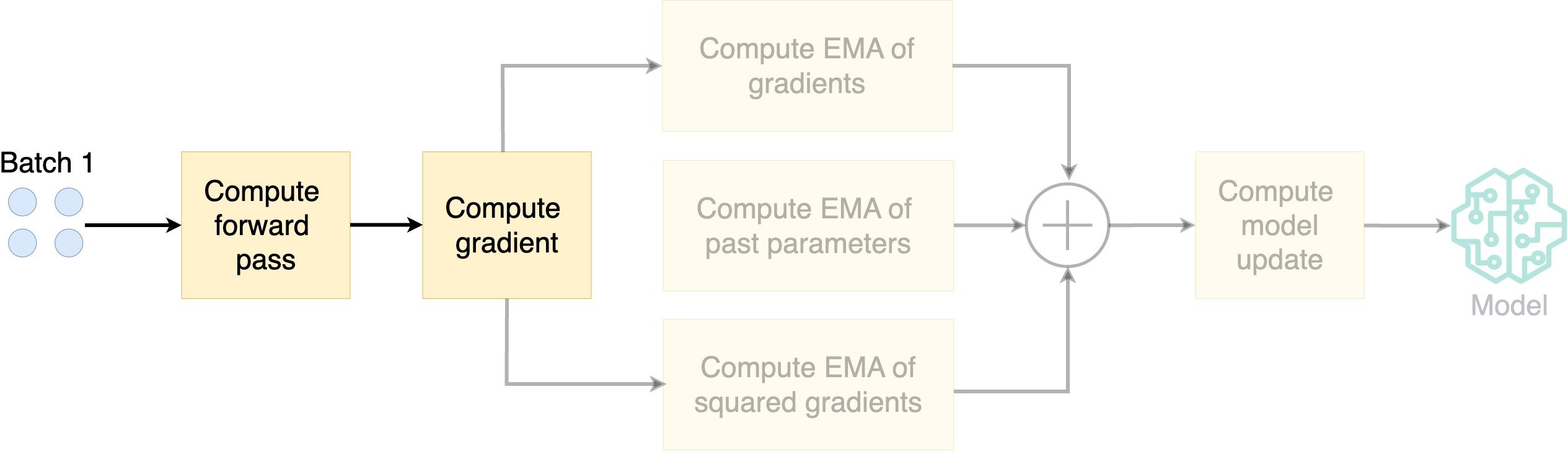

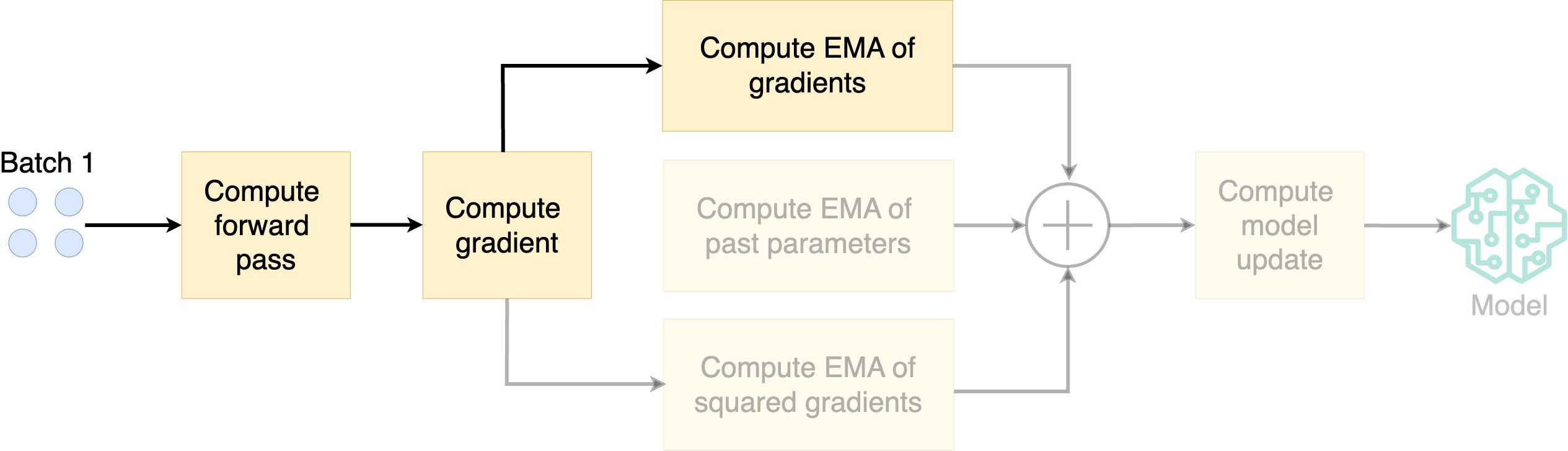

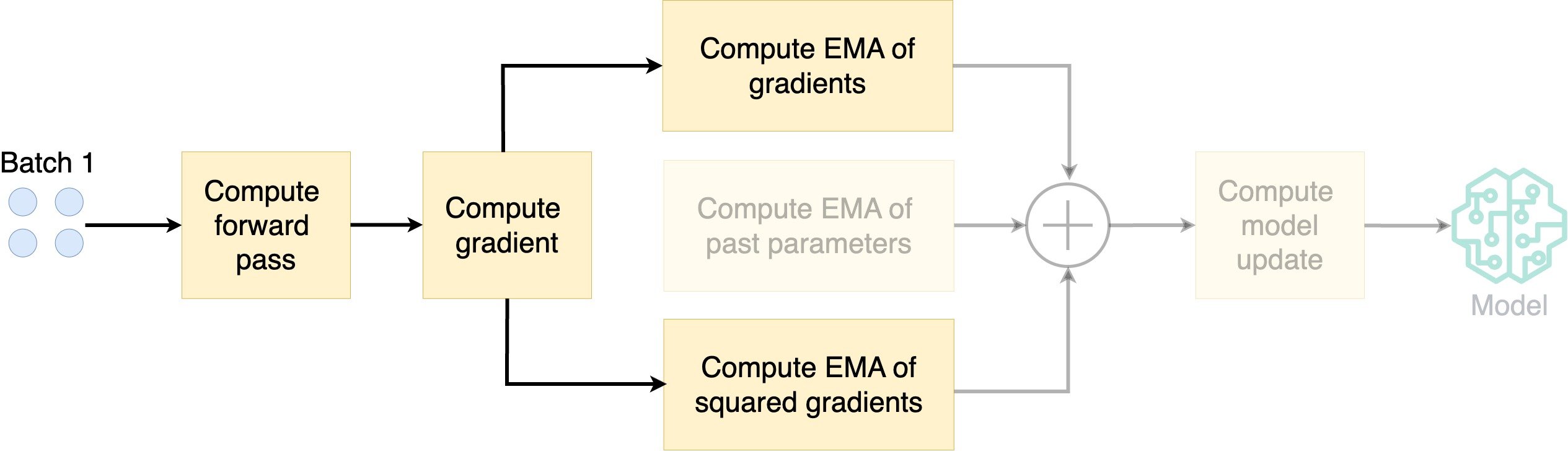

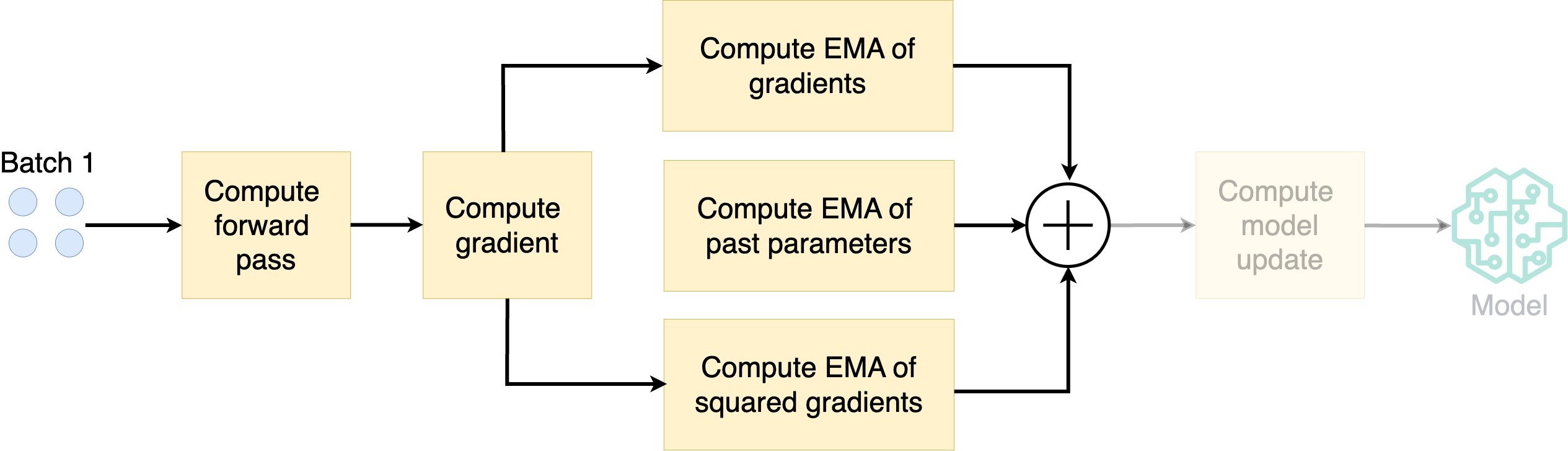

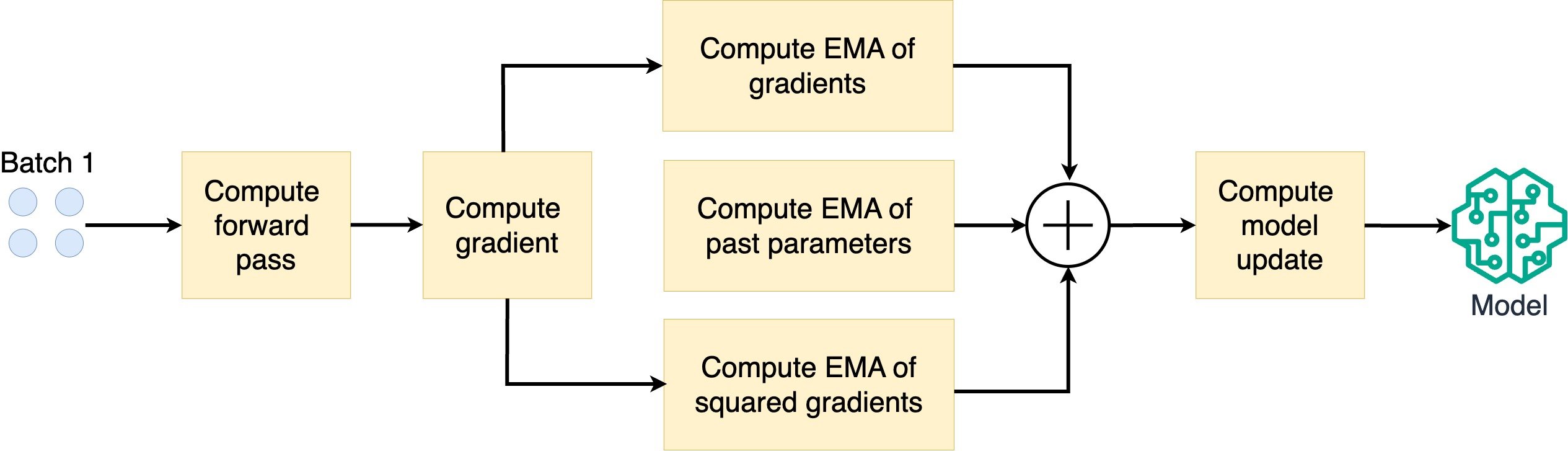

How does AdamW work?

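- Compute the exponential moving average (EMA) of the gradients

- Compute the EMA of the squared gradients

- Update each parameter using the bias-corrected ratio of the two EMAs, and apply weight decay directly to the parameters, decoupled from the gradient update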
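A single AdamW update can be sketched by hand in plain PyTorch. The sketch below is not the library's implementation. It follows the update rule documented for `torch.optim.AdamW`: decoupled weight decay, the EMA of the gradients, the EMA of the squared gradients, bias correction, and a per-parameter adaptive step. The helper `adamw_step` and the random toy tensors are hypothetical, the hyperparameters are set to the `torch.optim.AdamW` defaults, and the last lines compare the result with the built-in optimizer fed the same gradients.

```python
import torch


def adamw_step(param, grad, exp_avg, exp_avg_sq, step, lr=1e-3,
               betas=(0.9, 0.999), eps=1e-8, weight_decay=1e-2):
    """Hypothetical helper: apply one AdamW update in place (illustrative sketch)."""
    beta1, beta2 = betas
    # Decoupled weight decay: shrink the parameter directly, not through the gradient
    param.mul_(1 - lr * weight_decay)
    # State 1: EMA of the gradients (first moment)
    exp_avg.mul_(beta1).add_(grad, alpha=1 - beta1)
    # State 2: EMA of the squared gradients (second moment)
    exp_avg_sq.mul_(beta2).addcmul_(grad, grad, value=1 - beta2)
    # Bias correction offsets the zero initialization of both EMAs
    m_hat = exp_avg / (1 - beta1 ** step)
    v_hat = exp_avg_sq / (1 - beta2 ** step)
    # Adaptive step: each parameter gets its own effective step size
    param.sub_(lr * m_hat / (v_hat.sqrt() + eps))


torch.manual_seed(0)
initial = torch.randn(5, dtype=torch.float64)
grads = [torch.randn(5, dtype=torch.float64) for _ in range(3)]

# Hand-written version: both EMA states start at zero
manual = initial.clone()
exp_avg = torch.zeros_like(manual)
exp_avg_sq = torch.zeros_like(manual)
for step, grad in enumerate(grads, start=1):
    adamw_step(manual, grad, exp_avg, exp_avg_sq, step)

# Built-in optimizer with default hyperparameters, fed the same gradients
param = torch.nn.Parameter(initial.clone())
optimizer = torch.optim.AdamW([param])
for grad in grads:
    param.grad = grad.clone()
    optimizer.step()

print(torch.allclose(manual, param.detach()))
```

Both EMA tensors have the same shape as the parameter they track, so AdamW keeps two extra values for every model parameter.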

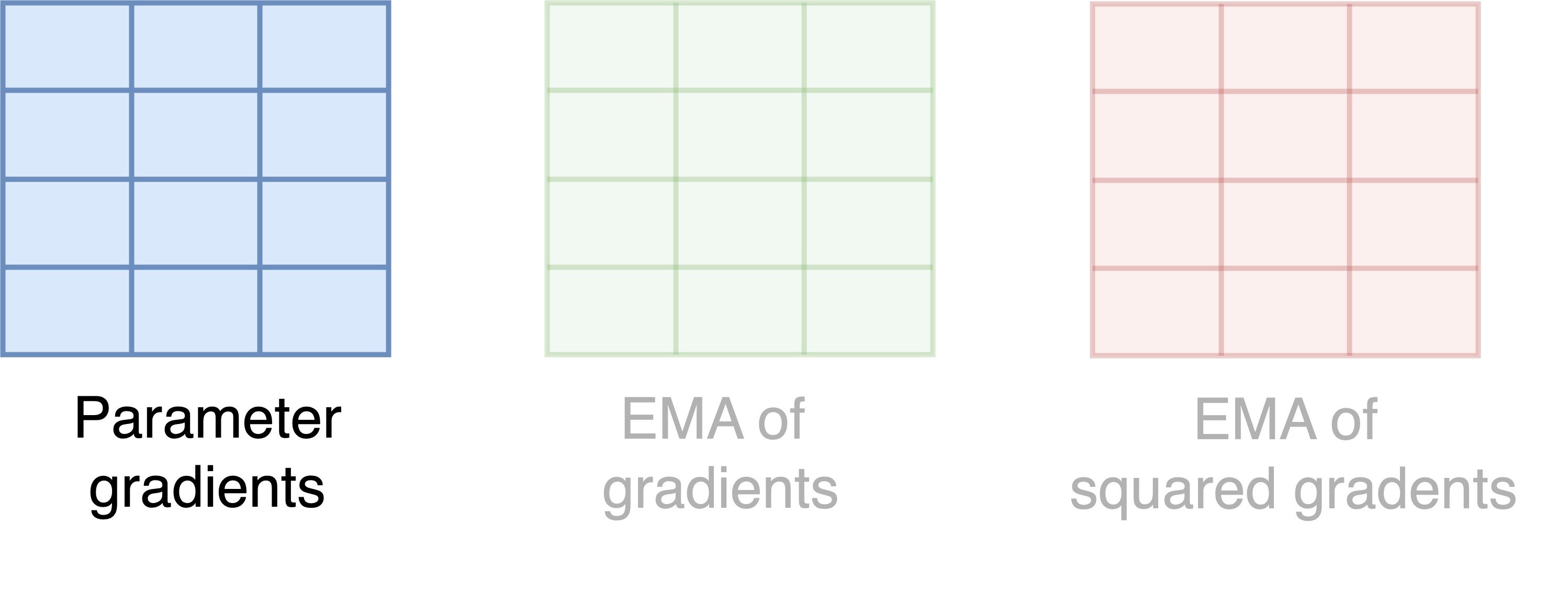

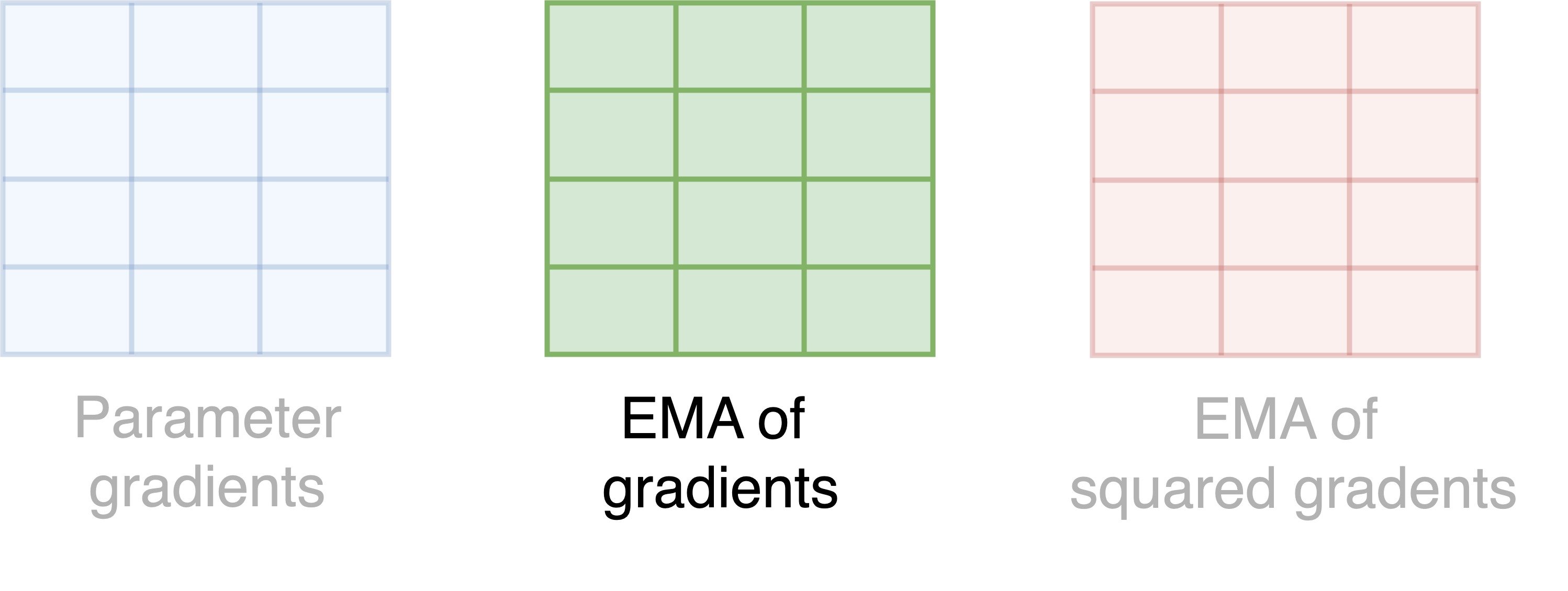

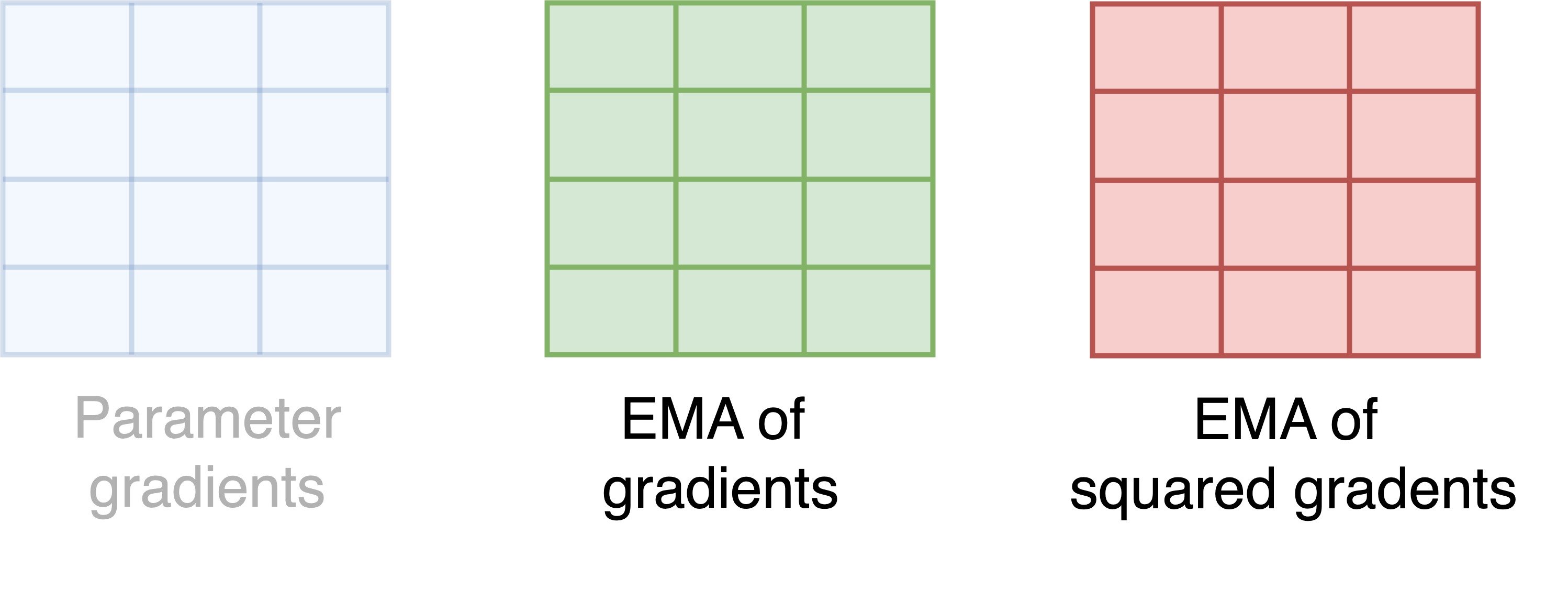

Memory usage of AdamW

- Each square is a parameter, and each color is a state

- Memory per parameter = 2 states * 4 bytes per state (float32) = 8 bytes

- Total optimizer memory = 8 bytes * number of parameters (on top of the memory for the model weights and gradients)

Estimate memory usage of AdamW

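from transformers import AutoModelForSequenceClassification

model = AutoModelForSequenceClassification.from_pretrained(
    "distilbert-base-cased", return_dict=True
)
# Count every element of every parameter tensor
num_parameters = sum(p.numel() for p in model.parameters())
print(f"Number of model parameters: {num_parameters:,}")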

Number of model parameters: 65,783,042

estimated_memory = num_parameters * 8 / (1024 ** 2)

print(f"Estimated memory usage of AdamW: {estimated_memory:.0f} MB")

Estimated memory usage of AdamW: 502 MB

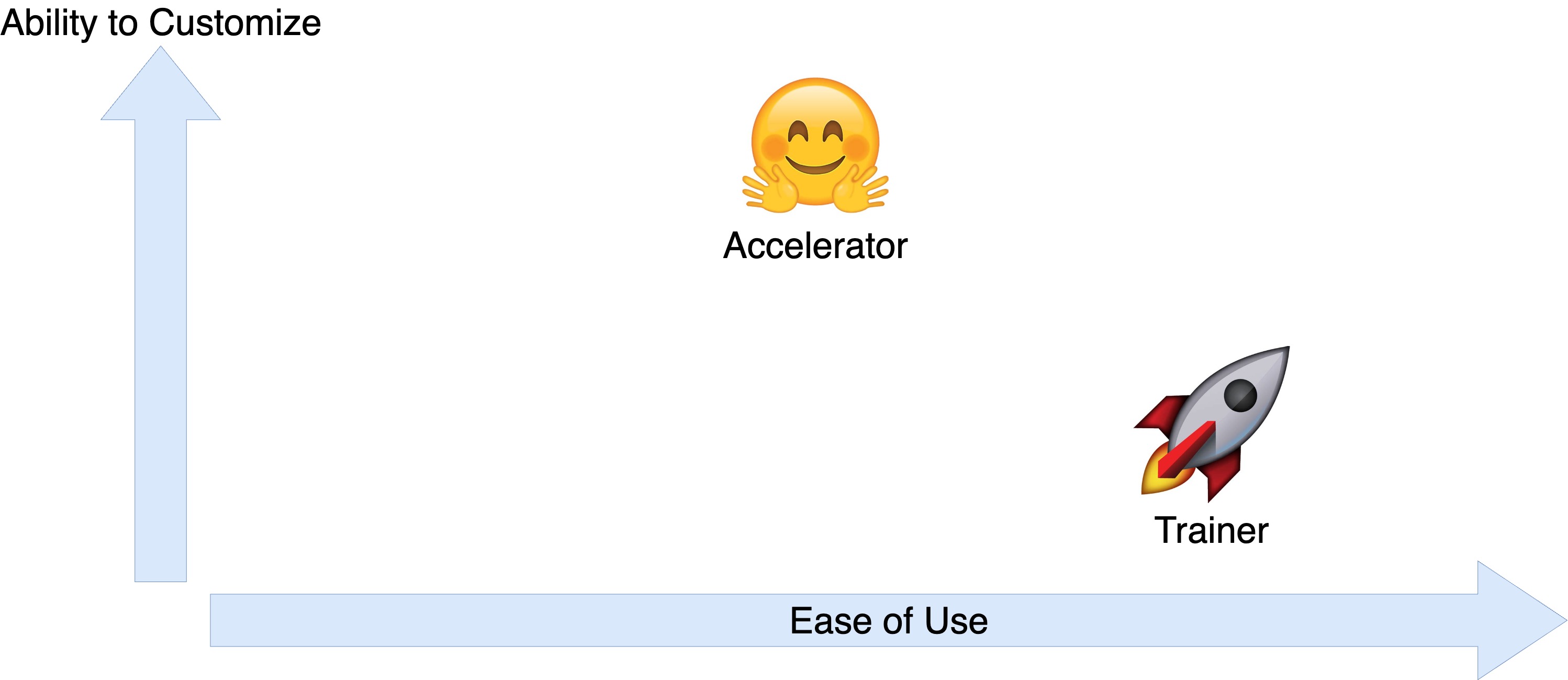

Trainer and Accelerator

Implement AdamW with Trainer

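from torch.optim import AdamW
from transformers import Trainer

optimizer = AdamW(params=model.parameters())
# Not shown: model, training_args, the datasets, compute_metrics,
# and an lr_scheduler built on this optimizer
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=validation_dataset,
    compute_metrics=compute_metrics,
    optimizers=(optimizer, lr_scheduler),
)
trainer.train()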

{'epoch': 1.0, 'eval_accuracy': 0.7, 'eval_f1': 0.8}

Implement AdamW with Accelerator

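from torch.optim import AdamW

optimizer = AdamW(params=model.parameters())
# Not shown: building lr_scheduler on this optimizer and passing model, optimizer,
# train_dataloader, and lr_scheduler through accelerator.prepare()
for batch in train_dataloader:
    inputs, targets = batch["input_ids"], batch["labels"]
    outputs = model(inputs, labels=targets)
    loss = outputs.loss
    accelerator.backward(loss)  # backward pass handled by Accelerate
    optimizer.step()            # AdamW update
    lr_scheduler.step()         # advance the learning-rate schedule
    optimizer.zero_grad()       # clear gradients for the next batch
    print(f"Loss = {loss}")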

Loss = 0.7
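The same loop can be run without Hugging Face components. The sketch below assumes a hypothetical toy regression task; it swaps `accelerator.backward(loss)` for plain `loss.backward()` and uses `torch.optim.lr_scheduler.LinearLR` as a stand-in scheduler. The data, model, and hyperparameters are illustrative only, but the order of calls per batch is the same as in the loop above: backward, `optimizer.step()`, `lr_scheduler.step()`, `optimizer.zero_grad()`.

```python
import torch
from torch import nn
from torch.optim import AdamW
from torch.optim.lr_scheduler import LinearLR
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical toy regression data standing in for the tokenized dataset
inputs = torch.randn(256, 10)
targets = inputs @ torch.randn(10, 1) + 0.1 * torch.randn(256, 1)
train_dataloader = DataLoader(TensorDataset(inputs, targets), batch_size=32, shuffle=True)

model = nn.Linear(10, 1)
loss_fn = nn.MSELoss()
optimizer = AdamW(params=model.parameters(), lr=5e-2)
num_epochs = 20
# Stand-in scheduler: decay the learning rate linearly to 10% over all batches
lr_scheduler = LinearLR(optimizer, start_factor=1.0, end_factor=0.1,
                        total_iters=num_epochs * len(train_dataloader))

losses = []
for epoch in range(num_epochs):
    for batch_inputs, batch_targets in train_dataloader:
        loss = loss_fn(model(batch_inputs), batch_targets)
        loss.backward()         # plain PyTorch in place of accelerator.backward(loss)
        optimizer.step()        # AdamW update using the two EMA states
        lr_scheduler.step()     # advance the schedule once per batch
        optimizer.zero_grad()   # clear gradients before the next batch
        losses.append(loss.item())

print(f"First loss = {losses[0]:.3f}, last loss = {losses[-1]:.3f}")
```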

Inspecting the optimizer state

optimizer_state = optimizer.state.values()

print(optimizer_state)

dict_values([{'step': tensor(3.),'exp_avg': tensor([[0., 0., 0., ..., 0., 0., 0.], ...]),'exp_avg_sq': tensor([[0., 0., 0., ..., 0., 0., 0.], ...])}, ...])
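The structure of that state can be checked at small scale. This sketch assumes a hypothetical `nn.Linear(4, 2)` toy model and random inputs, runs three AdamW steps, and prints, for each parameter tensor, the state keys, the shapes of the two EMA tensors, and the step count. Each parameter tensor gets its own `step` counter plus `exp_avg` and `exp_avg_sq` tensors shaped like the parameter.

```python
import torch
from torch import nn
from torch.optim import AdamW

torch.manual_seed(0)
model = nn.Linear(4, 2)  # hypothetical toy model: weight (2, 4) and bias (2,)
optimizer = AdamW(model.parameters())

# The optimizer state is created lazily, during the first optimizer.step()
for _ in range(3):
    loss = model(torch.randn(8, 4)).pow(2).mean()
    loss.backward()
    optimizer.step()
    optimizer.zero_grad()

# One state dict per parameter tensor
for param, state in optimizer.state.items():
    print(tuple(param.shape), sorted(state),
          tuple(state["exp_avg"].shape), tuple(state["exp_avg_sq"].shape),
          float(state["step"]))
```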

Computing the optimizer size

import torch


def compute_optimizer_size(optimizer_state):
    total_size_megabytes, total_num_elements = 0, 0
    for params in optimizer_state:
        for name, tensor in params.items():
            # as_tensor wraps non-tensor entries (such as an int step) without copying tensors
            tensor = torch.as_tensor(tensor)
            num_elements = tensor.numel()
            element_size = tensor.element_size()  # bytes per element
            total_num_elements += num_elements
            total_size_megabytes += num_elements * element_size / (1024 ** 2)
    return total_size_megabytes, total_num_elements

total_size_megabytes, total_num_elements = compute_optimizer_size(
    trainer.optimizer.state.values()
)

print(f"Number of optimizer parameters: {total_num_elements:,}")

Number of optimizer parameters: 131,566,188

print(f"Optimizer size: {total_size_megabytes:.0f} MB")

Optimizer size: 502 MB
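As a sanity check on the 8-bytes-per-parameter estimate, the sketch below counts optimizer state elements and bytes on a hypothetical two-layer toy model; `optimizer_state_stats` is a hypothetical helper written for this example. With float32 parameters, the two EMA states contribute 8 bytes per parameter, and each parameter tensor adds a small `step` counter (a one-element float32 tensor in recent PyTorch releases; its type may differ in older versions). The same accounting fits the numbers above: 131,566,188 = 2 * 65,783,042 + 104, consistent with one `step` counter per parameter tensor, which is why the measured size matches the 502 MB estimate.

```python
import torch
from torch import nn
from torch.optim import AdamW


def optimizer_state_stats(optimizer):
    """Hypothetical helper: total elements and bytes across all optimizer state entries."""
    num_elements, num_bytes = 0, 0
    for state in optimizer.state.values():
        for value in state.values():
            value = torch.as_tensor(value)  # also handles a plain-number step counter
            num_elements += value.numel()
            num_bytes += value.numel() * value.element_size()
    return num_elements, num_bytes


torch.manual_seed(0)
# Hypothetical toy model: 4 parameter tensors (two weights, two biases)
model = nn.Sequential(nn.Linear(100, 50), nn.ReLU(), nn.Linear(50, 2))
optimizer = AdamW(model.parameters())

# One step so that AdamW allocates its state
model(torch.randn(16, 100)).sum().backward()
optimizer.step()

num_params = sum(p.numel() for p in model.parameters())
num_tensors = len(list(model.parameters()))
num_elements, num_bytes = optimizer_state_stats(optimizer)

print(f"Parameters: {num_params:,} in {num_tensors} tensors")
print(f"Optimizer state elements: {num_elements:,}")  # 2 * parameters + one step per tensor
print(f"Optimizer state bytes: {num_bytes:,}")
```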
