Data Aggregation

Transform and Analyze Data with Microsoft Fabric

Luis Silva

Solution Architect - Data & AI

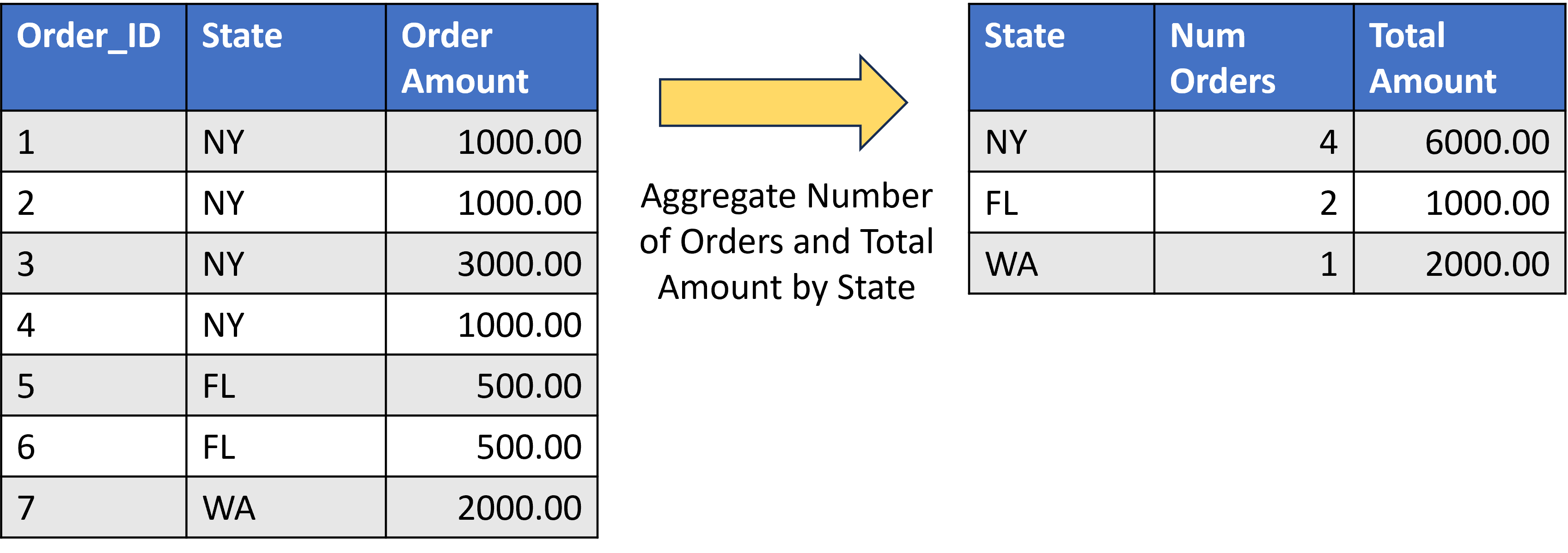

When should you aggregate data?

- Condense the number of rows in a dataset, using an aggregation function to produce summaries.

- Count

- Summarize

- Average

- Maximum

- Minimum
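As a minimal sketch of what "condensing rows into summaries" means, the snippet below reduces five rows to one summary record using plain Python. The `order_amounts` values are hypothetical and chosen only for illustration; they do not come from the course data.

```python
from statistics import mean

# Hypothetical order amounts (illustrative values only).
order_amounts = [120.0, 80.0, 200.0, 50.0, 150.0]

# Each aggregation function collapses many rows into a single value.
summary = {
    "count": len(order_amounts),
    "sum": sum(order_amounts),
    "average": mean(order_amounts),
    "maximum": max(order_amounts),
    "minimum": min(order_amounts),
}

print(summary)
```

Five input rows become one row of summary values, which is the trade-off aggregation makes: less detail in exchange for a smaller, easier-to-read result.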

Tools for implementing aggregation of data

Aggregating data with SQL

- Common SQL aggregation functions: SUM(), COUNT(), AVG(), MIN(), MAX()

- Usually used in combination with GROUP BY

- Statistical functions: STDEV(), VAR()

    SELECT
        <unaggregated columns>,
        function(<aggregated column>)
    FROM
        <table>
    GROUP BY
        <unaggregated columns>;

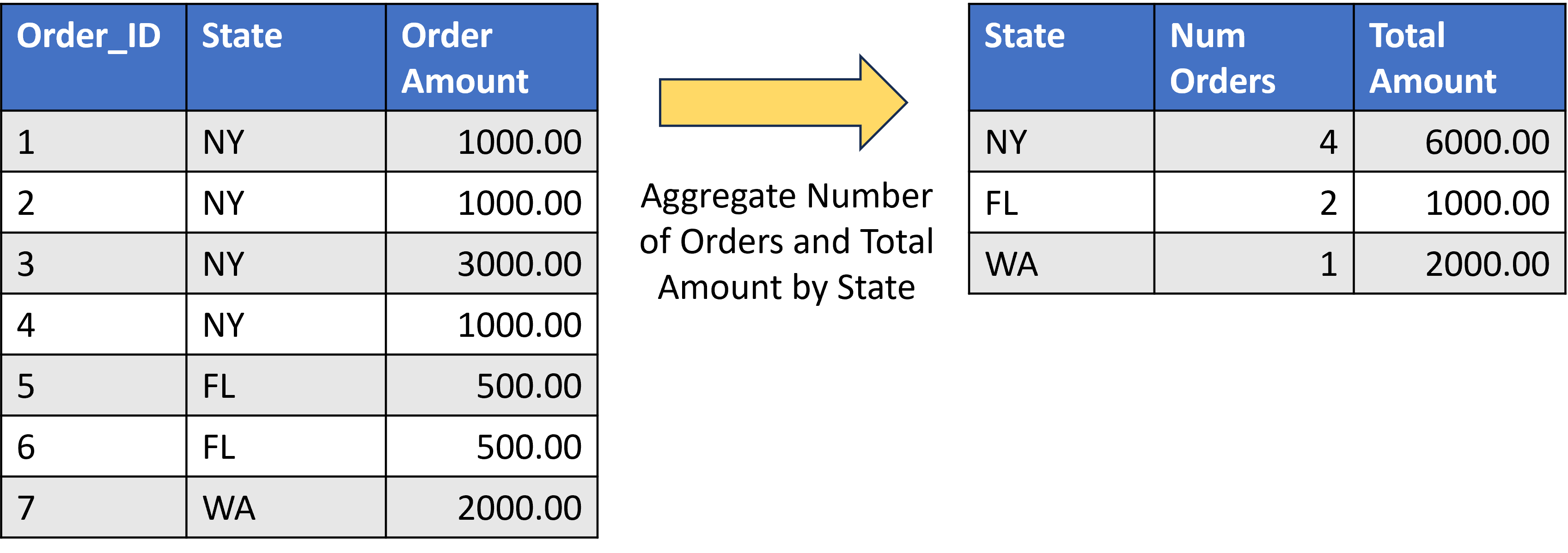

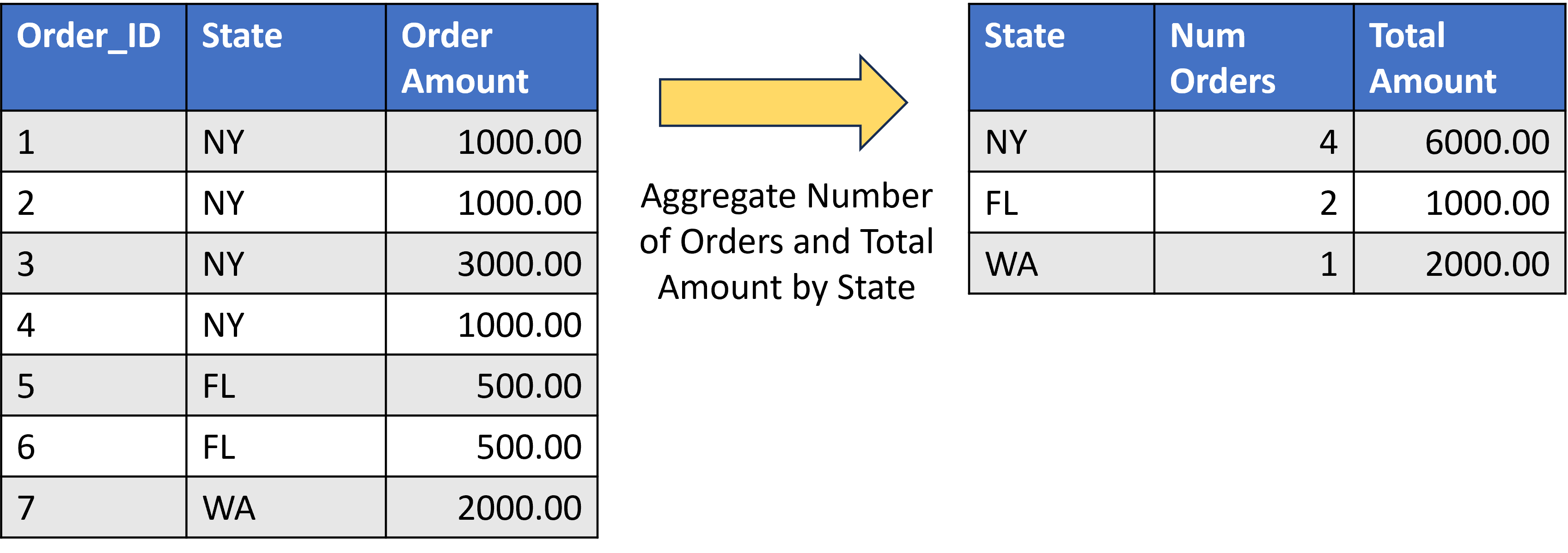

Aggregating data with SQL

    SELECT
        [State],
        COUNT([Order_ID]) AS [Num Orders],
        SUM([Order_Amount]) AS [Total Amount]
    FROM
        [tbl_Orders]
    GROUP BY
        [State];
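To try the query above without a Fabric warehouse, one option is Python's built-in `sqlite3` module. This is only a sketch: SQLite stands in for the real SQL engine, the rows inserted into `tbl_Orders` are hypothetical, and the `ORDER BY` clause is an addition that makes the output order predictable.

```python
import sqlite3

# In-memory SQLite database as a stand-in for the real SQL engine (assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE tbl_Orders (Order_ID INTEGER, State TEXT, Order_Amount REAL)"
)

# Hypothetical sample rows, for illustration only.
conn.executemany(
    "INSERT INTO tbl_Orders VALUES (?, ?, ?)",
    [(1, "CA", 100.0), (2, "CA", 50.0), (3, "NY", 75.0), (4, "TX", 20.0), (5, "NY", 25.0)],
)

# Same shape as the slide's query; ORDER BY added for a deterministic result.
query = """
SELECT
    [State],
    COUNT([Order_ID]) AS [Num Orders],
    SUM([Order_Amount]) AS [Total Amount]
FROM [tbl_Orders]
GROUP BY [State]
ORDER BY [State]
"""
rows = conn.execute(query).fetchall()
conn.close()

for state, num_orders, total_amount in rows:
    print(state, num_orders, total_amount)
```

The five order rows collapse to one row per state, each carrying the order count and the total amount for that state.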

Aggregating data with Spark

- Common PySpark aggregation functions: sum(), count(), avg(), min() and max(), first() and last()

- Statistical functions: stddev(), variance()

- Used with groupBy() and agg()

    df.groupBy(<unaggregated columns>)
      .agg(function(<aggregated column>))

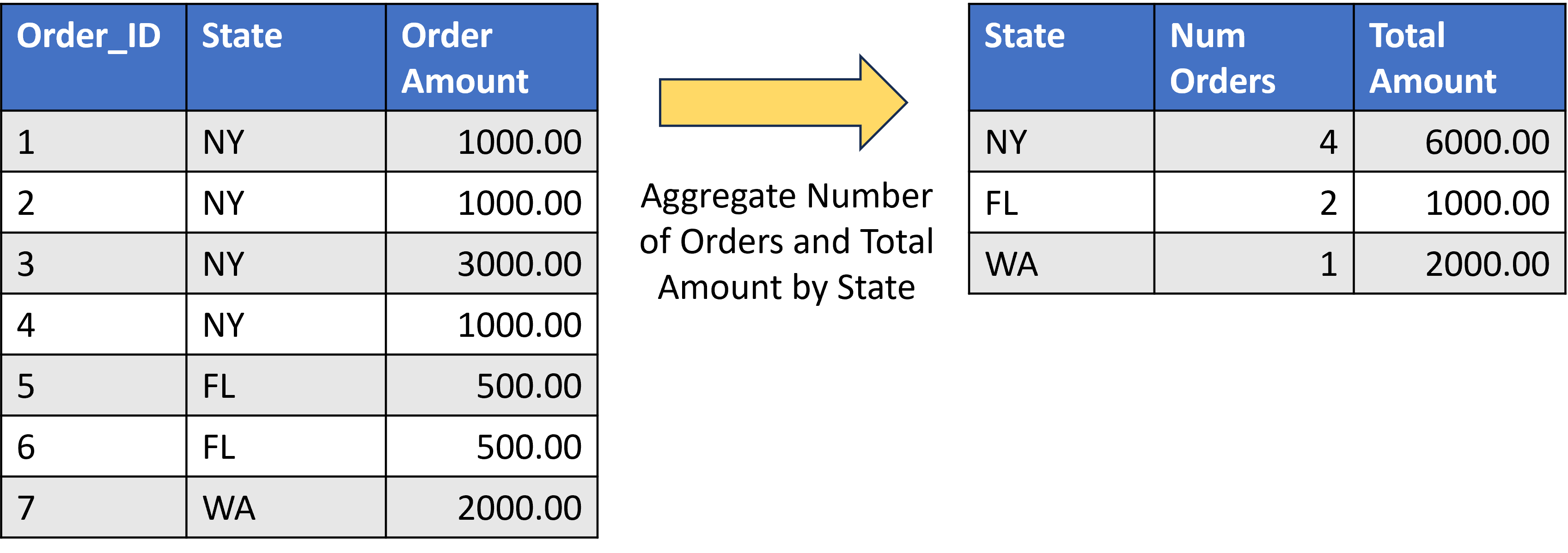

Aggregating data with Spark

    from pyspark.sql.functions import count, sum
    df.groupBy("state").agg(count("order_id"), sum("order_amount")).show()

Aggregating data with Spark

- Aggregation functions must be imported from pyspark.sql.functions by including an import statement at the start of your code.

    #----- Import one or multiple functions:
    from pyspark.sql.functions import sum, avg, count, min, max
    #----- Import all SQL functions:
    from pyspark.sql.functions import *
    #----- Import all SQL functions with an alias
    #----- (avoids shadowing Python built-ins such as sum, min and max):
    import pyspark.sql.functions as F
    # call sum: F.sum()

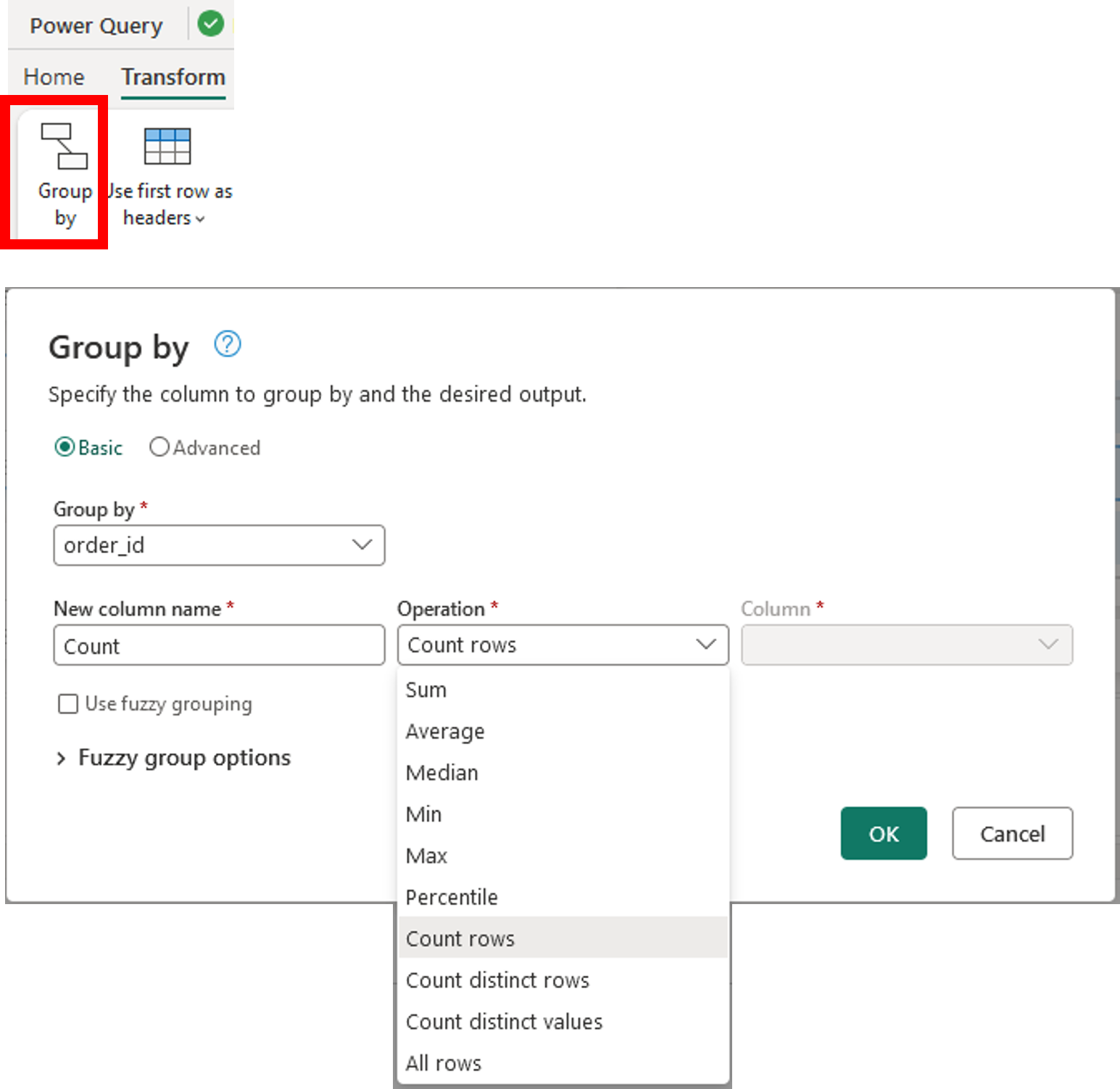

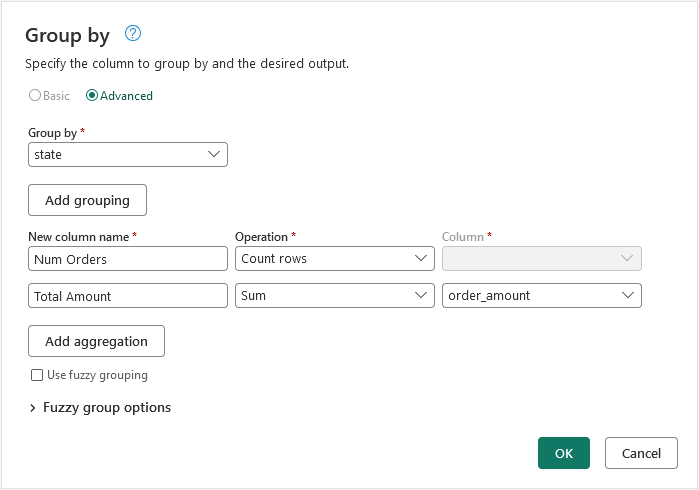

Aggregating data with Dataflows

- Group by transform, with operations: Sum, Average, Median, Min, Max, Percentile, Count rows
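The Group by transform is configured in the Dataflows interface, not in code. As a rough sketch of what most of its operations compute per group, here is a plain-Python equivalent. The `rows` data and column names are hypothetical, and Percentile is left out because the slides do not say which interpolation method it uses.

```python
from collections import defaultdict
from statistics import mean, median

# Hypothetical input rows (illustrative only).
rows = [
    {"state": "CA", "order_amount": 100.0},
    {"state": "CA", "order_amount": 50.0},
    {"state": "CA", "order_amount": 30.0},
    {"state": "NY", "order_amount": 75.0},
    {"state": "NY", "order_amount": 25.0},
]

# Step 1: bucket the values by the grouping column.
groups = defaultdict(list)
for row in rows:
    groups[row["state"]].append(row["order_amount"])

# Step 2: apply each operation to every bucket.
grouped = {
    state: {
        "Sum": sum(amounts),
        "Average": mean(amounts),
        "Median": median(amounts),
        "Min": min(amounts),
        "Max": max(amounts),
        "Count rows": len(amounts),
    }
    for state, amounts in groups.items()
}

for state, stats in grouped.items():
    print(state, stats)
```

Each key in the inner dictionary corresponds to one operation you could pick as a new column in the Group by dialog.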

Let's practice!
