Data Engineering in Microsoft Fabric

Transform and Analyze Data with Microsoft Fabric

Luis Silva

Solution Architect - Data & AI

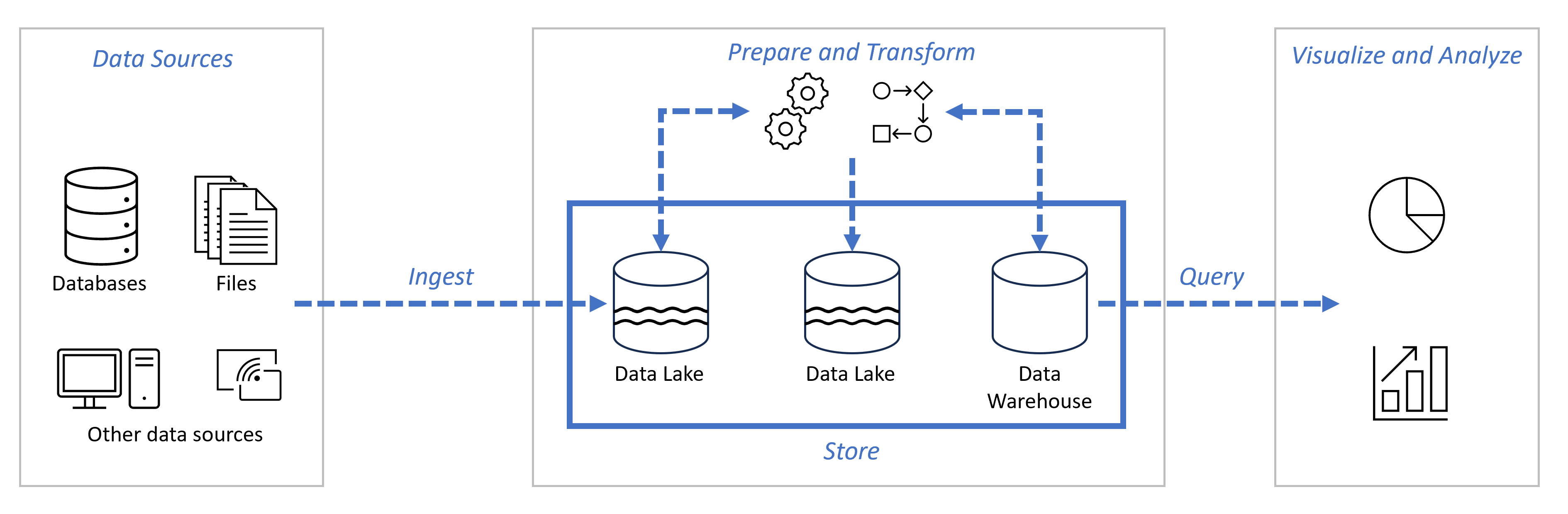

Data Analytics End To End

- Ingest data from source and store it in a data lake

- Prepare and transform the data

- Visualize and analyze the data

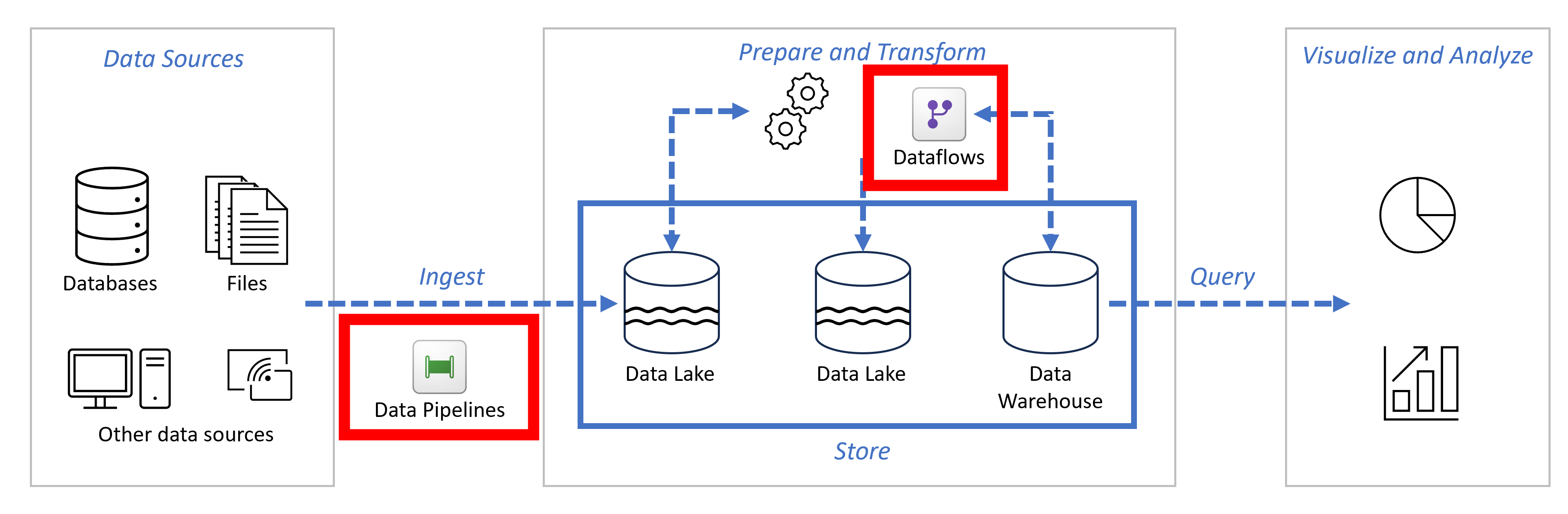

Data Factory

- Ingest, prepare and transform data

- Dataflows and Data Pipelines

Dataflows

- Low-code interface for data ingestion and transformation

- Power Query transformation engine

Data Pipelines

- Collection of activities that perform a task

- Types of activities:

  - Data movement (Copy activity, Dataflow)

  - Data transformation (Notebook, Stored Procedure, Script)

  - Control (Switch, If, ForEach, Wait)
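
The idea of a pipeline as an ordered collection of activities can be sketched in plain Python. This is only an in-memory model for illustration: the `Activity` and `Pipeline` classes, the `daily_sales` name, and the sample values are all hypothetical and are not part of any Fabric SDK. It shows one activity of each type (data movement, data transformation, control) running in sequence over a shared context.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical in-memory model of a pipeline; these names are illustrative
# and do not correspond to a Fabric API.
Context = Dict[str, object]


@dataclass
class Activity:
    name: str
    run: Callable[[Context], None]


@dataclass
class Pipeline:
    name: str
    activities: List[Activity] = field(default_factory=list)

    def execute(self, context: Context) -> List[str]:
        """Run the activities in order and return the names that ran."""
        executed = []
        for activity in self.activities:
            activity.run(context)
            executed.append(activity.name)
        return executed


def copy_activity(context: Context) -> None:
    # Data movement: bring raw values from a (made-up) source into the context.
    context["raw"] = [" 10", "20 ", "n/a", "30"]


def notebook_activity(context: Context) -> None:
    # Data transformation: keep only numeric values and convert them to int.
    context["clean"] = [int(v) for v in context["raw"] if v.strip().isdigit()]


def if_activity(context: Context) -> None:
    # Control: branch on a condition evaluated at run time.
    context["status"] = "loaded" if context["clean"] else "empty"


pipeline = Pipeline(
    "daily_sales",
    [
        Activity("Copy", copy_activity),
        Activity("Notebook", notebook_activity),
        Activity("If", if_activity),
    ],
)
context: Context = {}
executed = pipeline.execute(context)
```

In a real pipeline the activities are configured in the Data Factory canvas rather than written as functions; the sketch only mirrors the ordering and the three activity categories.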

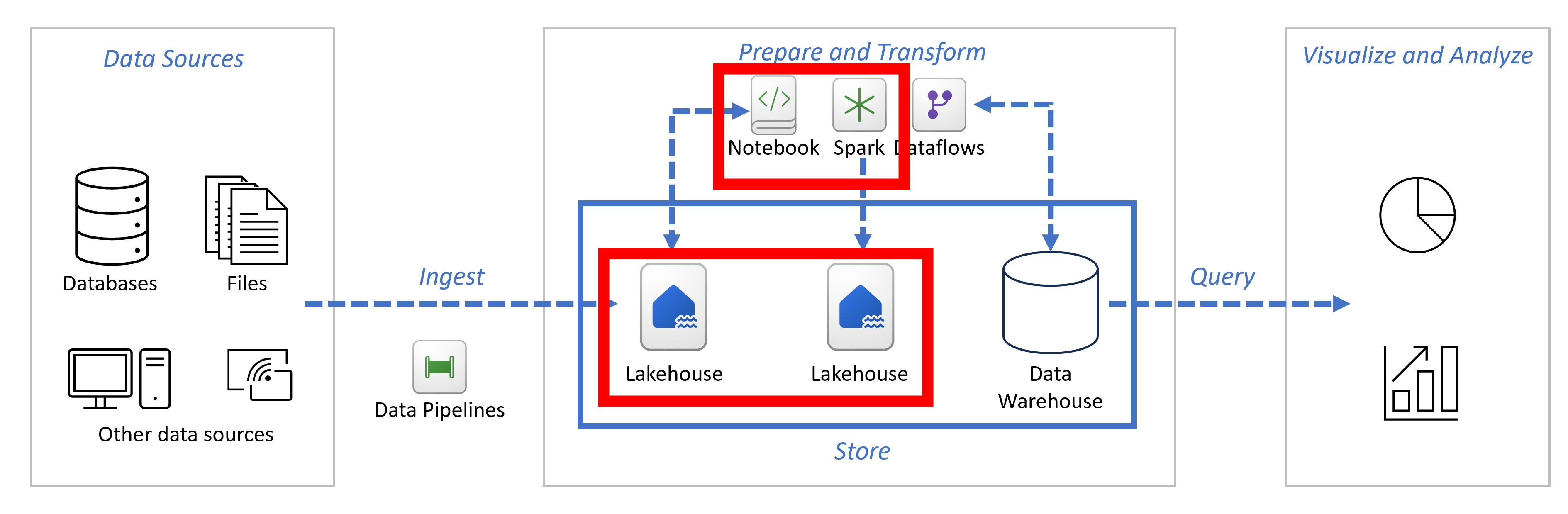

Synapse Data Engineering

- Lakehouses

- Notebooks

- Apache Spark Job definitions

Lakehouses

- Structured data (tables)

- Unstructured data (files)
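
The split between files and tables can be illustrated with a short PySpark sketch that reads a file and saves it as a table. It assumes a notebook with a default lakehouse attached, where a `spark` session is already available; the path `Files/raw/sales.csv`, the table name `sales`, and the helper name `load_csv_into_table` are hypothetical.

```python
def load_csv_into_table(spark, csv_path, table_name):
    """Read a CSV file and save its contents as a Delta table.

    `spark` is expected to be a SparkSession, such as the one a
    notebook provides. Path and table name are supplied by the caller.
    """
    # Files side: load the raw file, using the first row as column names.
    df = spark.read.csv(csv_path, header=True, inferSchema=True)
    # Tables side: persist the data as a Delta table, replacing any old copy.
    df.write.format("delta").mode("overwrite").saveAsTable(table_name)
    return df


# Example call inside a notebook (hypothetical path and table name):
# load_csv_into_table(spark, "Files/raw/sales.csv", "sales")
```

Taking `spark` as a parameter, rather than creating a session inside the function, keeps the helper usable both in a notebook and in a scheduled job.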

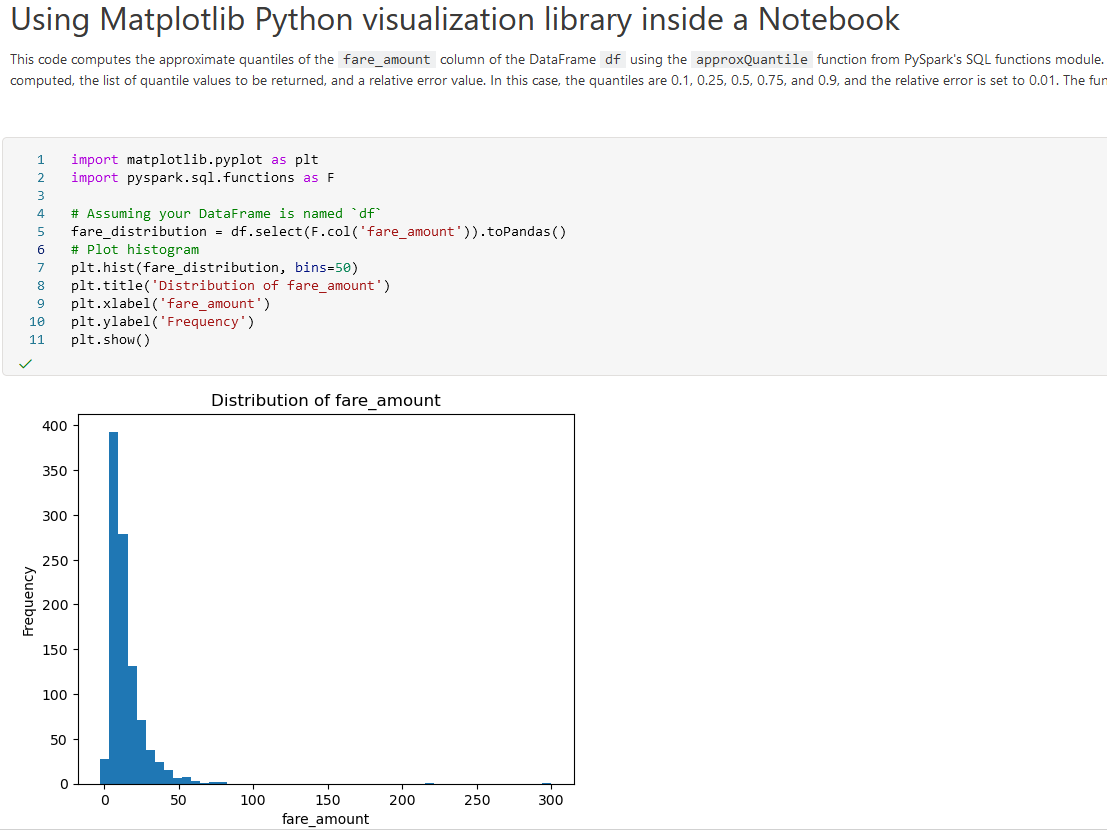

Notebooks

- Interactive web interface

- Data manipulation code

- Data visualizations

- Comments / Explanations

- Multi-language support:

  - PySpark (Python)

  - Spark (Scala)

  - Spark SQL (SQL)

  - SparkR (R)

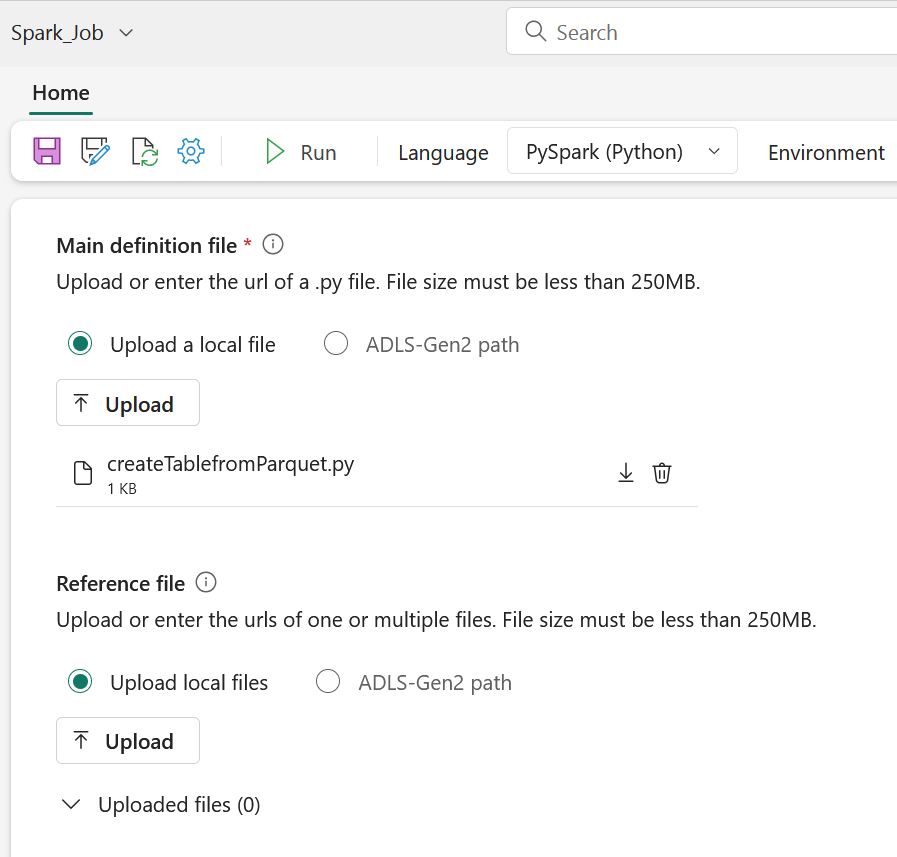

Apache Spark Job Definitions

- Submit batch/streaming jobs to Spark clusters

- An alternative or a complement to Notebooks:

  - Notebooks for data exploration, prototyping and collaborative development

  - Spark Job Definitions for automating production-ready data processing code

Synapse Data Warehouse

- Behaves like a traditional relational data warehouse

- Stores data in OneLake using the open Delta Lake format

- Enables interoperability with other Fabric workloads

- No need to create multiple copies of data

Choosing a Data Store

- Lakehouse

  - Unstructured data (files)

  - Spark as the primary development interface

- Warehouse

  - Structured data (tables)

  - T-SQL as the primary development interface
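
The rule of thumb above can be written as a small helper. This is a simplified sketch, not an official decision procedure: the function name and parameters are hypothetical, and it assumes that the presence of unstructured files tips the choice toward a lakehouse even when the team prefers T-SQL.

```python
def choose_data_store(has_unstructured_files: bool, primary_interface: str) -> str:
    """Suggest 'Lakehouse' or 'Warehouse' from the two criteria on the slide."""
    interface = primary_interface.strip().lower()
    if interface not in {"spark", "t-sql"}:
        raise ValueError("primary_interface must be 'Spark' or 'T-SQL'")
    # Assumption: unstructured files or a Spark-first team point to a lakehouse.
    if has_unstructured_files or interface == "spark":
        return "Lakehouse"
    # Structured tables with a T-SQL-first team point to a warehouse.
    return "Warehouse"


suggestion = choose_data_store(has_unstructured_files=False, primary_interface="T-SQL")
```

Real decisions usually weigh more factors (skills, security model, workload size), so treat the output as a starting point.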

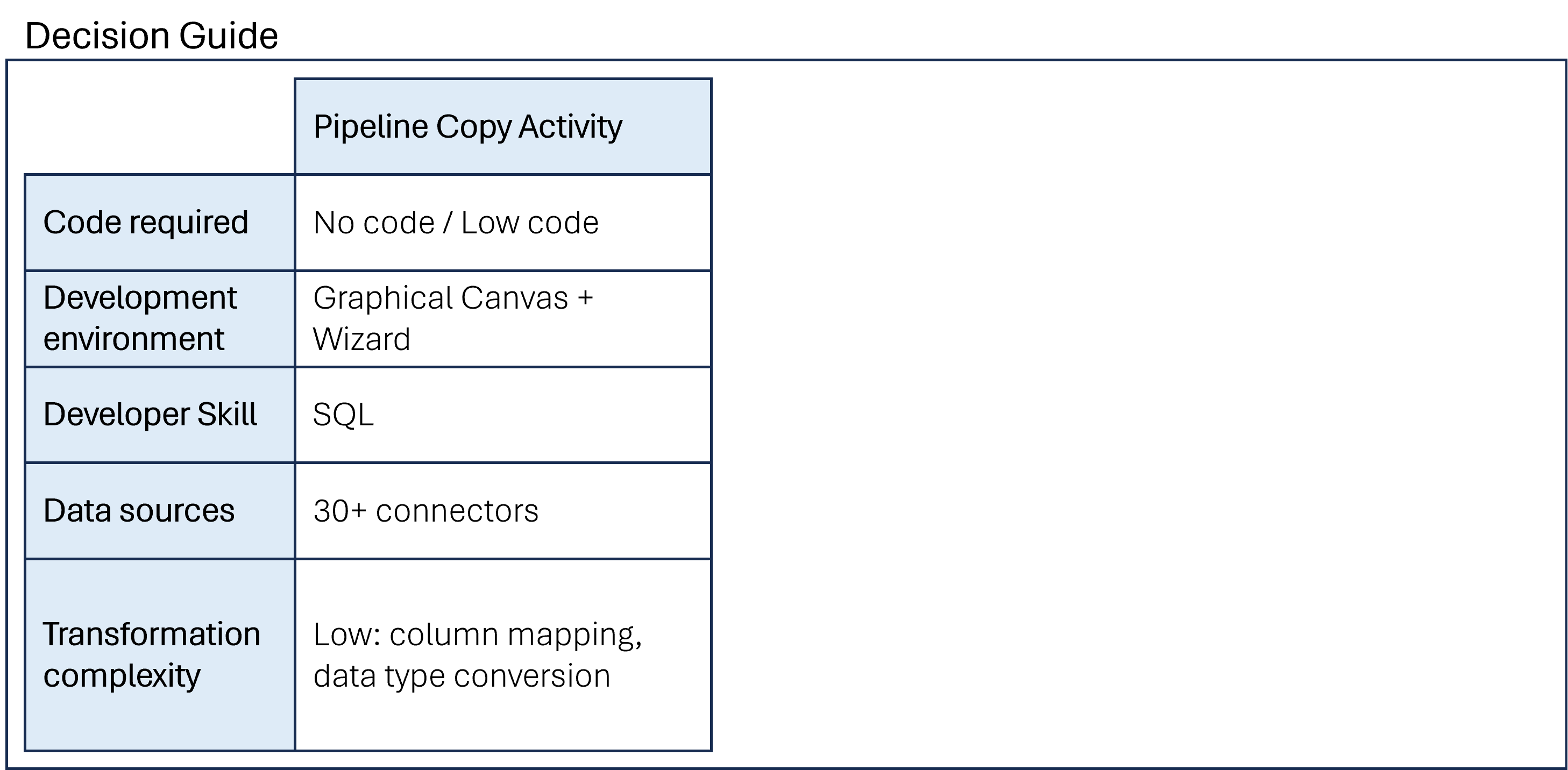

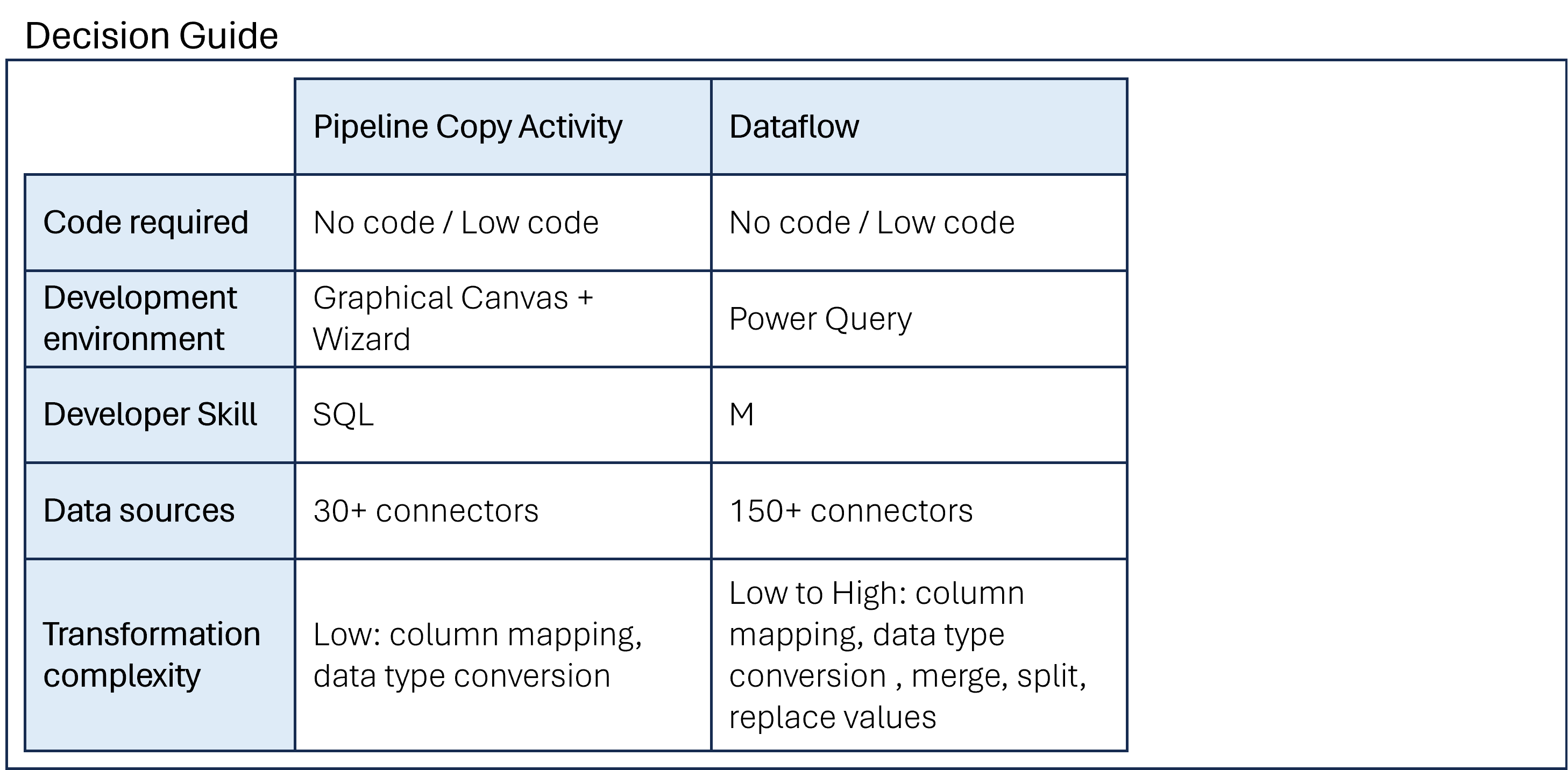

Choosing a Data Copy Tool

Let's practice!
