Delta Lake table optimization

Transform and Analyze Data with Microsoft Fabric

Luis Silva

Solution Architect - Data & AI

What is Delta Lake?

- Open source storage layer for lakehouses

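- ACID transactions, scalable metadata handling and data versioning (time travel)

- Fabric uses the Delta Lake table format (Parquet data files plus a transaction log) as its standard table format

- The same tables are interoperable across Fabric experiences (for example Spark, SQL and Power BI)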
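Versioning works because every change to a Delta table is recorded as a commit in a transaction log (the `_delta_log` folder) that adds or removes data files. The minimal sketch below models that idea in plain Python under simplifying assumptions: the `Commit` type and the `snapshot` helper are hypothetical, and real commits are JSON files with richer actions (metadata, protocol, commit info) plus periodic checkpoints. Replaying the log up to a given version reconstructs the set of files that make up that version of the table.

```python
from typing import List, Set, Tuple

# Hypothetical, simplified commit: a list of ("add" | "remove", file_path) actions.
Commit = List[Tuple[str, str]]


def snapshot(commits: List[Commit], version: int) -> Set[str]:
    """Replay commits 0..version and return the data files live at that version."""
    if not 0 <= version < len(commits):
        raise ValueError(f"version {version} does not exist")
    live: Set[str] = set()
    for commit in commits[: version + 1]:
        for action, path in commit:
            if action == "add":
                live.add(path)
            elif action == "remove":
                live.discard(path)  # logical delete: the file stays in storage
            else:
                raise ValueError(f"unknown action {action!r}")
    return live


log: List[Commit] = [
    [("add", "part-000.parquet"), ("add", "part-001.parquet")],  # v0: initial load
    [("add", "part-002.parquet")],                               # v1: append
    [("remove", "part-000.parquet"), ("remove", "part-001.parquet"),
     ("remove", "part-002.parquet"), ("add", "part-003.parquet")],  # v2: compaction
]

print(sorted(snapshot(log, 1)))  # ['part-000.parquet', 'part-001.parquet', 'part-002.parquet']
print(sorted(snapshot(log, 2)))  # ['part-003.parquet']
```

The files removed in version 2 are only removed logically: they stay in storage so that version 1 can still be read, and these leftover files are what Vacuum later cleans up.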

Table maintenance

- Keep Delta tables in good shape for read performance and storage efficiency

- Table maintenance operations:

  - Optimize

  - V-Order

  - Vacuum

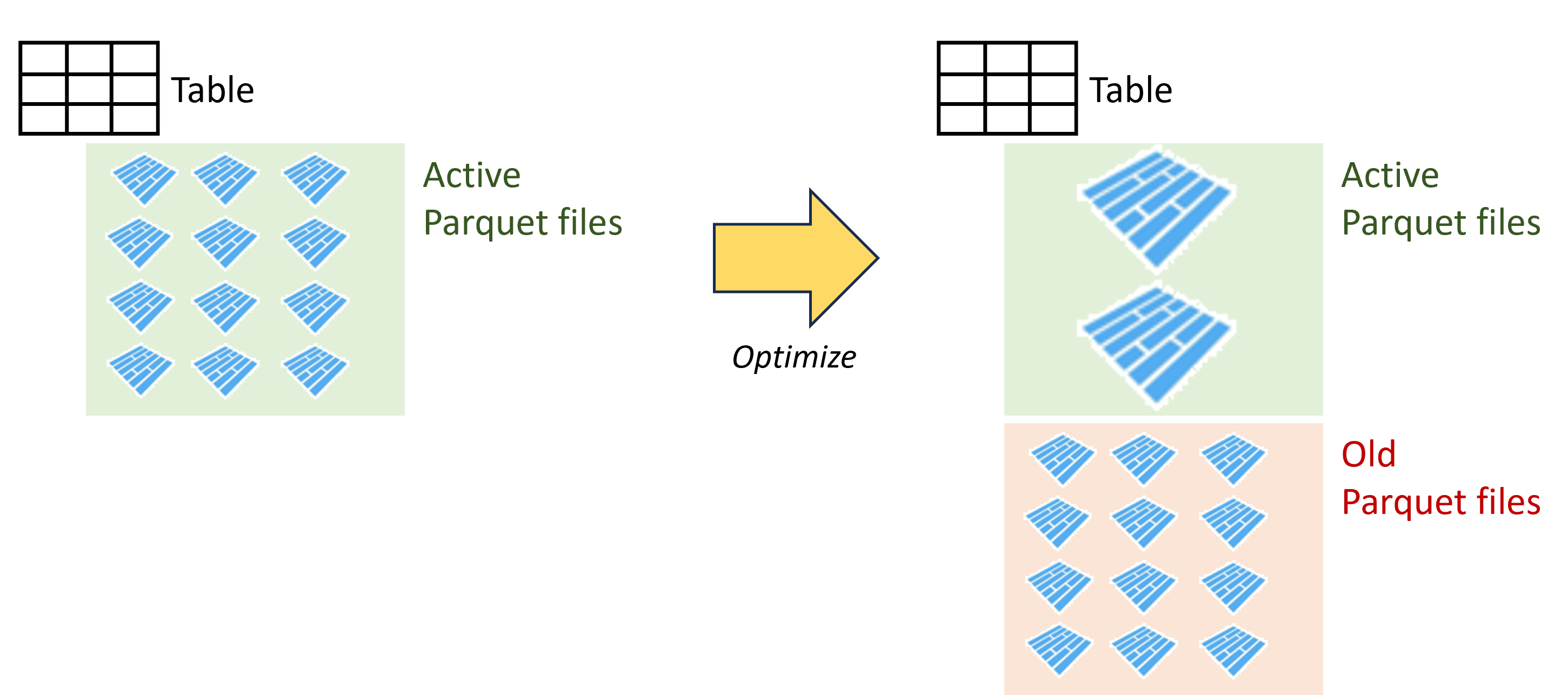

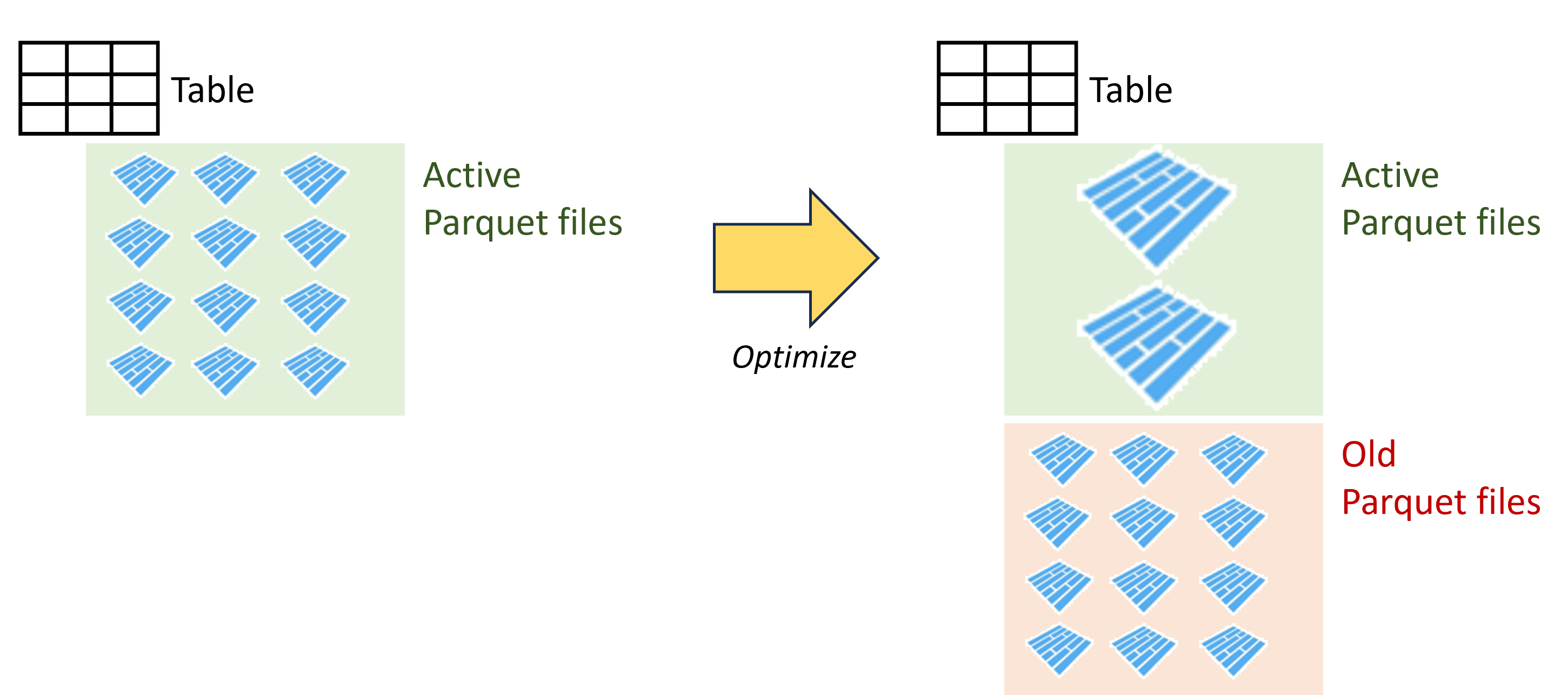

Optimize

- Consolidate many small Parquet files into fewer, larger files

- Ideal file size between 128 MB and 1 GB

- Improve compression and data distribution, leading to more efficient reads

- Recommended to run Optimize after loading large tables
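Delta Lake describes this compaction as bin-packing: small files are grouped so that each rewritten file lands near a target size. The plain-Python sketch below plans such groups with a greedy first-fit-decreasing heuristic; it is an illustration, not the algorithm Fabric or Delta Lake actually runs. The `plan_compaction` function, its 1024 MB target and the rule that files of 128 MB or more are left alone are assumptions chosen to match the 128 MB to 1 GB range above.

```python
from typing import List

TARGET_MB = 1024  # assumed target size per output file (~1 GB)
MIN_MB = 128      # assumed: files at least this large are already "big enough"


def plan_compaction(
    sizes_mb: List[int], target_mb: int = TARGET_MB, min_mb: int = MIN_MB
) -> List[List[int]]:
    """Group small files into bins whose total size stays within target_mb.

    Uses greedy first-fit decreasing: the largest small files are placed first,
    each into the first bin that still has room, else into a new bin.
    """
    small = sorted((s for s in sizes_mb if s < min_mb), reverse=True)
    bins: List[List[int]] = []
    totals: List[int] = []
    for size in small:
        for i, total in enumerate(totals):
            if total + size <= target_mb:
                bins[i].append(size)
                totals[i] += size
                break
        else:  # no existing bin had room
            bins.append([size])
            totals.append(size)
    return bins


files = [50] * 40 + [300]  # 40 small files plus one file already in the ideal range
plan = plan_compaction(files)
print(len(plan))               # 2
print([sum(b) for b in plan])  # [1000, 1000]
```

Here 40 files of 50 MB are planned into 2 files of about 1 GB, while the 300 MB file is not rewritten, so 41 files become 3.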

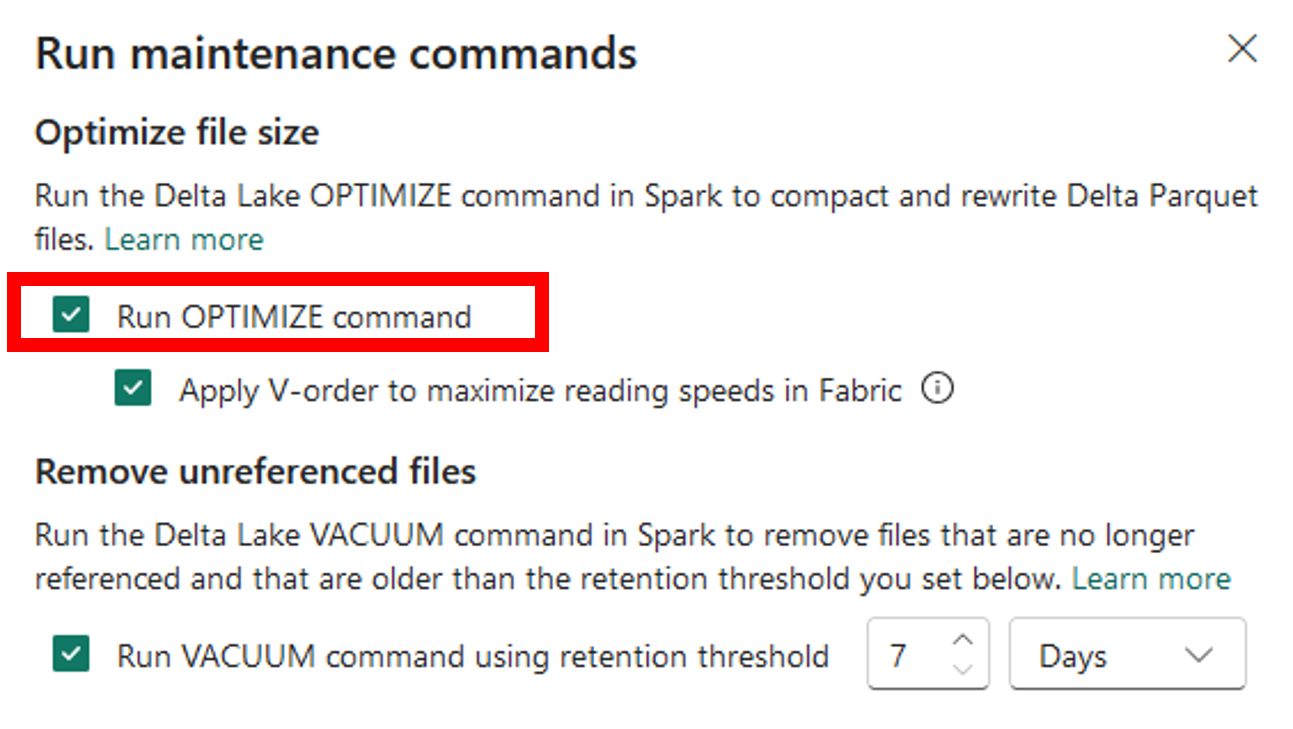

Running the Optimize command from Lakehouse explorer

Running the Optimize command in Spark SQL

%%sql

OPTIMIZE <lakehouse>.<table>;

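Running the Optimize command in PySpark

from delta.tables import DeltaTable

# `spark` is the SparkSession provided by Fabric notebooks;
# "Tables/<table>" resolves against the notebook's default lakehouse
dt = DeltaTable.forPath(spark, "Tables/<table>")

# Compact small files into larger ones; returns a DataFrame of compaction metrics
dt.optimize().executeCompaction()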

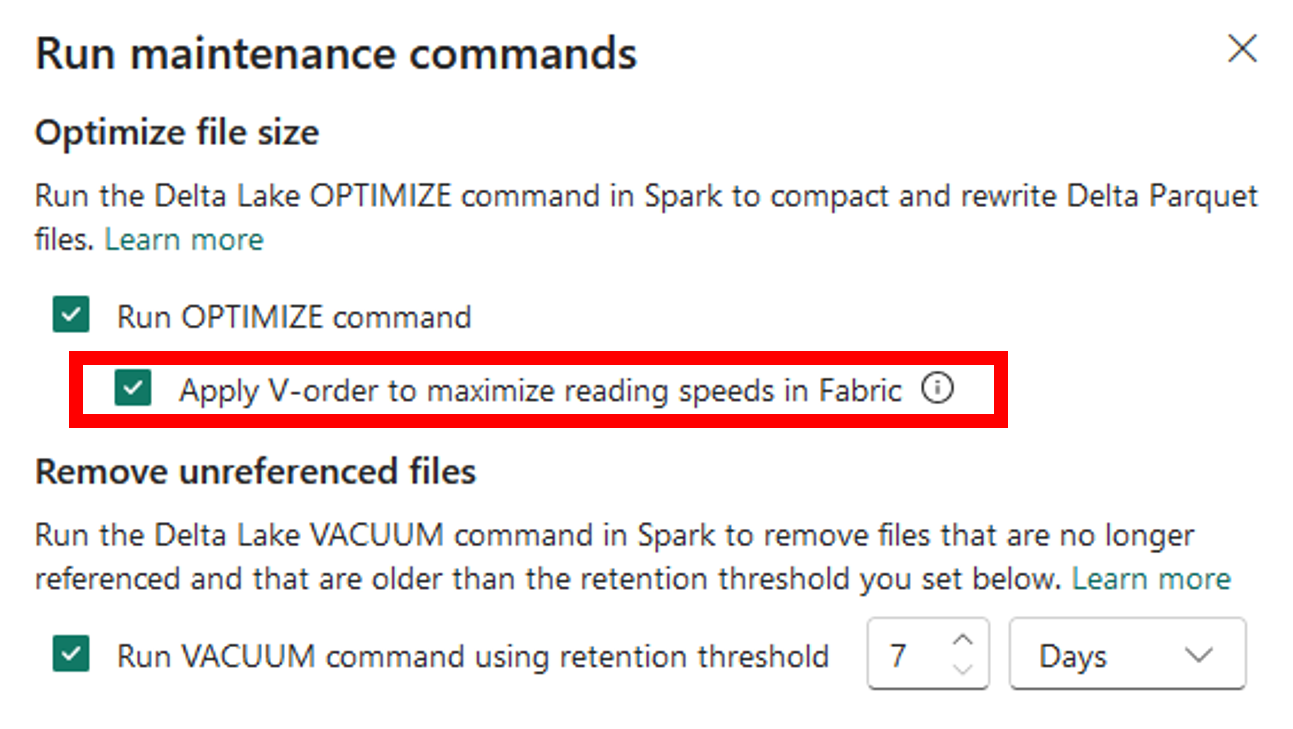

V-Order

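- Write-time optimization of Parquet files (special sorting, row group distribution, dictionary encoding and compression) that speeds up reads by Fabric compute engines such as Power BI, SQL and Spark

- Enabled by default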
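The actual V-Order implementation is not reproduced here. The sketch below only illustrates the general principle behind its sorting step: once values are sorted, repeats sit next to each other, so an encoding such as run-length encoding has far fewer runs to store. The `run_length_encode` helper and the sample `column` are hypothetical.

```python
from itertools import groupby
from typing import List, Tuple


def run_length_encode(values: List[str]) -> List[Tuple[str, int]]:
    """Collapse consecutive repeats into (value, count) pairs."""
    return [(value, sum(1 for _ in group)) for value, group in groupby(values)]


column = ["EU", "US", "EU", "APAC", "US", "EU", "APAC", "US"]

unsorted_runs = run_length_encode(column)
sorted_runs = run_length_encode(sorted(column))

print(len(unsorted_runs))  # 8: no adjacent repeats, nothing to collapse
print(sorted_runs)         # [('APAC', 2), ('EU', 3), ('US', 3)]
```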

Applying V-Order from Lakehouse explorer

Controlling V-Order writing in an Apache Spark session

Enable V-Order for the session

%%sql
SET spark.sql.parquet.vorder.enabled=TRUE

Disable V-Order for the session

%%sql
SET spark.sql.parquet.vorder.enabled=FALSE

Applying V-Order when optimizing a table

%%sql

OPTIMIZE <table|fileOrFolderPath> VORDER;

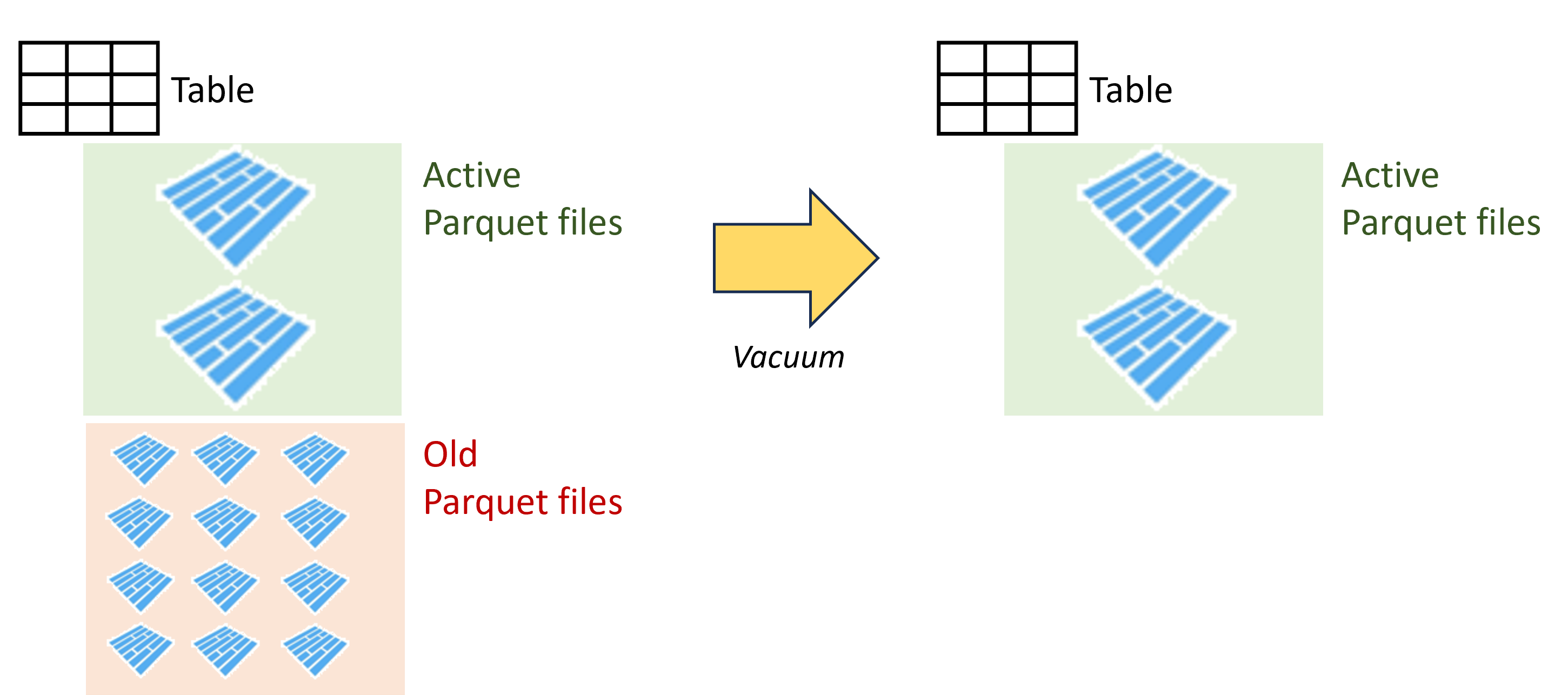

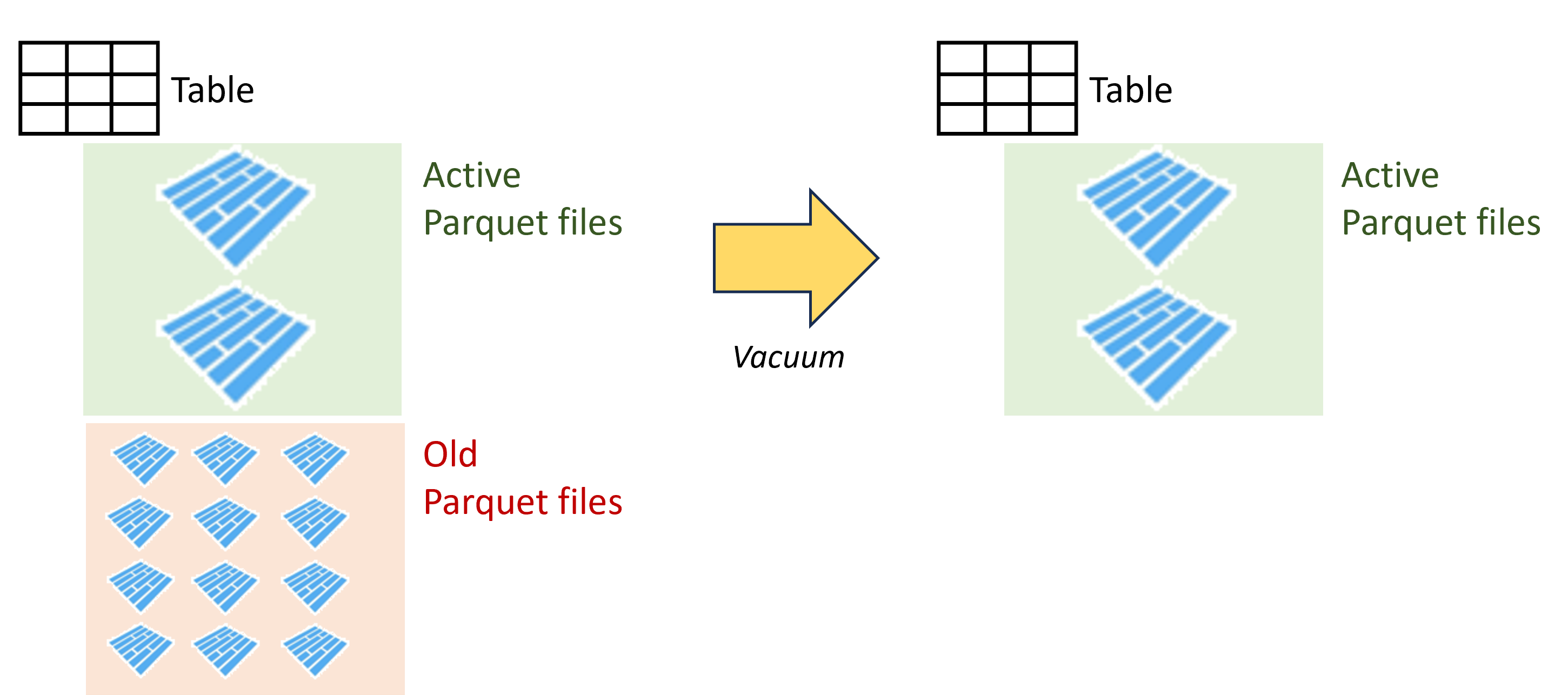

Vacuum

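- Remove files that are no longer referenced by the Delta table and are older than the retention threshold (7 days by default)

- After vacuuming, time travel to versions older than the retention threshold is no longer possible

- Reduce cloud storage costs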
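The Vacuum rule can be expressed as a simple filter: a file is deleted only if the current table version no longer references it and it is older than the retention threshold. The plain-Python sketch below applies that rule. The `files_to_vacuum` function and the sample data are hypothetical, and the sketch simplifies by giving each file a single timestamp, whereas Delta Lake records when a file was removed in its transaction log. The 7-day default mirrors Delta Lake's default retention period.

```python
from datetime import datetime, timedelta
from typing import Dict, Set

DEFAULT_RETENTION = timedelta(days=7)  # Delta Lake's default retention threshold


def files_to_vacuum(
    all_files: Dict[str, datetime],
    referenced: Set[str],
    now: datetime,
    retention: timedelta = DEFAULT_RETENTION,
) -> Set[str]:
    """Return files that are unreferenced AND whose timestamp is older than the cutoff."""
    cutoff = now - retention
    return {
        path
        for path, timestamp in all_files.items()
        if path not in referenced and timestamp < cutoff
    }


now = datetime(2024, 6, 30)
storage = {
    "part-000.parquet": datetime(2024, 6, 1),   # removed by an old compaction
    "part-001.parquet": datetime(2024, 6, 28),  # removed recently, inside retention
    "part-003.parquet": datetime(2024, 6, 1),   # still referenced by the table
}
live = {"part-003.parquet"}

print(files_to_vacuum(storage, live, now))  # {'part-000.parquet'}
```

With `retention=timedelta(days=1)`, `part-001.parquet` would be returned as well, while the referenced `part-003.parquet` is never deleted regardless of its age.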

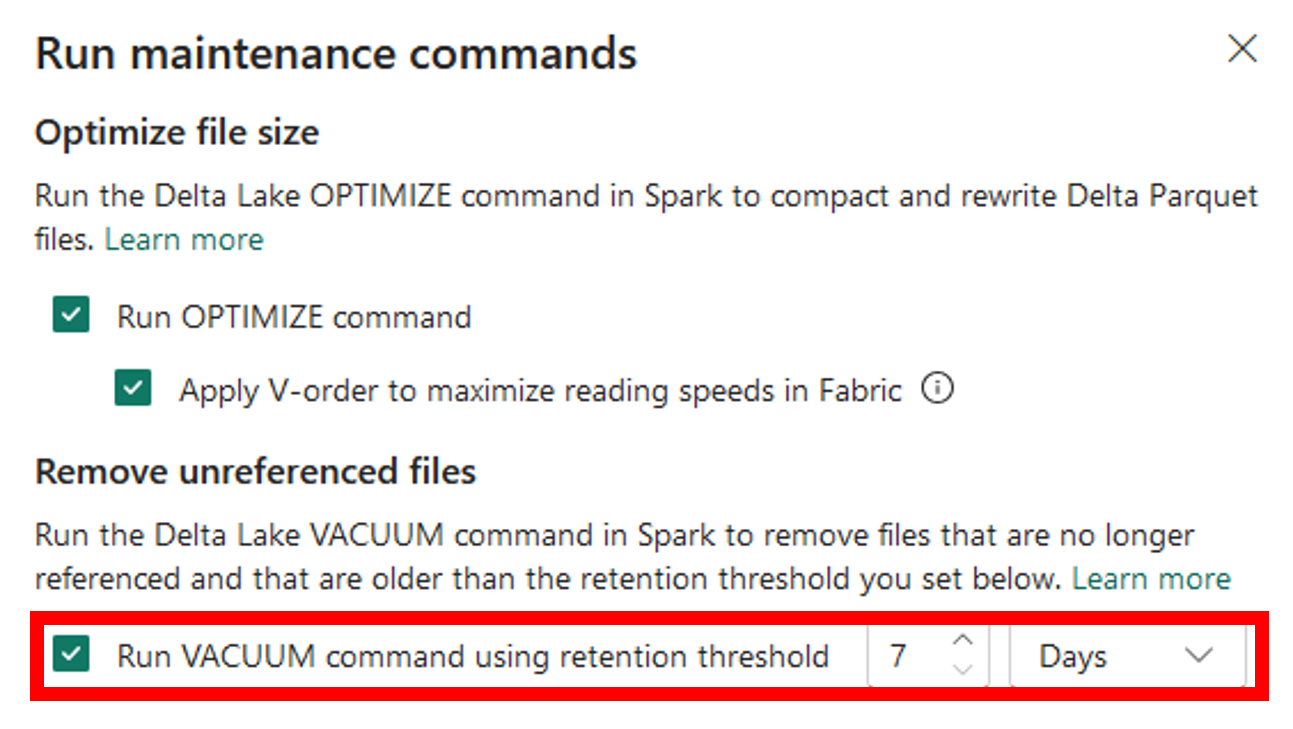

Running Vacuum from Lakehouse explorer

Let's practice!
