Methods for high-quality feedback gathering

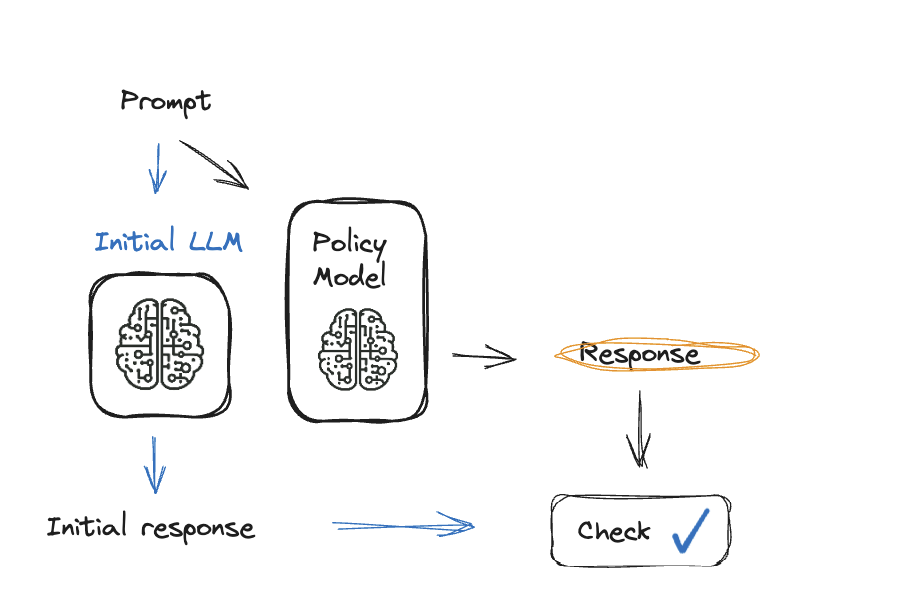

Reinforcement Learning from Human Feedback (RLHF)

Mina Parham

AI Engineer


Pairwise comparisons

- Choosing between two options:

- Advantages: Simple, intuitive, reduces bias

- Challenges: Provides less information per label

- Example: Movie A vs. Movie B: "Which do you prefer?"
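The choice in the example can be stored as a small preference record and tallied into win rates. This is a minimal sketch, not the course's implementation. It assumes each label is a hypothetical `PairwiseLabel` whose `choice` field holds "A", "B", or "tie". Ties are kept as a separate outcome so they do not silently count toward either option.

```python
from collections import Counter
from dataclasses import dataclass

VALID_CHOICES = ("A", "B", "tie")


@dataclass
class PairwiseLabel:
    # Hypothetical record of one annotator's answer to one A-vs-B query
    question: str
    option_a: str
    option_b: str
    choice: str  # one of VALID_CHOICES


def win_rates(labels):
    """Return the percentage of labels that prefer A, prefer B, or are ties."""
    if not labels:
        raise ValueError("no labels to evaluate")
    counts = Counter(label.choice for label in labels)
    unknown = set(counts) - set(VALID_CHOICES)
    if unknown:
        raise ValueError(f"unexpected choices: {unknown}")
    return {c: 100 * counts[c] / len(labels) for c in VALID_CHOICES}


labels = [
    PairwiseLabel("Which do you prefer?", "Movie A", "Movie B", "A"),
    PairwiseLabel("Which do you prefer?", "Movie A", "Movie B", "A"),
    PairwiseLabel("Which do you prefer?", "Movie A", "Movie B", "B"),
    PairwiseLabel("Which do you prefer?", "Movie A", "Movie B", "tie"),
]
print(win_rates(labels))  # {'A': 50.0, 'B': 25.0, 'tie': 25.0}
```

Each label carries only one of three outcomes. This is the "less information per label" trade-off listed above.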

Pairwise comparisons

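def evaluate_responses(responses_A, responses_B):
    # Each list holds (response, score) pairs; the i-th pair of A is compared with the i-th pair of B
    wins_A, wins_B = 0, 0
    for (response_A, score_A), (response_B, score_B) in zip(responses_A, responses_B):
        if score_A > score_B:
            wins_A += 1
        elif score_B > score_A:
            wins_B += 1
        # A tie counts as a win for neither side instead of defaulting to B
    num_pairs = min(len(responses_A), len(responses_B))  # zip stops at the shorter list
    success_rate_A = (wins_A / num_pairs) * 100
    success_rate_B = (wins_B / num_pairs) * 100
    return success_rate_A, success_rate_B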

Ratings

- Assigning a score on a scale:

- Advantages: Provides more detailed feedback

- Challenges: Prone to biases, inconsistent scales

- Example: Movie A: 4/5, Movie B: 3/5
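Inconsistent scales mean that one annotator's 4/5 may express the same opinion as another annotator's 2/5. One common mitigation, which the slides do not prescribe, is to convert each annotator's ratings into z-scores before comparing items. This is a minimal sketch. It assumes ratings arrive as a dictionary that maps a hypothetical annotator ID to `{item: raw score}`.

```python
from statistics import mean, pstdev


def normalize_per_annotator(ratings):
    """Convert raw ratings to z-scores computed separately for each annotator."""
    normalized = {}
    for annotator, scores in ratings.items():
        mu = mean(scores.values())
        sigma = pstdev(scores.values())
        # An annotator who gives every item the same score carries no ranking signal
        normalized[annotator] = {
            item: 0.0 if sigma == 0 else (score - mu) / sigma
            for item, score in scores.items()
        }
    return normalized


ratings = {
    "annotator_1": {"Movie A": 4, "Movie B": 3},  # a generous rater
    "annotator_2": {"Movie A": 2, "Movie B": 1},  # a harsh rater
}
print(normalize_per_annotator(ratings)["annotator_2"])  # {'Movie A': 1.0, 'Movie B': -1.0}
```

After normalization, both annotators express the same relative preference for Movie A, even though their raw scores differ by two points.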

Psychological factors

- Cognitive Biases:

- Framing Effect: How a question is presented can influence responses

- Serial Position Effect: The order in which options are presented can affect decisions

- Anchoring: Information seen earlier (for example, a previous rating) biases current decisions
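A rough way to check collected pairwise labels for a serial position effect is to measure how often annotators choose whichever option was shown first. Without such an effect, that rate should be close to 50%. This is a minimal sketch. It assumes each hypothetical record is a `(shown_first, chosen)` pair of option IDs, and it uses a normal approximation to the binomial that is only reasonable for large samples.

```python
import math


def first_position_rate(records):
    """Return the share of choices that went to the first-shown option, plus a z-score."""
    n = len(records)
    if n == 0:
        raise ValueError("no records")
    k = sum(1 for shown_first, chosen in records if chosen == shown_first)
    rate = k / n
    # z-score of k against a fair coin (p = 0.5): mean n/2, variance n/4
    z = (k - 0.5 * n) / math.sqrt(0.25 * n)
    return rate, z


records = [("A", "A"), ("B", "B"), ("A", "A"), ("B", "A"),
           ("A", "A"), ("B", "B"), ("A", "B"), ("B", "B")]
rate, z = first_position_rate(records)
print(f"first-position rate = {rate:.0%}, z = {z:.2f}")  # first-position rate = 75%, z = 1.41
```

With only eight records, a 75% rate is not strong evidence of bias (|z| < 2). The same check on thousands of labels would be far more informative.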

Guidelines for collecting high-quality feedback

- Cognitive load: tired users give inconsistent feedback

- Carefully phrase questions to reduce the risks from cognitive load

- Randomize query order to minimize bias from anchoring and framing

- Collect diverse data to mitigate noise
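Two of these guidelines can be sketched in code:

- Randomizing the query order, including which option appears first, independently for each annotator.
- Collecting several labels per query and aggregating them by majority vote.

This is a minimal sketch, not the course's method. The per-annotator seed and the function names `build_queue` and `majority_label` are hypothetical. Majority voting is one simple aggregation choice among several.

```python
import random
from collections import Counter


def build_queue(pairs, annotator_seed):
    """Shuffle query order and randomly swap display positions for one annotator."""
    rng = random.Random(annotator_seed)  # reproducible order per annotator
    queue = list(pairs)
    rng.shuffle(queue)  # randomize the order in which queries appear
    # Randomly decide which item is displayed first in each query
    return [(b, a) if rng.random() < 0.5 else (a, b) for a, b in queue]


def majority_label(votes):
    """Return the most common choice and the share of annotators who agree with it."""
    if not votes:
        raise ValueError("no votes")
    label, top = Counter(votes).most_common(1)[0]
    return label, top / len(votes)


pairs = [("Movie A", "Movie B"), ("Movie A", "Movie C"), ("Movie B", "Movie C")]
print(build_queue(pairs, annotator_seed=1))
print(build_queue(pairs, annotator_seed=2))
print(majority_label(["Movie A", "Movie A", "Movie B"]))  # ('Movie A', 0.6666666666666666)
```

A low agreement share flags a query where labels are noisy. Such queries may deserve rephrasing or additional annotators.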

Let's practice!
