Reward models explored

Reinforcement Learning from Human Feedback (RLHF)

Mina Parham

AI Engineer

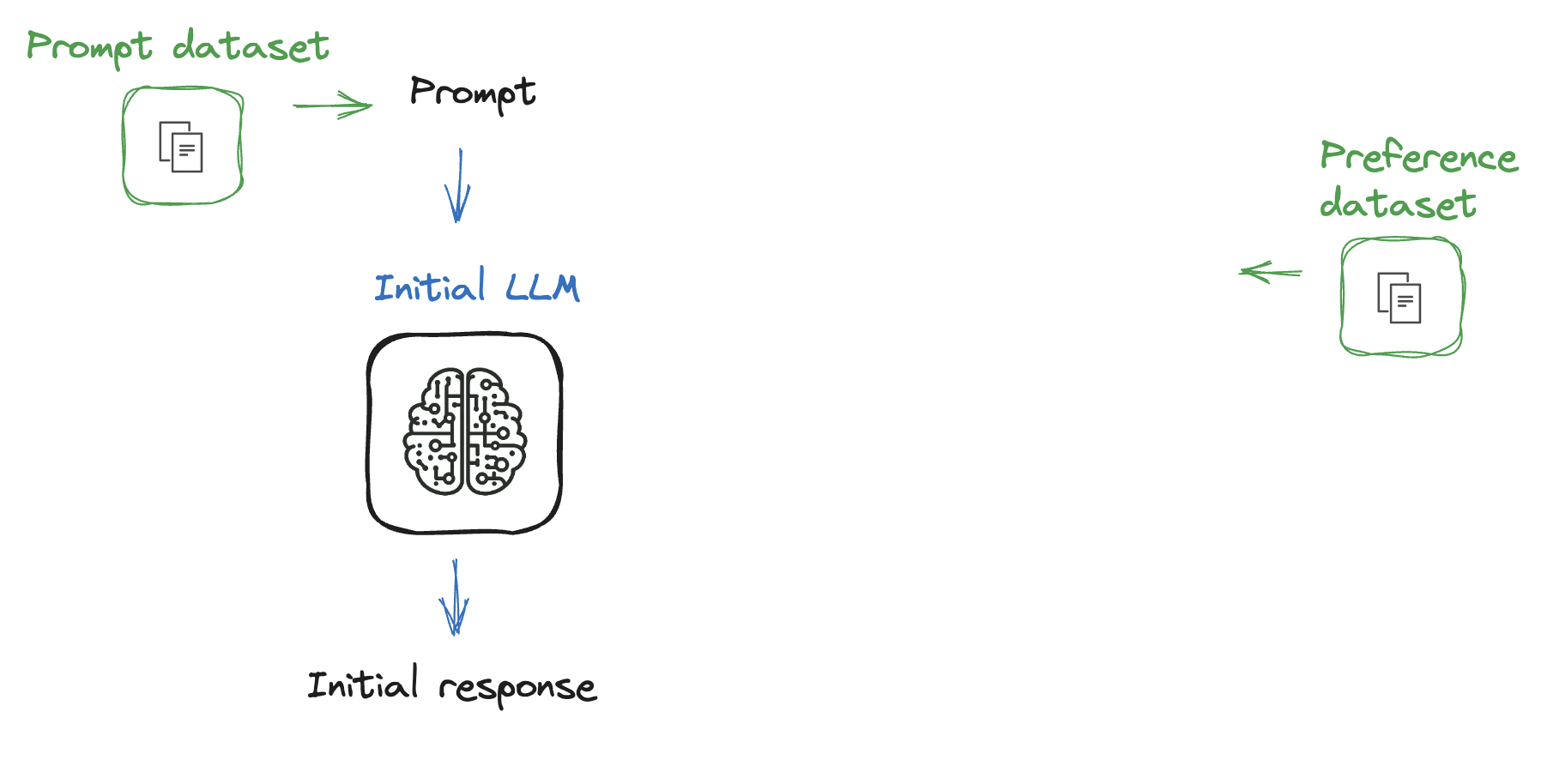

Process so far

What is a reward model?

- The reward model scores the agent's outputs, telling it which responses people prefer

- The agent is trained to maximize the rewards the model assigns
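To make "scoring" concrete, here is a minimal sketch of a pairwise preference loss of the kind commonly used to train reward models. The slides do not state the objective, so this formulation (negative log-sigmoid of the score margin) is an assumption; the function name and the example scores are hypothetical.

```python
import math


def pairwise_reward_loss(chosen_score: float, rejected_score: float) -> float:
    """Return -log(sigmoid(chosen_score - rejected_score)).

    The loss is small when the preferred response scores higher than the
    rejected one, and grows as the ordering becomes more wrong.
    """
    margin = chosen_score - rejected_score
    # softplus(-margin) equals -log(sigmoid(margin)); this form avoids overflow
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


# Hypothetical scores a reward model might assign to two responses
loss_correct = pairwise_reward_loss(chosen_score=2.0, rejected_score=-1.0)
loss_wrong = pairwise_reward_loss(chosen_score=-1.0, rejected_score=2.0)
print(f"correct ordering: {loss_correct:.4f}, wrong ordering: {loss_wrong:.4f}")
```

Minimizing a loss like this pushes the model to give the preferred response the higher score, which is the signal the agent later tries to maximize.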

Using the reward trainer

from trl import RewardTrainer, RewardConfig
from transformers import AutoModelForSequenceClassification, AutoTokenizer
from datasets import load_dataset

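# Load pre-trained model and tokenizer
model = AutoModelForSequenceClassification.from_pretrained("gpt2", num_labels=1)
tokenizer = AutoTokenizer.from_pretrained("gpt2")

# GPT-2 has no padding token, so reuse the end-of-sequence token for batching
tokenizer.pad_token = tokenizer.eos_token
model.config.pad_token_id = tokenizer.pad_token_id

# Load dataset in the required format
dataset = load_dataset("path/to/dataset")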
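The slides do not spell out "the required format". As a rough sketch, assuming a preference dataset with one `chosen` and one `rejected` text per row (the exact columns expected can differ between TRL versions), the records might look like the following. The example texts and the `find_invalid_records` helper are hypothetical.

```python
# Hypothetical preference records; the texts are invented for illustration
records = [
    {
        "chosen": "Question: What is 2 + 2?\nAnswer: 4",
        "rejected": "Question: What is 2 + 2?\nAnswer: 5",
    },
    {
        "chosen": "Question: Name a primary color.\nAnswer: Red",
        "rejected": "Question: Name a primary color.\nAnswer: Triangle",
    },
]

# Assumed column names for a pairwise preference dataset
REQUIRED_COLUMNS = ("chosen", "rejected")


def find_invalid_records(rows):
    """Return indices of rows missing a required column or holding empty text."""
    bad_indices = []
    for index, row in enumerate(rows):
        for column in REQUIRED_COLUMNS:
            value = row.get(column)
            if not isinstance(value, str) or not value.strip():
                bad_indices.append(index)
                break
    return bad_indices


invalid = find_invalid_records(records)
print(invalid)
```

A list like this can typically be converted with `datasets.Dataset.from_list(records)` before being passed to a trainer; checking the rows first makes format problems surface before training starts.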

Training the reward model

# Define training arguments
training_args = RewardConfig(
    output_dir="path/to/output/dir",
    per_device_train_batch_size=8,
    per_device_eval_batch_size=8,
    num_train_epochs=3,
    learning_rate=1e-3,
)

Training the reward model

# Initialize the RewardTrainer
trainer = RewardTrainer(
    model=model,
    args=training_args,
    train_dataset=dataset["train"],
    eval_dataset=dataset["validation"],
    tokenizer=tokenizer,
)

# Train the reward model
trainer.train()
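After training, one simple sanity check is pairwise accuracy: the fraction of held-out pairs where the model scores the chosen response above the rejected one. The sketch below assumes the scores have already been computed; the `pairwise_accuracy` helper and the score values are hypothetical and not part of the TRL API.

```python
def pairwise_accuracy(chosen_scores, rejected_scores):
    """Fraction of pairs where the chosen response outscores the rejected one."""
    if len(chosen_scores) != len(rejected_scores):
        raise ValueError("score lists must have the same length")
    if not chosen_scores:
        raise ValueError("at least one pair is required")
    correct = sum(c > r for c, r in zip(chosen_scores, rejected_scores))
    return correct / len(chosen_scores)


# Hypothetical reward scores for four validation pairs
chosen_scores = [1.8, 0.4, -0.2, 2.5]
rejected_scores = [0.3, 0.9, -1.1, 1.0]

accuracy = pairwise_accuracy(chosen_scores, rejected_scores)
print(f"pairwise accuracy: {accuracy:.2f}")
```

An accuracy near 0.5 would suggest the model has learned little about the preferences, since that is what random scoring would achieve.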

Let's practice!
