Efficient fine-tuning in RLHF

Reinforcement Learning from Human Feedback (RLHF)

Mina Parham

AI Engineer

Parameter-efficient fine-tuning

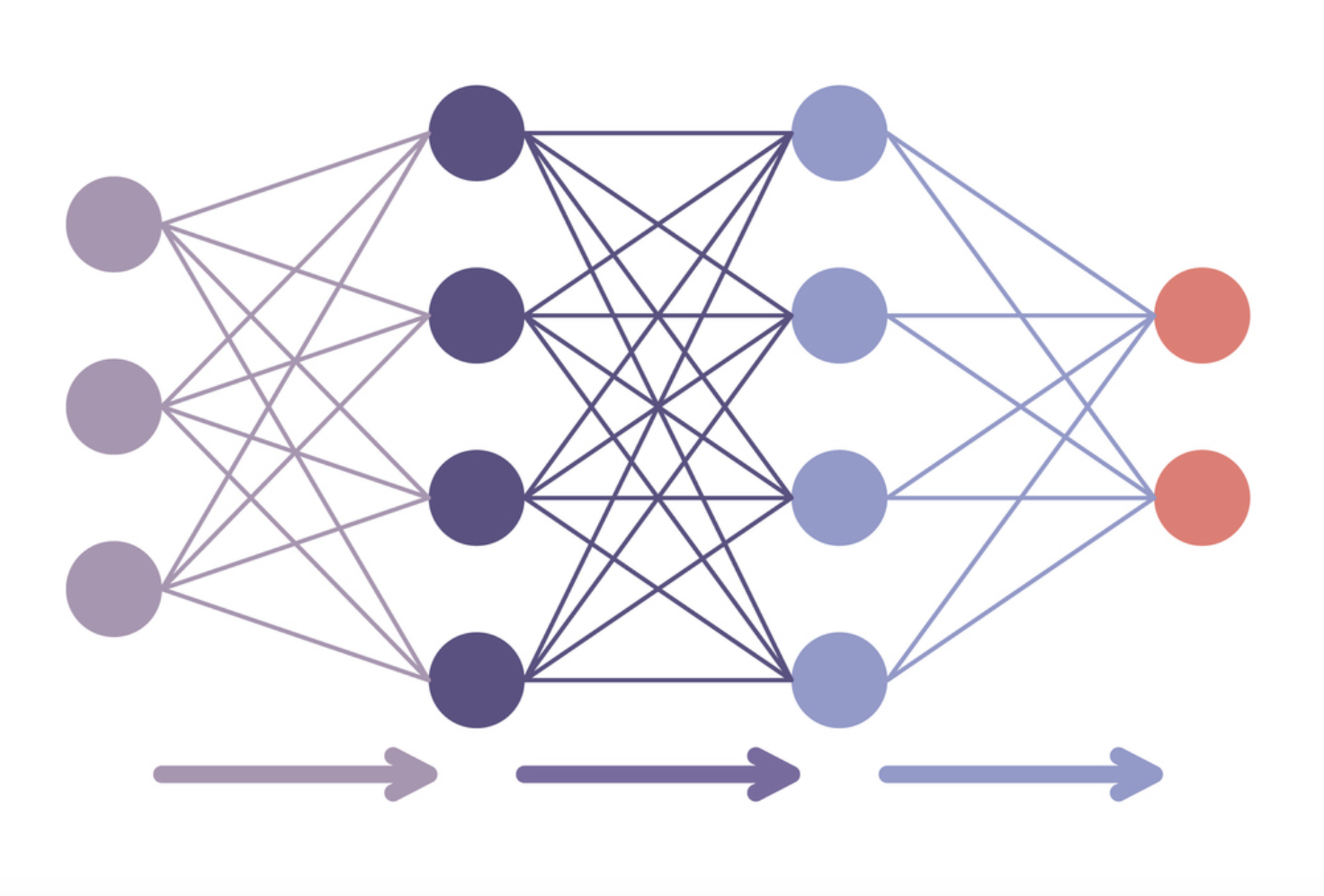

- Fine-tuning a full model


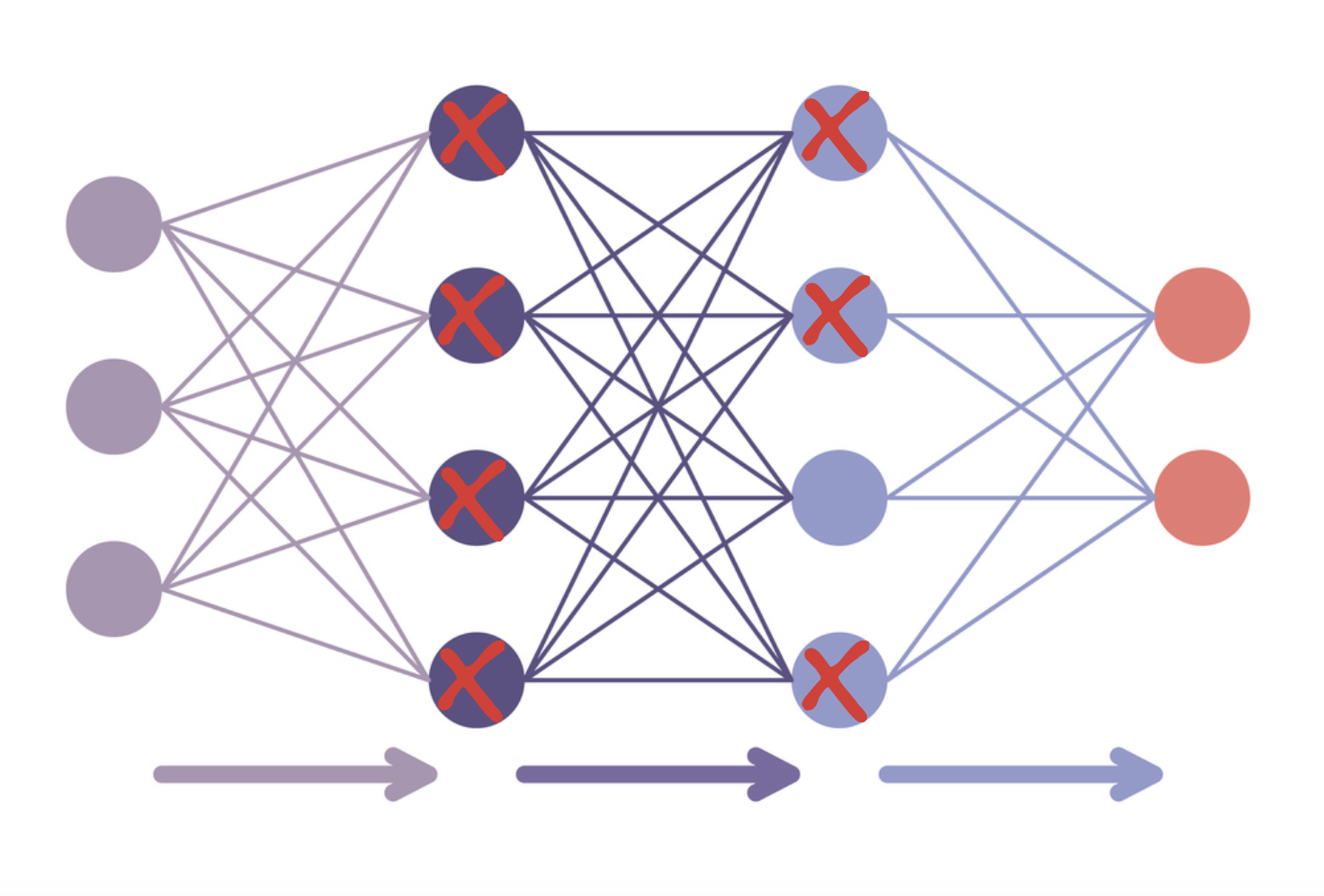

- Fine-tuning with PEFT

- LoRA (Low-Rank Adaptation): freezes the original weights and trains small low-rank adapter matrices added to selected layers

- Quantization: stores weights at lower numerical precision (e.g., 8-bit integers instead of 32-bit floats) to cut memory use
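To make the savings concrete, here is a small arithmetic sketch. It assumes a hypothetical 4096 x 4096 weight matrix (not a size from the slides). LoRA learns the update to a d x k matrix as the product of B (d x r) and A (r x k), so only r(d + k) values are trained; quantization changes how many bits each stored weight occupies (the small overhead of stored scaling factors is ignored here).

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when a d x k weight matrix is fully fine-tuned."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters of a rank-r LoRA update B @ A, with B: d x r and A: r x k."""
    return r * (d + k)


def weight_megabytes(n_params: int, bits: int) -> float:
    """Storage for n_params weights at a given bit width, in megabytes (10**6 bytes)."""
    return n_params * bits / 8 / 1e6


d = k = 4096  # hypothetical size of one projection matrix
print(full_params(d, k))                              # 16777216
print(lora_params(d, k, r=32))                        # 262144 (about 1.6% of the full count)
print(weight_megabytes(full_params(d, k), bits=32))   # 67.108864 (32-bit floats)
print(weight_megabytes(full_params(d, k), bits=8))    # 16.777216 (8-bit integers)
```

The two techniques compound: LoRA shrinks what has to be trained, and quantization shrinks what has to be stored for the frozen weights.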

Step 1: load your active model in 8-bit precision

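from transformers import AutoModelForCausalLM, BitsAndBytesConfig
from peft import prepare_model_for_kbit_training  # successor to the deprecated prepare_model_for_int8_training
pretrained_model = AutoModelForCausalLM.from_pretrained(
    model_name, quantization_config=BitsAndBytesConfig(load_in_8bit=True)  # load weights in 8-bit
)
pretrained_model_8bit = prepare_model_for_kbit_training(pretrained_model)  # freezes base weights for training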
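What does "8-bit precision" mean for a weight? The sketch below shows the basic idea with simple per-tensor absmax quantization: scale the values so the largest magnitude maps to 127, round to integers, and keep the scale to map back. This is an illustrative assumption, not the exact scheme behind 8-bit loading in Step 1; the bitsandbytes backend is more elaborate (it quantizes row- and column-wise and keeps outlier features in higher precision).

```python
def quantize_absmax_int8(values: list[float]) -> tuple[list[int], float]:
    """Symmetric absmax quantization: scale floats so the largest magnitude maps to 127."""
    absmax = max(abs(v) for v in values)
    scale = 127 / absmax if absmax > 0 else 1.0
    codes = [round(v * scale) for v in values]  # integers in [-127, 127], storable as int8
    return codes, scale


def dequantize_int8(codes: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate floats using the stored scale."""
    return [c / scale for c in codes]


weights = [0.5, -2.0, 0.1, 1.0]
codes, scale = quantize_absmax_int8(weights)
print(codes)  # [32, -127, 6, 64]
restored = dequantize_int8(codes, scale)
print(max(abs(w - r) for w, r in zip(weights, restored)))  # rounding error, at most 0.5 / scale
```

Each weight now needs one byte instead of four, at the cost of a small rounding error; that error is why the quantized base weights stay frozen while the adapters added in Step 2 do the learning.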

Step 2: add extra trainable adapters using peft

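from peft import LoraConfig, get_peft_model
from trl import AutoModelForCausalLMWithValueHead
lora_config = LoraConfig(
    r=32,  # Rank of the low-rank matrices
    lora_alpha=32,  # Scaling factor for the LoRA updates
    lora_dropout=0.1,  # Dropout rate for LoRA layers
    bias="lora_only",  # Train only the biases of LoRA-adapted layers; all other biases stay frozen
    task_type="CAUSAL_LM",  # Makes get_peft_model return a causal-LM PEFT model, which TRL expects
)
lora_model = get_peft_model(pretrained_model_8bit, lora_config)
model = AutoModelForCausalLMWithValueHead.from_pretrained(lora_model)  # adds the value head PPO needs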
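The adapters added in Step 2 can be pictured as a minimal pure-Python sketch of one LoRA layer: the frozen weight W produces W x, and the trainable low-rank pair adds (lora_alpha / r) * B (A x). The class and helper names here are hypothetical, dropout is omitted, and A uses a small Gaussian initialization as an assumption (PEFT's own default initializer may differ); B starts at zero so the adapted layer initially behaves exactly like the pretrained one.

```python
import random


def matvec(m: list[list[float]], x: list[float]) -> list[float]:
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


class LoRALinear:
    """Toy linear layer: frozen weight W (d x k) plus a trainable low-rank update B @ A."""

    def __init__(self, weight: list[list[float]], r: int, lora_alpha: float, seed: int = 0):
        rng = random.Random(seed)
        d, k = len(weight), len(weight[0])
        self.weight = weight  # frozen pretrained weight; never updated
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(k)] for _ in range(r)]  # r x k, small random init
        self.B = [[0.0] * r for _ in range(d)]  # d x r, zero init so B @ A starts at 0
        self.scaling = lora_alpha / r  # the update is scaled by lora_alpha / r

    def forward(self, x: list[float]) -> list[float]:
        base = matvec(self.weight, x)               # W x
        update = matvec(self.B, matvec(self.A, x))  # B (A x), without forming B @ A explicitly
        return [b + self.scaling * u for b, u in zip(base, update)]


layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], r=1, lora_alpha=1)
print(layer.forward([1.0, 1.0]))  # [3.0, 7.0]: identical to W x until B is trained
```

With the slide's settings, r=32 and lora_alpha=32, the scaling factor is 32 / 32 = 1.0, so the learned update is added at full strength.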

Step 3: use one model for both reference and active logits

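from trl import PPOTrainer
ppo_trainer = PPOTrainer(
    config,  # The PPOConfig defined earlier (not the LoraConfig)
    model,  # Our LoRA model with a value head
    ref_model=None,  # For a PEFT model, reference logits come from the same model with adapters disabled
    tokenizer=tokenizer,
    dataset=dataset,
    data_collator=collator,
    optimizer=optimizer,
)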
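Why can ref_model be None here? Because the frozen base weights are untouched by LoRA, switching the adapters off recovers the original model, so one copy in memory can serve as both the active policy and the reference. The sketch below imitates that with a hypothetical toy policy (ToyPeftPolicy and per_token_kl are illustrative names, not TRL APIs) and computes the per-token log-ratio log p_active - log p_ref, the quantity PPO-style RLHF uses as a KL estimate to keep the tuned model close to the reference.

```python
import math
from contextlib import contextmanager


def log_softmax(logits: list[float]) -> list[float]:
    """Numerically stable log-softmax over a vocabulary of logits."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]


class ToyPeftPolicy:
    """Hypothetical stand-in for a PEFT model: frozen base logits plus an adapter delta."""

    def __init__(self, base_logits: list[float], adapter_delta: list[float]):
        self.base_logits = base_logits      # what the frozen pretrained model outputs
        self.adapter_delta = adapter_delta  # what the trainable adapter adds on top
        self.adapter_enabled = True

    @contextmanager
    def disable_adapter(self):
        """Temporarily switch the adapter off so the frozen base model answers."""
        self.adapter_enabled = False
        try:
            yield
        finally:
            self.adapter_enabled = True

    def logits(self) -> list[float]:
        if not self.adapter_enabled:
            return list(self.base_logits)
        return [b + d for b, d in zip(self.base_logits, self.adapter_delta)]


def per_token_kl(policy: ToyPeftPolicy, token_id: int) -> float:
    """log p_active(token) - log p_ref(token), computed with a single model."""
    active_logp = log_softmax(policy.logits())[token_id]  # adapter on: active policy
    with policy.disable_adapter():
        ref_logp = log_softmax(policy.logits())[token_id]  # adapter off: reference model
    return active_logp - ref_logp


policy = ToyPeftPolicy(base_logits=[1.0, 2.0, 0.5], adapter_delta=[0.0, 1.0, 0.0])
print(per_token_kl(policy, token_id=1))  # positive: the adapter made token 1 more likely
```

The design trade-off is one extra forward pass per batch (adapters off) in exchange for not keeping a second full copy of the model in memory.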

Let's practice!
