Exploring pre-trained LLMs

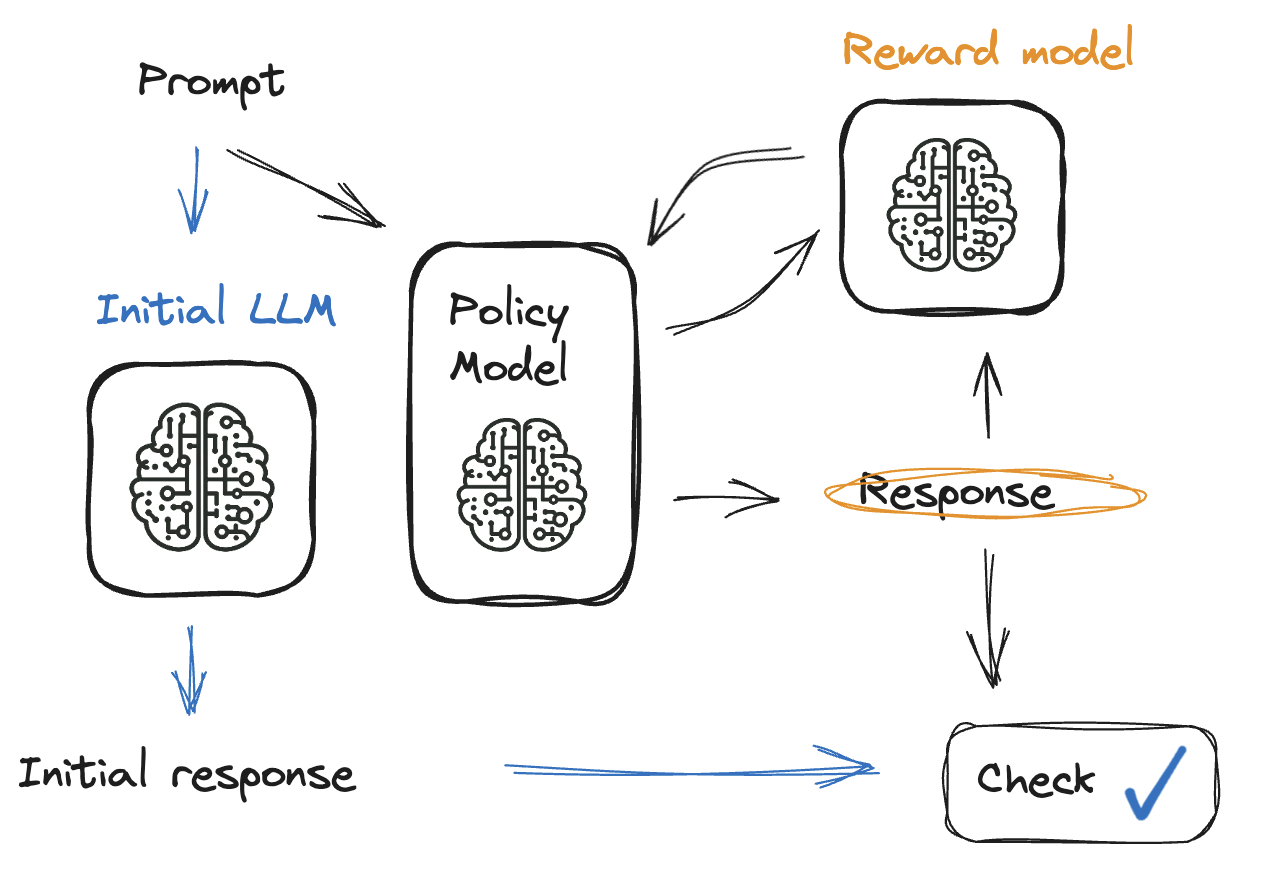

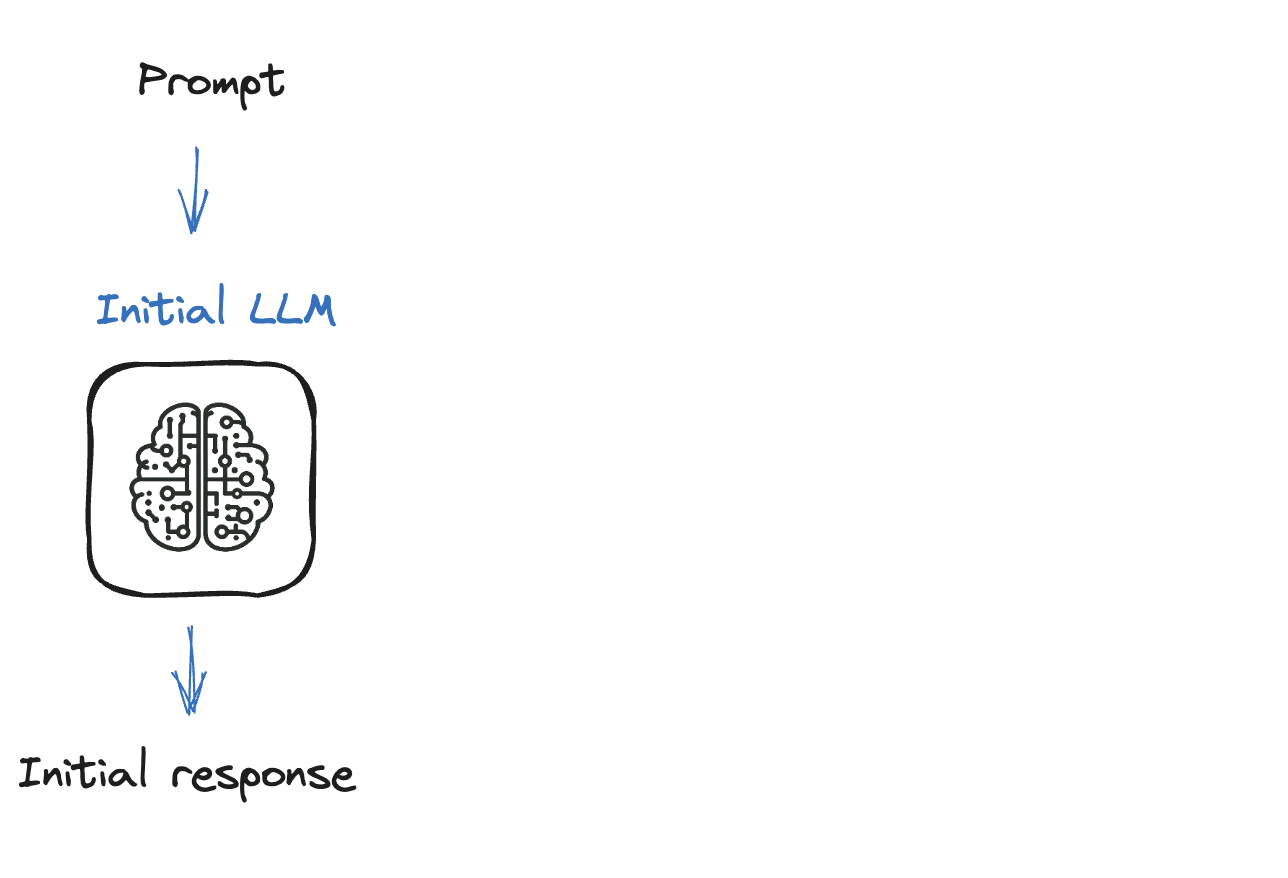

Reinforcement Learning from Human Feedback (RLHF)

Mina Parham

AI Engineer

The importance of fine-tuning

A step-by-step guide to fine-tuning an LLM

Step 1: load the data

from datasets import load_dataset

import pandas as pd

# `load_dataset` simplifies loading and preprocessing datasets from various sources

# It provides easy access to a wide range of datasets with minimal setup

dataset = load_dataset("mteb/tweet_sentiment_extraction")

df = pd.DataFrame(dataset['train'])

   id          text                                      label  label_text
0  cb774db0d1  I'd have responded, if I were going       1      neutral
1  549e992a42  Sooo SAD I will miss you in San Diego!!!  0      negative
2  08ac60f138  my boss is bullying me...                 0      negative
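Each row pairs an integer `label` with a human-readable `label_text`. A minimal sketch of recovering the id-to-name mapping from those two columns, using only the three rows shown above (hard-coded so the snippet runs offline); `build_label_map` is a hypothetical helper, not part of `datasets` or pandas:

```python
# Label columns copied from the preview above; in the real workflow they would come from df
rows = [
    {"label": 1, "label_text": "neutral"},
    {"label": 0, "label_text": "negative"},
    {"label": 0, "label_text": "negative"},
]

def build_label_map(records):
    """Hypothetical helper: map each integer label to its label_text.

    Raises ValueError if one id is paired with two different names.
    """
    mapping = {}
    for r in records:
        name = mapping.setdefault(r["label"], r["label_text"])
        if name != r["label_text"]:
            raise ValueError(f"label {r['label']} maps to both {name!r} and {r['label_text']!r}")
    return dict(sorted(mapping.items()))  # sort by label id for readability

id2label = build_label_map(rows)
print(id2label)  # {0: 'negative', 1: 'neutral'}
```

Only labels 0 and 1 appear in these three rows; the full split may contain further classes, which `df[["label", "label_text"]].drop_duplicates()` would list directly.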

Step 2: choose a pre-trained model

from transformers import AutoModelForCausalLM

# `AutoModelForCausalLM` picks the right causal-LM class for the checkpoint name, so switching models only means changing the string

model = AutoModelForCausalLM.from_pretrained("openai-gpt")

- Causal models: each token is predicted only from the tokens before it, so previous tokens "cause" subsequent ones
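To make the "previous tokens cause subsequent ones" idea concrete, here is a minimal pure-Python sketch (no model involved) of the two ingredients of causal language modeling: a lower-triangular attention mask, where position i may attend only to positions 0..i, and next-token targets obtained by shifting the sequence by one. The token ids and helper names are made up for illustration:

```python
def causal_mask(n):
    """Return an n x n mask where mask[i][j] is 1 if position i may attend to position j (j <= i)."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]

def next_token_pairs(token_ids):
    """Pair each prefix with the token that follows it, i.e. the causal LM training target."""
    return [(token_ids[: i + 1], token_ids[i + 1]) for i in range(len(token_ids) - 1)]

tokens = [11, 42, 7, 99]  # hypothetical token ids

for row in causal_mask(len(tokens)):
    print(row)
# [1, 0, 0, 0]
# [1, 1, 0, 0]
# [1, 1, 1, 0]
# [1, 1, 1, 1]

for context, target in next_token_pairs(tokens):
    print(context, "->", target)
# [11] -> 42
# [11, 42] -> 7
# [11, 42, 7] -> 99
```

In the GPT-style models in `transformers`, this one-step shift is applied inside the model when `labels` are passed, which is why the labels for causal LM fine-tuning can simply be a copy of `input_ids`.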

Step 3: set up the tokenizer

from transformers import AutoTokenizer

# `AutoTokenizer` loads the correct tokenizer for the specified model

tokenizer = AutoTokenizer.from_pretrained("openai-gpt")

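# openai-gpt ships without a padding token, so add one...
tokenizer.add_special_tokens({'pad_token': '[PAD]'})
# ...and grow the model's embedding matrix to cover the new token id
model.resize_token_embeddings(len(tokenizer))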

- Padding: fills shorter sequences with the pad token so every sequence in a batch has the same length
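A minimal pure-Python sketch of what padding does to a batch: shorter sequences are extended with the pad id, and an attention mask marks real tokens (1) versus padding (0) so the model can ignore the filler. The helper `pad_batch` and the pad id `0` are assumptions for illustration; with the tokenizer above, the pad id would be `tokenizer.pad_token_id` for the added `[PAD]` token:

```python
def pad_batch(sequences, pad_id, max_length=None):
    """Right-pad each sequence to max_length (default: longest in batch) and build attention masks."""
    target = max_length if max_length is not None else max(len(s) for s in sequences)
    input_ids, attention_mask = [], []
    for seq in sequences:
        seq = seq[:target]  # truncate anything longer than the target length
        n_pad = target - len(seq)
        input_ids.append(seq + [pad_id] * n_pad)
        attention_mask.append([1] * len(seq) + [0] * n_pad)
    return {"input_ids": input_ids, "attention_mask": attention_mask}

batch = pad_batch([[5, 8, 13], [21], [34, 55]], pad_id=0)
print(batch["input_ids"])       # [[5, 8, 13], [21, 0, 0], [34, 55, 0]]
print(batch["attention_mask"])  # [[1, 1, 1], [1, 0, 0], [1, 1, 0]]
```

Passing an explicit `max_length` mirrors `padding="max_length"` combined with `truncation=True`: every sequence ends up exactly that long, whether it was padded or cut.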

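def tokenize_function(examples):
    # With batched=True, examples["text"] is a list of tweets (the column is "text", not "content")
    return tokenizer(examples["text"], padding="max_length", truncation=True)

# Drop the original columns (id, text, label, label_text) so only model inputs remain
tokenized_datasets = dataset.map(tokenize_function, batched=True, remove_columns=dataset["train"].column_names)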

- batched=True: passes many examples to tokenize_function at once, which tokenizes much faster than one example at a time
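With `batched=True`, `Dataset.map` hands the function a dict of columns (each value a list spanning many rows) rather than one row at a time, so the tokenizer can process a whole list in a single call. A rough pure-Python imitation of that calling convention; `batched_map` and `fake_tokenize` are hypothetical stand-ins, not the `datasets` implementation, and the default batch size of 1000 is assumed to match `Dataset.map`'s default:

```python
def batched_map(columns, fn, batch_size=1000):
    """Apply fn to slices of a column-oriented dataset and merge the returned columns."""
    n_rows = len(next(iter(columns.values())))
    outputs = {}
    for start in range(0, n_rows, batch_size):
        batch = {k: v[start:start + batch_size] for k, v in columns.items()}
        for key, values in fn(batch).items():
            outputs.setdefault(key, []).extend(values)
    # New (or overwritten) columns are merged over the original ones
    return {**columns, **outputs}

calls = []  # records how many rows each call received

def fake_tokenize(batch):
    """Stand-in for tokenize_function: receives a list of texts, returns one value per row."""
    calls.append(len(batch["text"]))
    return {"n_words": [len(text.split()) for text in batch["text"]]}

data = {"text": ["my boss is bullying me...", "Sooo SAD I will miss you in San Diego!!!"]}
out = batched_map(data, fake_tokenize)
print(out["n_words"])  # [5, 9]
print(calls)           # [2]: both rows were handled in one call
```

Fewer, larger calls are what make batched mapping faster: a fast tokenizer can process the whole list of texts together instead of paying per-call overhead for every row.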

Step 4: fine-tune using the Trainer class

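from transformers import DataCollatorForLanguageModeling, Trainer, TrainingArguments

training_args = TrainingArguments(
    output_dir="test_trainer",
    per_device_train_batch_size=1,
    per_device_eval_batch_size=1,
    gradient_accumulation_steps=4)

# mlm=False gives causal-LM batches: labels copy input_ids, with padding set to -100 so it is ignored
data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=False)

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=tokenized_datasets["train"],
    eval_dataset=tokenized_datasets["test"],
    data_collator=data_collator)

trainer.train()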
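With `per_device_train_batch_size=1` and `gradient_accumulation_steps=4`, gradients from four single-example passes are combined before each optimizer update, so every update reflects 4 examples per device while only one sits in memory at a time. A minimal pure-Python sketch of that idea on a made-up one-parameter least-squares model; the data, learning rate, and `num_devices` are assumptions, and this is not how `Trainer` is implemented internally:

```python
def grad(w, x, y):
    """Gradient of the squared error (w * x - y) ** 2 with respect to w."""
    return 2 * x * (w * x - y)

data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]  # toy points on y = 2x
per_device_batch, accum_steps, num_devices = 1, 4, 1      # num_devices is an assumption
lr, w = 0.01, 0.0

accumulated = 0.0
for step, (x, y) in enumerate(data, start=1):   # one example per micro-batch
    accumulated += grad(w, x, y) / accum_steps  # scale so the sum is an average
    if step % accum_steps == 0:
        w -= lr * accumulated                   # one optimizer update every 4 micro-batches
        accumulated = 0.0

# The accumulated update matches one plain gradient step on a batch of all 4 examples
full_batch_grad = sum(grad(0.0, x, y) for x, y in data) / len(data)
print(w, -lr * full_batch_grad)
print("effective batch size:", per_device_batch * accum_steps * num_devices)  # 4
```

On several GPUs the effective batch size also scales with the number of devices, which is why `num_devices` appears in the product.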

Let's practice!

Reinforcement Learning from Human Feedback (RLHF)