Introduction to RLHF

Reinforcement Learning from Human Feedback (RLHF)

Mina Parham

AI Engineer

Welcome to the course!

- Instructor: Mina Parham

- AI Engineer

- Large Language Models (LLMs)

- Reinforcement Learning from Human Feedback (RLHF)

- Topic: Reinforcement Learning from Human Feedback (RLHF)

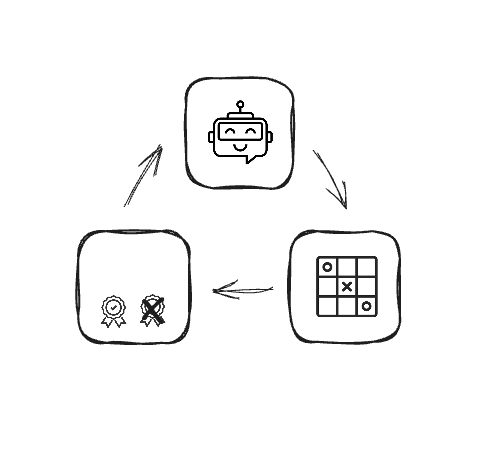

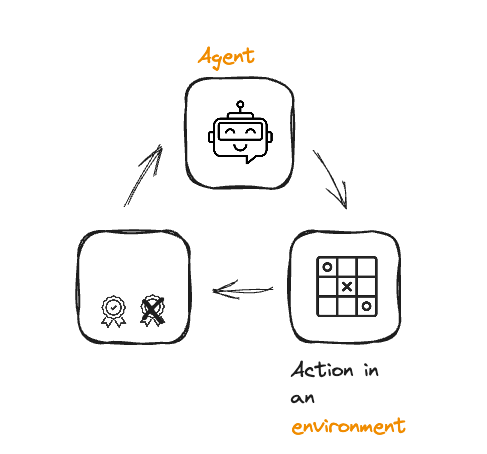

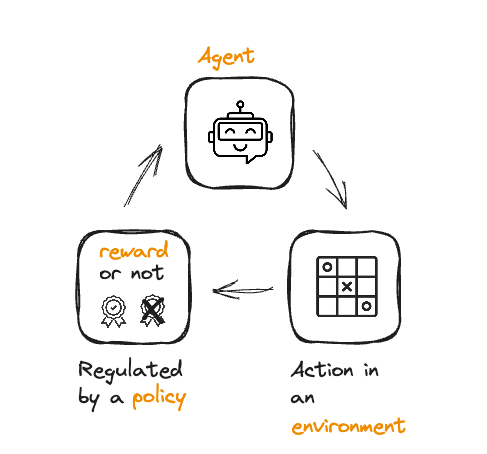

Reinforcement learning review

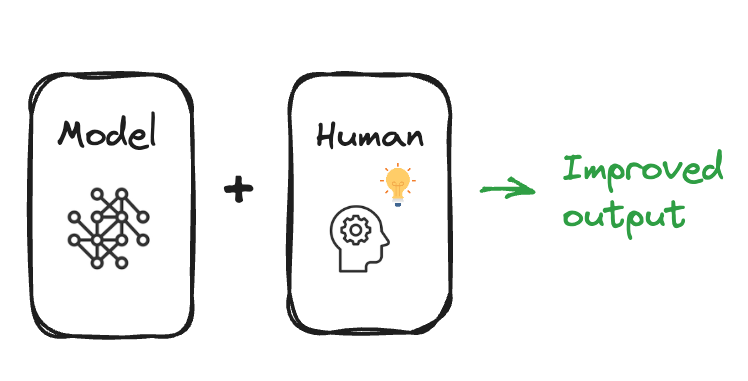

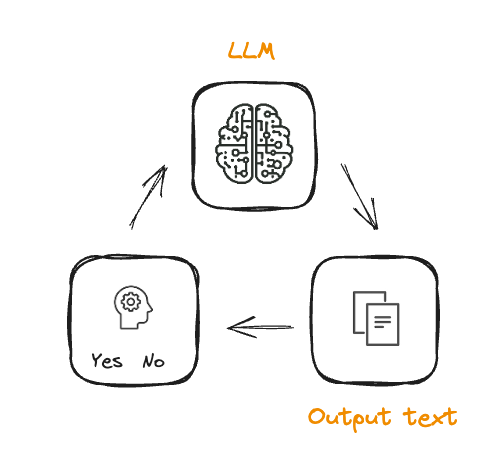

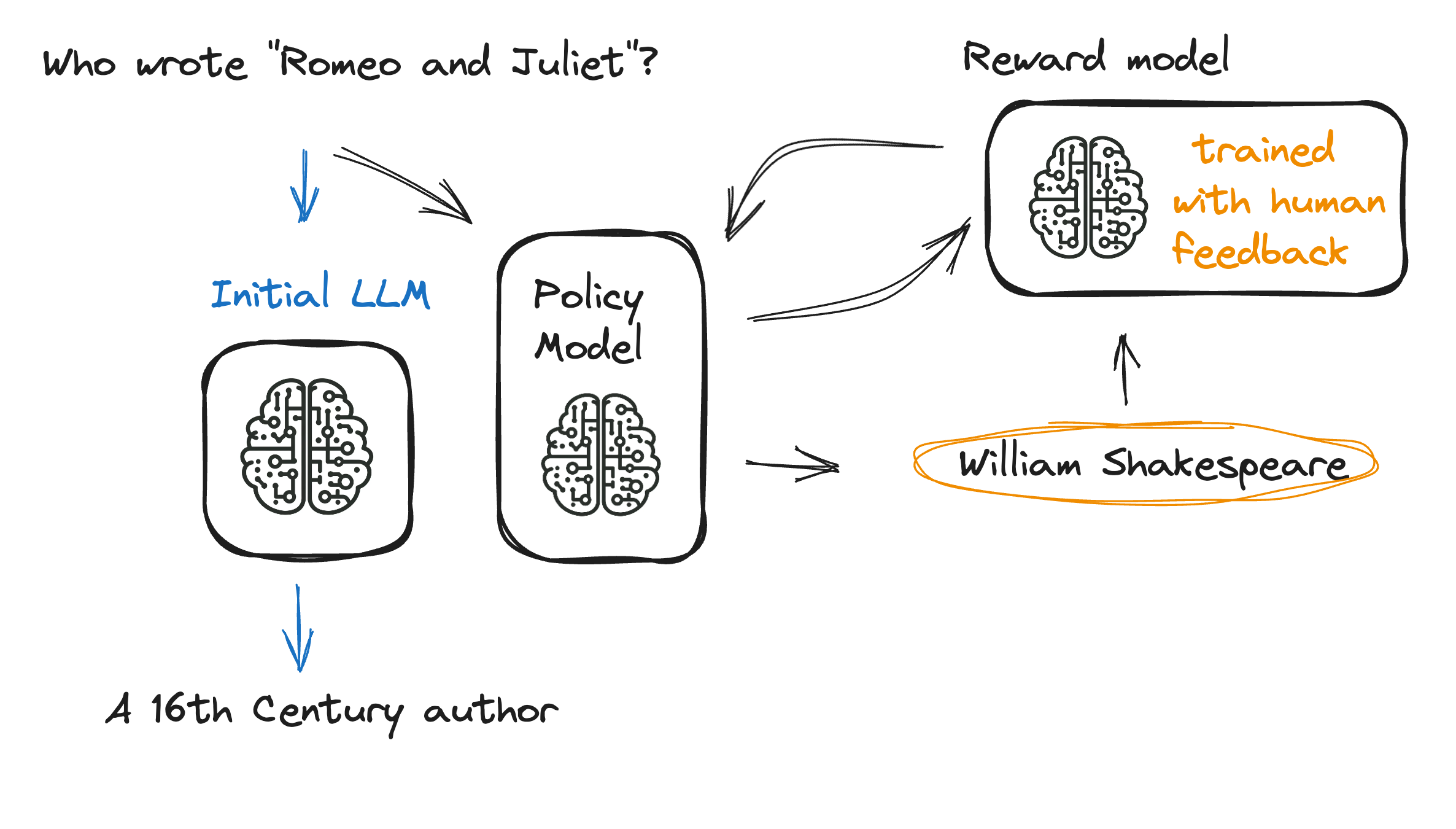
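
A minimal sketch of the core RL loop: an agent picks an action from its policy, the environment returns a reward, and the policy is updated to favor actions that earn more reward. It uses the REINFORCE policy-gradient update with a running-average baseline on a hypothetical single-step environment with three actions. The reward values, noise level, learning rate, and the names `softmax` and `train_bandit` are illustrative assumptions, not taken from the course.

```python
import math
import random

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def train_bandit(true_rewards, steps=2000, lr=0.1, seed=0):
    """Agent-environment loop on a one-step (bandit) environment:
    the agent samples an action from its policy, the environment returns a
    noisy reward, and REINFORCE nudges the policy toward actions whose reward
    beat the running-average baseline."""
    rng = random.Random(seed)
    logits = [0.0] * len(true_rewards)  # policy parameters
    baseline = 0.0                      # running average of observed rewards
    for _ in range(steps):
        probs = softmax(logits)
        action = rng.choices(range(len(probs)), weights=probs)[0]  # agent acts
        reward = true_rewards[action] + rng.gauss(0.0, 0.1)        # environment responds
        advantage = reward - baseline
        baseline += 0.05 * (reward - baseline)
        # Policy-gradient step: d log pi(action) / d logits = one_hot(action) - probs
        for i in range(len(logits)):
            grad = (1.0 if i == action else 0.0) - probs[i]
            logits[i] += lr * advantage * grad
    return softmax(logits)

# Hypothetical environment: action 1 has the highest mean reward.
probs = train_bandit([0.2, 1.0, 0.5])
print([round(p, 3) for p in probs])
```

After training, most of the probability mass should sit on action 1, the action with the highest mean reward.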

From RL to RLHF

- Training the reward model

- Alignment with human preferences

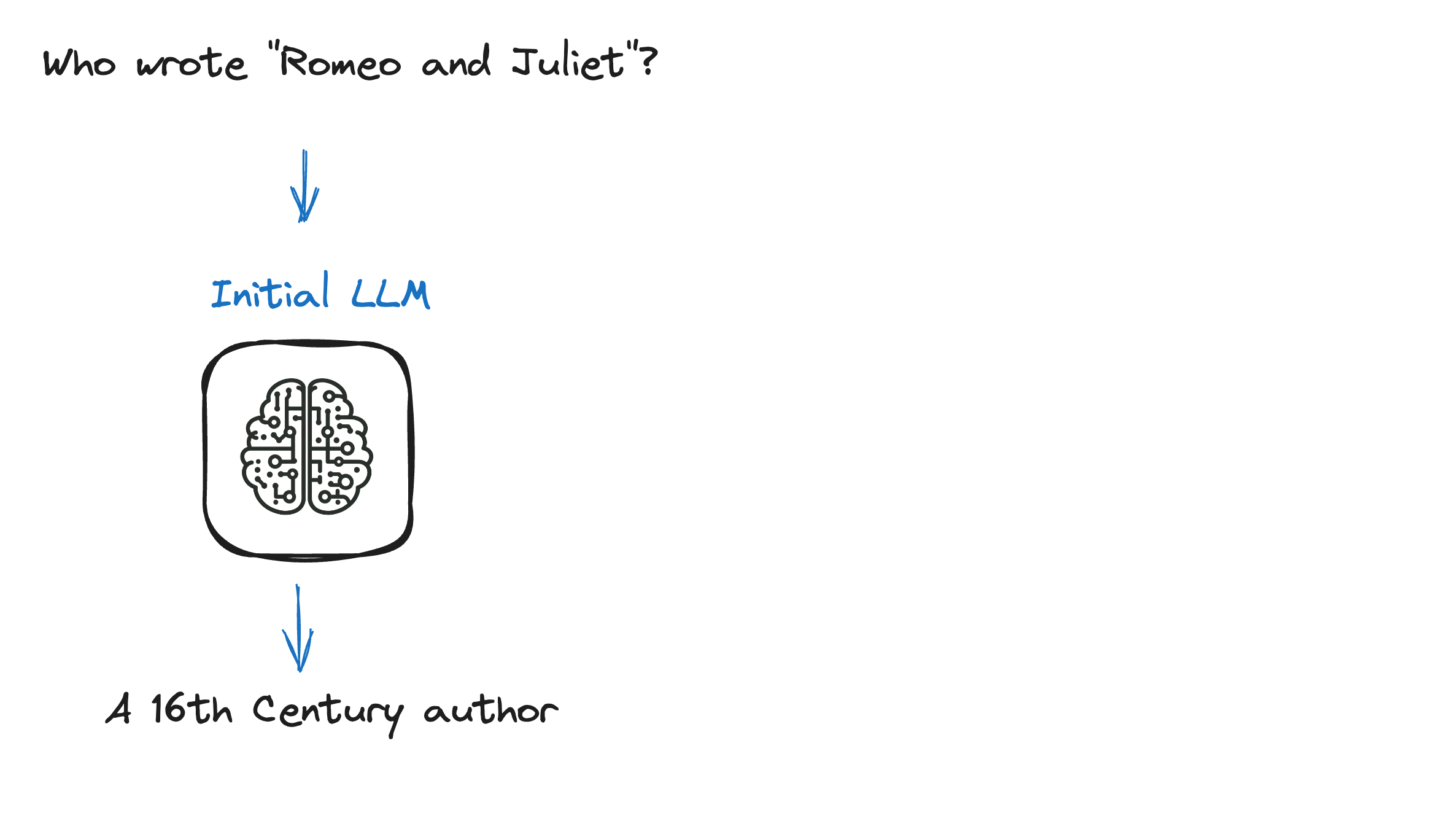
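
In RLHF, the reward model is commonly trained on human preference pairs: labelers pick the better of two responses, and the model learns to score the chosen response above the rejected one. A widely used objective is the pairwise (Bradley-Terry) loss, -log(sigmoid(r_chosen - r_rejected)). The sketch below assumes a linear reward model over two hypothetical hand-crafted features and made-up preference pairs; real reward models are usually LLMs with a scalar output head.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def reward(weights, features):
    # Linear reward model: score = w . features
    return sum(w * f for w, f in zip(weights, features))

def train_reward_model(pairs, n_features, epochs=200, lr=0.5):
    """pairs: list of (chosen_features, rejected_features) from human labelers.
    Minimizes the pairwise loss -log sigmoid(r(chosen) - r(rejected))."""
    weights = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # Gradient of -log sigmoid(margin) is -(1 - sigmoid(margin)) * (chosen - rejected),
            # so a descent step adds lr * (1 - sigmoid(margin)) * (chosen - rejected).
            scale = 1.0 - sigmoid(margin)
            for i in range(n_features):
                weights[i] += lr * scale * (chosen[i] - rejected[i])
    return weights

# Hypothetical features per response: [politeness, factual_errors]
pairs = [
    ([0.9, 0.0], [0.2, 0.0]),
    ([0.5, 0.0], [0.5, 1.0]),
    ([0.8, 0.1], [0.1, 0.9]),
]
w = train_reward_model(pairs, n_features=2)
print(w)
```

The learned weights should reward politeness and penalize factual errors, because that is what the (hypothetical) labelers preferred.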

LLM fine-tuning in RLHF

- Training the initial LLM
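
During the RL fine-tuning stage, the model being tuned is commonly kept close to the initial LLM by subtracting a KL-divergence penalty from the reward-model score, as in PPO-based RLHF setups. A minimal sketch, assuming the per-token log-probabilities of a generated response are already available from both models; the function name `kl_penalized_reward`, the default `beta`, and the numbers in the example are illustrative.

```python
def kl_penalized_reward(rm_score, policy_logprobs, ref_logprobs, beta=0.1):
    """Reward for one generated response during RL fine-tuning.

    rm_score:        scalar score from the reward model
    policy_logprobs: log-probs of the response tokens under the model being tuned
    ref_logprobs:    log-probs of the same tokens under the frozen initial LLM
    beta:            strength of the KL penalty (illustrative default)
    """
    # Sum of per-token log-ratios: a sample-based estimate of KL(policy || initial)
    kl_estimate = sum(p - r for p, r in zip(policy_logprobs, ref_logprobs))
    return rm_score - beta * kl_estimate

# Hypothetical numbers for a two-token response
print(kl_penalized_reward(2.0, [-0.5, -1.0], [-1.0, -1.5]))
# Identical models incur no penalty
print(kl_penalized_reward(2.0, [-0.5, -1.0], [-0.5, -1.0]))
```

A larger `beta` keeps the tuned model closer to the initial LLM at the cost of optimizing the reward less aggressively.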

The full RLHF process
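
The stages (initial LLM, reward model, RL fine-tuning) can be tied together in a toy end-to-end sketch. Here the "initial LLM" is a softmax policy over three canned responses, the "reward model" is a hypothetical lookup table, and the RL stage uses REINFORCE with a per-sample KL penalty toward the initial policy. Every component is a stand-in chosen for illustration; real pipelines fine-tune a full LLM, typically with PPO.

```python
import math
import random

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Stage 1 (stand-in for the initial LLM): a policy over three canned responses.
RESPONSES = ["Here is a clear, step-by-step answer.", "No.", "Figure it out yourself."]
INITIAL_LOGITS = [0.0, 0.5, 0.2]

# Stage 2 (stand-in for the trained reward model): hypothetical preference scores.
REWARD_MODEL = {RESPONSES[0]: 1.0, RESPONSES[1]: 0.0, RESPONSES[2]: -1.0}

# Stage 3: RL fine-tuning with REINFORCE and a KL penalty toward the initial policy.
def rlhf_finetune(steps=3000, lr=0.05, beta=0.1, seed=0):
    rng = random.Random(seed)
    ref_probs = softmax(INITIAL_LOGITS)  # frozen copy of the initial model
    logits = list(INITIAL_LOGITS)        # the copy being fine-tuned
    for _ in range(steps):
        probs = softmax(logits)
        a = rng.choices(range(len(RESPONSES)), weights=probs)[0]
        # Per-sample KL estimate: log pi(a) - log pi_ref(a)
        kl = math.log(probs[a]) - math.log(ref_probs[a])
        reward = REWARD_MODEL[RESPONSES[a]] - beta * kl
        # REINFORCE: gradient of log pi(a) w.r.t. logits is one_hot(a) - probs
        for i in range(len(logits)):
            grad = (1.0 if i == a else 0.0) - probs[i]
            logits[i] += lr * reward * grad
    return ref_probs, softmax(logits)

ref_probs, final_probs = rlhf_finetune()
for text, before, after in zip(RESPONSES, ref_probs, final_probs):
    print(f"{before:.2f} -> {after:.2f}  {text}")
```

After fine-tuning, the response the reward model prefers should receive most of the probability, even though the initial policy favored the terse "No.".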

Interacting with RLHF-tuned LLMs

- Pre-trained RLHF models on Hugging Face 🤗

```python
from transformers import pipeline

# Load an RLHF-tuned text-generation model from the Hugging Face Hub
text_generator = pipeline('text-generation', model='lvwerra/gpt2-imdb-pos-v2')

# Provide a review prompt
review_prompt = "This is definitely a"

# Generate the continuation (max_length counts the prompt tokens too)
output = text_generator(review_prompt, max_length=50)

# Print the generated text
print(output[0]['generated_text'])
```

This is definitely a crucial improvement.

```python
from transformers import pipeline, AutoModelForSequenceClassification, AutoTokenizer

# Instantiate the pre-trained model and tokenizer
model = AutoModelForSequenceClassification.from_pretrained("lvwerra/distilbert-imdb")
tokenizer = AutoTokenizer.from_pretrained("lvwerra/distilbert-imdb")

# Use pipeline to create the sentiment analyzer
sentiment_analyzer = pipeline('sentiment-analysis', model=model, tokenizer=tokenizer)

# Pass the text to the sentiment analyzer and print the result
sentiment = sentiment_analyzer("This is definitely a crucial improvement.")
print(f"Sentiment Analysis Result: {sentiment}")
```

positive

Let's practice!
