Model metrics and adjustments

Reinforcement Learning from Human Feedback (RLHF)

Mina Parham

AI Engineer

Why use a reference model?

- Without one, the model can drift toward meaningless outputs

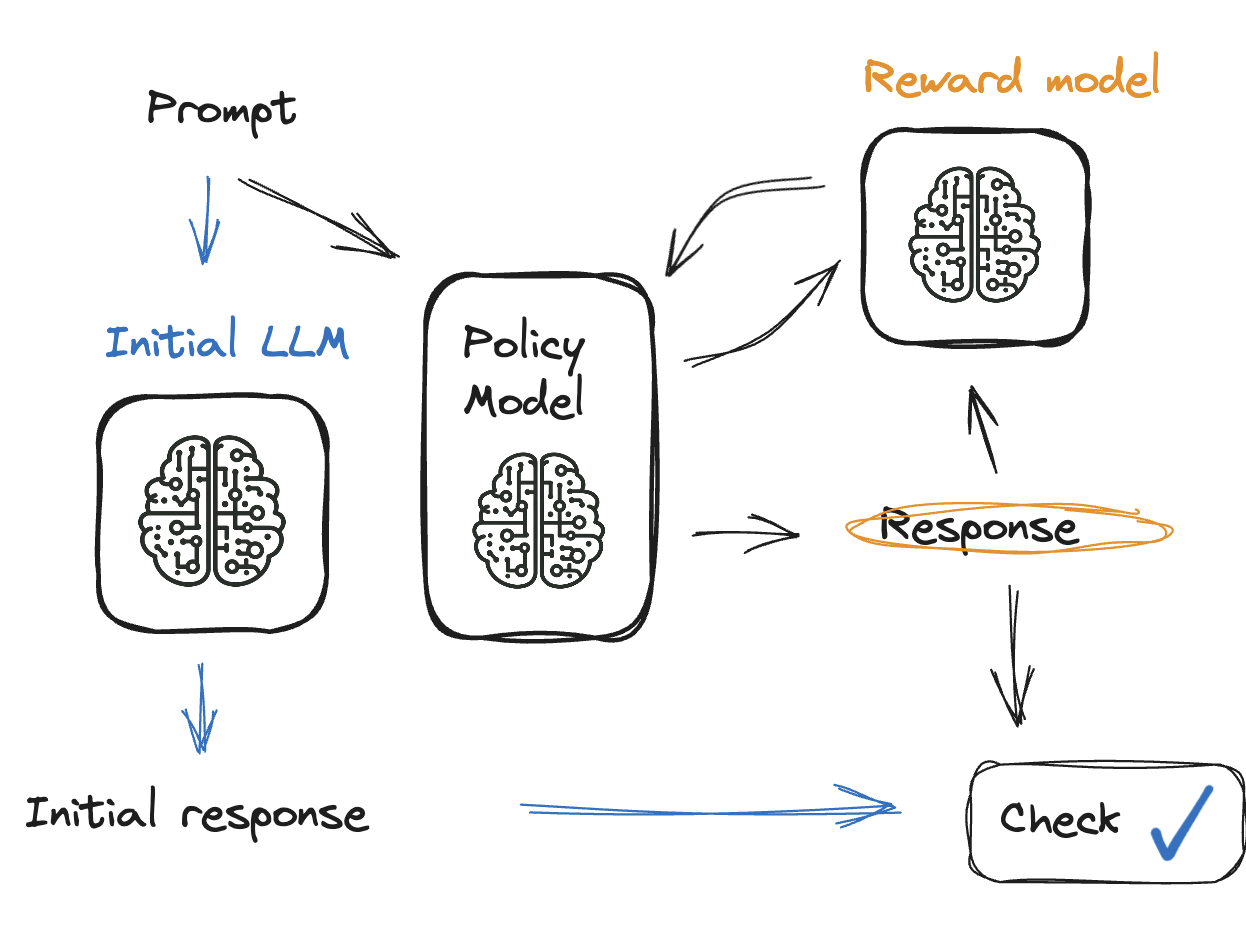

Checking model output

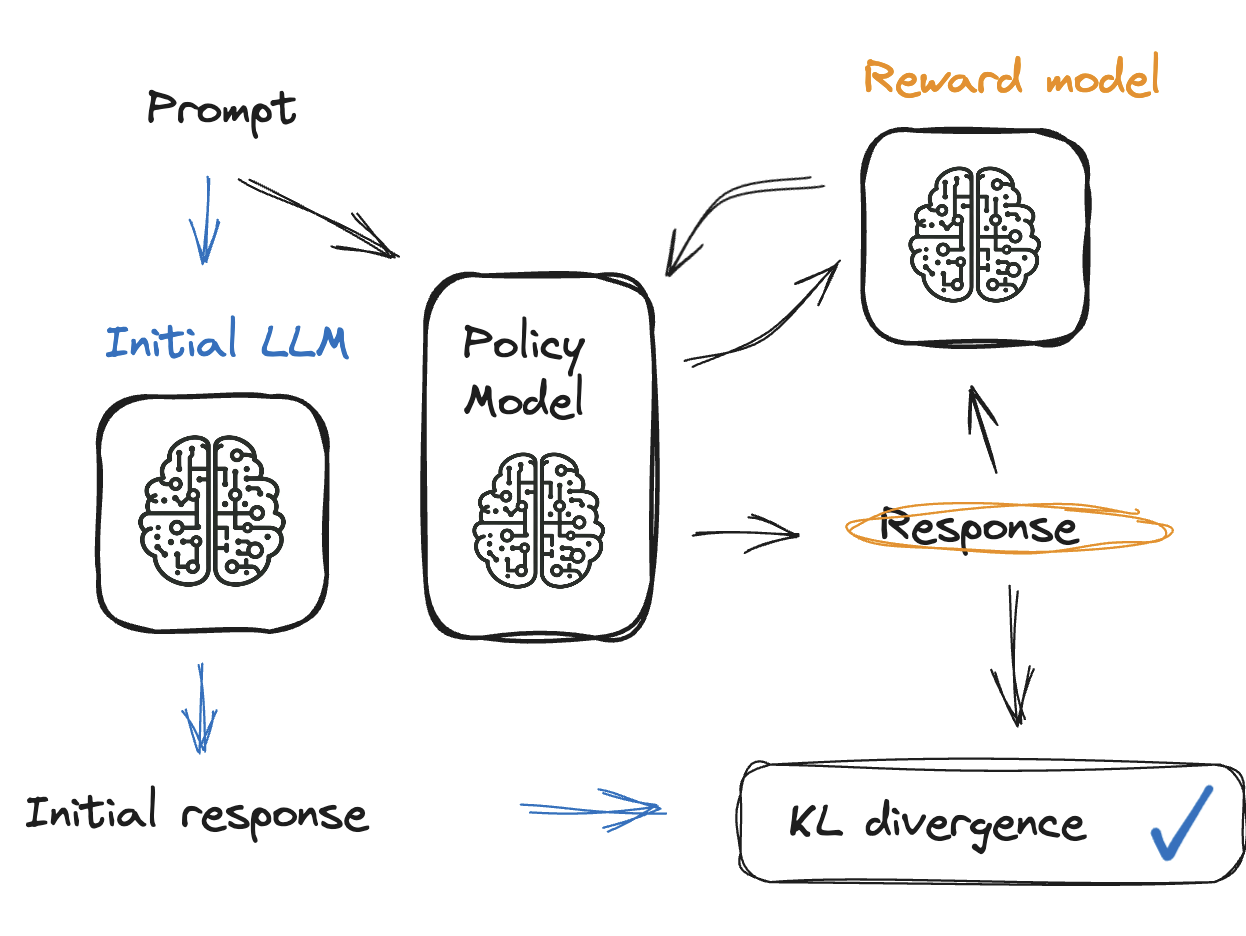

Solution: KL divergence

- A penalty is added to the reward

- The penalty redirects the model if it produces unrelated outputs

- KL divergence compares the current model with the reference model

- KL divergence is never negative; in practice it should stay between 0 and 10
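As a rough illustration of the penalty described above, the sketch below computes KL divergence between two toy next-token distributions and subtracts a scaled penalty from a reward. The distributions, the `beta` coefficient, and the helper names are assumptions made for illustration; RLHF libraries typically estimate this quantity from the log-probabilities of sampled tokens rather than from full distributions.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) for two discrete distributions over the same tokens."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def penalized_reward(reward, policy_probs, reference_probs, beta=0.2):
    """Subtract a KL penalty from the reward (beta is a hypothetical weight)."""
    return reward - beta * kl_divergence(policy_probs, reference_probs)


# Toy next-token distributions over a three-token vocabulary (made up).
reference_probs = [0.5, 0.3, 0.2]
close_policy = [0.45, 0.35, 0.2]    # stays near the reference model
drifted_policy = [0.05, 0.05, 0.9]  # has drifted far from the reference model

kl_same = kl_divergence(reference_probs, reference_probs)
kl_close = kl_divergence(close_policy, reference_probs)
kl_drifted = kl_divergence(drifted_policy, reference_probs)

print(f"identical: {kl_same:.4f}, close: {kl_close:.4f}, drifted: {kl_drifted:.4f}")
print(f"penalized reward (drifted): {penalized_reward(1.0, drifted_policy, reference_probs):.4f}")
```

The further the policy moves from the reference model, the larger the divergence and the more of the reward is taken away.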

Adjusting parameters

    generation_kwargs = {
        "min_length": -1,  # don't ignore the EOS token
        "top_k": 0.0,  # no top-k sampling
        "top_p": 1.0,
        "do_sample": True,
        "pad_token_id": tokenizer.eos_token_id,
        "max_new_tokens": 32,
    }

- Parameters are passed to the policy model
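To make the sampling settings above more concrete, here is a toy sketch of what top-k and top-p filtering do to a next-token distribution. The `filter_probs` helper and the probabilities are hypothetical and are not the Transformers implementation; the point is only that `top_k` of 0 together with `top_p` of 1.0 leaves the distribution untouched, so the policy samples from everything it can generate.

```python
def filter_probs(probs, top_k=0, top_p=1.0):
    """Toy top-k / top-p filter over a token -> probability dict."""
    ranked = sorted(probs.items(), key=lambda kv: kv[1], reverse=True)
    if top_k > 0:
        # Keep only the k most likely tokens.
        ranked = ranked[: int(top_k)]
    kept, cumulative = [], 0.0
    for token, p in ranked:
        # Keep tokens until their cumulative probability reaches top_p.
        kept.append((token, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    return {token: p / total for token, p in kept}


# Made-up next-token probabilities.
probs = {"great": 0.5, "good": 0.25, "fine": 0.125, "bad": 0.125}

unfiltered = filter_probs(probs, top_k=0, top_p=1.0)  # settings from the slide
top_two = filter_probs(probs, top_k=2)
nucleus = filter_probs(probs, top_p=0.7)

print(unfiltered)
print(top_two)
print(nucleus)
```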

Checking the reward model

Checking output (reward)

reward_model_results.head()

| ID | Comment                                     | Sentiment | Reward |
|----|---------------------------------------------|-----------|--------|
| 1  | This event was lit! So much fun!            | Positive  | 0.9    |
| 2  | Terrible experience, never attending again. | Negative  | -0.8   |
| 3  | It was okay, nothing extraordinary.         | Neutral   | 0.2    |
| 4  | The event was poorly organized and chaotic. | Negative  | -0.85  |
| 5  | Had an amazing time with great people!      | Positive  | 0.95   |
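To experiment with these results locally, the five rows shown above can be recreated as a pandas DataFrame. This is only a sketch: it assumes `reward_model_results` is a DataFrame with these four columns, and the real results may well contain more rows than the five displayed.

```python
import pandas as pd

# Recreate only the five rows displayed in the table.
reward_model_results = pd.DataFrame(
    {
        "ID": [1, 2, 3, 4, 5],
        "Comment": [
            "This event was lit! So much fun!",
            "Terrible experience, never attending again.",
            "It was okay, nothing extraordinary.",
            "The event was poorly organized and chaotic.",
            "Had an amazing time with great people!",
        ],
        "Sentiment": ["Positive", "Negative", "Neutral", "Negative", "Positive"],
        "Reward": [0.9, -0.8, 0.2, -0.85, 0.95],
    }
)

print(reward_model_results.head())
```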

Checking the reward model

- 👍 👎 Check extreme cases

      extreme_positive = reward_model_results[reward_model_results['Reward'] >= 0.9]
      extreme_negative = reward_model_results[reward_model_results['Reward'] <= -0.8]

- 🧘 Ensure a balanced dataset

      sentiment_distribution = reward_model_results['Sentiment'].value_counts()

- 📊 Normalize the rewards

      from sklearn.preprocessing import MinMaxScaler

      scaler = MinMaxScaler(feature_range=(-1, 1))
      scaler.fit_transform(reward_model_results[['Reward']])
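For intuition about the normalization step, the sketch below applies the same min-max formula by hand to the five reward values from the table. The `min_max_scale` helper is a hypothetical stand-in written for illustration, not scikit-learn's code: the smallest reward maps to -1, the largest to 1, and everything else is placed proportionally in between.

```python
def min_max_scale(values, feature_range=(-1.0, 1.0)):
    """Linearly rescale values so that min -> low and max -> high."""
    low, high = feature_range
    v_min, v_max = min(values), max(values)
    if v_max == v_min:
        # This toy helper does not handle a constant column.
        raise ValueError("cannot scale values that are all identical")
    return [(v - v_min) / (v_max - v_min) * (high - low) + low for v in values]


# Reward values from the five rows shown in the table.
rewards = [0.9, -0.8, 0.2, -0.85, 0.95]
scaled = min_max_scale(rewards)

for original, rescaled in zip(rewards, scaled):
    print(f"{original:>6.2f} -> {rescaled:>7.4f}")
```

Because the mapping is linear, the ordering of the rewards is preserved; only their range changes.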

Let's practice!
