Incorporating diverse feedback sources

Reinforcement Learning from Human Feedback (RLHF)

Mina Parham

AI Engineer

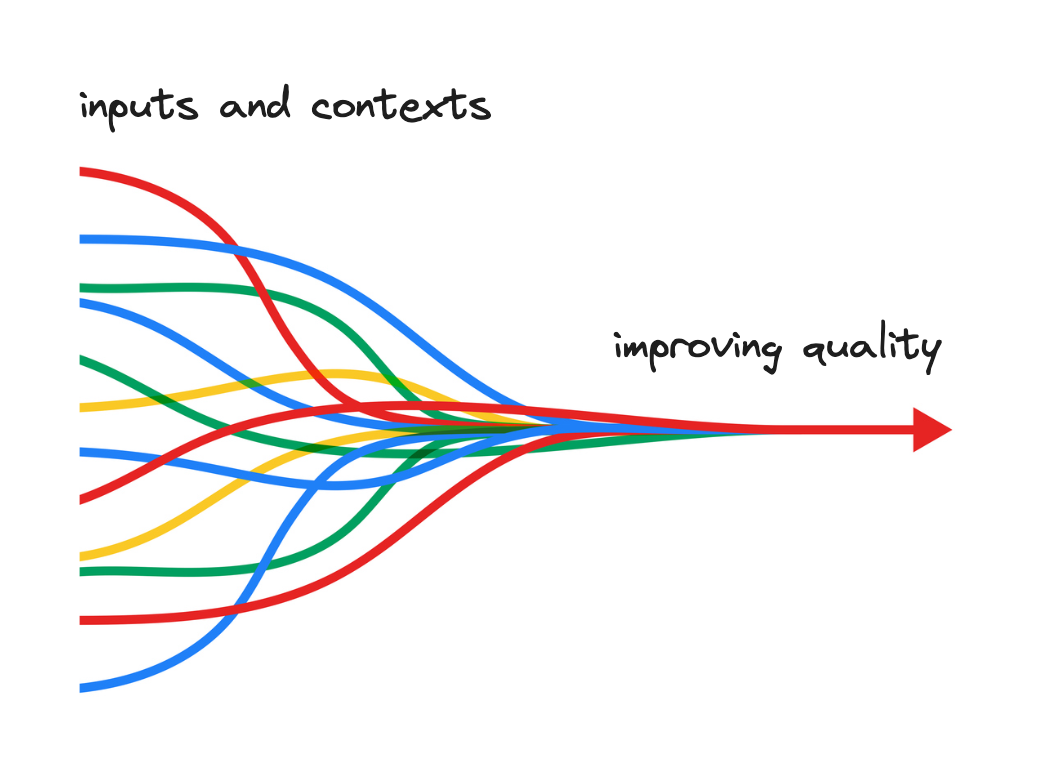

Improved model generalization

- Represents different viewpoints and contexts

- Generalizes preferences and values

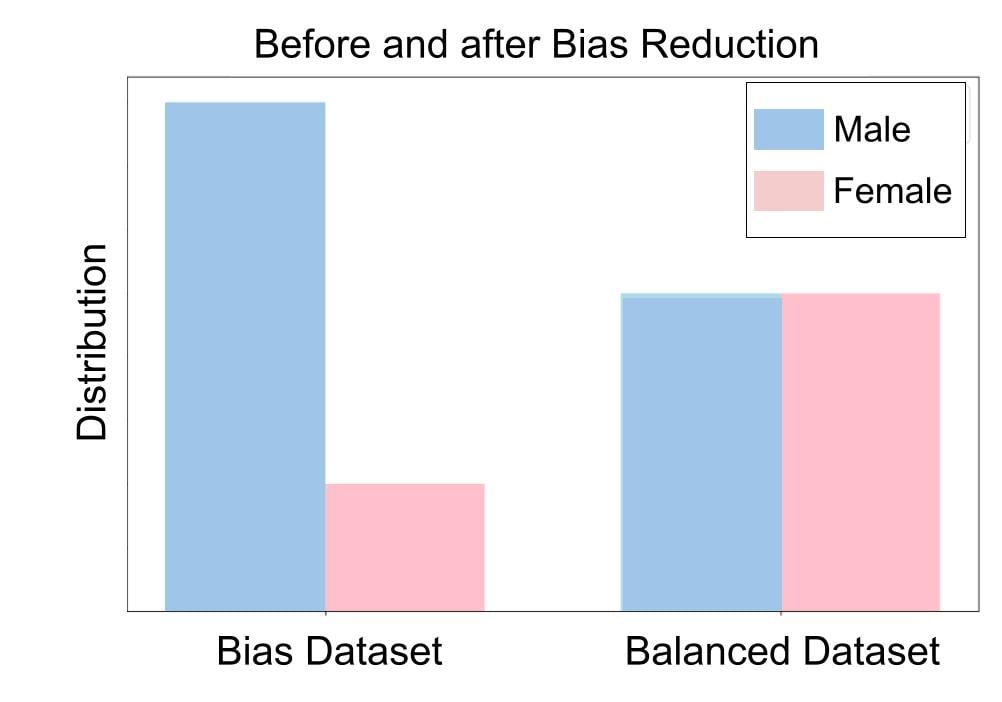

Reduced bias

- Mitigates individual biases

- Creates a more balanced and fair model output

Better alignment with human values

- Captures complex human preferences

- Represents a range of cultures and backgrounds

Enhanced adaptability

- Model responds to a wider range of user needs and preferences

- Accounts for different viewpoints

Increased robustness

- More resilient to different types of input

- Improves the model's overall performance

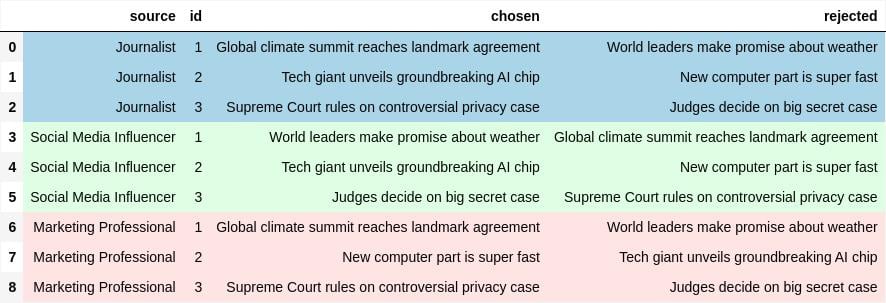

Integrating preference data from multiple sources

The preference data preference_df contains preferences from three sources: 'Journalist', 'Social Media Influencer', and 'Marketing Professional'.
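For illustration, here is a small hypothetical preference_df in the long format that the code in this section appears to assume: one row per (id, source) pair, holding the chosen and rejected responses. The values are invented; only the column names id, source, chosen and rejected are taken from the code that follows.

```python
import pandas as pd

# Hypothetical sample data: values are invented for illustration.
# Each prompt id is labeled once by each of the three sources.
preference_df = pd.DataFrame({
    'id': [1, 1, 1, 2, 2, 2],
    'source': ['Journalist', 'Social Media Influencer', 'Marketing Professional'] * 2,
    'chosen': ['Response A', 'Response A', 'Response B',
               'Response C', 'Response C', 'Response C'],
    'rejected': ['Response B', 'Response B', 'Response A',
                 'Response D', 'Response D', 'Response D'],
})

print(preference_df)
```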

Majority voting

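This sample data can be integrated by grouping the rows by 'id' and taking a majority vote within each group. First, define majority_vote, which counts how often each (chosen, rejected) pair appears among the sources and returns the most common one:

```python
from collections import Counter

def majority_vote(df):
    # Count each (chosen, rejected) pair given for this prompt id
    votes = Counter(zip(df['chosen'], df['rejected']))
    # Return the pair with the most votes
    return max(votes, key=votes.get)
```

Then apply it to every group of rows that share the same 'id':

```python
df_majority = preference_df.groupby('id').apply(majority_vote)
```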
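A self-contained run of the grouping and majority-voting steps, on a small hypothetical DataFrame (values invented; only the column names come from the code above). One small change from the slide code: it selects the chosen and rejected columns before apply, which in recent pandas releases avoids a deprecation warning about apply operating on the grouping column. The result is a Series indexed by id whose values are the winning (chosen, rejected) tuples.

```python
from collections import Counter

import pandas as pd

def majority_vote(df):
    # Counter preserves first-seen order of the (chosen, rejected) pairs
    votes = Counter(zip(df['chosen'], df['rejected']))
    # max returns the first pair with the highest count, so ties go to the pair seen first
    return max(votes, key=votes.get)

# Hypothetical data: two prompts, each labeled by the three sources
preference_df = pd.DataFrame({
    'id': [1, 1, 1, 2, 2, 2],
    'source': ['Journalist', 'Social Media Influencer', 'Marketing Professional'] * 2,
    'chosen': ['Response A', 'Response A', 'Response B',
               'Response C', 'Response C', 'Response C'],
    'rejected': ['Response B', 'Response B', 'Response A',
                 'Response D', 'Response D', 'Response D'],
})

# One winning (chosen, rejected) pair per prompt id
df_majority = preference_df.groupby('id')[['chosen', 'rejected']].apply(majority_vote)
print(df_majority)
```

For id 1, two of the three sources prefer Response A over Response B, so that pair wins; for id 2 all three sources agree. With an even number of sources, a tie is possible, and this version silently resolves it in favor of whichever pair appears first in the data.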

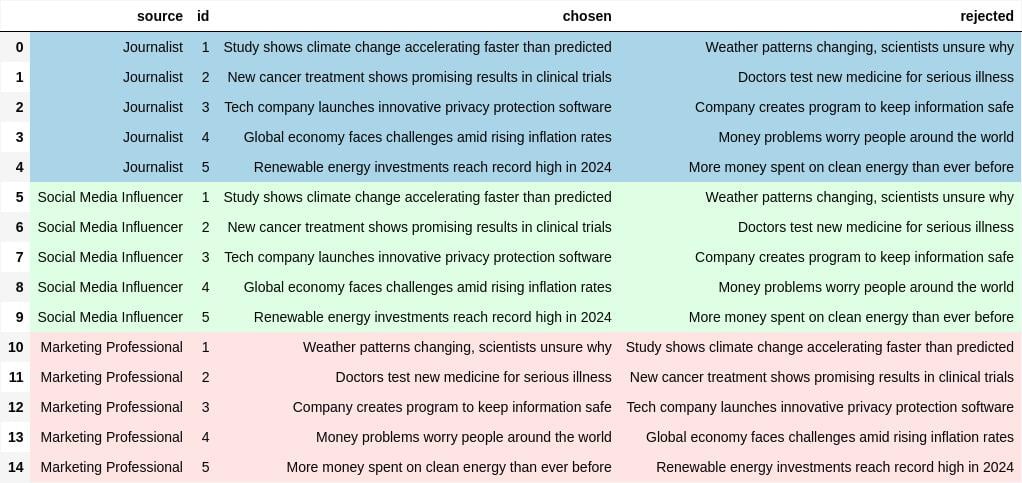

Unreliable preference data sources

The preference data preference_df2 comes from the same three sources.


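- Iterate over the rows of preference_df2, counting how often each source disagrees with the majority vote for that 'id'; the source with the most disagreements is flagged as unreliable: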

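```python
# Majority (chosen, rejected) pair for each prompt id
df_majority = preference_df2.groupby('id').apply(majority_vote)

# Count how often each source disagrees with the majority
disagreements = {source: 0 for source in preference_df2['source'].unique()}
for _, row in preference_df2.iterrows():
    if (row['chosen'], row['rejected']) != df_majority[row['id']]:
        disagreements[row['source']] += 1

# The source with the most disagreements is flagged as unreliable
detect_unreliable_source = max(disagreements, key=disagreements.get)
```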
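The same detection logic can be wrapped in a reusable function and tried end to end. This is a minimal sketch: find_unreliable_source is a hypothetical helper name, and preference_df2 here is invented data in which 'Social Media Influencer' flips the preference on every prompt, so it should be the source flagged.

```python
from collections import Counter

import pandas as pd

def majority_vote(df):
    # Most common (chosen, rejected) pair within one prompt id
    votes = Counter(zip(df['chosen'], df['rejected']))
    return max(votes, key=votes.get)

def find_unreliable_source(pref_df):
    # Hypothetical helper: returns the most-disagreeing source and all counts
    majority = pref_df.groupby('id')[['chosen', 'rejected']].apply(majority_vote)
    disagreements = {source: 0 for source in pref_df['source'].unique()}
    for _, row in pref_df.iterrows():
        # A row disagrees if its pair differs from the majority pair for its id
        if (row['chosen'], row['rejected']) != majority[row['id']]:
            disagreements[row['source']] += 1
    return max(disagreements, key=disagreements.get), disagreements

# Hypothetical data: three prompts, each labeled by the three sources
sources = ['Journalist', 'Social Media Influencer', 'Marketing Professional']
preference_df2 = pd.DataFrame({
    'id': [1, 1, 1, 2, 2, 2, 3, 3, 3],
    'source': sources * 3,
    'chosen':   ['A', 'B', 'A', 'C', 'D', 'C', 'E', 'F', 'E'],
    'rejected': ['B', 'A', 'B', 'D', 'C', 'D', 'F', 'E', 'F'],
})

unreliable, counts = find_unreliable_source(preference_df2)
print(unreliable)
print(counts)
```

Raw counts assume every source labeled the same prompts. If some sources labeled more rows than others, dividing each count by that source's number of rows gives a disagreement rate that compares sources more fairly.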
