Evaluating RLHF models

Reinforcement Learning from Human Feedback (RLHF)

Mina Parham

AI Engineer

Automation metrics

- Classification task: Accuracy, F1 score

classification_results.head(3)

| ID | Feedback_Text | True_Category | Predicted_Category |
|----|---------------------------------------|---------------|--------------------|
| 1 | "Arrived on time and works great." | Positive | Positive |
| 2 | "I had issues with customer service." | Negative | Neutral |
| 3 | "The website is easy to navigate." | Positive | Positive |
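As a rough illustration of how accuracy and F1 would be computed on the three rows above, here is a minimal pure-Python sketch. The list names and helper functions are illustrative assumptions, not part of the course code; in practice a library such as scikit-learn would typically be used instead.

```python
from collections import Counter

# The three rows shown in classification_results.head(3)
true_labels = ["Positive", "Negative", "Positive"]
pred_labels = ["Positive", "Neutral", "Positive"]


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def f1_per_label(y_true, y_pred):
    """F1 for each label seen in either the true or predicted column."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted p, but it was wrong
            fn[t] += 1  # missed the true label t
    scores = {}
    for label in sorted(set(y_true) | set(y_pred)):
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores[label] = 2 * tp[label] / denom if denom else 0.0
    return scores


acc = accuracy(true_labels, pred_labels)
f1_scores = f1_per_label(true_labels, pred_labels)
macro_f1 = sum(f1_scores.values()) / len(f1_scores)

print(f"accuracy = {acc:.2f}")
print(f"per-label F1 = {f1_scores}")
print(f"macro F1 = {macro_f1:.2f}")
```

Accuracy only counts exact matches, while the per-label F1 shows which categories the errors fall in; here the single mistake is a Negative review predicted as Neutral.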

Automation metrics

- Text generation, summarization: ROUGE, BLEU

text_generation.head(3)

| ID | Prompt | True_Completion | Pred_Completion |
|----|----------------------|------------------|-------------------|
| 1 | "Customer service" | "can help you." | "will assist." |
| 2 | "To get a refund," | "contact us." | "reach out." |
| 3 | "Support team is" | "here 24/7." | "available 24/7." |
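To give a feel for what overlap-based metrics measure on rows like these, the sketch below computes clipped unigram precision, the building block of BLEU, for each pair of completions. It is a simplified illustration under assumed tokenization (lowercasing, whitespace splitting); full BLEU also combines higher-order n-grams and a brevity penalty, which are omitted here. The function names are hypothetical.

```python
from collections import Counter

# (True_Completion, Pred_Completion) pairs from text_generation.head(3)
pairs = [
    ("can help you.", "will assist."),
    ("contact us.", "reach out."),
    ("here 24/7.", "available 24/7."),
]


def tokenize(text):
    """Lowercase, split on whitespace, and trim surrounding punctuation."""
    return [tok.strip(".,!?") for tok in text.lower().split()]


def clipped_unigram_precision(reference, prediction):
    """Share of predicted tokens that also occur in the reference.

    Each predicted token is credited at most as often as it appears in
    the reference, which is the clipping used by BLEU.
    """
    ref_counts = Counter(tokenize(reference))
    pred_counts = Counter(tokenize(prediction))
    if not pred_counts:
        return 0.0
    matched = sum((pred_counts & ref_counts).values())
    return matched / sum(pred_counts.values())


precisions = [clipped_unigram_precision(ref, pred) for ref, pred in pairs]
for (ref, pred), score in zip(pairs, precisions):
    print(f"{pred!r} vs {ref!r}: {score:.2f}")
```

Only the third pair shares a token ("24/7"), so the first two score zero even though their meanings are close. Overlap metrics reward shared wording, not shared meaning.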

Automation metrics

Reference statement:

- RLHF improves model alignment with human values.

Statement to compare:

- RLHF aligns models with human values.

ROUGE score: 0.83
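The comparison between the two statements can be sketched as an n-gram overlap calculation. The code below is a minimal ROUGE-N implementation written for illustration, with an assumed tokenizer (lowercase, alphanumeric words only, no stemming). The exact value of a ROUGE score depends on the variant, tokenization, and stemming used, none of which the slide states, so this sketch is not expected to reproduce the 0.83 shown.

```python
from collections import Counter
import re

reference = "RLHF improves model alignment with human values."
candidate = "RLHF aligns models with human values."


def ngrams(text, n):
    """Lowercased word n-grams, with punctuation dropped."""
    tokens = re.findall(r"[a-z0-9]+", text.lower())
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(reference, candidate, n=1):
    """Precision, recall, and F1 over overlapping n-grams."""
    ref, cand = ngrams(reference, n), ngrams(candidate, n)
    overlap = sum((ref & cand).values())
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 2 * precision * recall / (precision + recall) if overlap else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


rouge1 = rouge_n(reference, candidate, n=1)
rouge2 = rouge_n(reference, candidate, n=2)
print({k: round(v, 3) for k, v in rouge1.items()})
print({k: round(v, 3) for k, v in rouge2.items()})
```

Without stemming, "aligns" and "alignment" (and "models" and "model") count as different tokens, which is one reason scores from different ROUGE configurations can differ noticeably.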

Artifact curves

from trl import PPOConfig
import wandb

config = PPOConfig(
    model_name="lvwerra/gpt2-imdb",
    learning_rate=1.41e-5,
    log_with="wandb",
)

wandb.init()

Artifact curves

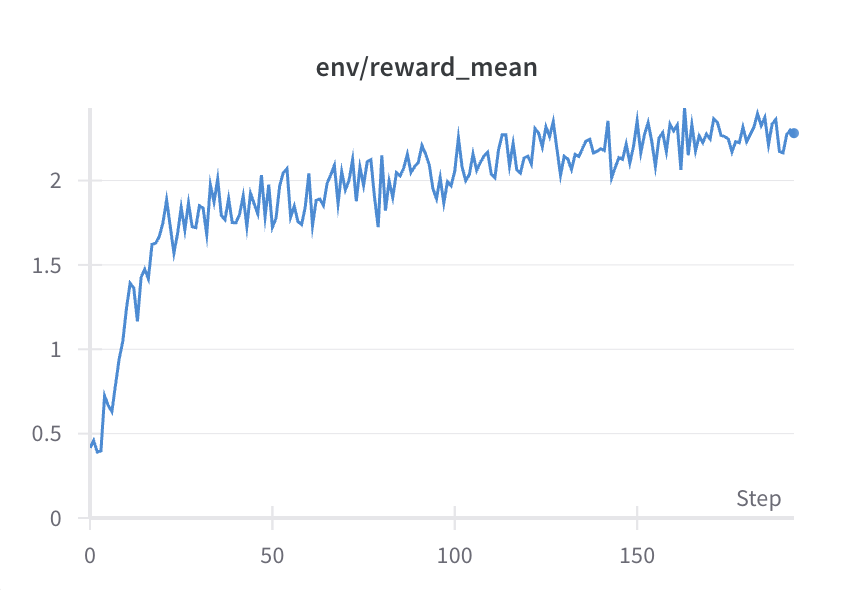

- Reward increases as the model learns.

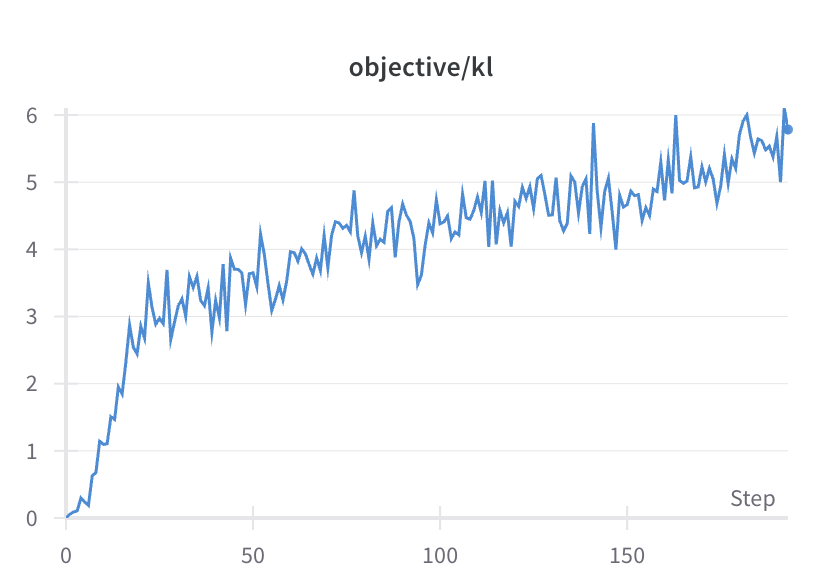

- The KL curve, which tracks how far the model has moved from the reference model, should increase gradually.
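To make the quantity behind the KL curve concrete, the sketch below computes the KL divergence between a policy's next-token distribution and that of a frozen reference model. The probabilities are hypothetical values chosen for illustration, not taken from a real training run, and a real trainer estimates this from sampled tokens over a full vocabulary.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) for two categorical distributions over the same tokens."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical next-token probabilities over a 3-token vocabulary.
reference_probs = [0.5, 0.3, 0.2]         # frozen reference model
early_policy_probs = [0.55, 0.28, 0.17]   # policy shortly after training starts
late_policy_probs = [0.80, 0.15, 0.05]    # policy after many updates

kl_start = kl_divergence(reference_probs, reference_probs)
kl_early = kl_divergence(early_policy_probs, reference_probs)
kl_late = kl_divergence(late_policy_probs, reference_probs)
print(f"{kl_start:.4f} {kl_early:.4f} {kl_late:.4f}")
```

The divergence starts at zero when the policy equals the reference and grows as the policy's distribution shifts, which is the upward trend the curve is expected to show.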

Human-centered evaluation

- Human evaluation: captures subjective judgments and a deep understanding of context

- Model-based evaluation: offers scalability and consistency

Let's practice!
