How good is your model?

Supervised Learning with scikit-learn

George Boorman

Core Curriculum Manager, DataCamp

Classification metrics

Measuring model performance with accuracy:

Fraction of correctly classified samples

Not always a useful metric

Class imbalance

Classification for predicting fraudulent bank transactions

- 99% of transactions are legitimate; 1% are fraudulent

Scenario: a classifier predicts that ALL transactions are legitimate

Accuracy of 99%

But useless for predicting fraudulent transactions

Fails at its original purpose

Class imbalance: uneven frequency of classes

A different way of assessing performance is needed
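The scenario above can be reproduced with a short sketch. The data is synthetic and the names (`y_true`, `y_pred`) are illustrative assumptions: 1,000 hypothetical transactions, 10 of them fraudulent (label 1), and a "classifier" that always predicts legitimate (label 0).

```python
# Synthetic labels (assumed for illustration): 0 = legitimate, 1 = fraudulent.
y_true = [0] * 990 + [1] * 10

# A degenerate "classifier" that predicts every transaction is legitimate.
y_pred = [0] * len(y_true)

# Accuracy: fraction of predictions that match the true label.
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Number of fraudulent transactions the classifier actually flags.
fraud_caught = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)

print(f"accuracy: {accuracy:.2f}")                        # accuracy: 0.99
print(f"fraud caught: {fraud_caught} of {sum(y_true)}")   # fraud caught: 0 of 10
```

The accuracy looks excellent, yet not a single fraudulent transaction is detected, which is why accuracy alone can mislead on imbalanced data.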

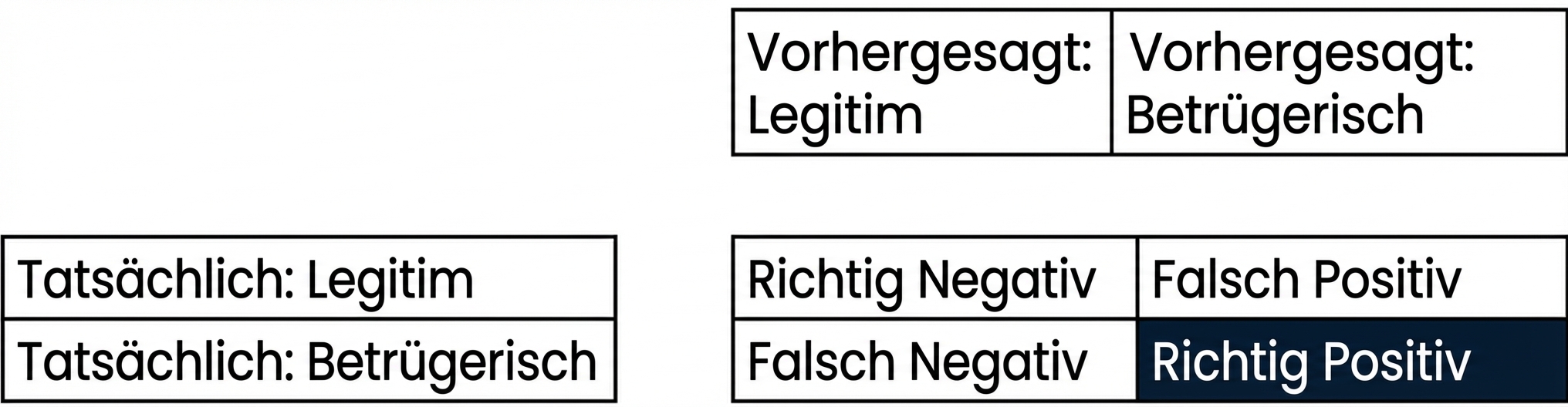

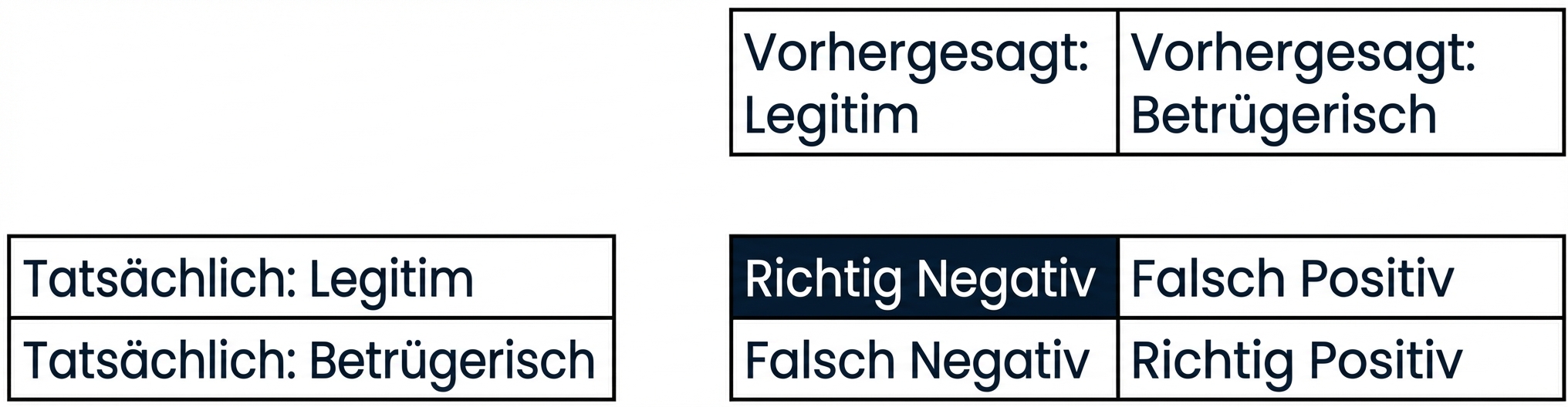

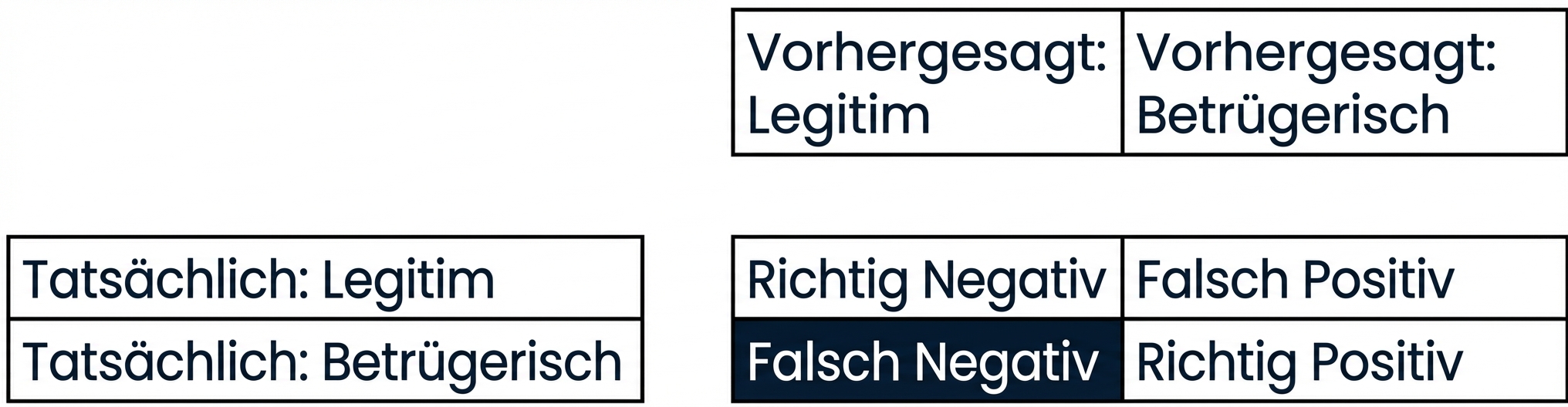

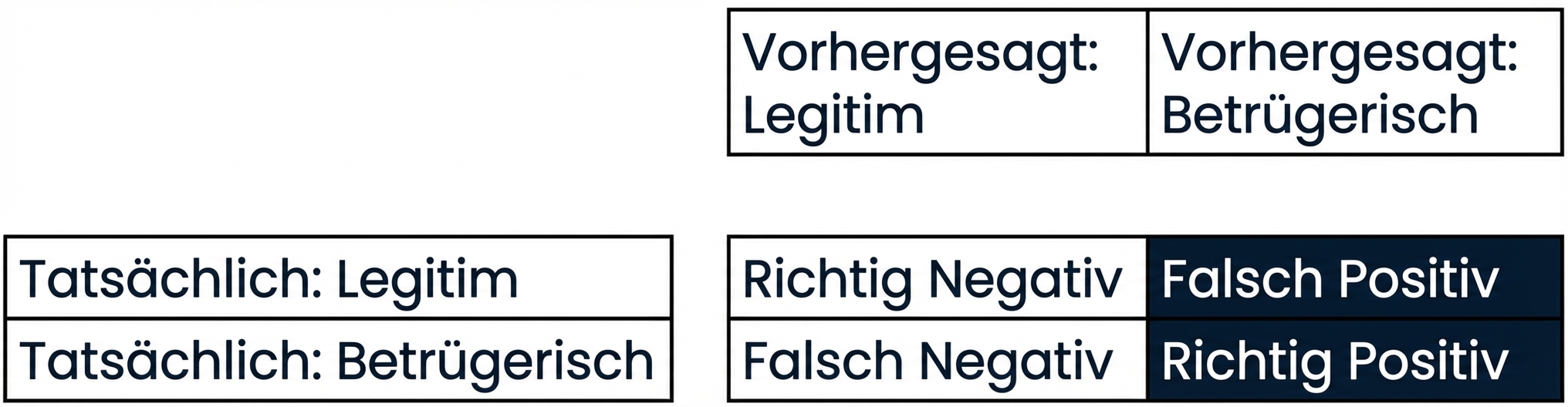

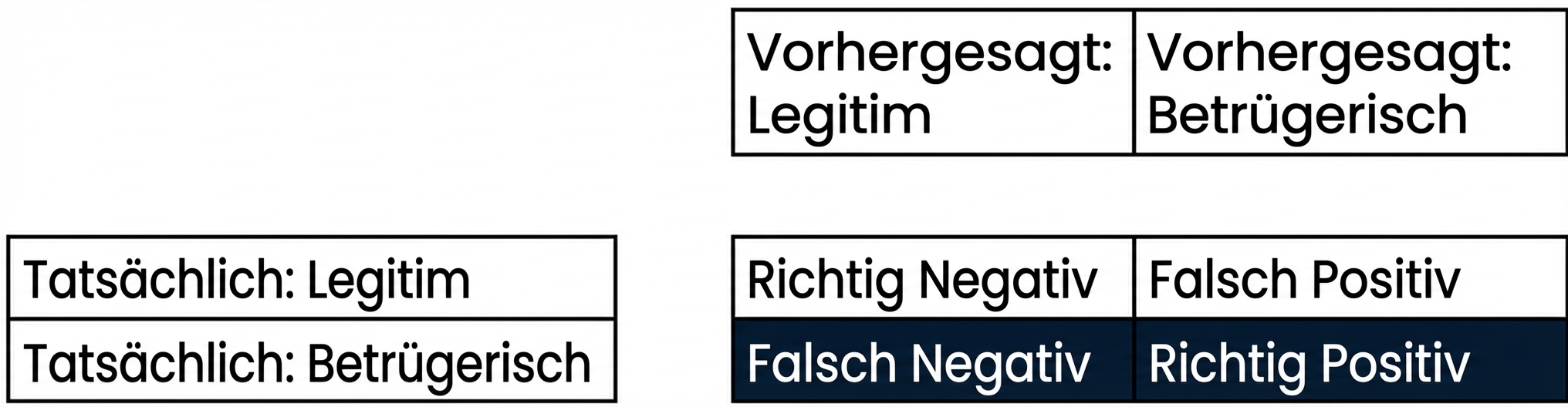

Confusion matrix for assessing classification performance

- Confusion matrix

Assessing classification performance


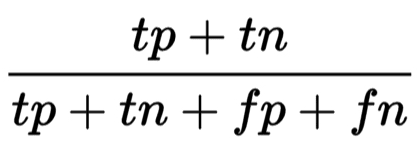
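As a rough illustration of how the four cells of a binary confusion matrix are counted, here is a minimal sketch in plain Python. The helper name `confusion_counts` and the toy labels are assumptions made for this example; the layout follows the scikit-learn convention of rows as true classes and columns as predicted classes.

```python
def confusion_counts(y_true, y_pred):
    """Return [[tn, fp], [fn, tp]] for binary labels (0 = negative, 1 = positive)."""
    tn = fp = fn = tp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 1 and pred == 1:
            tp += 1  # true positive: positive, predicted positive
        elif truth == 0 and pred == 0:
            tn += 1  # true negative: negative, predicted negative
        elif truth == 0 and pred == 1:
            fp += 1  # false positive: negative, predicted positive
        else:
            fn += 1  # false negative: positive, predicted negative
    return [[tn, fp], [fn, tp]]


# Toy labels (hypothetical): 1 = fraudulent, 0 = legitimate.
y_true = [0, 0, 0, 0, 1, 1, 1, 0]
y_pred = [0, 0, 1, 0, 1, 0, 1, 0]

matrix = confusion_counts(y_true, y_pred)
print(matrix)  # [[4, 1], [1, 2]]
```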

- Accuracy:

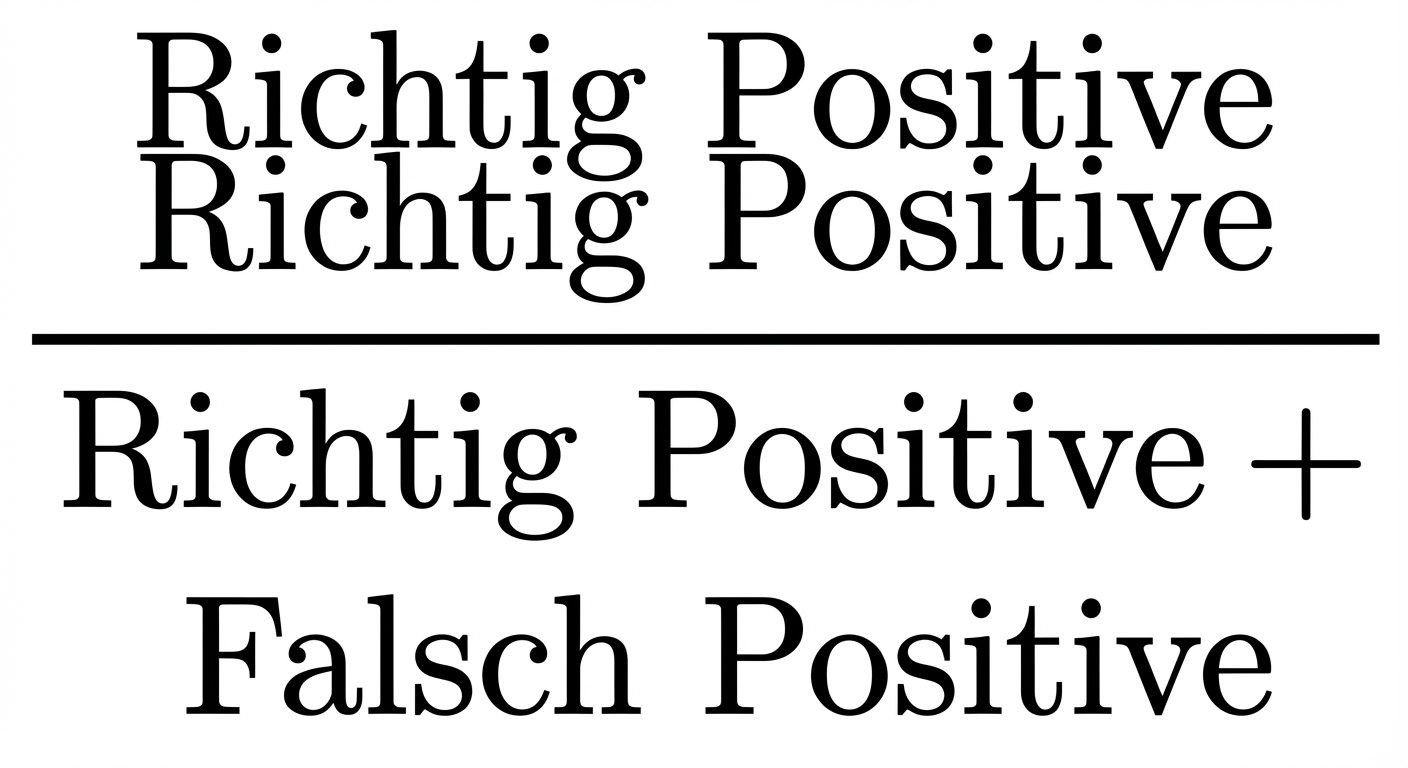
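Written in terms of the confusion-matrix cells, the standard definition of accuracy is given below. The abbreviations $tp$, $tn$, $fp$ and $fn$ (true positives, true negatives, false positives, false negatives) are notation assumed here.

$$\text{accuracy} = \frac{tp + tn}{tp + tn + fp + fn}$$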

Precision

- Precision:

- High precision = fewer false positives

- In the example: few legitimate transactions are classified as fraudulent

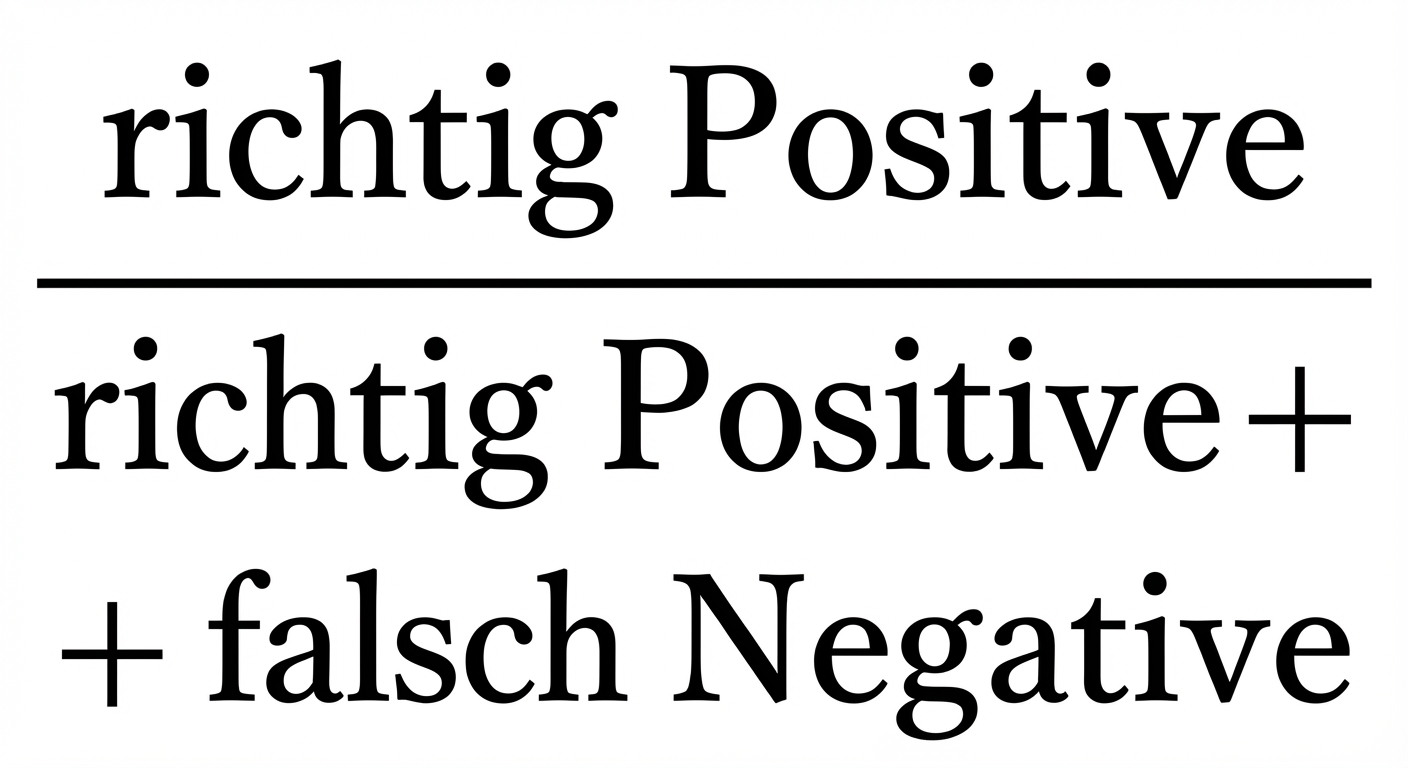
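In terms of the confusion-matrix cells, the standard definition of precision is the share of positive predictions that are actually positive. The abbreviations $tp$ (true positives) and $fp$ (false positives) are notation assumed here.

$$\text{precision} = \frac{tp}{tp + fp}$$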

Recall

- Recall:

- High recall = fewer false negatives

- In the example: most fraudulent transactions are correctly classified
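To make the difference between the two metrics concrete, the following sketch computes both from a set of hypothetical confusion-matrix counts. All four counts are invented for illustration and do not come from the course data.

```python
# Hypothetical confusion-matrix counts for a fraud detector (assumed values).
tp = 30   # fraudulent, flagged as fraudulent
fp = 5    # legitimate, flagged as fraudulent
fn = 70   # fraudulent, missed
tn = 895  # legitimate, passed as legitimate (used by neither metric)

precision = tp / (tp + fp)  # of everything flagged, how much was fraud?
recall = tp / (tp + fn)     # of all fraud, how much was flagged?

print(f"precision: {precision:.2f}")  # precision: 0.86
print(f"recall:    {recall:.2f}")     # recall:    0.30
```

With these assumed counts the detector is rarely wrong when it raises an alarm (high precision) but still misses most fraud (low recall).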

F1 score

- F1 score: $2 \cdot \frac{\text{precision} \cdot \text{recall}}{\text{precision} + \text{recall}}$
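The formula is the harmonic mean of precision and recall, so it stays low unless both are reasonably high. A minimal sketch, with a hypothetical helper `f1` and made-up input values:

```python
def f1(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Both pairs have the same arithmetic mean (0.5), chosen for illustration.
balanced = f1(0.5, 0.5)
lopsided = f1(0.9, 0.1)

print(f"balanced: {balanced:.2f}")  # balanced: 0.50
print(f"lopsided: {lopsided:.2f}")  # lopsided: 0.18
```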

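Confusion matrix in scikit-learn

    from sklearn.metrics import classification_report, confusion_matrix
    from sklearn.model_selection import train_test_split
    from sklearn.neighbors import KNeighborsClassifier

    # X (features) and y (labels) are assumed to be defined already
    knn = KNeighborsClassifier(n_neighbors=7)
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.4, random_state=42)
    knn.fit(X_train, y_train)
    y_pred = knn.predict(X_test)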


    print(confusion_matrix(y_test, y_pred))

    [[1106   11]
     [ 183   34]]

Classification report in scikit-learn

    print(classification_report(y_test, y_pred))

                  precision    recall  f1-score   support

               0       0.86      0.99      0.92      1117
               1       0.76      0.16      0.26       217

        accuracy                           0.85      1334
       macro avg       0.81      0.57      0.59      1334
    weighted avg       0.84      0.85      0.81      1334
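The class-1 row of the report can be checked by hand against the confusion matrix printed earlier. The sketch below assumes the scikit-learn layout (rows are true classes, columns are predicted classes) and treats class 1 as the positive class; the variable names are illustrative.

```python
# Confusion matrix from the printed output: rows = true class, columns = predicted.
tn, fp = 1106, 11
fn, tp = 183, 34

accuracy = (tp + tn) / (tp + tn + fp + fn)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)

print(f"accuracy:  {accuracy:.2f}")   # accuracy:  0.85
print(f"precision: {precision:.2f}")  # precision: 0.76
print(f"recall:    {recall:.2f}")     # recall:    0.16
print(f"f1-score:  {f1:.2f}")         # f1-score:  0.26
```

The values match the report: overall accuracy is 0.85, but recall for class 1 is only 0.16, so most positive samples are missed.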

Let's practice!
