Hyperparameter tuning

Supervised Learning with scikit-learn

George Boorman

Core Curriculum Manager


Ridge/lasso regression: choosing alpha

KNN: choosing n_neighbors

Hyperparameters: parameters we specify before fitting the model

- Examples: alpha and n_neighbors
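A minimal sketch of the difference between hyperparameters and learned parameters, assuming synthetic data from make_regression (not the course dataset). Hyperparameters such as alpha and n_neighbors go into the constructor before fitting and are not changed by fit(); learned parameters such as coef_ only exist after fitting.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Lasso, Ridge
from sklearn.neighbors import KNeighborsClassifier

# Synthetic regression data (illustrative assumption)
X, y = make_regression(n_samples=100, n_features=5, noise=10.0, random_state=0)

ridge = Ridge(alpha=0.5)                   # hyperparameter: regularization strength
lasso = Lasso(alpha=0.1)                   # lasso uses the same hyperparameter name
knn = KNeighborsClassifier(n_neighbors=7)  # hyperparameter: number of neighbors

ridge.fit(X, y)

# Fitting does not change the hyperparameter ...
print(ridge.get_params()["alpha"])         # 0.5
# ... but it learns one coefficient per feature from the data
print(ridge.coef_.shape)                   # (5,)
print(knn.get_params()["n_neighbors"])     # 7
```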

Choosing the correct hyperparameters

- Try lots of different hyperparameter values

- Fit all of them separately

- See how well they perform

- Choose the best-performing values

This process is called hyperparameter tuning (or hyperparameter optimization).
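The four steps above can be sketched as a plain loop, here for n_neighbors of a KNN classifier. This assumes synthetic data from make_classification and an arbitrary list of candidate values; each candidate is scored with 5-fold cross-validation. To keep the focus on the loop, the data is not split into training and test sets here.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier

# Synthetic classification data (illustrative assumption)
X, y = make_classification(n_samples=300, n_features=6, random_state=0)

candidate_values = [1, 3, 5, 7, 9, 11, 15]  # 1. try many values (arbitrary choice)
mean_scores = {}
for k in candidate_values:
    # 2. a fresh model per value, so each candidate is fitted separately
    knn = KNeighborsClassifier(n_neighbors=k)
    # 3. measure performance: mean 5-fold cross-validated accuracy
    mean_scores[k] = cross_val_score(knn, X, y, cv=5).mean()

# 4. keep the best-performing value
best_k = max(mean_scores, key=mean_scores.get)
print(best_k, mean_scores[best_k])
```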

It is essential to use cross-validation to avoid overfitting to the test set!

- We can still split the data, but we perform cross-validation on the training set

- We hold back the test set for the final evaluation
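A minimal sketch of this workflow for the alpha of a ridge model, assuming synthetic data from make_regression and an illustrative list of alpha values: the test set is split off first, cross-validation runs on the training set only, and the test set is used exactly once at the end.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, cross_val_score, train_test_split

X, y = make_regression(n_samples=200, n_features=8, noise=15.0, random_state=0)

# Hold back 20% as a test set; it is not used during tuning
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

kf = KFold(n_splits=5, shuffle=True, random_state=42)
alphas = [0.01, 0.1, 1.0, 10.0, 100.0]  # illustrative candidate values

# Cross-validation on the training set only (mean R^2 per alpha)
cv_means = {
    a: cross_val_score(Ridge(alpha=a), X_train, y_train, cv=kf).mean()
    for a in alphas
}
best_alpha = max(cv_means, key=cv_means.get)

# Refit on the whole training set, then evaluate once on the test set
final_model = Ridge(alpha=best_alpha).fit(X_train, y_train)
test_score = final_model.score(X_test, y_test)
print(best_alpha, test_score)
```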

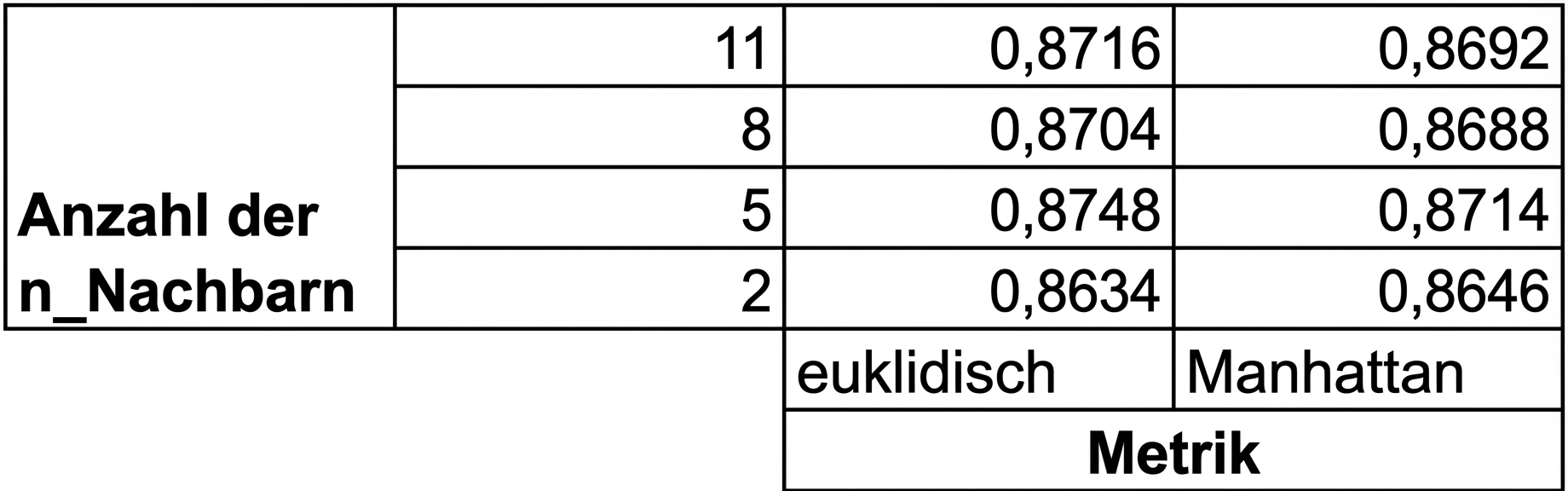

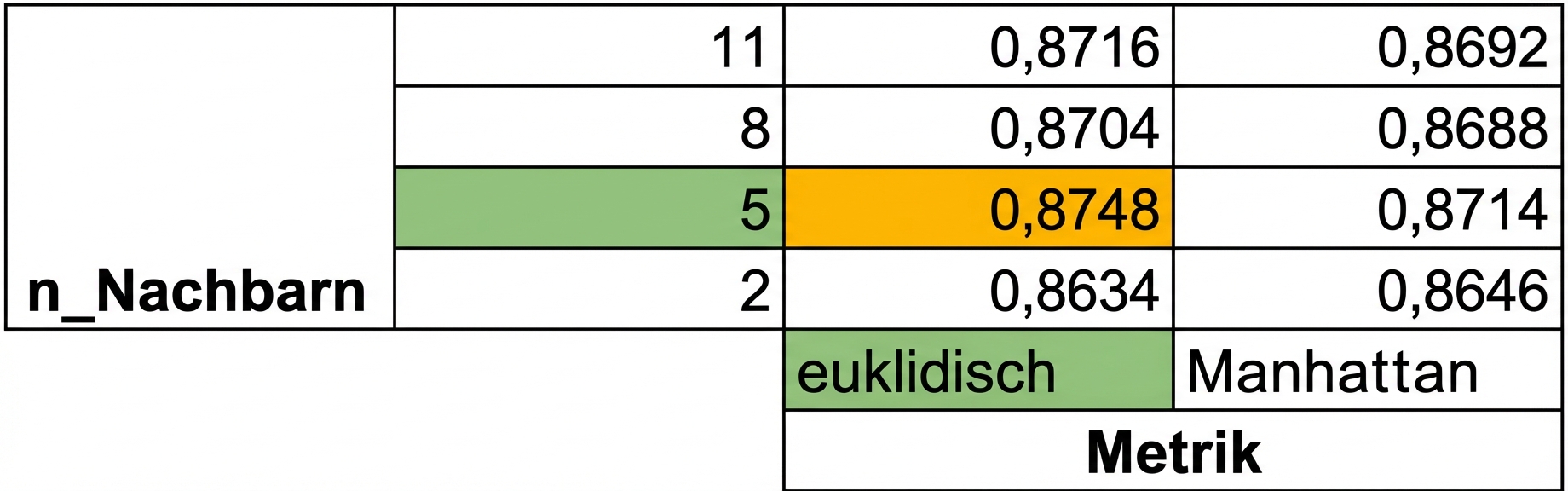

Grid search cross-validation
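Grid search cross-validation evaluates every combination of the candidate values with k-fold cross-validation and keeps the combination with the best mean score. A minimal hand-written sketch of that idea, assuming synthetic data from make_regression and an illustrative grid of three alpha values and two ridge solvers:

```python
from itertools import product

from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, cross_val_score

X_train, y_train = make_regression(
    n_samples=200, n_features=8, noise=15.0, random_state=0
)

alphas = [0.1, 1.0, 10.0]
solvers = ["svd", "lsqr"]
kf = KFold(n_splits=5, shuffle=True, random_state=42)

results = {}
# Each (alpha, solver) pair is one cell of the grid
for alpha, solver in product(alphas, solvers):
    model = Ridge(alpha=alpha, solver=solver)
    results[(alpha, solver)] = cross_val_score(model, X_train, y_train, cv=kf).mean()

best_params = max(results, key=results.get)
print(best_params, results[best_params])
```

GridSearchCV automates exactly this loop and, by default, also refits the best combination on the full training set.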

GridSearchCV in scikit-learn

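import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV, KFold

# 5-fold cross-validation with shuffling
kf = KFold(n_splits=5, shuffle=True, random_state=42)
# 10 evenly spaced alpha values (np.arange(0.0001, 1, 10) would yield only 0.0001) x 2 solvers
param_grid = {"alpha": np.linspace(0.0001, 1, 10), "solver": ["sag", "lsqr"]}
ridge = Ridge()
ridge_cv = GridSearchCV(ridge, param_grid, cv=kf)
# X_train, y_train: training data from an earlier train/test split
ridge_cv.fit(X_train, y_train)
# Best combination and its mean cross-validated R^2
print(ridge_cv.best_params_, ridge_cv.best_score_)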

{'alpha': 0.0001, 'solver': 'sag'}

0.7529912278705785
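A self-contained variant of the GridSearchCV call, assuming synthetic data from make_regression and the solvers "svd" and "lsqr" (an illustrative choice). It also checks how many candidates were evaluated through cv_results_.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV, KFold

X_train, y_train = make_regression(
    n_samples=200, n_features=8, noise=15.0, random_state=0
)

kf = KFold(n_splits=5, shuffle=True, random_state=42)
# np.linspace gives 10 evenly spaced alphas; np.arange with step 10 would give one
param_grid = {"alpha": np.linspace(0.0001, 1, 10), "solver": ["svd", "lsqr"]}

ridge_cv = GridSearchCV(Ridge(), param_grid, cv=kf)
ridge_cv.fit(X_train, y_train)

# 10 alphas x 2 solvers = 20 candidates, each fitted on 5 folds
n_candidates = len(ridge_cv.cv_results_["params"])
print(n_candidates)  # 20
print(ridge_cv.best_params_, ridge_cv.best_score_)
```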

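Limitations and an alternative approach

Number of fits = number of folds × number of hyperparameter combinations, so grid search scales poorly:

- 3-fold cross-validation, 1 hyperparameter, 10 values in total = 3 × 10 = 30 fits

- 10-fold cross-validation, 3 hyperparameters with 10 values each (30 values in total, 10 × 10 × 10 = 1,000 combinations) = 10,000 fits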
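The fit count can be checked with scikit-learn's ParameterGrid, which enumerates every combination of a grid. A minimal sketch; the helper n_fits and the keys "beta" and "gamma" are hypothetical placeholders, and the single extra refit that GridSearchCV performs by default is not counted.

```python
from sklearn.model_selection import ParameterGrid


def n_fits(param_grid, n_folds):
    """Hypothetical helper: fits performed by grid search, excluding the final refit."""
    return n_folds * len(ParameterGrid(param_grid))


# 3-fold CV, 1 hyperparameter with 10 values
grid_1 = {"alpha": list(range(10))}
# 10-fold CV, 3 hyperparameters with 10 values each (placeholder names)
grid_3 = {
    "alpha": list(range(10)),
    "beta": list(range(10)),
    "gamma": list(range(10)),
}

print(n_fits(grid_1, 3))   # 30
print(n_fits(grid_3, 10))  # 10000
```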

RandomizedSearchCV

import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, RandomizedSearchCV

kf = KFold(n_splits=5, shuffle=True, random_state=42)
param_grid = {"alpha": np.linspace(0.0001, 1, 10), "solver": ["sag", "lsqr"]}
ridge = Ridge()
# n_iter=2: only 2 randomly sampled combinations out of the 20 are evaluated
ridge_cv = RandomizedSearchCV(ridge, param_grid, cv=kf, n_iter=2)
ridge_cv.fit(X_train, y_train)
print(ridge_cv.best_params_, ridge_cv.best_score_)

{'solver': 'sag', 'alpha': 0.0001}

0.7529912278705785
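RandomizedSearchCV also accepts continuous distributions instead of fixed lists, so the cost is set by n_iter rather than by the grid size. A minimal sketch, assuming synthetic data from make_regression and scipy.stats.loguniform as the distribution for alpha (an illustrative choice; scipy is a scikit-learn dependency):

```python
from scipy.stats import loguniform
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import KFold, RandomizedSearchCV

X_train, y_train = make_regression(
    n_samples=200, n_features=8, noise=15.0, random_state=0
)

kf = KFold(n_splits=5, shuffle=True, random_state=42)
# alpha is sampled log-uniformly from [1e-4, 1e2]; solver from a list
param_distributions = {"alpha": loguniform(1e-4, 1e2), "solver": ["svd", "lsqr"]}

ridge_cv = RandomizedSearchCV(
    Ridge(), param_distributions, n_iter=10, cv=kf, random_state=42
)
ridge_cv.fit(X_train, y_train)

# 10 sampled candidates x 5 folds = 50 fits, regardless of the search space size
print(len(ridge_cv.cv_results_["params"]))  # 10
print(ridge_cv.best_params_)
```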

Evaluating on the test set

# Score of the best model (refitted on the full training set) on the held-back test set
test_score = ridge_cv.score(X_test, y_test)
print(test_score)

0.7564731534089224
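With the default refit=True, the search object refits the best combination on the whole training set, and its score method delegates to best_estimator_. A minimal self-contained sketch, assuming synthetic data from make_regression and a grid over alpha only:

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV, KFold, train_test_split

X, y = make_regression(n_samples=250, n_features=8, noise=15.0, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

kf = KFold(n_splits=5, shuffle=True, random_state=42)
ridge_cv = GridSearchCV(Ridge(), {"alpha": np.linspace(0.0001, 1, 10)}, cv=kf)
ridge_cv.fit(X_train, y_train)  # tuning only ever sees the training set

# The test set is used once, for the final evaluation
test_score = ridge_cv.score(X_test, y_test)
print(np.isclose(test_score, ridge_cv.best_estimator_.score(X_test, y_test)))  # True
```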

Let's practice!
