ReLU activation functions

Introduction to Deep Learning with PyTorch

Jasmin Ludolf

Senior Data Science Content Developer, DataCamp

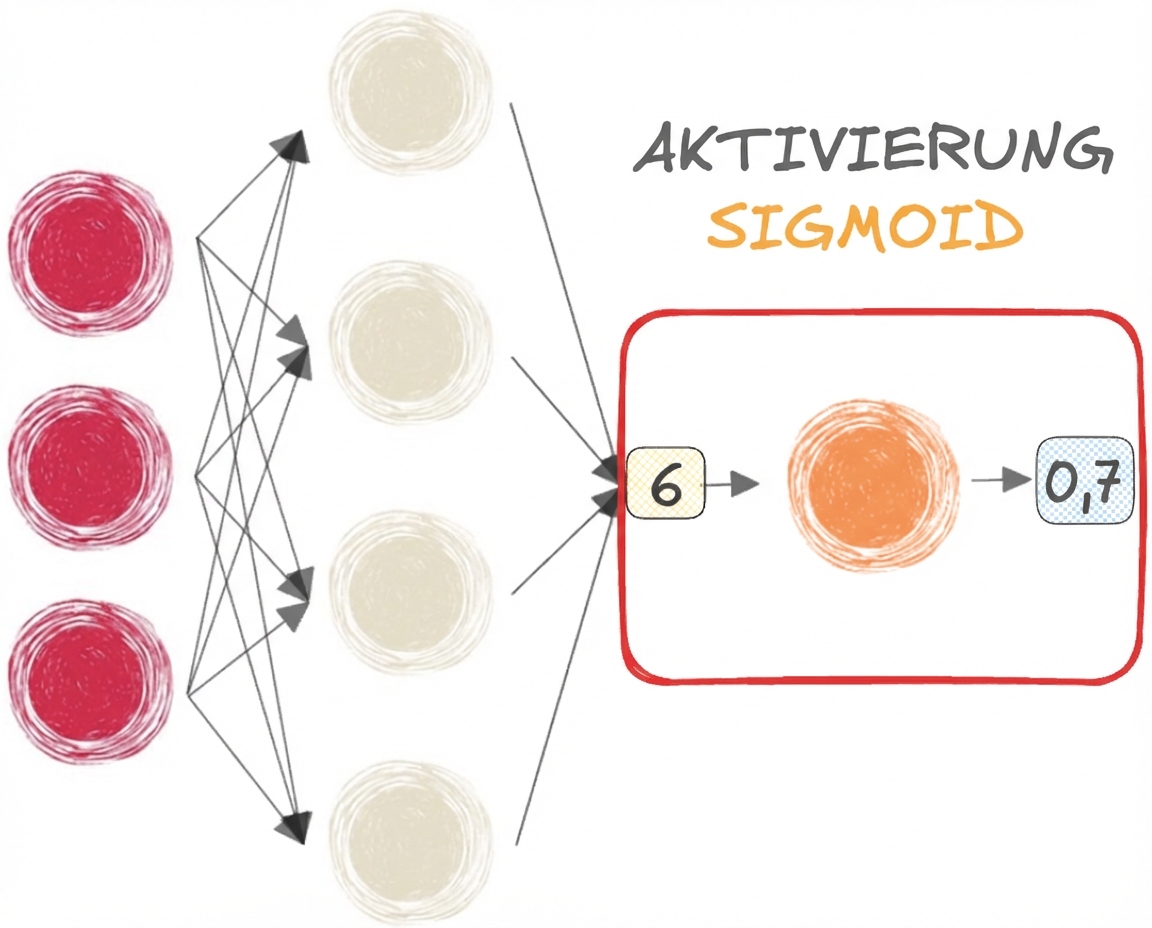

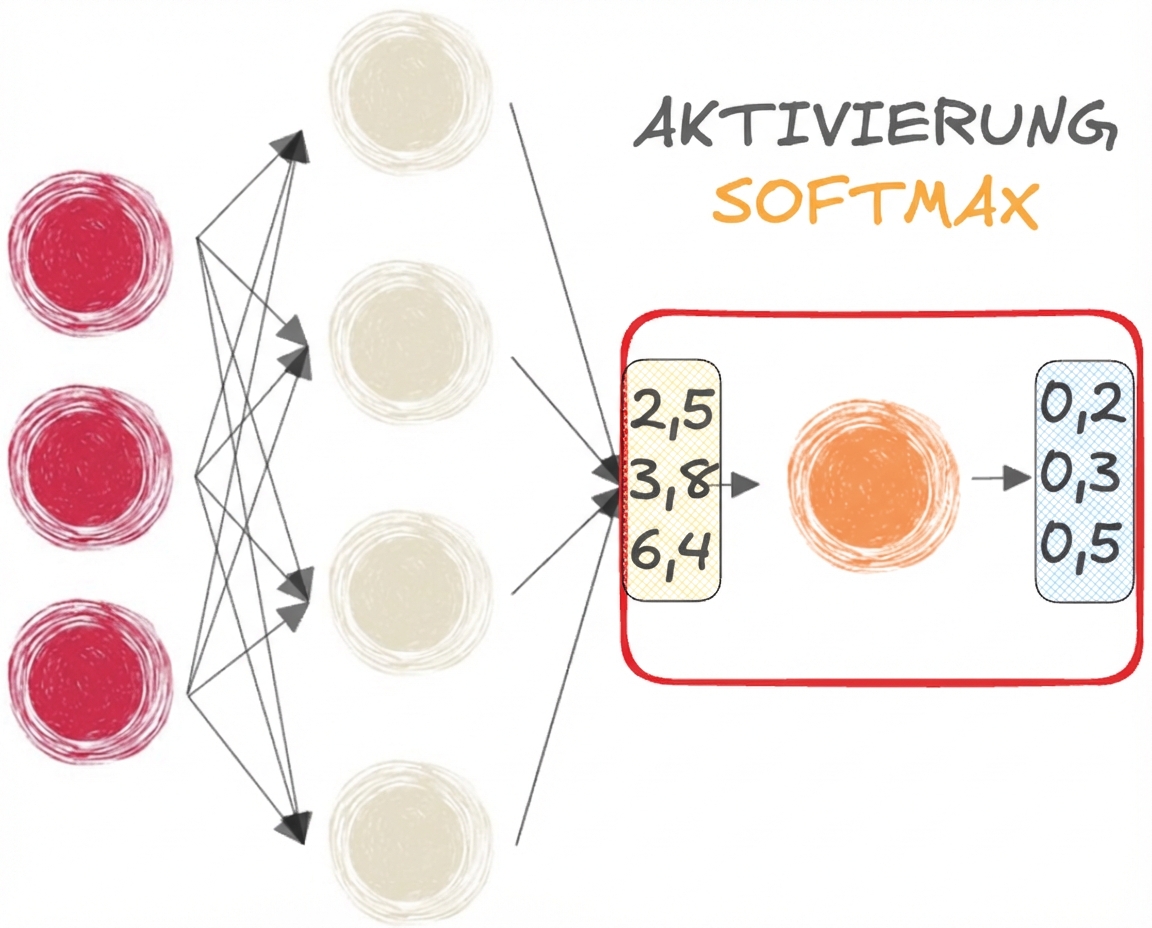

Sigmoid and softmax functions

- Sigmoid for binary classification

- Softmax for multi-class classification
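The split between the two functions can be sketched in PyTorch as follows. The logit values are arbitrary illustrative numbers. The sketch assumes one logit per sample for the binary case and one logit per class, with classes along the last dimension, for the multi-class case.

```python
import torch
import torch.nn as nn

# Binary classification: one logit per sample; sigmoid maps it to a probability in (0, 1)
sigmoid = nn.Sigmoid()
binary_logits = torch.tensor([[2.0], [-1.0]])
binary_probs = sigmoid(binary_logits)

# Multi-class classification: one logit per class; softmax normalizes over the last dimension
softmax = nn.Softmax(dim=-1)
multiclass_logits = torch.tensor([[1.0, 2.0, 0.5]])
class_probs = softmax(multiclass_logits)

print(binary_probs)
print(class_probs, class_probs.sum(dim=-1))  # class probabilities sum to 1
```

The `dim` argument tells softmax which axis holds the classes, so the probabilities in each row sum to 1.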

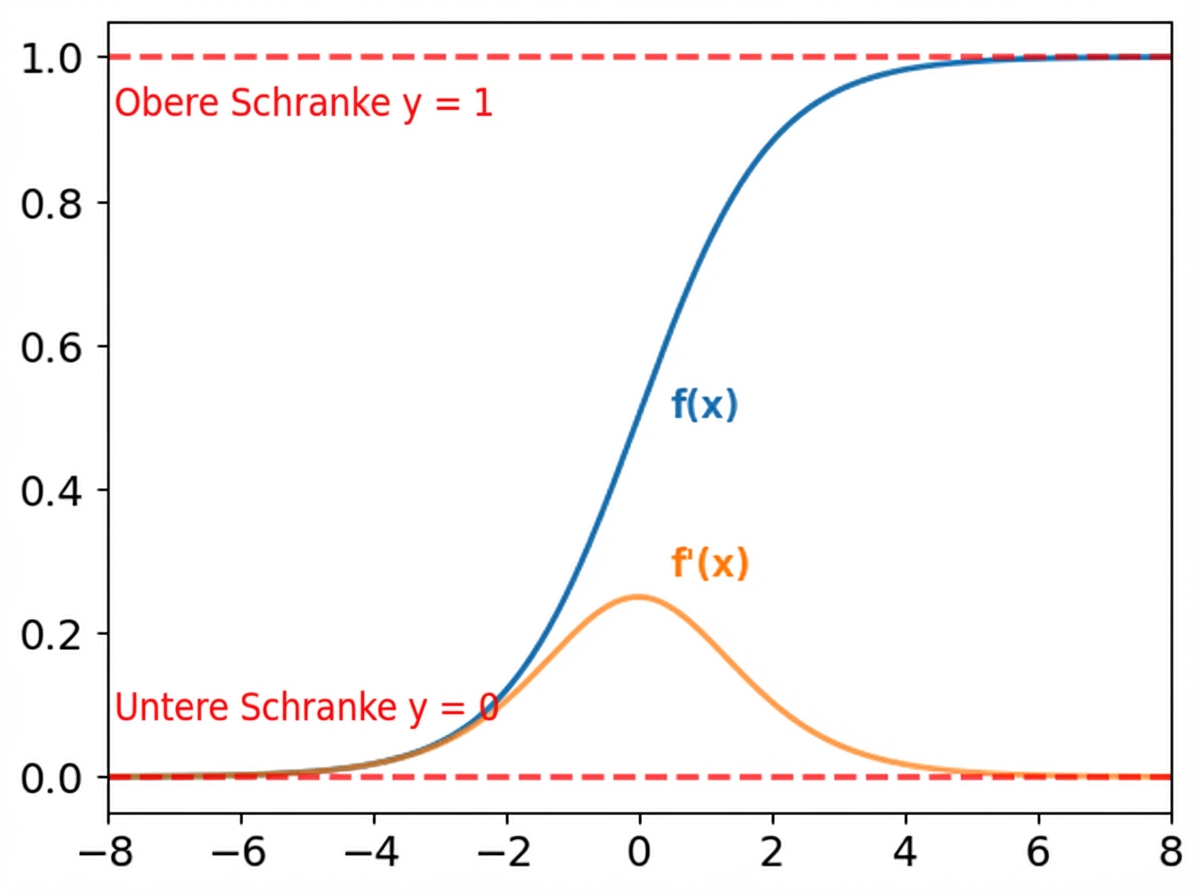

Limitations of the sigmoid and softmax functions

Sigmoid function:

- Outputs are bounded between 0 and 1

- Can be used anywhere in the network

Gradients:

- Very small for large positive and large negative values of x

- The function saturates in these regions, which leads to vanishing gradients
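Saturation is easy to observe with autograd. This minimal sketch uses the illustrative inputs 0 and ±10. It computes the sigmoid derivative σ(x)(1 − σ(x)), which peaks at 0.25 at x = 0 and drops to roughly 4.5e-5 at x = ±10.

```python
import torch

# Evaluate the sigmoid at x = 0 and at two large-magnitude inputs
x = torch.tensor([0.0, 10.0, -10.0], requires_grad=True)
y = torch.sigmoid(x)

# Backpropagate: x.grad holds d sigma / dx = sigma(x) * (1 - sigma(x)) per element
y.sum().backward()
print(x.grad)  # largest at x = 0, tiny at x = +/-10
```

In a deep network, several such small factors are multiplied together during backpropagation, so the gradient reaching early layers can become vanishingly small.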


The softmax function also suffers from saturation
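One way to see softmax saturation is to compare its Jacobian, diag(p) − p pᵀ, for balanced logits against logits where one class dominates. This is a sketch with arbitrary example logits. It uses `torch.autograd.functional.jacobian` to compute the derivatives.

```python
import torch
from torch.autograd.functional import jacobian

def softmax(z):
    # Softmax over a single vector of logits
    return torch.softmax(z, dim=-1)

# Balanced logits: probabilities are spread out, so the output reacts to changes in z
balanced = torch.tensor([1.0, 1.0, 1.0])
# One dominant logit: the output is almost one-hot, i.e. saturated
dominant = torch.tensor([20.0, 0.0, 0.0])

# 3x3 Jacobians d softmax(z) / dz
jac_balanced = jacobian(softmax, balanced)
jac_dominant = jacobian(softmax, dominant)

print(jac_balanced.abs().max())  # on the order of 0.2
print(jac_dominant.abs().max())  # on the order of 1e-9
```

When the output is close to one-hot, every entry of the Jacobian is close to zero, so almost no gradient flows back to the logits.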

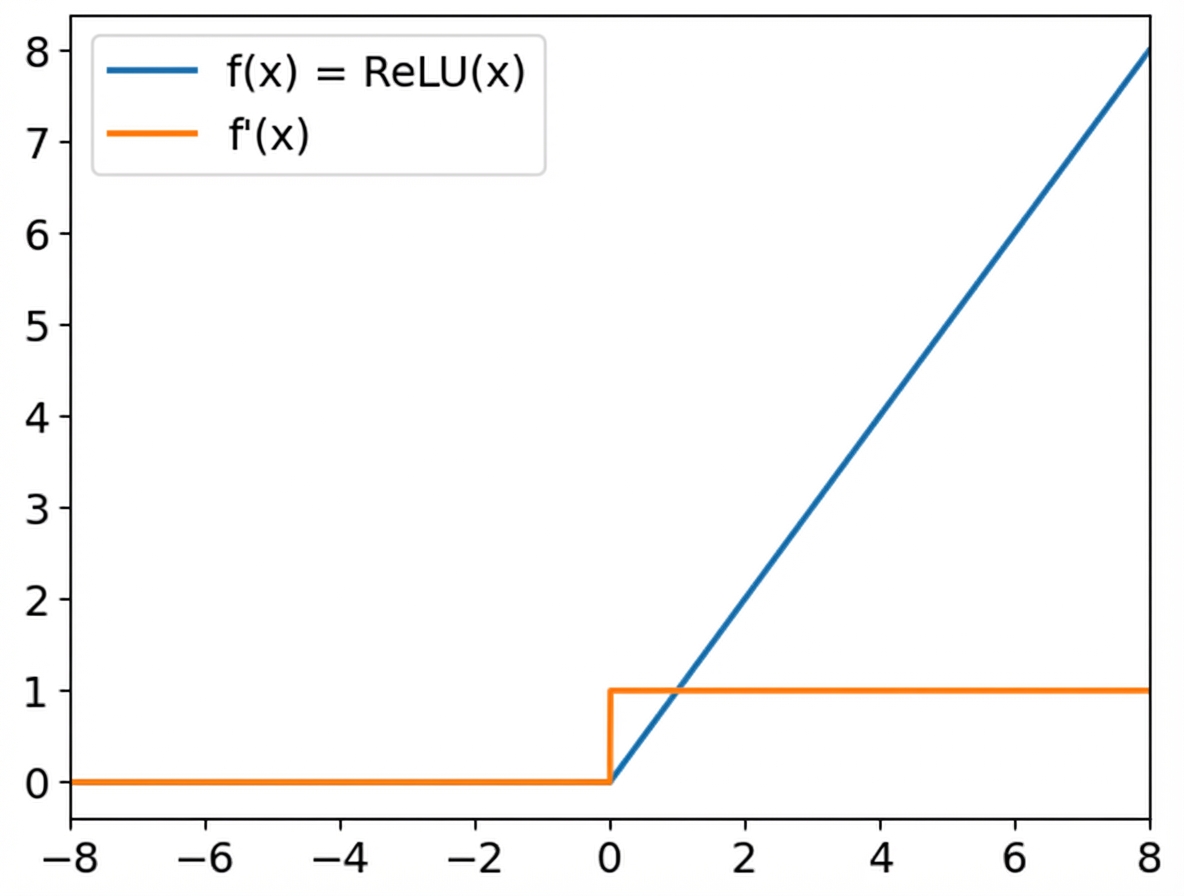

ReLU

Rectified Linear Unit (ReLU)

f(x) = max(x, 0)

- For positive inputs: the output equals the input

- For negative inputs: the output is 0

- Helps overcome vanishing gradients, because the gradient is 1 for every positive input and does not shrink as x grows

In PyTorch:

import torch.nn as nn
relu = nn.ReLU()
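A short sketch of `nn.ReLU` on illustrative inputs shows both the forward pass and its gradient. Negative inputs map to 0 with gradient 0. Positive inputs pass through unchanged with gradient 1, even for a large input like 10. The derivative at exactly x = 0 is undefined, and PyTorch uses a convention there, so the checks skip that element.

```python
import torch
import torch.nn as nn

relu = nn.ReLU()

# Mix of negative, zero and positive inputs
x = torch.tensor([-3.0, -0.5, 0.0, 2.0, 10.0], requires_grad=True)
y = relu(x)

# Gradient of sum(ReLU(x)): 1 where x > 0, 0 where x < 0
y.sum().backward()
print(y)
print(x.grad)
```

Unlike the sigmoid, the gradient at x = 10 is still 1, so large activations do not shrink the signal during backpropagation.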

Leaky ReLU

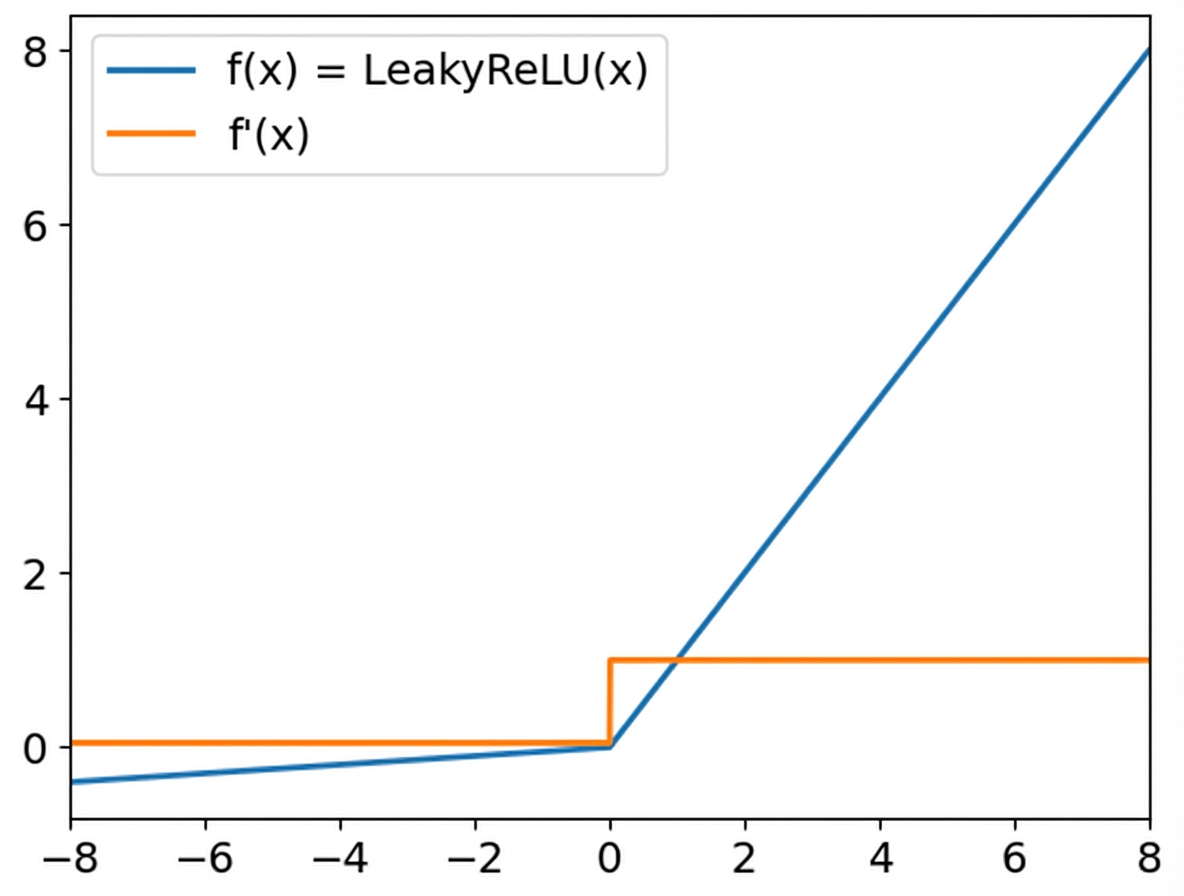

Leaky ReLU:

- Positive inputs are handled exactly as in ReLU

- Negative inputs are multiplied by a small coefficient (default 0.01)

- Gradients for negative inputs are therefore non-zero

In PyTorch:

leaky_relu = nn.LeakyReLU(negative_slope=0.05)
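This sketch applies Leaky ReLU with `negative_slope=0.05` to a few arbitrary inputs. Negative inputs are scaled by 0.05 and also receive a gradient of 0.05 instead of 0. The last two lines show the default slope of 0.01 when `negative_slope` is not given.

```python
import torch
import torch.nn as nn

leaky_relu = nn.LeakyReLU(negative_slope=0.05)

x = torch.tensor([-4.0, -1.0, 2.0], requires_grad=True)
y = leaky_relu(x)  # negative inputs scaled by 0.05, positive inputs unchanged

# Gradient is 0.05 for negative inputs and 1 for positive inputs
y.sum().backward()
print(y)
print(x.grad)

# Without arguments, negative_slope defaults to 0.01
default_leaky = nn.LeakyReLU()
default_out = default_leaky(torch.tensor([-1.0]))
print(default_out)
```

Because the gradient for negative inputs never becomes exactly zero, such units can still receive updates during training.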

Let's practice!
