The intuition behind tree-based methods

Supervised Learning in R: Regression

Nina Zumel and John Mount

Win-Vector, LLC

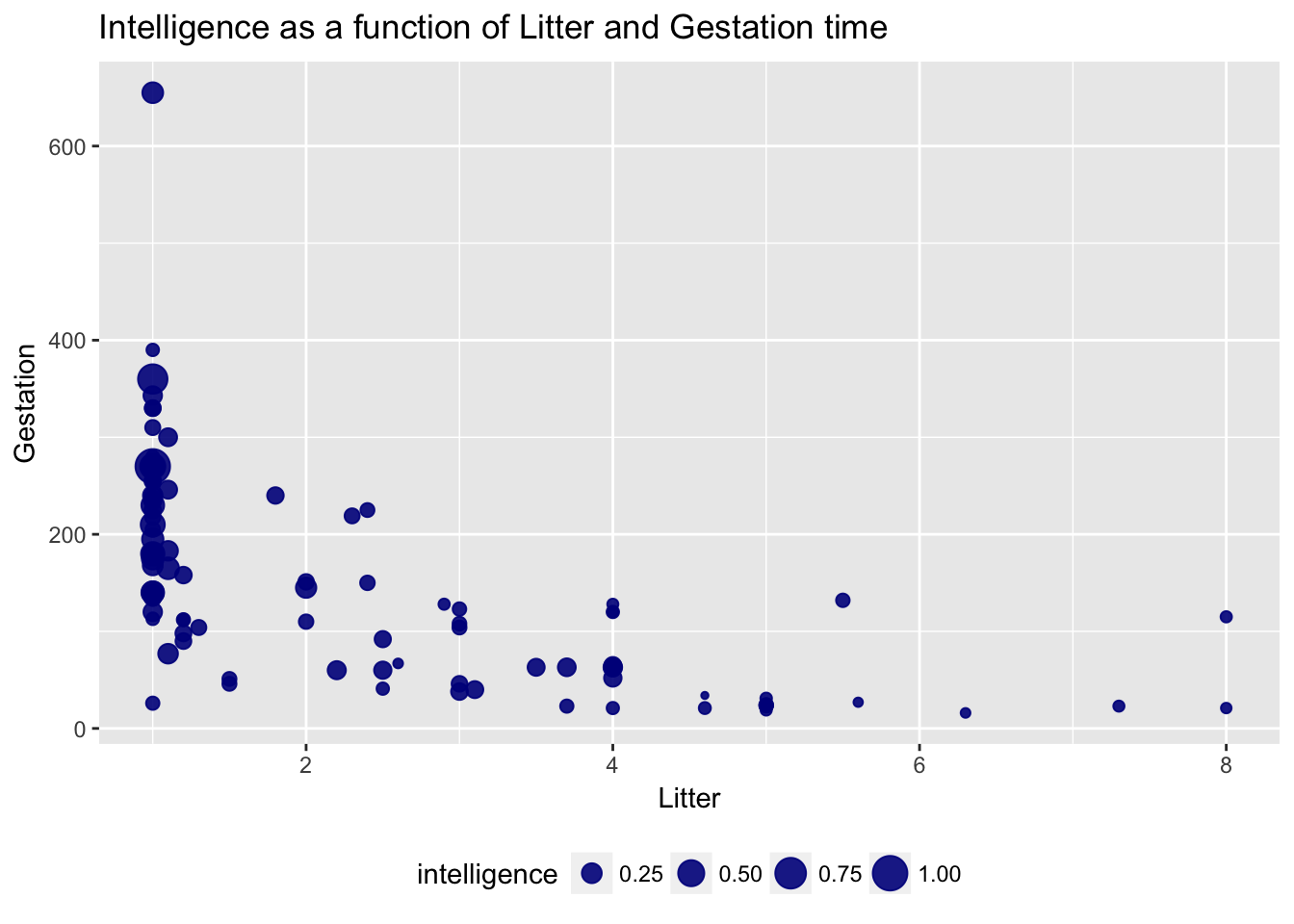

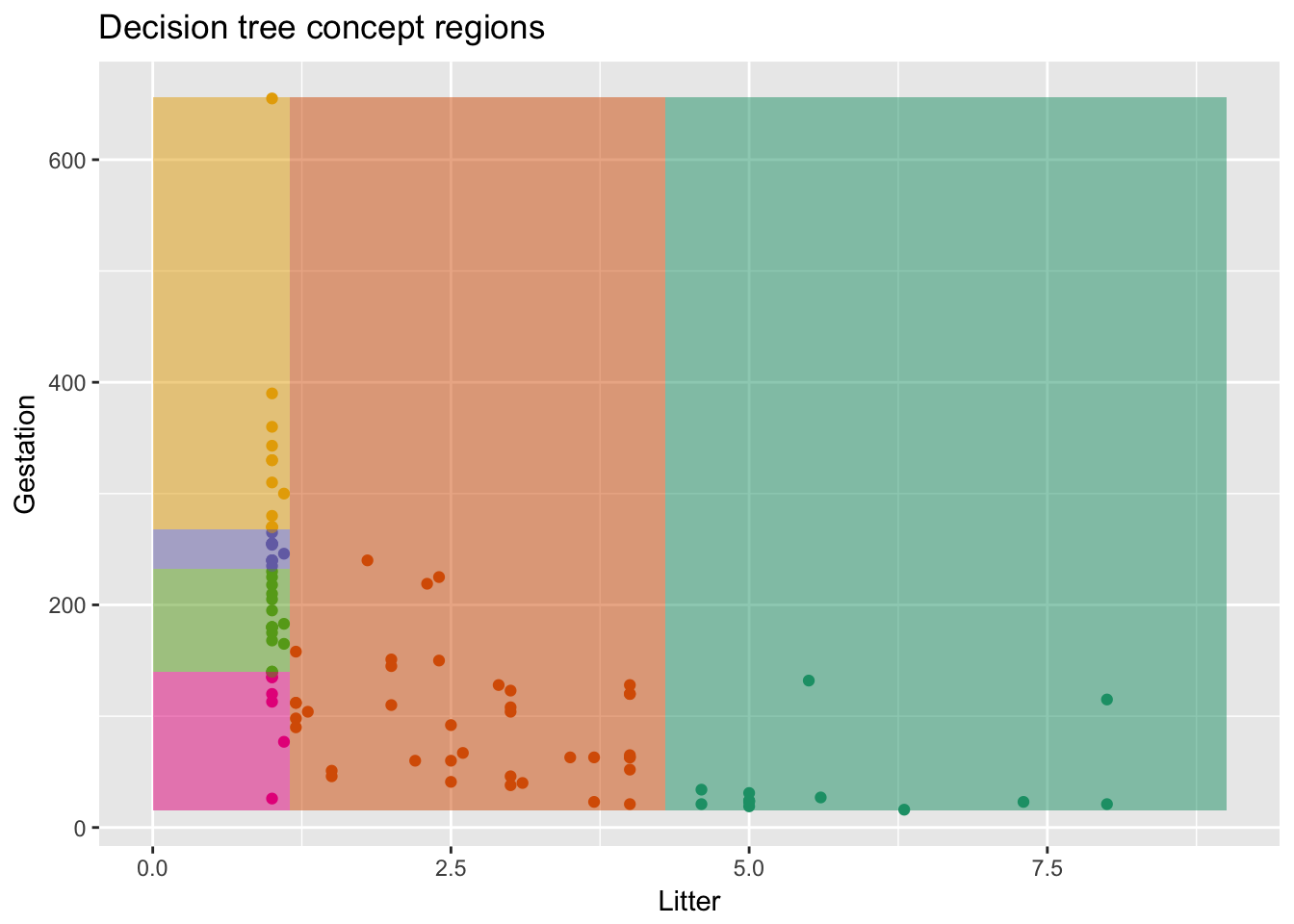

Example: Predict animal intelligence from Gestation Time and Litter Size

Decision Trees

Rules of the form:

- if a AND b AND c THEN y

Non-linear concepts:

- intervals

- non-monotonic relationships

Non-additive interactions:

- AND: similar to multiplication
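As a minimal illustration (not the course's code), a tree rule can be read as a product of 0/1 indicators. An interval is two threshold tests joined by AND, the AND becomes a multiplication, and adding a few interval rules gives a non-monotonic shape. The function names and thresholds (`indicator`, `interval_rule`, `bump`, 2.0, 5.0) are hypothetical.

```python
# Sketch: tree rules "IF a AND b THEN y" viewed as products of 0/1 indicators.
# All thresholds and values below are hypothetical, for illustration only.

def indicator(condition: bool) -> int:
    """Return 1 if the condition holds, else 0."""
    return 1 if condition else 0

def interval_rule(x: float, lo: float, hi: float, value: float) -> float:
    """IF x >= lo AND x < hi THEN value, else 0.

    The interval is two threshold tests joined by AND; the AND is the
    product of the two indicators.
    """
    return indicator(x >= lo) * indicator(x < hi) * value

def bump(x: float) -> float:
    """Non-monotonic function built from interval rules:
    low, then high, then low again as x increases."""
    return (interval_rule(x, float("-inf"), 2.0, 1.0)
            + interval_rule(x, 2.0, 5.0, 3.0)
            + interval_rule(x, 5.0, float("inf"), 1.0))

print([bump(x) for x in [0.0, 3.0, 6.0]])  # [1.0, 3.0, 1.0]
```

A linear model can only move in one direction as x grows; the sum of interval rules goes up and then back down.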

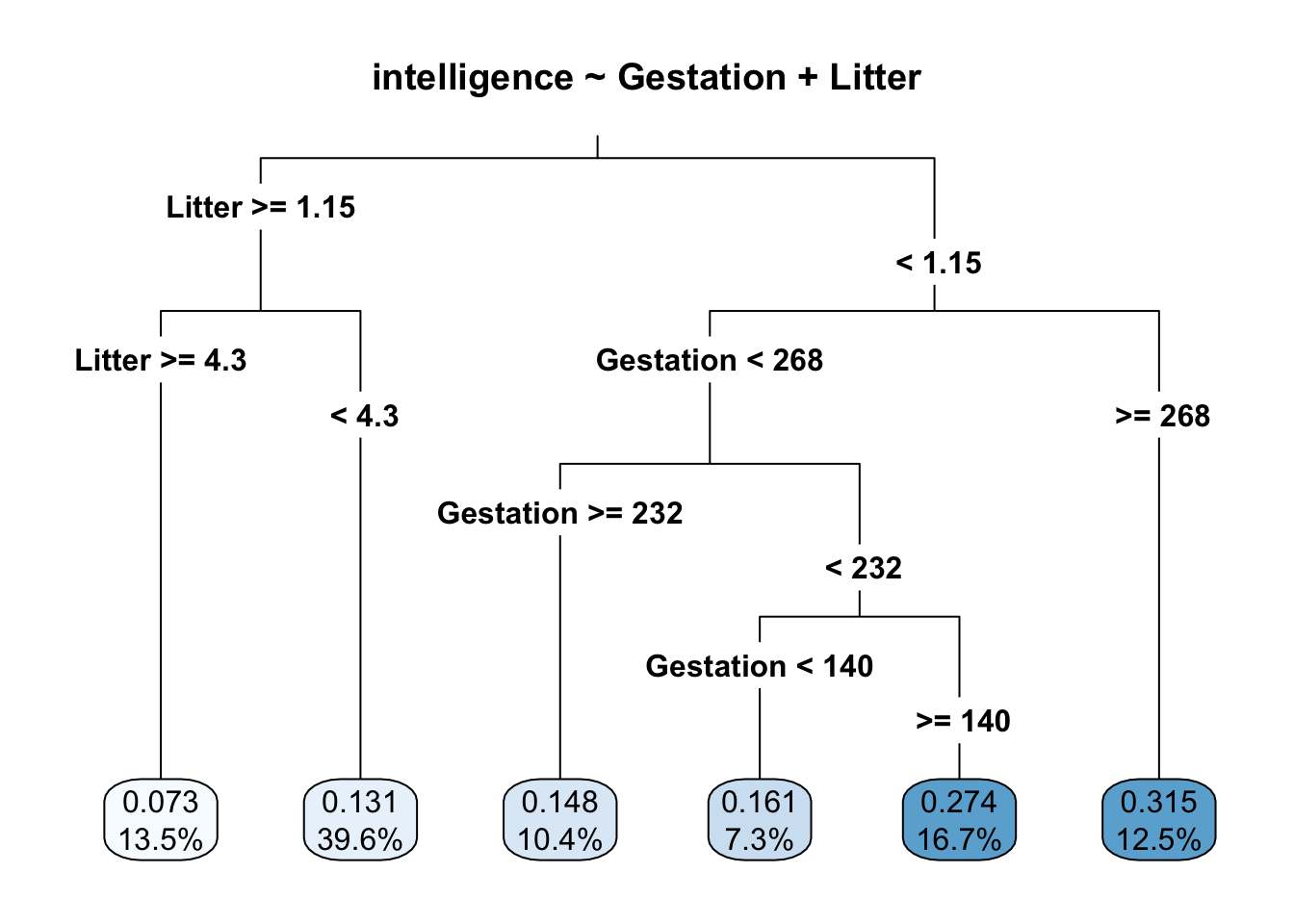

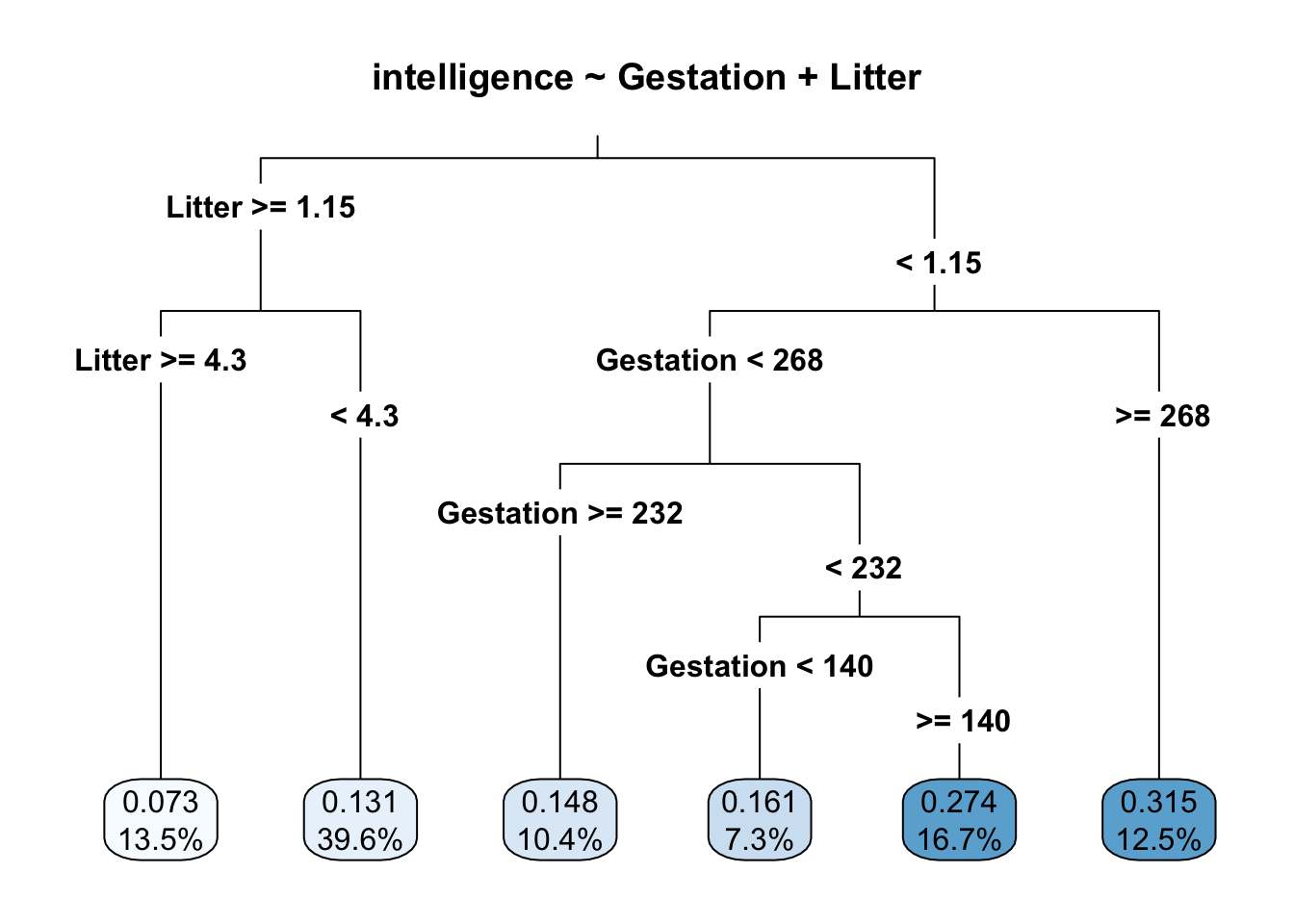

- IF Litter < 1.15 AND Gestation $\ge$ 268 $\rightarrow$ intelligence = 0.315

- IF Litter IN [1.15, 4.3) $\rightarrow$ intelligence = 0.131
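The two rules above can be written directly as code. This sketch uses a hypothetical function name, `predict_intelligence`, and covers only the two leaves listed; inputs outside both regions return `None` because they fall in other leaves of the tree that are not listed here.

```python
from typing import Optional

def predict_intelligence(litter: float, gestation: float) -> Optional[float]:
    """Apply the two example tree rules; None means 'another leaf'."""
    # Rule 1: IF Litter < 1.15 AND Gestation >= 268 -> 0.315
    if litter < 1.15 and gestation >= 268:
        return 0.315
    # Rule 2: IF Litter IN [1.15, 4.3) -> 0.131
    if 1.15 <= litter < 4.3:
        return 0.131
    # Regions not covered by these two rules belong to other leaves.
    return None

print(predict_intelligence(1.0, 280))  # 0.315
print(predict_intelligence(2.0, 100))  # 0.131
print(predict_intelligence(1.0, 200))  # None
```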

Pro: Trees Have an Expressive Concept Space

| Model | RMSE |
|---|---|
| linear | 0.1200419 |
| tree | 0.1072732 |
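The table compares models by root mean squared error (RMSE) on the animal intelligence data; lower is better. A minimal sketch of the computation, with a hypothetical helper name `rmse` and made-up inputs:

```python
import math
from typing import Sequence

def rmse(predictions: Sequence[float], actuals: Sequence[float]) -> float:
    """Root mean squared error: sqrt(mean((prediction - actual)^2))."""
    if len(predictions) != len(actuals) or not predictions:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(p - a) ** 2 for p, a in zip(predictions, actuals)]
    return math.sqrt(sum(squared) / len(squared))

print(rmse([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))  # 0.0
print(rmse([0.0, 0.0], [3.0, 4.0]))            # sqrt(12.5), about 3.5355
```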

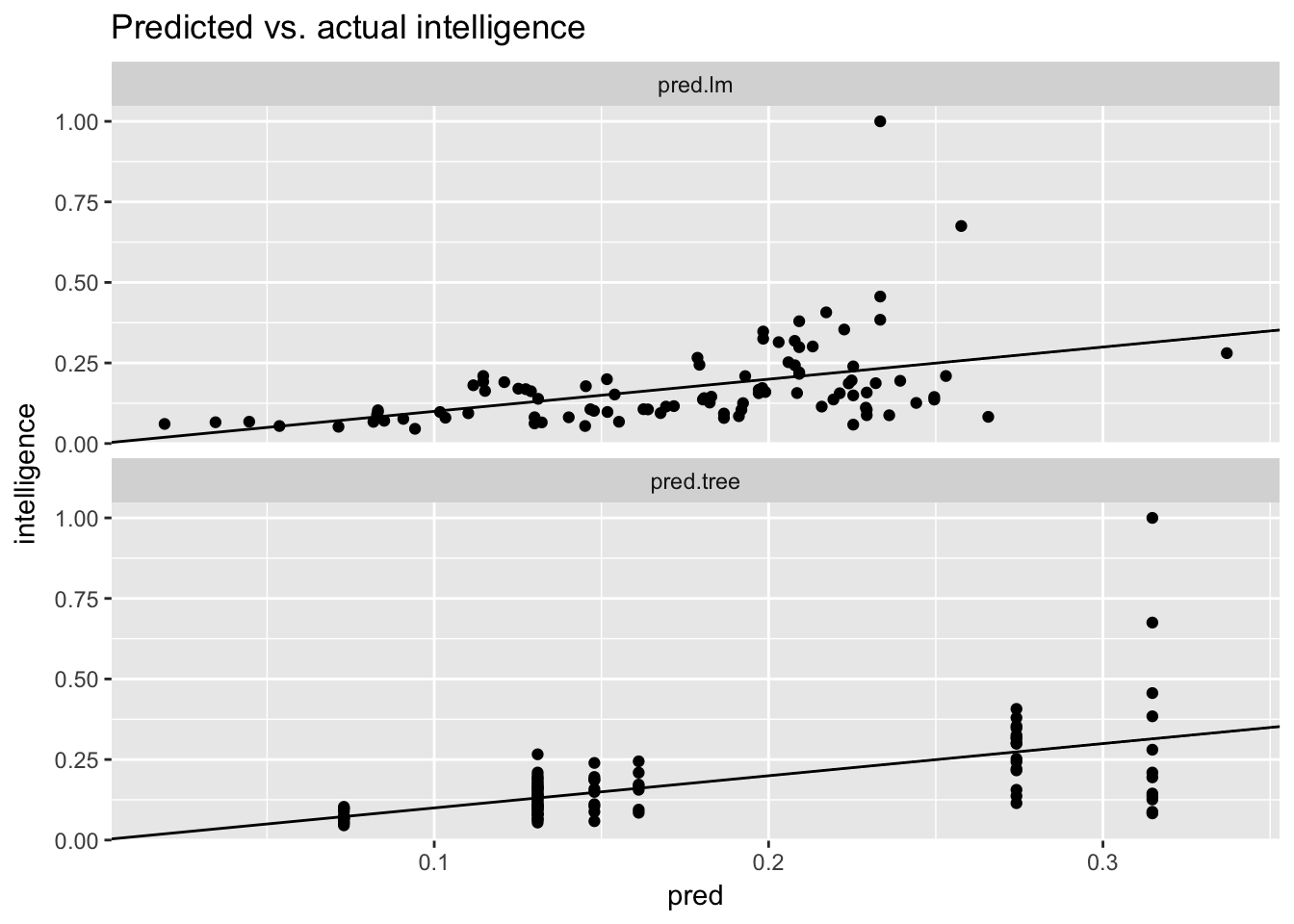

Con: Coarse-Grained Predictions

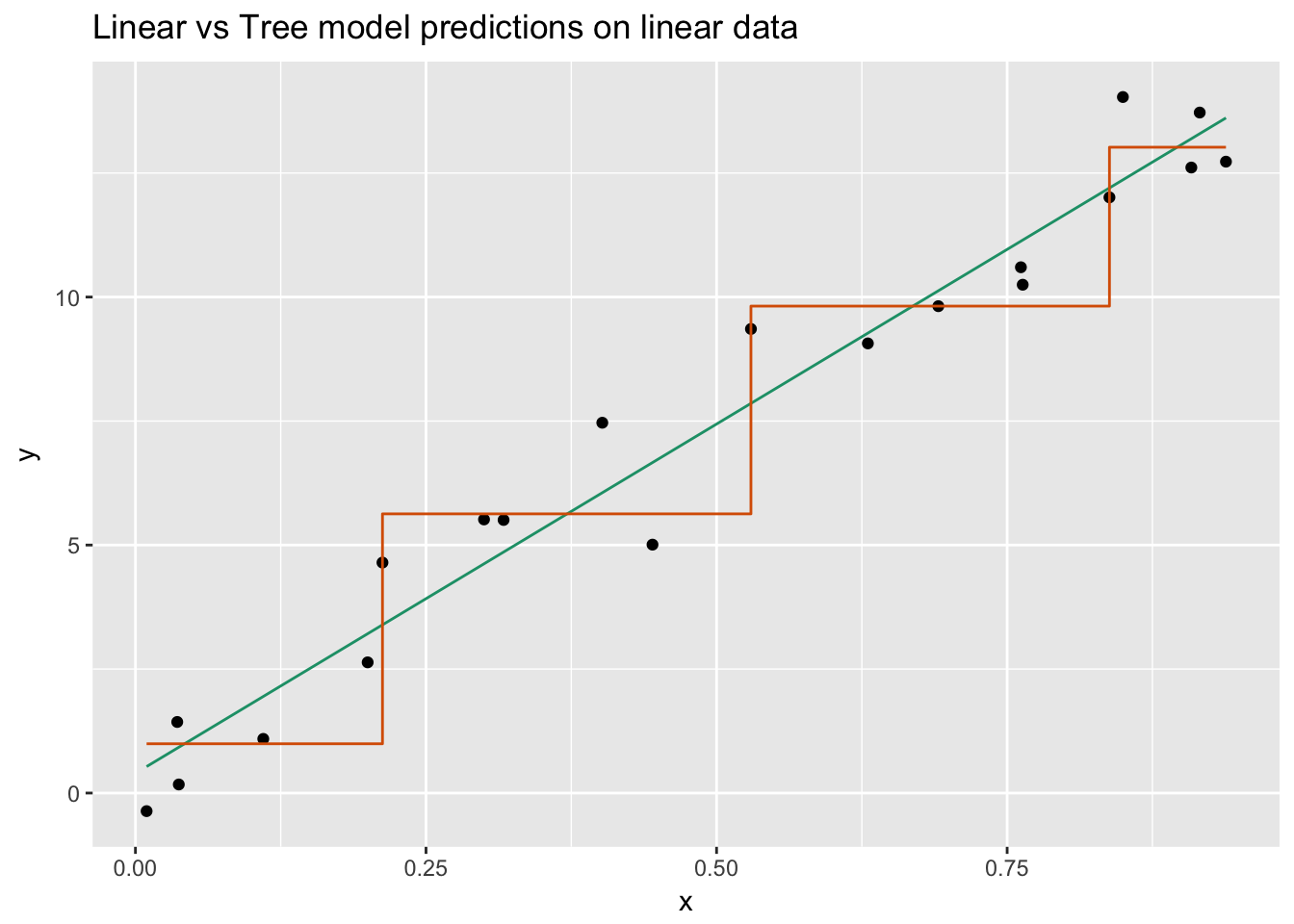

It's Hard for Trees to Express Linear Relationships

Trees Predict Axis-Aligned Regions

Each color is a different predicted value

It's Hard to Express Lines with Steps
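A sketch of why steps struggle with lines: approximate y = x on [0, 1] by a piecewise-constant function. Equal-width bins stand in for a tree's leaves here (an assumption; a real tree chooses its split points from the data), and the helper `step_approximation` is hypothetical. More steps shrink the worst-case error, but any finite number of steps still leaves a staircase.

```python
from typing import List

def step_approximation(xs: List[float], ys: List[float], n_bins: int) -> List[float]:
    """Piecewise-constant fit: split [min(xs), max(xs)] into n_bins equal-width
    bins and predict the mean y of each bin (a stand-in for tree leaves)."""
    lo, hi = min(xs), max(xs)
    width = (hi - lo) / n_bins

    def bin_of(x: float) -> int:
        # Clamp so that x == hi falls in the last bin.
        return min(int((x - lo) / width), n_bins - 1)

    sums = [0.0] * n_bins
    counts = [0] * n_bins
    for x, y in zip(xs, ys):
        b = bin_of(x)
        sums[b] += y
        counts[b] += 1
    means = [s / c if c else 0.0 for s, c in zip(sums, counts)]
    return [means[bin_of(x)] for x in xs]

xs = [i / 100 for i in range(101)]  # 0.00, 0.01, ..., 1.00
ys = list(xs)                        # the target is the line y = x

for n_bins in (2, 4, 16):
    preds = step_approximation(xs, ys, n_bins)
    max_err = max(abs(p - y) for p, y in zip(preds, ys))
    print(n_bins, "steps, worst-case error", round(max_err, 3))
```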

Other Issues with Trees

- Tree with too many splits (deep tree):

  - Too complex: danger of overfitting

- Tree with too few splits (shallow tree):

  - Predictions too coarse-grained
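The depth trade-off can be sketched with a tiny greedy regression tree on one feature. This is a hypothetical teaching implementation (`fit_tree`, `predict`, and the data are made up), not the implementation used by any particular R package. A depth-1 tree gives only two distinct predictions; a deep tree memorizes the training data and reaches zero training error, which is a warning sign of overfitting. Overfitting itself shows up as worse error on new data, which this sketch does not measure.

```python
from typing import List, Union

Tree = Union[float, tuple]  # a leaf is a float; a node is (threshold, left, right)

def sse(values: List[float]) -> float:
    """Sum of squared deviations from the mean."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)

def fit_tree(xs: List[float], ys: List[float], max_depth: int) -> Tree:
    """Greedy 1-D regression tree: each split picks the threshold t that
    minimises SSE(left) + SSE(right), where x < t goes left."""
    if max_depth == 0 or len(set(xs)) < 2:
        return sum(ys) / len(ys)  # leaf: predict the mean
    best_t, best_cost = None, float("inf")
    for t in sorted(set(xs))[1:]:  # every candidate leaves both sides non-empty
        left = [y for x, y in zip(xs, ys) if x < t]
        right = [y for x, y in zip(xs, ys) if x >= t]
        cost = sse(left) + sse(right)
        if cost < best_cost:
            best_t, best_cost = t, cost
    li = [i for i, x in enumerate(xs) if x < best_t]
    ri = [i for i, x in enumerate(xs) if x >= best_t]
    return (best_t,
            fit_tree([xs[i] for i in li], [ys[i] for i in li], max_depth - 1),
            fit_tree([xs[i] for i in ri], [ys[i] for i in ri], max_depth - 1))

def predict(tree: Tree, x: float) -> float:
    """Walk from the root to a leaf."""
    while isinstance(tree, tuple):
        t, left, right = tree
        tree = left if x < t else right
    return tree

xs = [float(i) for i in range(10)]
ys = [0.1, 0.9, 2.2, 2.8, 4.1, 5.3, 5.9, 7.2, 7.8, 9.1]  # made up: roughly y = x plus noise

for depth in (1, 2, 10):
    preds = [predict(fit_tree(xs, ys, depth), x) for x in xs]
    train_rmse = (sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)) ** 0.5
    print(depth, len(set(preds)), round(train_rmse, 3))
```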

Ensembles of Trees

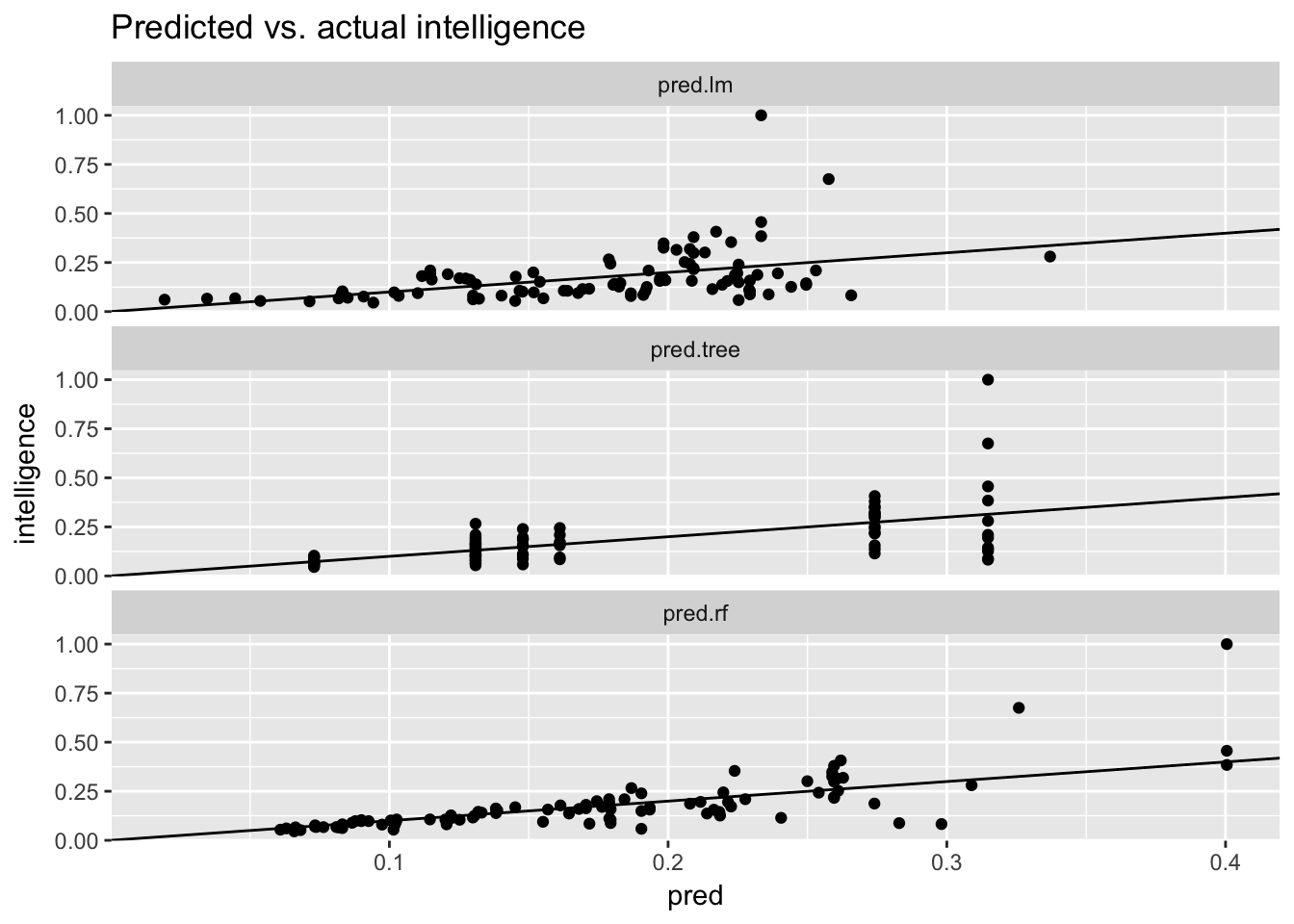

Ensembles Give Finer-grained Predictions than Single Trees
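A minimal sketch of why averaging helps: each one-split tree (a stump) predicts only two values, but averaging stumps whose split points differ produces more levels. The thresholds are hand-picked hypothetical values; in a random forest the differences between trees come from resampling the training data and randomly choosing candidate features, not from manual choices.

```python
from typing import Callable, List

def stump(threshold: float, low: float, high: float) -> Callable[[float], float]:
    """A one-split tree: predict `low` when x < threshold, else `high`."""
    return lambda x: low if x < threshold else high

# Three hypothetical stumps with staggered split points (not fitted to data).
trees: List[Callable[[float], float]] = [
    stump(0.25, 0.0, 1.0), stump(0.50, 0.0, 1.0), stump(0.75, 0.0, 1.0)]

def ensemble(x: float) -> float:
    """Average the individual trees' predictions."""
    return sum(t(x) for t in trees) / len(trees)

grid = [i / 100 for i in range(101)]
single_levels = sorted(set(trees[0](x) for x in grid))
ensemble_levels = sorted(set(ensemble(x) for x in grid))
print(single_levels)    # [0.0, 1.0]
print(ensemble_levels)  # four levels: 0, 1/3, 2/3, 1
```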

Ensemble Model Fits Animal Intelligence Data Better than Single Tree

| Model | RMSE |
|---|---|
| linear | 0.1200419 |
| tree | 0.1072732 |
| random forest | 0.0901681 |
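The improvement in the table can be expressed as a relative reduction in RMSE, (baseline - model) / baseline. The RMSE values below are copied from the table; the helper name `relative_reduction` is hypothetical.

```python
# RMSE values copied from the table (animal intelligence data).
rmse = {"linear": 0.1200419, "tree": 0.1072732, "random forest": 0.0901681}

def relative_reduction(baseline: float, model: float) -> float:
    """Fraction by which `model` lowers RMSE relative to `baseline`."""
    return (baseline - model) / baseline

for base, model in [("linear", "tree"), ("tree", "random forest"),
                    ("linear", "random forest")]:
    r = relative_reduction(rmse[base], rmse[model])
    print(f"{model} vs {base}: {r:.1%} lower RMSE")
```

This works out to about 10.6% for the tree over the linear model, 15.9% for the random forest over the tree, and 24.9% for the random forest over the linear model.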
