Gradient boosting machines

Supervised Learning in R: Regression

Nina Zumel and John Mount

Win-Vector, LLC

How Gradient Boosting Works

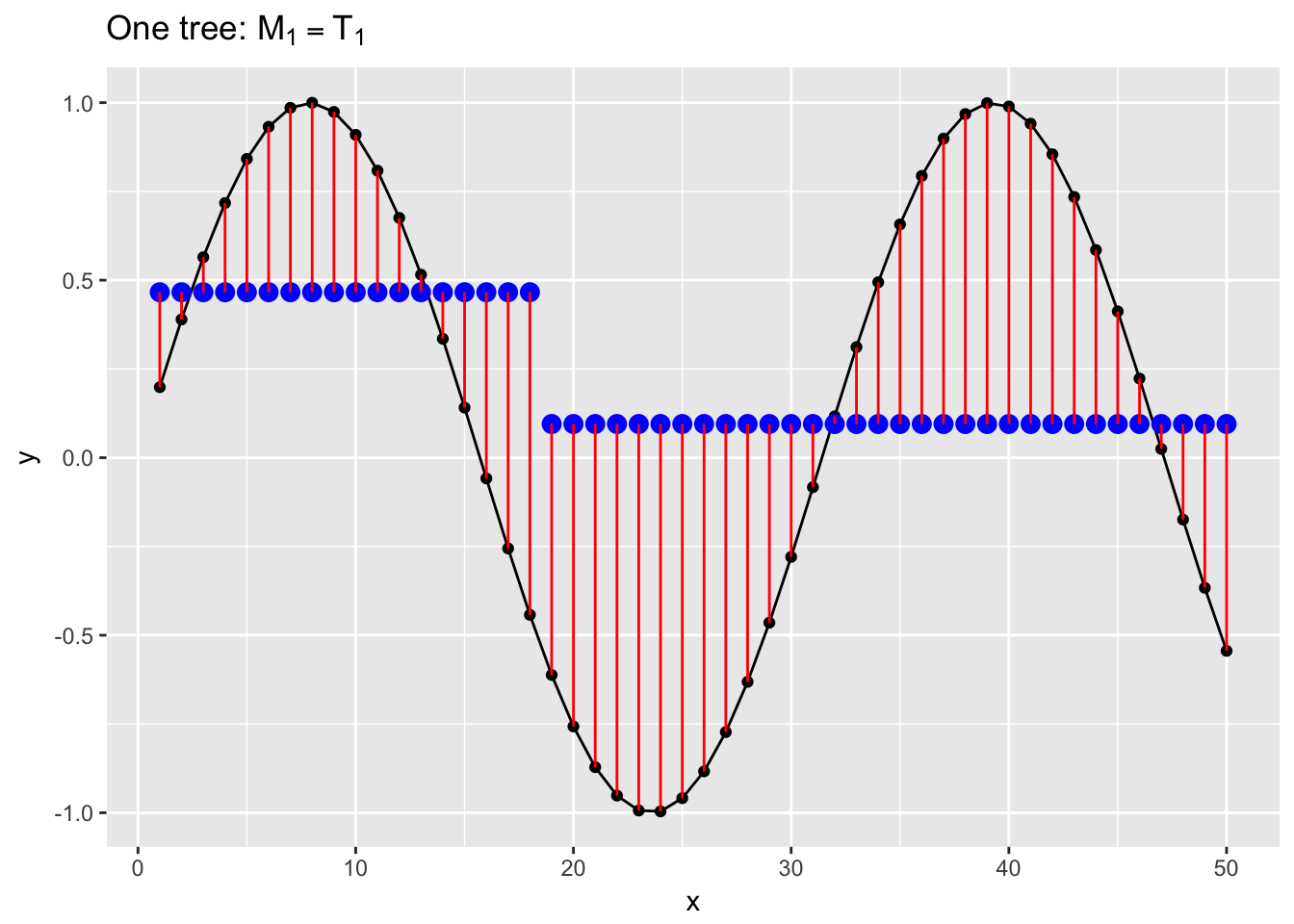

- Fit a shallow tree $T_1$ to the data: $M_1 = T_1$

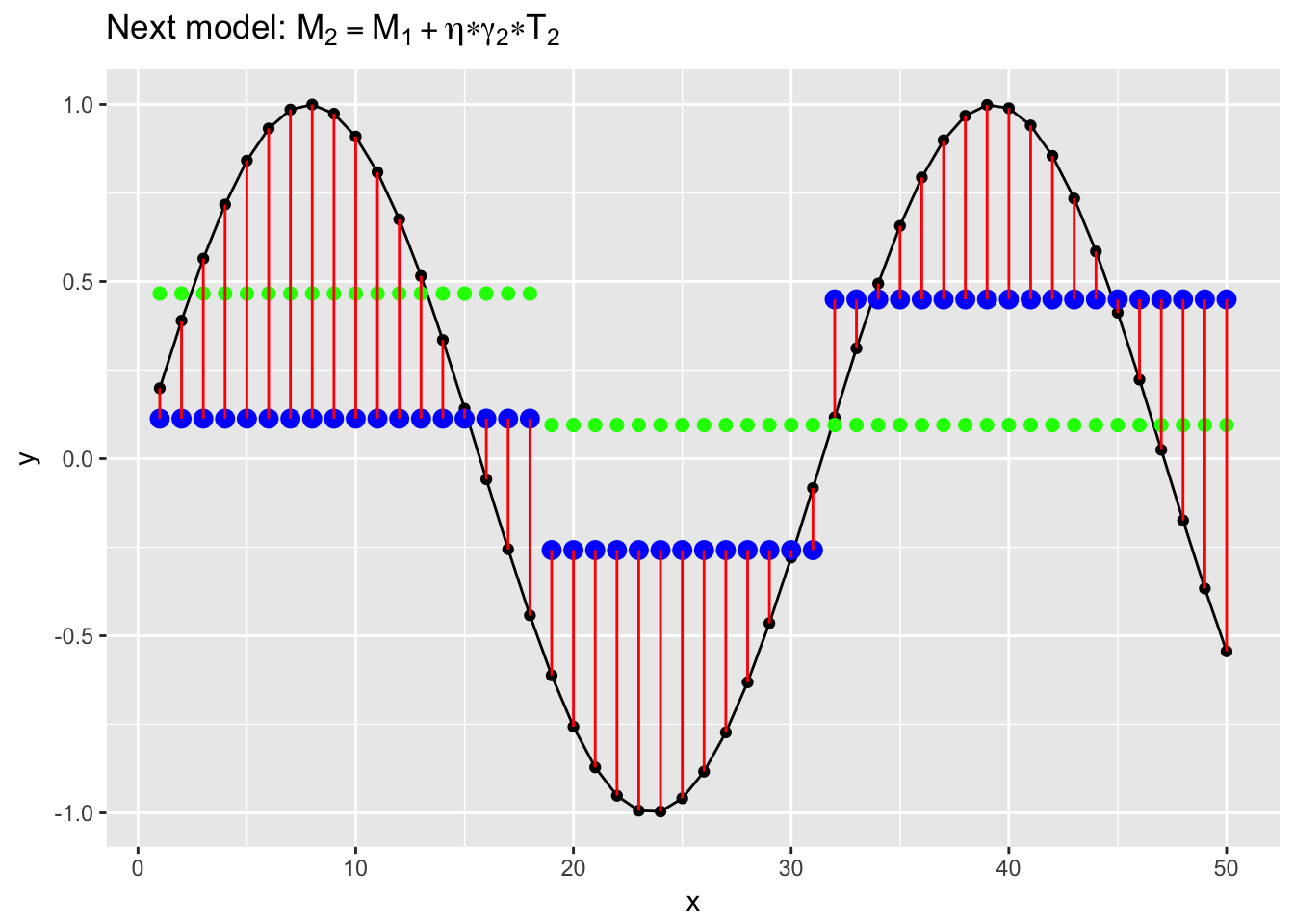

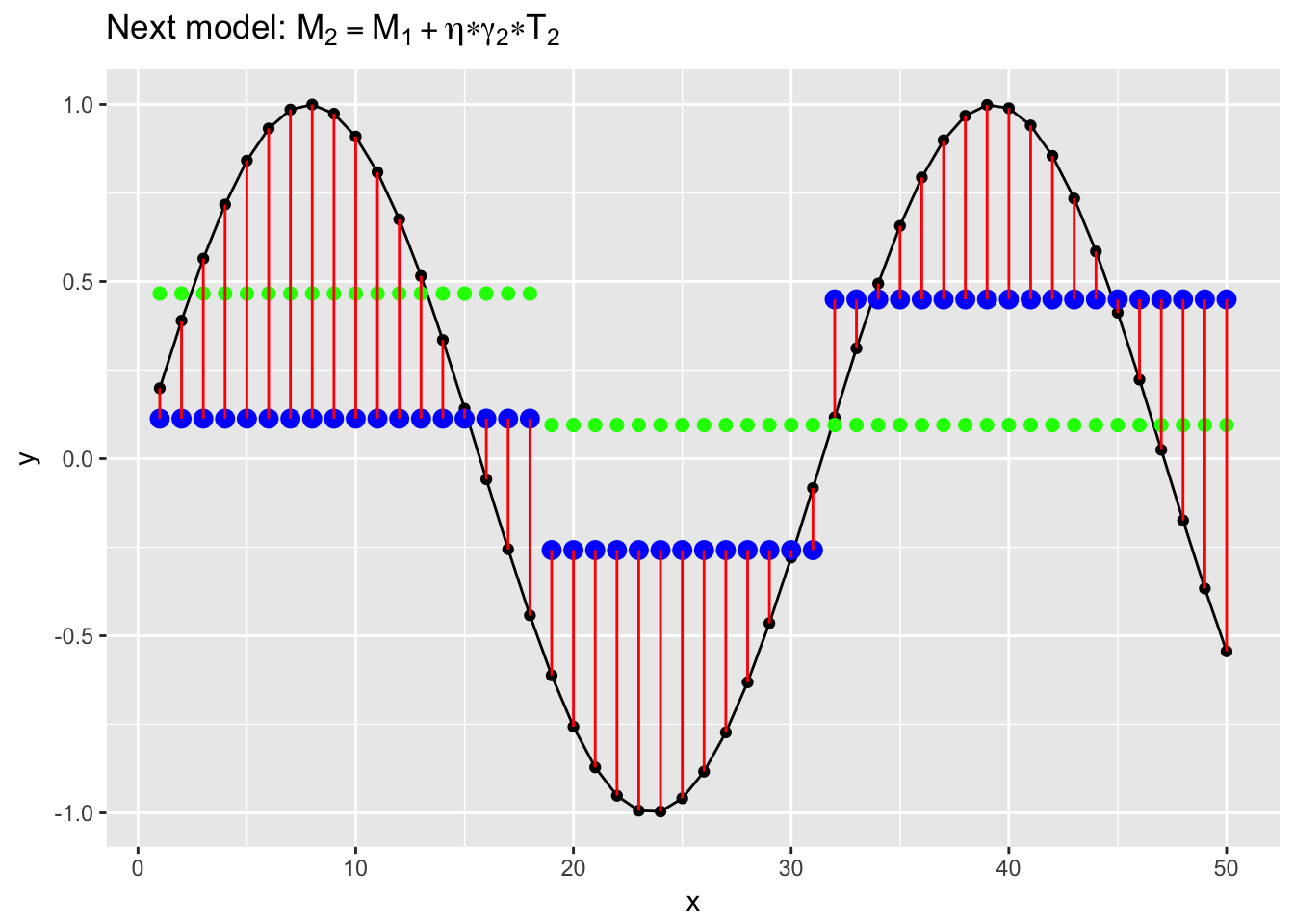

- Fit a tree $T_2$ to the residuals of $M_1$. Find $\gamma$ such that $M_2 = M_1 + \gamma T_2$ is the best fit to the data
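The two steps above can be sketched in base R. This is a minimal illustration, not xgboost's implementation: it assumes squared-error loss, a single numeric predictor, and depth-1 trees ("stumps"). The helper `fit_stump()` and the simulated data are hypothetical. Under these assumptions the least-squares $\gamma$ comes out to exactly 1, because each leaf of $T_2$ already predicts the mean residual in that leaf.

```r
# Minimal sketch of boosting steps 1 and 2, assuming squared-error loss,
# one numeric predictor, and depth-1 trees. fit_stump() is hypothetical.

fit_stump <- function(x, y) {
  # Candidate splits: midpoints between consecutive sorted unique x values
  xs <- sort(unique(x))
  splits <- (head(xs, -1) + tail(xs, -1)) / 2
  best <- list(sse = Inf)
  for (s in splits) {
    left <- x <= s
    mu_l <- mean(y[left])
    mu_r <- mean(y[!left])
    sse <- sum((y[left] - mu_l)^2) + sum((y[!left] - mu_r)^2)
    if (sse < best$sse) {
      best <- list(sse = sse, split = s, left = mu_l, right = mu_r)
    }
  }
  # Return the stump as a prediction function: each leaf predicts its mean
  function(newx) ifelse(newx <= best$split, best$left, best$right)
}

set.seed(1)
x <- runif(200, 0, 10)
y <- sin(x) + rnorm(200, sd = 0.3)   # simulated data for illustration

T1 <- fit_stump(x, y)          # Step 1: fit a shallow tree to the data
M1 <- T1(x)                    # M_1 = T_1

r1 <- y - M1                   # residuals of M_1
T2 <- fit_stump(x, r1)         # Step 2: fit a tree to the residuals
t2 <- T2(x)

# gamma minimizing sum((y - (M1 + gamma * t2))^2), i.e. least squares on r1
gamma2 <- sum(r1 * t2) / sum(t2^2)
M2 <- M1 + gamma2 * t2

rmse <- function(pred) sqrt(mean((y - pred)^2))
c(M1 = rmse(M1), M2 = rmse(M2))
```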

How Gradient Boosting Works

Regularization: learning rate $\eta \in(0,1)$

$$ M_2 = M_1 + \eta \gamma T_2 $$

- Larger $\eta$: faster learning (each tree makes a bigger correction)

- Smaller $\eta$: less risk of overfitting, but more trees are needed
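A short worked derivation shows why a larger $\eta$ learns faster. It assumes squared-error loss and that $\gamma$ is the least-squares value, so that $\langle r, T_2 \rangle = \gamma \lVert T_2 \rVert^2$, where $r = y - M_1$ is the vector of residuals and $T_2$ is evaluated on the training rows (the notation $r$ and $\langle \cdot,\cdot \rangle$ is introduced here for illustration):

$$
\begin{aligned}
\lVert y - M_2 \rVert^2 &= \lVert r - \eta \gamma T_2 \rVert^2 \\
&= \lVert r \rVert^2 - 2\eta\gamma \langle r, T_2 \rangle + \eta^2 \gamma^2 \lVert T_2 \rVert^2 \\
&= \lVert r \rVert^2 - \eta(2 - \eta)\,\gamma^2 \lVert T_2 \rVert^2
\end{aligned}
$$

For $\eta \in (0,1)$ the factor $\eta(2-\eta)$ is positive and increases with $\eta$, so for the same $T_2$ each tree lowers the training error, and a larger $\eta$ lowers it more. A smaller $\eta$ takes smaller steps, which is why it needs more trees.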

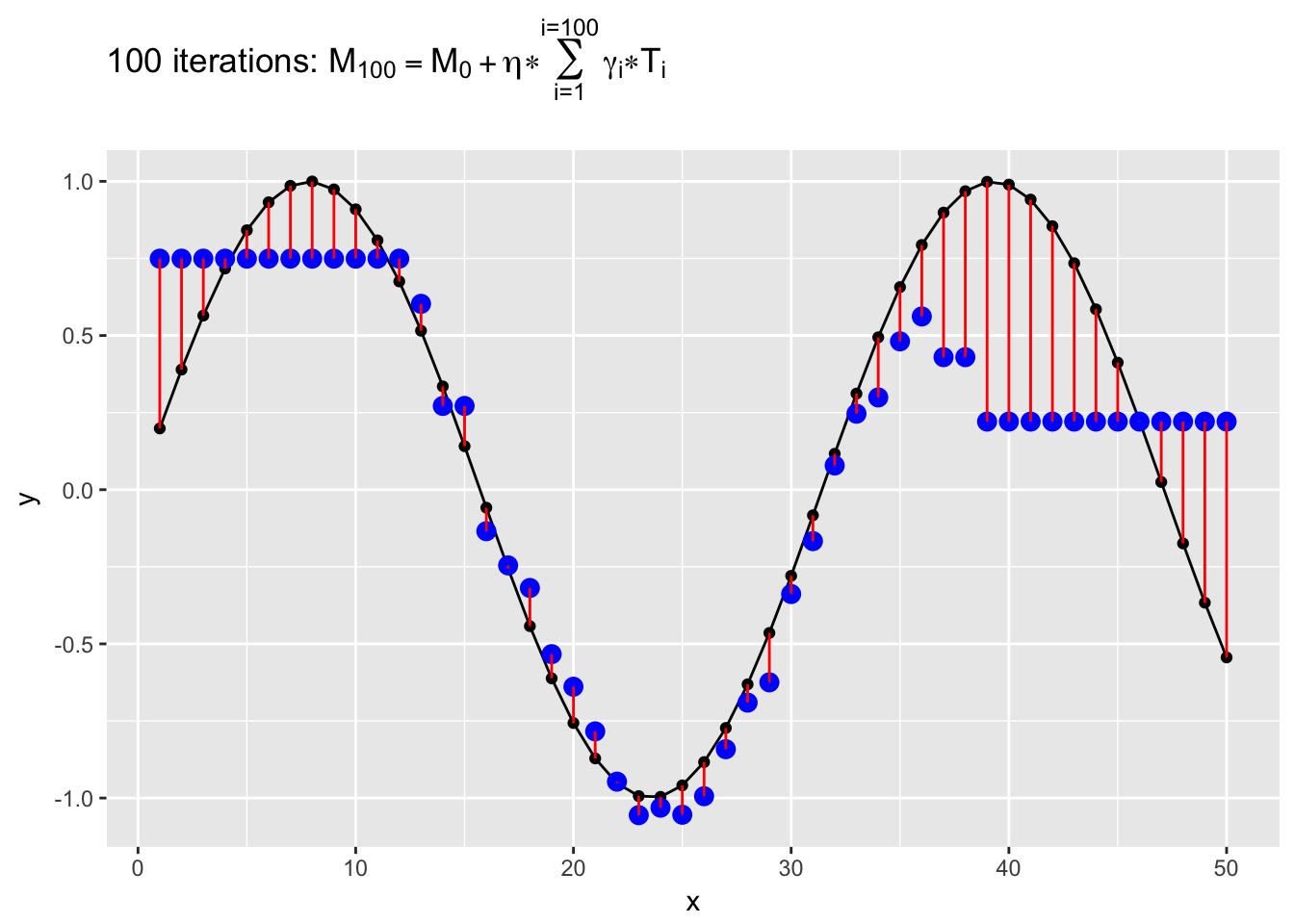

How Gradient Boosting Works

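1. Fit a shallow tree $T_1$ to the data: $M_1 = T_1$

2. Fit a tree $T_2$ to the residuals of $M_1$: $M_2 = M_1 + \eta \gamma_2 T_2$

3. Repeat step 2 (fit $T_i$ to the residuals of $M_{i-1}$, then $M_i = M_{i-1} + \eta \gamma_i T_i$) until a stopping condition is met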

Final Model:

$$ M = M_1 + \eta \sum_{i=2}^{N} \gamma_i T_i $$

where $N$ is the total number of trees.
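The full loop can be sketched in base R under the same assumptions as a teaching example: squared-error loss, one numeric predictor, and depth-1 trees. `fit_stump()`, `boost()`, `predict_boost()`, and the simulated data are hypothetical helpers, not part of xgboost. The prediction function evaluates the final-model formula term by term.

```r
# Sketch of the full boosting loop, assuming squared-error loss,
# one numeric predictor, and depth-1 trees. fit_stump(), boost() and
# predict_boost() are hypothetical helpers written for illustration.

fit_stump <- function(x, y) {
  xs <- sort(unique(x))
  splits <- (head(xs, -1) + tail(xs, -1)) / 2   # midpoints between x values
  best <- list(sse = Inf)
  for (s in splits) {
    left <- x <= s
    mu_l <- mean(y[left])
    mu_r <- mean(y[!left])
    sse <- sum((y[left] - mu_l)^2) + sum((y[!left] - mu_r)^2)
    if (sse < best$sse) best <- list(sse = sse, split = s, left = mu_l, right = mu_r)
  }
  function(newx) ifelse(newx <= best$split, best$left, best$right)
}

boost <- function(x, y, n_trees = 100, eta = 0.3) {
  trees <- list(fit_stump(x, y))           # M_1 = T_1
  gammas <- NA_real_                       # no gamma for T_1
  pred <- trees[[1]](x)
  train_rmse <- sqrt(mean((y - pred)^2))
  for (i in seq_len(n_trees)[-1]) {
    r <- y - pred                          # residuals of M_{i-1}
    trees[[i]] <- fit_stump(x, r)          # fit T_i to the residuals
    ti <- trees[[i]](x)
    gammas[i] <- sum(r * ti) / sum(ti^2)   # least-squares gamma_i
    pred <- pred + eta * gammas[i] * ti    # M_i = M_{i-1} + eta * gamma_i * T_i
    train_rmse[i] <- sqrt(mean((y - pred)^2))
  }
  list(trees = trees, gammas = gammas, eta = eta, train_rmse = train_rmse)
}

# M(x) = M_1(x) + eta * sum_{i=2}^{N} gamma_i * T_i(x)
predict_boost <- function(model, newx) {
  pred <- model$trees[[1]](newx)
  for (i in seq_along(model$trees)[-1]) {
    pred <- pred + model$eta * model$gammas[i] * model$trees[[i]](newx)
  }
  pred
}

set.seed(1)
x <- runif(200, 0, 10)
y <- sin(x) + rnorm(200, sd = 0.3)        # simulated data for illustration
fit <- boost(x, y, n_trees = 100, eta = 0.3)
round(fit$train_rmse[c(1, 10, 100)], 3)   # training RMSE after 1, 10, 100 trees
```

Here the stopping condition is simply a fixed number of trees; in practice the number of trees is chosen by validation, as the next slide discusses.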

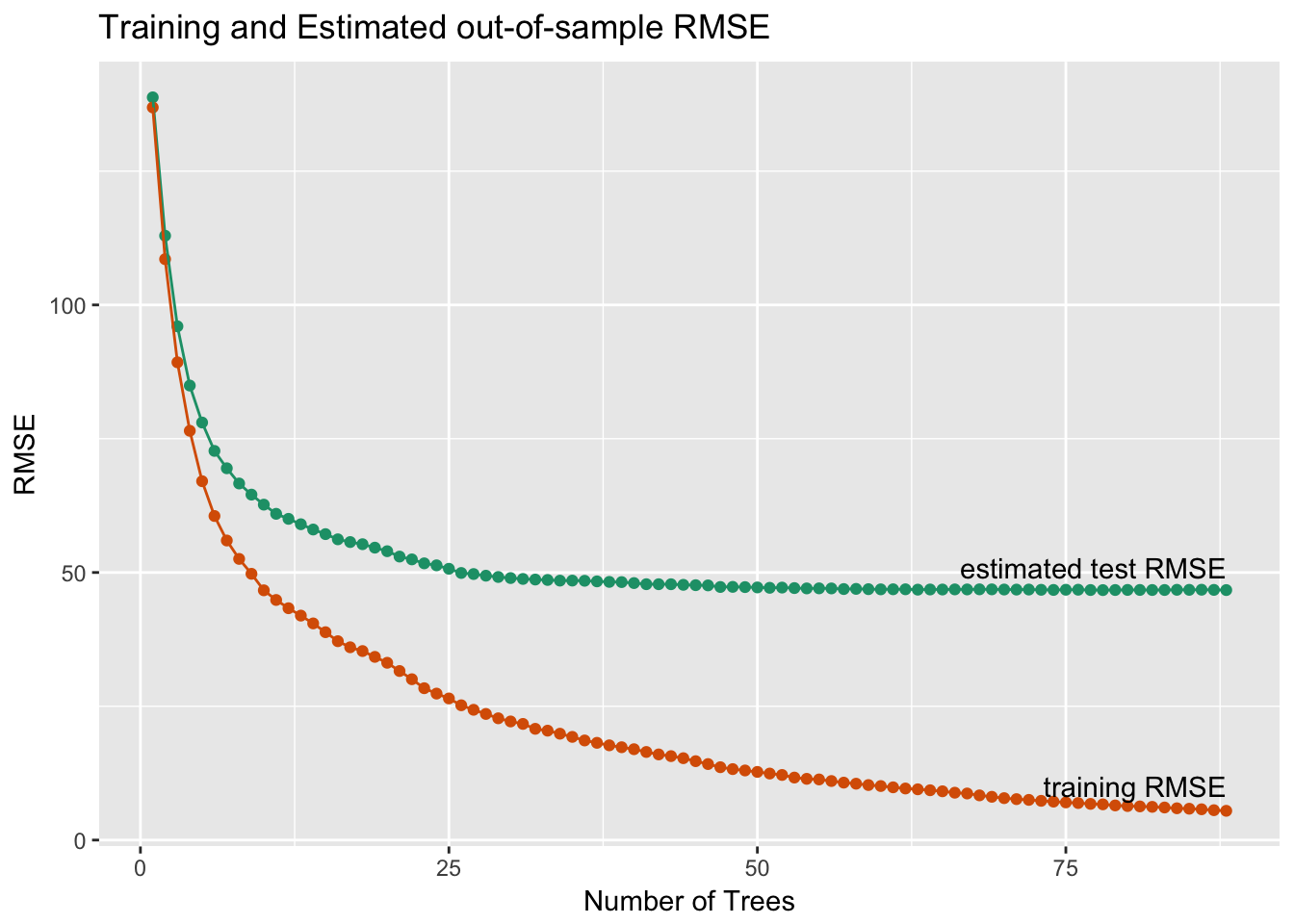

Cross-validation to Guard Against Overfit

As trees are added, training error keeps decreasing, but test error eventually stops improving.
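A base R sketch of this effect, tracking training and holdout RMSE round by round and picking the round with the lowest holdout RMSE. It uses a single train/holdout split, a simplified stand-in for the k-fold cross-validation that `xgb.cv()` performs, and assumes squared-error loss, one numeric predictor, and depth-1 trees. `fit_stump()` and the simulated data are hypothetical. In a typical run the training RMSE falls at every round while the holdout RMSE flattens out or starts to rise.

```r
# Sketch: training vs. holdout RMSE as trees are added, assuming
# squared-error loss, one numeric predictor, depth-1 trees, and ONE
# train/holdout split (simpler than the k-fold CV done by xgb.cv()).
# fit_stump() and the simulated data are hypothetical.

fit_stump <- function(x, y) {
  xs <- sort(unique(x))
  splits <- (head(xs, -1) + tail(xs, -1)) / 2   # midpoints between x values
  best <- list(sse = Inf)
  for (s in splits) {
    left <- x <= s
    mu_l <- mean(y[left])
    mu_r <- mean(y[!left])
    sse <- sum((y[left] - mu_l)^2) + sum((y[!left] - mu_r)^2)
    if (sse < best$sse) best <- list(sse = sse, split = s, left = mu_l, right = mu_r)
  }
  function(newx) ifelse(newx <= best$split, best$left, best$right)
}

set.seed(2)
n <- 400
x <- runif(n, 0, 10)
y <- sin(x) + rnorm(n, sd = 0.5)
is_train <- seq_len(n) %in% sample(n, 300)   # 300 training rows, 100 holdout
xtr <- x[is_train];  ytr <- y[is_train]
xte <- x[!is_train]; yte <- y[!is_train]

eta <- 0.3
n_rounds <- 200
train_rmse <- test_rmse <- numeric(n_rounds)

T1 <- fit_stump(xtr, ytr)                    # M_1 = T_1, fit on training rows
pred_tr <- T1(xtr)
pred_te <- T1(xte)
train_rmse[1] <- sqrt(mean((ytr - pred_tr)^2))
test_rmse[1]  <- sqrt(mean((yte - pred_te)^2))

for (i in 2:n_rounds) {
  r <- ytr - pred_tr                         # residuals on training rows only
  Ti <- fit_stump(xtr, r)
  ti <- Ti(xtr)
  g <- sum(r * ti) / sum(ti^2)               # least-squares gamma_i
  pred_tr <- pred_tr + eta * g * ti
  pred_te <- pred_te + eta * g * Ti(xte)     # same update, applied to holdout
  train_rmse[i] <- sqrt(mean((ytr - pred_tr)^2))
  test_rmse[i]  <- sqrt(mean((yte - pred_te)^2))
}

# Analogous to which.min(elog$test_rmse_mean) on an xgb.cv() log
n_best <- which.min(test_rmse)
n_best
```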

Best Practice (with xgboost())

- Run `xgb.cv()` with a large number of rounds (trees).

- `xgb.cv()$evaluation_log` records the estimated RMSE for each round.

- Find the number of trees that minimizes the estimated RMSE: $n_{best}$

- Run `xgboost()`, setting `nrounds` = $n_{best}$

Example: Bike Rental Model

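First, prepare the data with vtreat:

```r
library(vtreat)    # designTreatmentsZ(), prepare()
library(dplyr)     # filter(), %>%
library(magrittr)  # use_series()

# Design a treatment plan for the input variables (no outcome used)
treatplan <- designTreatmentsZ(bikesJan, vars)

# Keep the cleaned numeric ("clean") and level-indicator ("lev") variables
newvars <- treatplan$scoreFrame %>%
  filter(code %in% c("clean", "lev")) %>%
  use_series(varName)

# Apply the plan to the January data
bikesJan.treat <- prepare(treatplan, bikesJan, varRestriction = newvars)
```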

For xgboost():

- Input data: `as.matrix(bikesJan.treat)`

- Outcome: `bikesJan$cnt`
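Why treat the data before calling `as.matrix()`: xgboost() needs numeric input, but `as.matrix()` on a data frame with any non-numeric column returns a character matrix. A small base R check, using toy data with hypothetical column names; the numeric-only frame mimics the kind of "clean" and 0/1 "lev" indicator columns kept from the treatment plan.

```r
# Toy data with one numeric and one categorical column (hypothetical)
raw <- data.frame(hr = c(0, 1, 2),
                  weather = c("clear", "mist", "clear"))
m_raw <- as.matrix(raw)
typeof(m_raw)        # one text column turns the whole matrix into text

# Numeric-only frame: a cleaned column plus 0/1 level indicators
treated <- data.frame(hr = c(0, 1, 2),
                      weather_clear = c(1, 0, 1),
                      weather_mist  = c(0, 1, 0))
m_treated <- as.matrix(treated)
typeof(m_treated)    # numeric (double) matrix
```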

Training a model with xgboost() / xgb.cv()

```r
library(xgboost)

# 5-fold cross-validation, fitting up to 100 rounds (trees)
cv <- xgb.cv(data = as.matrix(bikesJan.treat),
             label = bikesJan$cnt,
             objective = "reg:squarederror",
             nrounds = 100, nfold = 5, eta = 0.3, max_depth = 6)
```

Key inputs to xgb.cv() and xgboost()

- `data`: input data as a matrix
- `label`: outcome
- `objective`: for regression, `"reg:squarederror"`
- `nrounds`: maximum number of trees to fit
- `eta`: learning rate
- `max_depth`: maximum depth of individual trees
- `nfold` (`xgb.cv()` only): number of folds for cross-validation

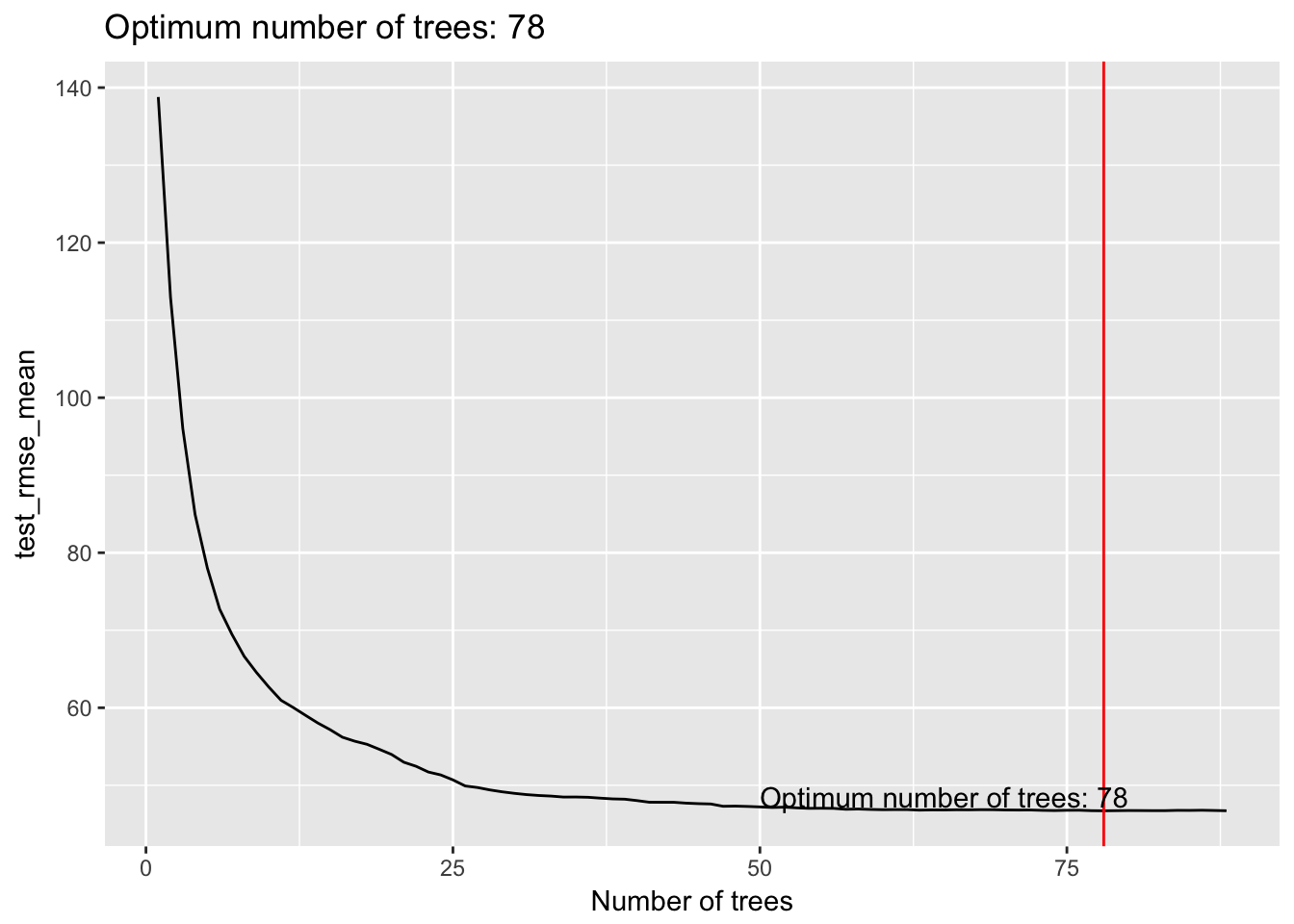

Find the Right Number of Trees

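```r
# Evaluation log: one row per round, with train and test RMSE estimates
elog <- as.data.frame(cv$evaluation_log)

# Round with the lowest cross-validated RMSE
# (the exact value depends on the random fold assignment)
(nrounds <- which.min(elog$test_rmse_mean))
## [1] 78
```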

Run xgboost() for the final model

```r
nrounds <- 78   # number of rounds found with xgb.cv()

model <- xgboost(data = as.matrix(bikesJan.treat),
                 label = bikesJan$cnt,
                 nrounds = nrounds,
                 objective = "reg:squarederror",
                 eta = 0.3,
                 max_depth = 6)
```

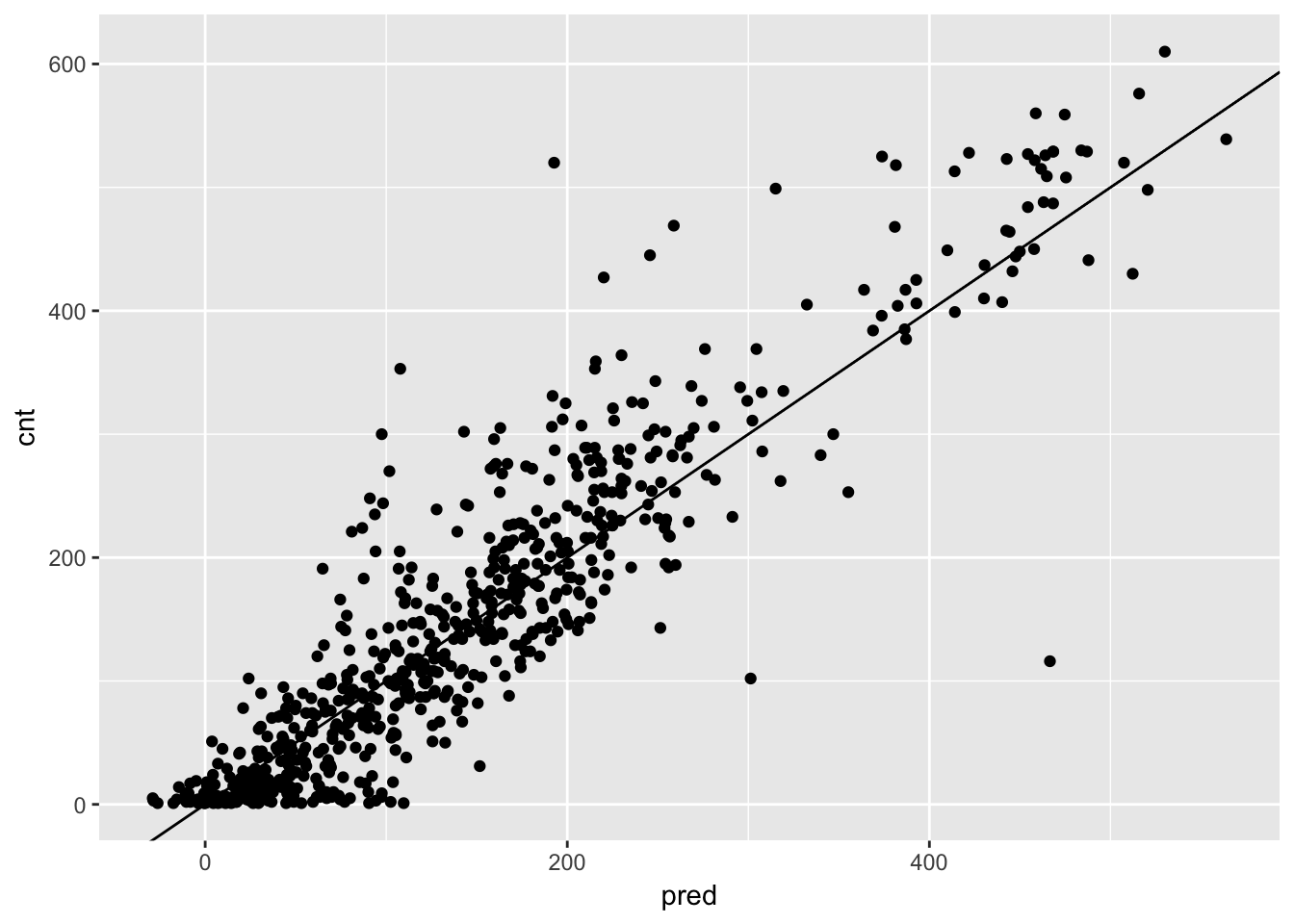

Predict with an xgboost() model

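Prepare the February data and predict:

```r
# Apply the treatment plan built on January data to the February data
bikesFeb.treat <- prepare(treatplan, bikesFeb, varRestriction = newvars)

# Predict hourly rentals with the final model
bikesFeb$pred <- predict(model, as.matrix(bikesFeb.treat))
```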
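Models are compared on the February data by RMSE: the square root of the mean squared difference between predicted and actual counts. A minimal base R helper (the name `rmse()` is hypothetical), checked on toy numbers rather than bike data:

```r
# Root mean squared error; rmse() is a hypothetical helper name
rmse <- function(predicted, actual) {
  sqrt(mean((predicted - actual)^2))
}

# Toy numbers (not bike data): errors -2, 2, -4 give a mean square of 8
rmse(c(10, 20, 30), c(12, 18, 34))   # sqrt(8), about 2.828

# With the objects above, the February score would be:
# rmse(bikesFeb$pred, bikesFeb$cnt)
```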

Model performance on February data

| Model | RMSE |
|---|---|
| Quasipoisson | 69.3 |
| Random forests | 67.15 |
| Gradient Boosting | 54.0 |

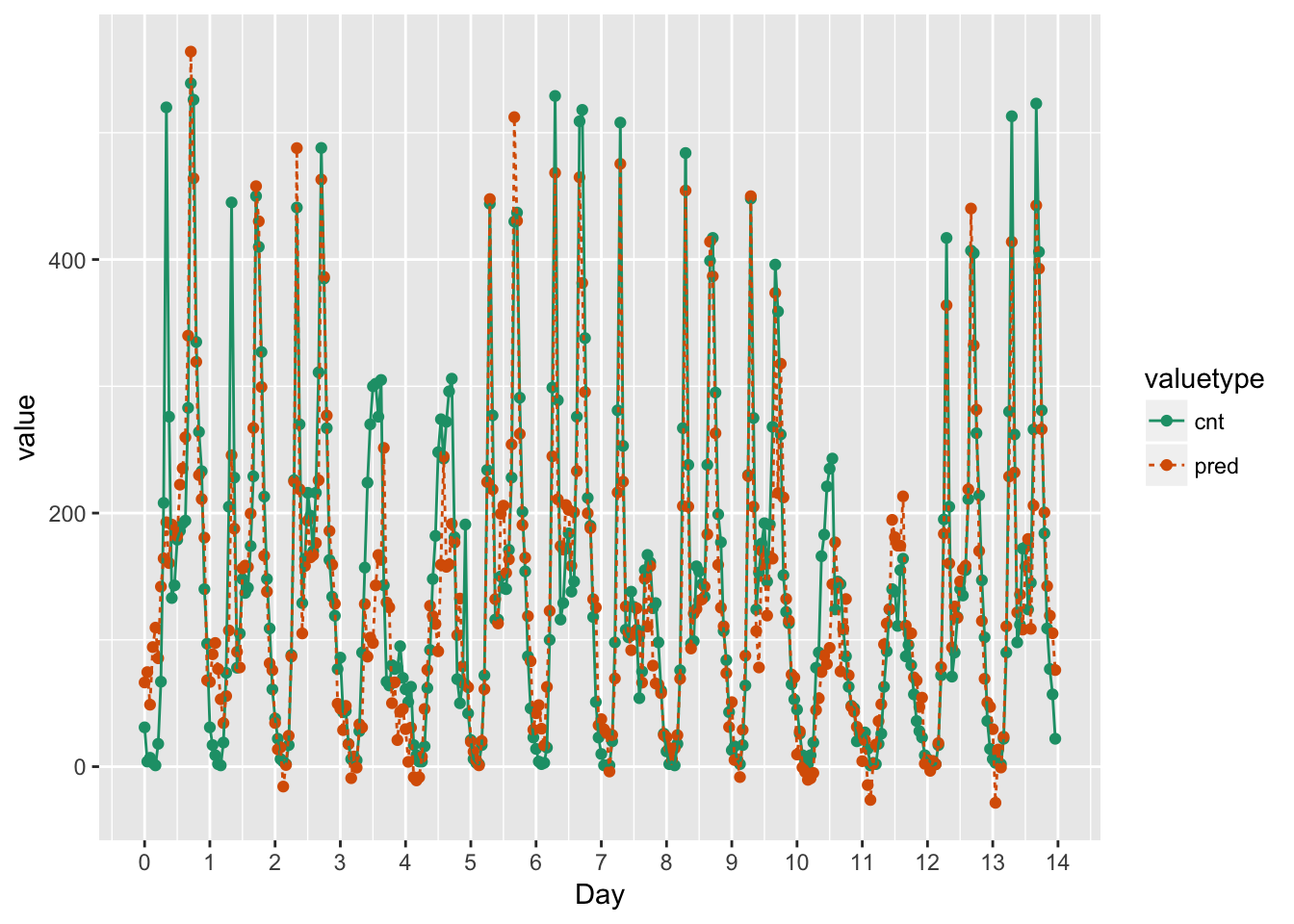

Visualize the Results

Predictions vs. Actual Bike Rentals, February

Predictions and Hourly Bike Rentals, February

Let's practice!
