GAM to learn non-linear transformations

Supervised Learning in R: Regression

Nina Zumel and John Mount

Win-Vector, LLC

Generalized Additive Models (GAMs)

$$ y \sim b_0 + s_1(x_1) + s_2(x_2) + \cdots $$
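Here is a minimal sketch of the additive form. It uses simulated data, not course data, and the names `dat`, `x1`, `x2`, `term_fits` and `fitted_by_hand` are illustrative. The code fits one smooth per predictor with mgcv, then checks that the intercept $b_0$ plus the per-term contributions reproduces the model's fitted values.

```r
library(mgcv)

# Hypothetical simulated data: two predictors, each with its own non-linear effect
set.seed(10)
n <- 300
dat <- data.frame(x1 = runif(n, 0, 10), x2 = runif(n, -2, 2))
dat$y <- 3 + sin(dat$x1) + dat$x2^2 + rnorm(n, sd = 0.3)

# y ~ b0 + s1(x1) + s2(x2): one smooth function per predictor
model <- gam(y ~ s(x1) + s(x2), data = dat)

# Each column holds one fitted s_j(x_j); the intercept b0 is kept separately
term_fits <- predict(model, type = "terms")
b0 <- coef(model)[["(Intercept)"]]

# Additivity: intercept plus the sum of the smooth terms reproduces the fit
fitted_by_hand <- b0 + rowSums(term_fits)
all.equal(unname(fitted_by_hand), as.numeric(predict(model)))
```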

Learning Non-linear Relationships

gam() in the mgcv package

gam(formula, family, data)

family:

- gaussian (default): "regular" regression

- binomial: probabilities

- poisson/quasipoisson: counts
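The sketch below shows how the family argument changes what gam() models. It assumes simulated data, and the names `dat`, `y_num`, `y_bin` and `y_cnt` are hypothetical. With type = "response", binomial predictions come back as probabilities and poisson predictions as expected counts. quasipoisson is omitted here for brevity.

```r
library(mgcv)

# Hypothetical simulated data (not the course data): one continuous predictor x
set.seed(42)
n <- 200
x <- runif(n, 0, 10)
dat <- data.frame(
  x     = x,
  y_num = sin(x) + rnorm(n, sd = 0.3),     # continuous outcome
  y_bin = rbinom(n, 1, plogis(sin(x))),    # 0/1 outcome
  y_cnt = rpois(n, exp(1 + 0.5 * sin(x)))  # count outcome
)

# gaussian (the default): "regular" regression on a continuous outcome
m_gauss <- gam(y_num ~ s(x), family = gaussian, data = dat)

# binomial: models the probability that y_bin equals 1
m_binom <- gam(y_bin ~ s(x), family = binomial, data = dat)

# poisson: models expected counts
m_pois <- gam(y_cnt ~ s(x), family = poisson, data = dat)

# type = "response" returns predictions on the outcome scale
p_binom <- predict(m_binom, type = "response")  # probabilities in (0, 1)
p_pois  <- predict(m_pois, type = "response")   # positive expected counts
```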

Best for larger datasets: with little data, the flexible smooth terms can overfit

The s() function

anx ~ s(hassles)

- s() designates that a variable should be non-linear

- Use s() with continuous variables

  - More than about 10 unique values
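One way to apply the rule above is sketched below on simulated data. The `group` variable and its shifts are hypothetical. The code counts distinct values, gives the continuous predictor a smooth term, and lets the categorical one enter as an ordinary term. As far as we know, mgcv's default basis for a one-dimensional s() term has dimension k = 10, and gam() refuses a smooth whose variable has fewer unique values than that. This is consistent with the "more than about 10" guideline.

```r
library(mgcv)

# Hypothetical data: one continuous predictor and one 3-level categorical predictor
set.seed(1)
n <- 150
dat <- data.frame(
  x     = runif(n, 0, 10),
  group = factor(sample(c("a", "b", "c"), n, replace = TRUE))
)
group_shift <- c(a = 0, b = 1, c = 2)  # hypothetical per-group offsets
dat$y <- sin(dat$x) + unname(group_shift[as.character(dat$group)]) + rnorm(n, sd = 0.3)

# Count distinct values: x has about 150, group has only 3
n_unique <- sapply(dat[c("x", "group")], function(v) length(unique(v)))
n_unique

# Continuous x gets a smooth term; categorical group enters as an ordinary term
model <- gam(y ~ s(x) + group, data = dat)
summary(model)$s.table  # one smooth term: s(x)
summary(model)$p.table  # parametric terms: intercept, groupb, groupc
```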

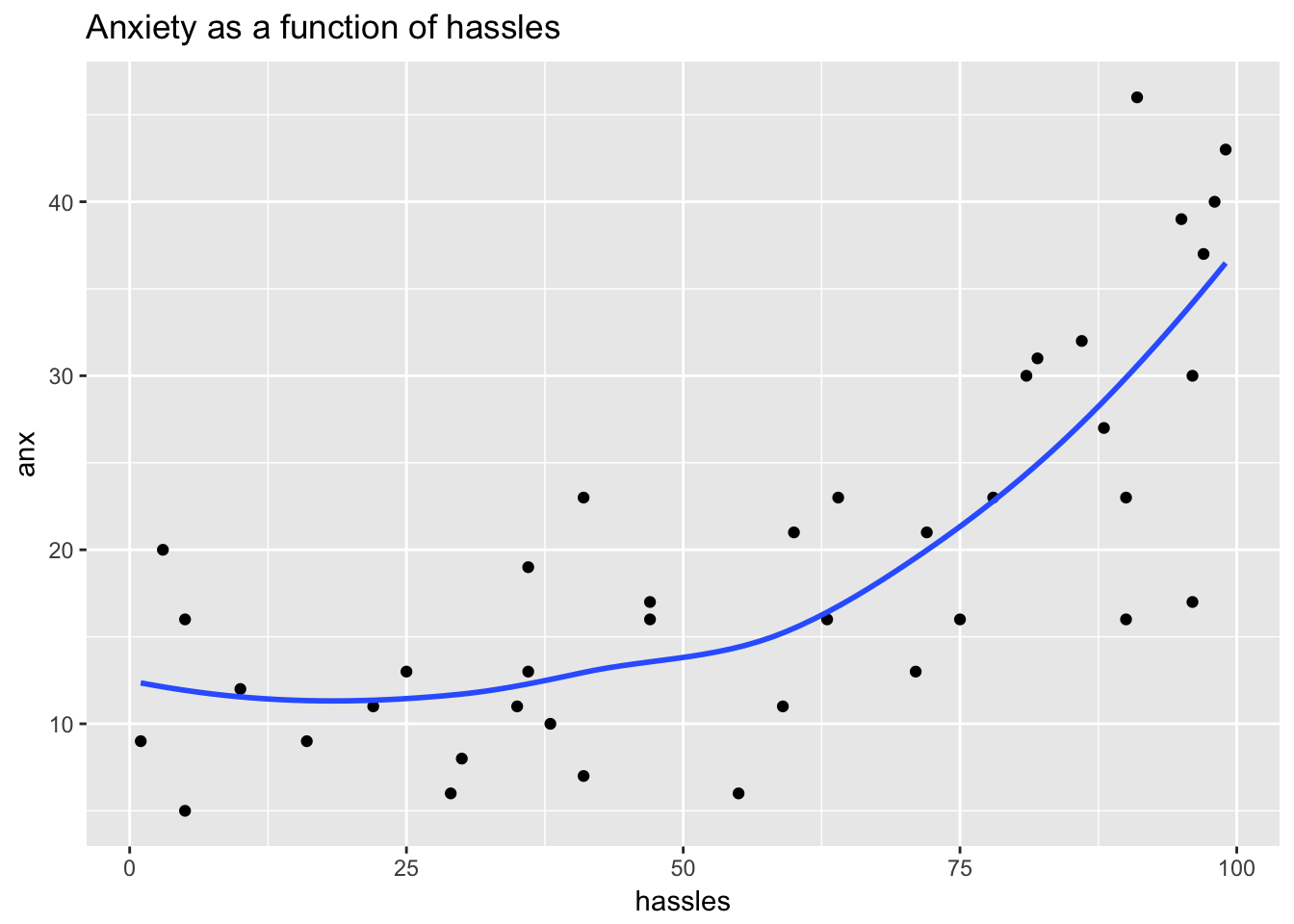

Revisit the hassles data


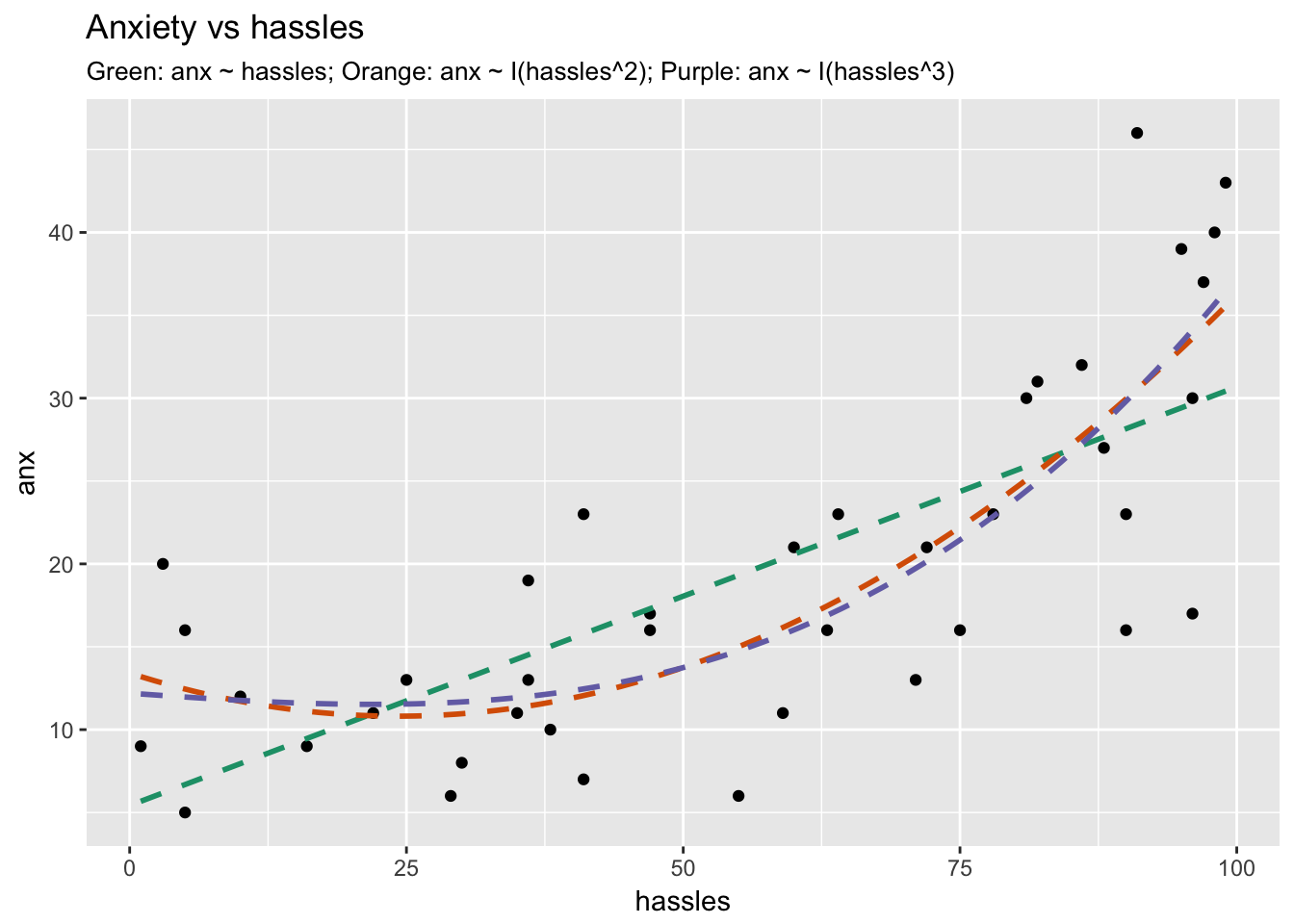

| Model | RMSE (cross-val) | $R^2$ (training) |
|---|---|---|
| Linear ($hassles$) | 7.69 | 0.53 |
| Quadratic ($hassles^2$) | 6.89 | 0.63 |
| Cubic ($hassles^3$) | 6.70 | 0.65 |

GAM of the hassles data

```r
library(mgcv)

model <- gam(
  anx ~ s(hassles),
  data = hassleframe,
  family = gaussian
)

summary(model)
```

```
...
R-sq.(adj) = 0.619 Deviance explained = 64.1%
GCV = 49.132 Scale est. = 45.153 n = 40
```
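The numbers in the printed summary can also be read programmatically. Below is a minimal sketch using components of mgcv's summary.gam object. It runs on simulated data of the same size (n = 40). These are not the hassles data, so the values will differ from the slide, and `dat` and `model_summary` are illustrative names.

```r
library(mgcv)

# Hypothetical simulated data; n = 40 only mirrors the size reported above
set.seed(7)
n <- 40
dat <- data.frame(x = runif(n, 0, 20))
dat$y <- 0.05 * dat$x^3 - dat$x^2 + rnorm(n, sd = 5)

model <- gam(y ~ s(x), data = dat, family = gaussian)
model_summary <- summary(model)

model_summary$r.sq      # adjusted R-squared, printed as "R-sq.(adj)"
model_summary$dev.expl  # proportion of deviance explained (printed as a percentage)
model_summary$scale     # estimated scale parameter, printed as "Scale est."
model_summary$edf       # effective degrees of freedom of s(x); near 1 means close to linear

# Deviance explained is 1 - (residual deviance / null deviance)
1 - model$deviance / model$null.deviance
```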

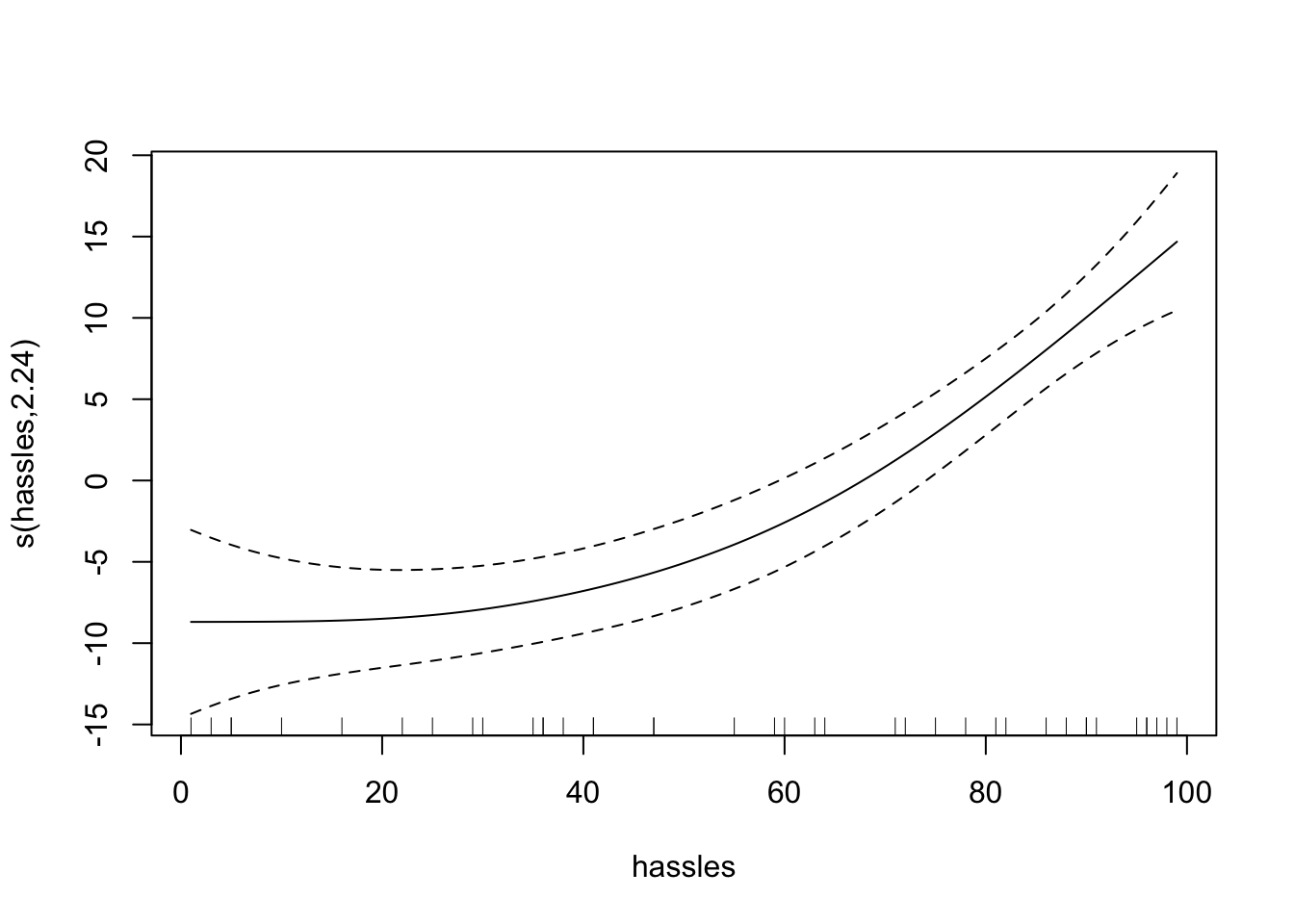

Examining the Transformations

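```r
# Plot the learned transformation s(hassles)
plot(model)

# The plotted y values: each smooth term's contribution for every row
predict(model, type = "terms")
```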
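This small sketch, on simulated data, shows what the type = "terms" values look like. There is one column per smooth, and that column is the curve plot(model) draws. mgcv centers each smooth over the training data, so the column averages to roughly zero and the overall level sits in the intercept. The names `dat`, `term_fits` and `transformation` are illustrative.

```r
library(mgcv)

# Hypothetical simulated data with a single continuous predictor
set.seed(5)
n <- 100
dat <- data.frame(x = runif(n, 0, 20))
dat$y <- 0.05 * dat$x^3 - dat$x^2 + rnorm(n, sd = 5)
model <- gam(y ~ s(x), data = dat)

# The curve drawn by plot(model) is the fitted transformation s(x)
term_fits <- predict(model, type = "terms")
sx <- term_fits[, 1]

# Each smooth is centered over the training data, so s(x) averages to about 0
mean(sx)

# Sort by x to tabulate the learned transformation
transformation <- data.frame(x = dat$x, s_x = unname(sx))[order(dat$x), ]
head(transformation)
```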

Predicting with the Model

```r
predict(model, newdata = hassleframe, type = "response")
```
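The slide predicts on the training data itself. The sketch below instead scores a held-out set and computes RMSE from the predictions. It assumes a hypothetical simulator `make_data()` in place of real data. For family = gaussian the link is the identity, so type = "response" and the default type = "link" agree.

```r
library(mgcv)

# Hypothetical simulator standing in for real training and test data
make_data <- function(n) {
  x <- runif(n, 0, 20)
  data.frame(x = x, y = 0.05 * x^3 - x^2 + rnorm(n, sd = 5))
}

set.seed(3)
train_set <- make_data(200)
test_set  <- make_data(100)

model <- gam(y ~ s(x), data = train_set, family = gaussian)

# Predictions for rows the model did not see during fitting
test_set$pred <- as.numeric(predict(model, newdata = test_set, type = "response"))

# Root mean squared error on the held-out rows
rmse <- sqrt(mean((test_set$pred - test_set$y)^2))
rmse
```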

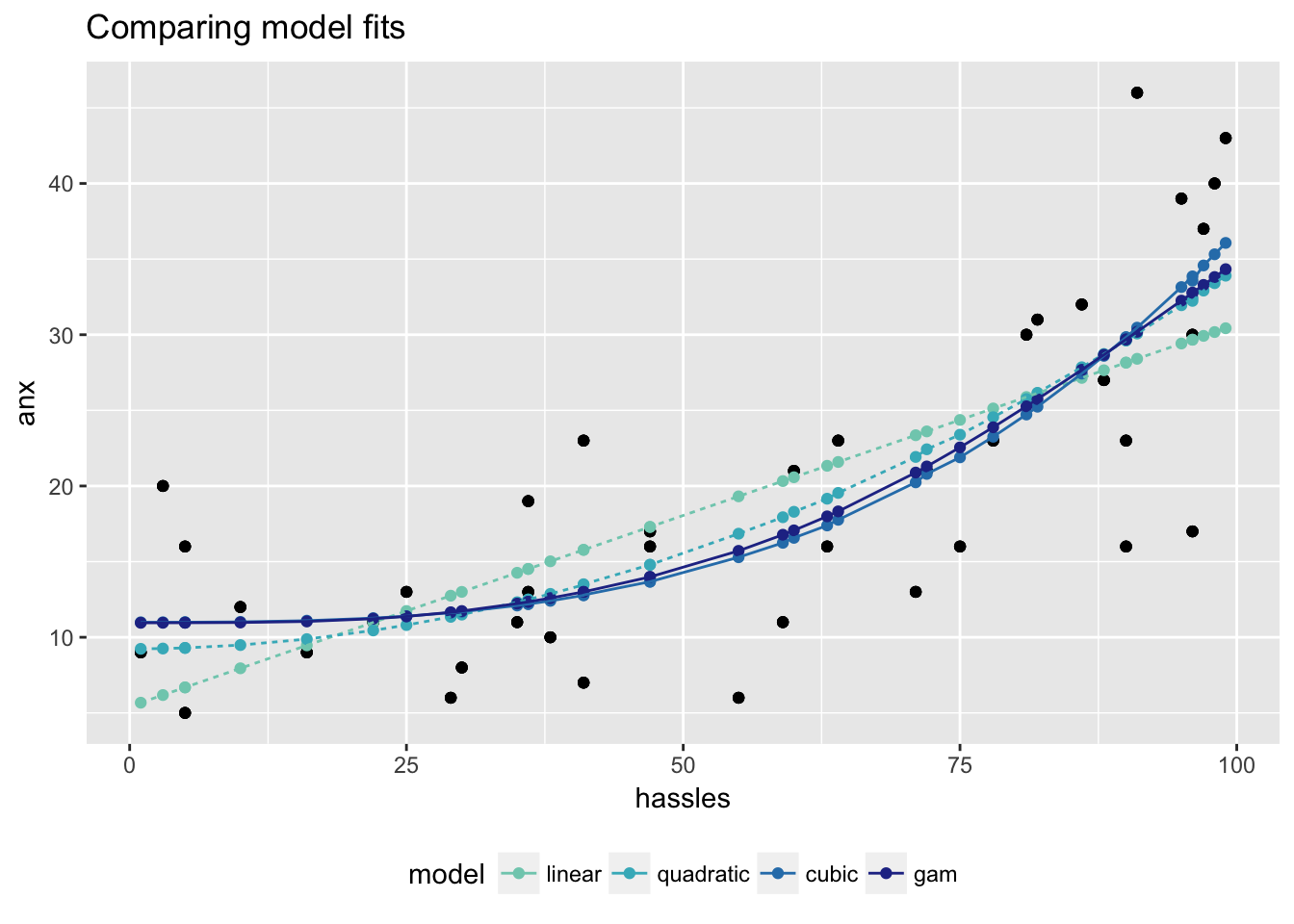

Comparing out-of-sample performance

Knowing the correct transformation is best, but GAM is useful when the transformation isn't known

| Model | RMSE (cross-val) | $R^2$ (training) |
|---|---|---|
| Linear ($hassles$) | 7.69 | 0.53 |
| Quadratic ($hassles^2$) | 6.89 | 0.63 |
| Cubic ($hassles^3$) | 6.70 | 0.65 |
| GAM | 7.06 | 0.64 |

- Small dataset (n = 40) $\rightarrow$ noisier GAM, so here it trails the quadratic and cubic models out of sample
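The cross-validated RMSE column can be approximated with a plain k-fold loop. This sketch uses simulated data with n = 40 and a hypothetical cubic-shaped truth. It also assumes the cubic model uses the single power term `I(x^3)`. It is a hand-rolled stand-in, not necessarily the procedure behind the table. The RMSEs depend on the seed and fold assignment, so rerunning with other seeds is a quick way to see how noisy the comparison is at this sample size.

```r
library(mgcv)

# Hypothetical simulated data: n = 40, cubic-shaped truth plus noise
set.seed(2024)
n <- 40
dat <- data.frame(x = runif(n, 0, 20))
dat$y <- 0.02 * dat$x^3 + rnorm(n, sd = 5)

# Randomly assign each row to one of k folds of equal size
k <- 5
folds <- sample(rep(seq_len(k), length.out = n))

# Out-of-fold predictions for a model-fitting function
cv_predict <- function(fit_fun) {
  pred <- numeric(n)
  for (i in seq_len(k)) {
    held_out <- folds == i
    fit <- fit_fun(dat[!held_out, ])
    pred[held_out] <- predict(fit, newdata = dat[held_out, ])
  }
  pred
}

rmse <- function(pred) sqrt(mean((pred - dat$y)^2))

cv_rmse <- c(
  linear = rmse(cv_predict(function(d) lm(y ~ x, data = d))),
  cubic  = rmse(cv_predict(function(d) lm(y ~ I(x^3), data = d))),
  gam    = rmse(cv_predict(function(d) gam(y ~ s(x), data = d)))
)
cv_rmse
```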

Let's practice!
