Introduction to PySpark


Benjamin Schmidt

Data Engineer

Meet your instructor

- Almost a decade of data experience with PySpark

- Used PySpark for machine learning, ETL tasks, and much more

- Enthusiastic teacher of new tools for all!

What is PySpark?

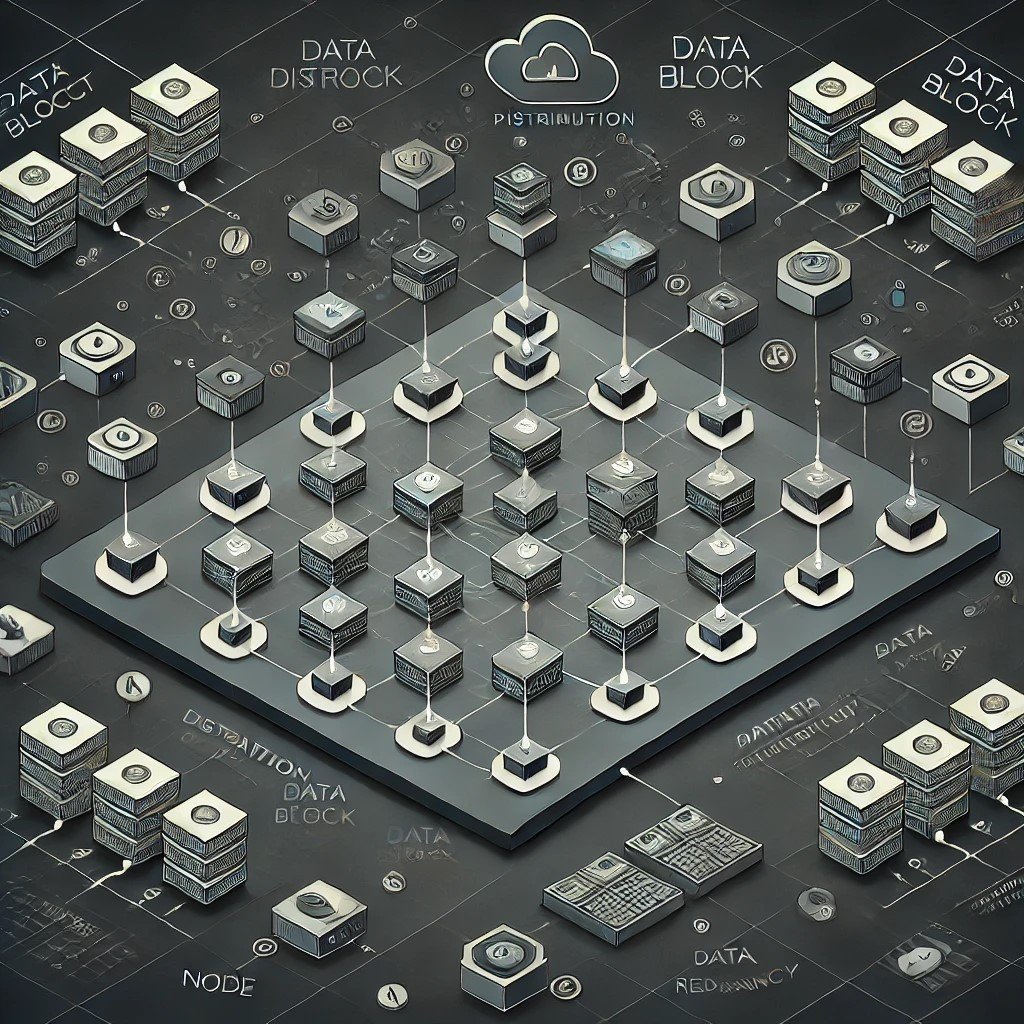

Distributed data processing: Designed to handle large datasets across clusters

Supports various data formats including CSV, Parquet, and JSON

SQL integration allows querying of data using both Python and SQL syntax

Optimized for speed at scale through in-memory computation and automatic query optimization

When would we use PySpark?

Big data analytics

Distributed data processing

Real-time data streaming

Machine learning on large datasets

ETL and ELT pipelines

Working with diverse data sources:

- CSV

- JSON

- Parquet

- Many more

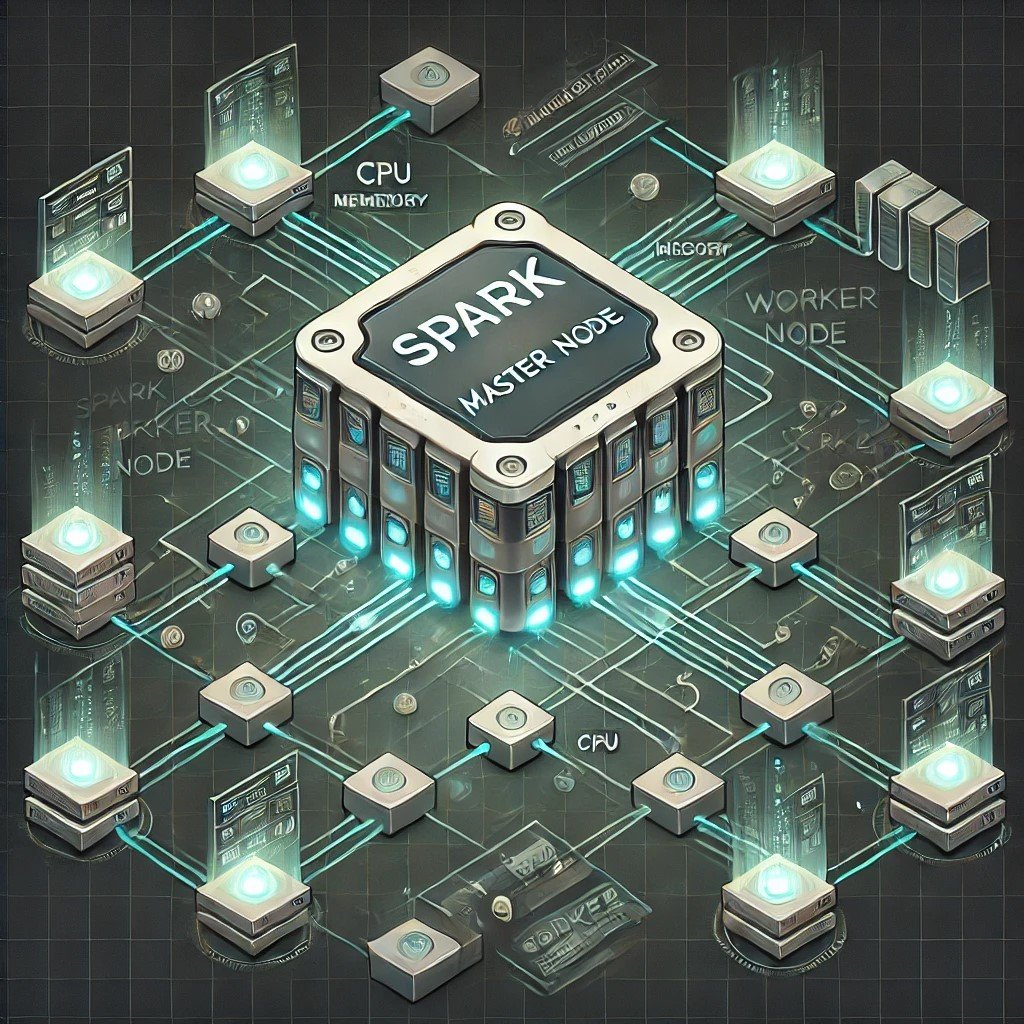

Spark cluster

Master Node

- Manages the cluster, coordinates tasks, and schedules jobs

Worker Nodes

- Execute the tasks assigned by the master node

- Perform the actual computations and store data in memory or on disk

SparkSession

- A SparkSession is the entry point to your Spark cluster and is required for working with PySpark DataFrames and SQL.

# Import SparkSession

from pyspark.sql import SparkSession

# Initialize a SparkSession

spark = SparkSession.builder.appName("MySparkApp").getOrCreate()

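- .builder sets up a session (it is an attribute, so it takes no parentheses)

- .appName() names the application, which is shown in the Spark UI and helps tell multiple applications apart

- .getOrCreate() creates a new session or retrieves the existing one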

PySpark DataFrames

- Similar to other DataFrames, such as pandas DataFrames, but distributed across the cluster

- Optimized for PySpark

# Import and initialize a Spark session

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("MySparkApp").getOrCreate()

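# Create a DataFrame from a CSV file (assumed to have no header row and exactly five columns), then name the columns

census_df = spark.read.csv("census.csv").toDF(
    "gender", "age", "zipcode", "salary_range_usd", "marriage_status"
)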

# Show the DataFrame

census_df.show()

Let's practice!
