Activation functions

Introduction to TensorFlow in Python

Isaiah Hull

Visiting Associate Professor of Finance, BI Norwegian Business School

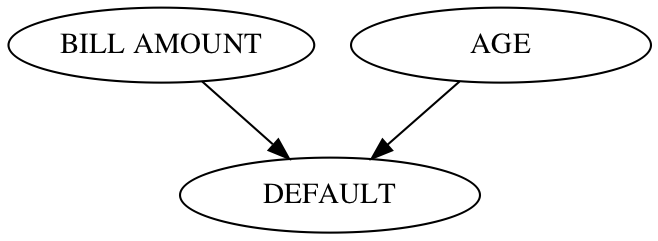

What is an activation function?

- Components of a typical hidden layer
  - Linear: matrix multiplication
  - Nonlinear: activation function
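As a minimal sketch of these two components, the NumPy code below runs one hidden layer by hand. The inputs, weights, and bias are hypothetical values chosen for illustration, and ReLU is assumed as the activation. A Keras Dense layer computes the same kind of result: it applies its activation to the input times its kernel, plus its bias.

```python
import numpy as np

def relu(z):
    # Nonlinear step: elementwise max(0, z)
    return np.maximum(0.0, z)

def hidden_layer(x, weights, bias):
    # Linear step: matrix multiplication plus a bias term
    z = x @ weights + bias
    # Nonlinear step: apply the activation function elementwise
    return relu(z)

# Hypothetical inputs: 2 examples with 3 features each
x = np.array([[1.0, 2.0, 0.5],
              [0.0, 1.0, 3.0]])
# Hypothetical weights mapping 3 features to 2 hidden units
weights = np.array([[ 0.5, -1.0],
                    [ 1.0,  0.0],
                    [-0.5,  2.0]])
bias = np.array([0.1, -0.1])

print(hidden_layer(x, weights, bias))
```

Negative pre-activations are set to zero by the ReLU step, which is exactly where the layer stops being a pure matrix multiplication.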

Why nonlinearities are important

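Without activation functions, stacking layers adds nothing: a sequence of matrix multiplications is itself a single matrix multiplication. A short NumPy check with hypothetical random weights (biases omitted for brevity) illustrates this, and shows that inserting a ReLU between the layers breaks the equivalence.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical weights for two stacked layers with no activation function
w1 = rng.normal(size=(3, 4))
w2 = rng.normal(size=(4, 2))
# Hypothetical inputs: 5 examples with 3 features each
x = rng.normal(size=(5, 3))

# Two linear layers applied in sequence
two_layers = (x @ w1) @ w2
# One linear layer whose weight matrix is the product w1 @ w2
one_layer = x @ (w1 @ w2)
print(np.allclose(two_layers, one_layer))  # True

# A ReLU between the layers makes the result no longer a single matrix product
with_relu = np.maximum(0.0, x @ w1) @ w2
print(np.allclose(with_relu, one_layer))
```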

A simple example

import numpy as np
import tensorflow as tf

# Define example borrower features
young, old = 0.3, 0.6
low_bill, high_bill = 0.1, 0.5

# Apply matrix multiplication step for all feature combinations
young_high = 1.0*young + 2.0*high_bill
young_low = 1.0*young + 2.0*low_bill
old_high = 1.0*old + 2.0*high_bill
old_low = 1.0*old + 2.0*low_bill


# Difference in default predictions for young
print(young_high - young_low)

# Difference in default predictions for old
print(old_high - old_low)

0.8
0.8
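Writing the linear step out for a borrower of any age $a$ shows why both differences are exactly 0.8: the age term cancels, so the effect of the bill cannot depend on age.

$$
\Delta(a) = (1.0\,a + 2.0 \times 0.5) - (1.0\,a + 2.0 \times 0.1) = 2.0\,(0.5 - 0.1) = 0.8
$$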


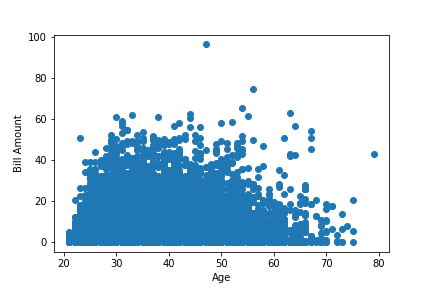

# Difference in default predictions for young
print(tf.keras.activations.sigmoid(young_high).numpy() -
      tf.keras.activations.sigmoid(young_low).numpy())

# Difference in default predictions for old
print(tf.keras.activations.sigmoid(old_high).numpy() -
      tf.keras.activations.sigmoid(old_low).numpy())

0.16337568
0.14204389
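The two differences can be reproduced without TensorFlow by writing the sigmoid out directly, 1 / (1 + exp(-z)). This NumPy sketch reuses the example's feature values; NumPy computes in float64, so the last digits may differ slightly from the float32 results printed above.

```python
import numpy as np

def sigmoid(z):
    # Logistic function: 1 / (1 + exp(-z))
    return 1.0 / (1.0 + np.exp(-z))

young, old = 0.3, 0.6
low_bill, high_bill = 0.1, 0.5

# Same linear step as in the example
young_high = 1.0*young + 2.0*high_bill
young_low = 1.0*young + 2.0*low_bill
old_high = 1.0*old + 2.0*high_bill
old_low = 1.0*old + 2.0*low_bill

# After the nonlinearity, the effect of the bill depends on age
young_diff = sigmoid(young_high) - sigmoid(young_low)
old_diff = sigmoid(old_high) - sigmoid(old_low)
print(young_diff, old_diff)
```

The young borrower's linear scores (0.5 to 1.3) sit closer to zero than the old borrower's (0.8 to 1.6), and the sigmoid is steepest near zero, so the same change in bill size moves the young borrower's prediction more.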

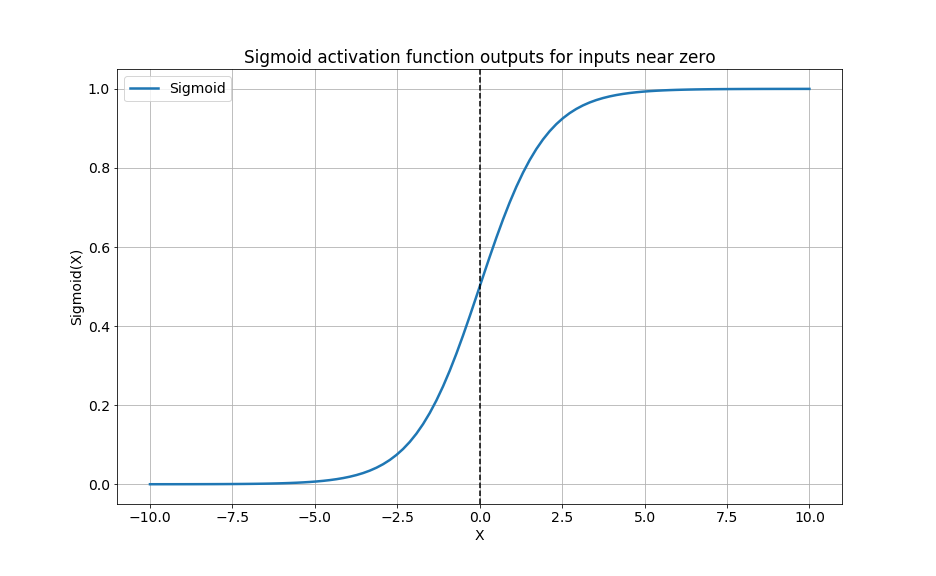

The sigmoid activation function

- Sigmoid activation function
  - Used in the output layer for binary classification
  - Low-level: tf.keras.activations.sigmoid()
  - High-level: 'sigmoid' (e.g. activation='sigmoid' in a Keras layer)
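In binary classification, the sigmoid squeezes any real-valued score into the interval (0, 1), so the output can be read as the probability of the positive class. A minimal NumPy sketch with hypothetical output-layer scores and an assumed decision threshold of 0.5:

```python
import numpy as np

def sigmoid(z):
    # Logistic function: maps any real number into (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical output-layer scores (logits) for four borrowers
logits = np.array([-2.0, -0.1, 0.0, 3.0])
probs = sigmoid(logits)

# Assumed rule: predict class 1 (e.g. default) when the probability exceeds 0.5
preds = (probs > 0.5).astype(int)
print(probs)
print(preds)  # [0 0 0 1]
```

A score of exactly zero maps to a probability of 0.5, so the threshold choice decides how such borderline cases are labeled.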

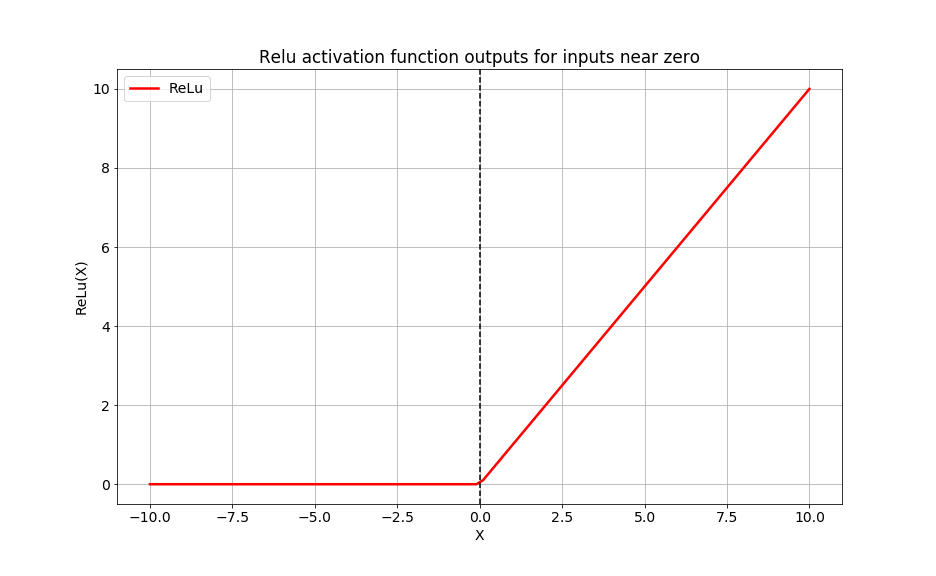

The ReLU activation function

- ReLU activation function
  - Commonly used in hidden layers
  - Low-level: tf.keras.activations.relu()
  - High-level: 'relu' (e.g. activation='relu' in a Keras layer)
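ReLU returns its input when it is positive and zero otherwise, max(0, z). Its derivative is 1 for every positive input, whereas the sigmoid's derivative shrinks toward zero for large positive or negative inputs; this is one commonly cited reason for using ReLU in hidden layers. A NumPy sketch of the function and its derivative, using the common convention that the derivative at exactly zero is 0:

```python
import numpy as np

def relu(z):
    # Keep positive values, set negative values to zero
    return np.maximum(0.0, z)

def relu_grad(z):
    # Derivative: 1 for positive inputs, 0 otherwise (0 chosen at z == 0)
    return (z > 0).astype(float)

z = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])
print(relu(z))       # [0.  0.  0.  0.5 3. ]
print(relu_grad(z))  # [0. 0. 0. 1. 1.]
```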

The softmax activation function

- Softmax activation function
  - Used in the output layer when there are more than two classes
  - Low-level: tf.keras.activations.softmax()
  - High-level: 'softmax' (e.g. activation='softmax' in a Keras layer)
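Softmax exponentiates each class score and divides by the sum of the exponentials, producing one probability per class, with the probabilities summing to 1. A NumPy sketch with hypothetical scores for four classes; subtracting each row's maximum first is a standard trick to avoid overflow and does not change the result:

```python
import numpy as np

def softmax(z, axis=-1):
    # Subtract the row maximum for numerical stability
    shifted = z - np.max(z, axis=axis, keepdims=True)
    exp_z = np.exp(shifted)
    # Normalize so each row sums to 1
    return exp_z / np.sum(exp_z, axis=axis, keepdims=True)

# Hypothetical scores for 2 examples over 4 classes
scores = np.array([[2.0, 1.0, 0.1, -1.0],
                   [0.0, 0.0, 0.0, 0.0]])
probs = softmax(scores)
print(probs)
print(probs.sum(axis=1))  # [1. 1.]
```

Equal scores produce equal probabilities (0.25 each for four classes), and the predicted class is the one with the highest probability.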

Activation functions in neural networks

import numpy as np
import tensorflow as tf

# Hypothetical example data: 5 borrowers with 3 features each
borrower_features = np.random.uniform(size=(5, 3))

# Define input layer
inputs = tf.constant(borrower_features, tf.float32)

# Define dense layer 1
dense1 = tf.keras.layers.Dense(16, activation='relu')(inputs)

# Define dense layer 2
dense2 = tf.keras.layers.Dense(8, activation='sigmoid')(dense1)

# Define output layer
outputs = tf.keras.layers.Dense(4, activation='softmax')(dense2)
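To see what each layer in this network produces, the same 16, 8, 4 architecture can be traced by hand in NumPy. The borrower data and the random weights are hypothetical stand-ins (5 borrowers, 3 features, zero biases), not trained parameters; the point is only the shape and range of each layer's output.

```python
import numpy as np

rng = np.random.default_rng(42)

def relu(z):
    return np.maximum(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    exp_z = np.exp(z - z.max(axis=1, keepdims=True))
    return exp_z / exp_z.sum(axis=1, keepdims=True)

def dense(x, n_units, activation):
    # Hypothetical random weights and zero biases stand in for trained parameters
    weights = rng.normal(scale=0.5, size=(x.shape[1], n_units))
    bias = np.zeros(n_units)
    return activation(x @ weights + bias)

# Hypothetical data: 5 borrowers with 3 features each
borrower_features = rng.uniform(size=(5, 3))

dense1 = dense(borrower_features, 16, relu)  # shape (5, 16), values >= 0
dense2 = dense(dense1, 8, sigmoid)           # shape (5, 8), values in (0, 1)
outputs = dense(dense2, 4, softmax)          # shape (5, 4), each row sums to 1
print(outputs.shape, outputs.sum(axis=1))
```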
