Cross-validation

Supervised Learning with scikit-learn

George Boorman

Core Curriculum Manager, DataCamp

Cross-validation motivation

Model performance depends on the way we split up the data

A single split is therefore not representative of the model's ability to generalize to unseen data

Solution: cross-validation!
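
A quick way to see the problem described above is to refit the same model on several different random train/test splits and compare the test scores. This is a minimal sketch that assumes synthetic data from `make_regression` in place of the course dataset; only the `random_state` of the split changes between iterations.

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic regression data (assumption: stands in for the course's X, y)
X, y = make_regression(n_samples=200, n_features=5, noise=30.0, random_state=0)

scores = []
for seed in range(10):
    # Same data, same model: only the random split differs
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.3, random_state=seed
    )
    reg = LinearRegression().fit(X_train, y_train)
    scores.append(reg.score(X_test, y_test))  # R^2 on the held-out rows

print(f"min R^2: {min(scores):.3f}, max R^2: {max(scores):.3f}")
```

The gap between the minimum and maximum score is purely an artifact of which rows landed in the test set.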

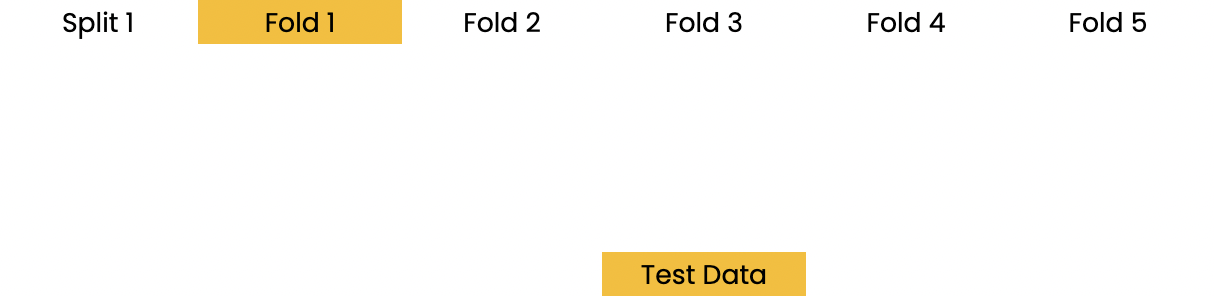

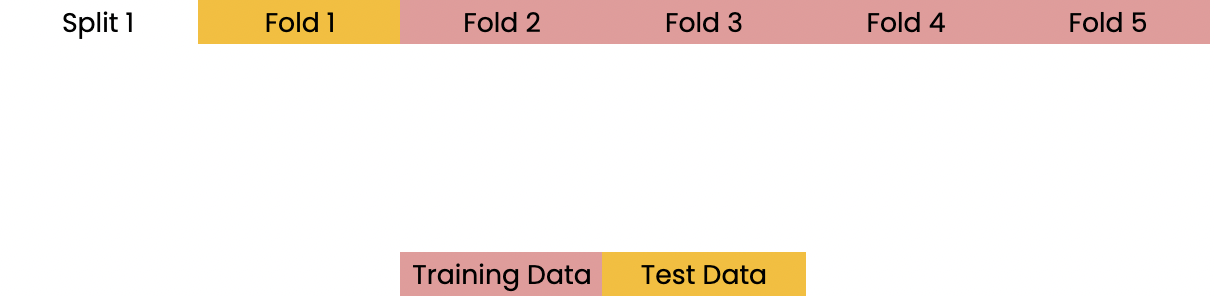

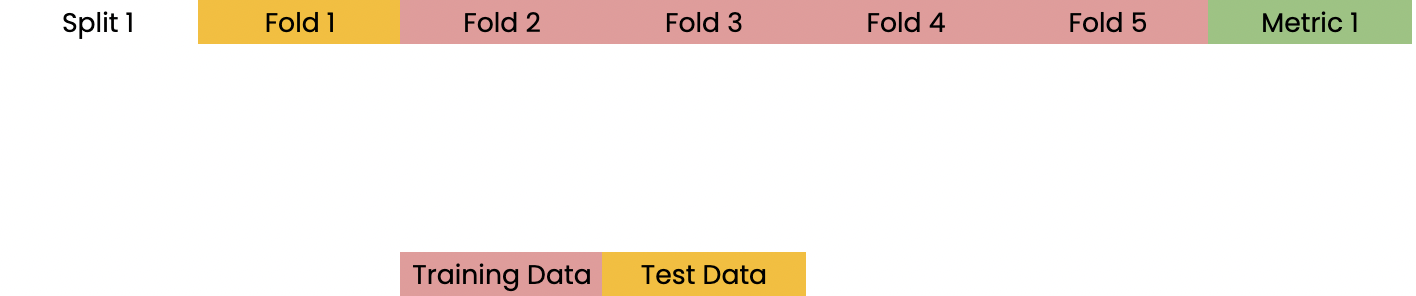

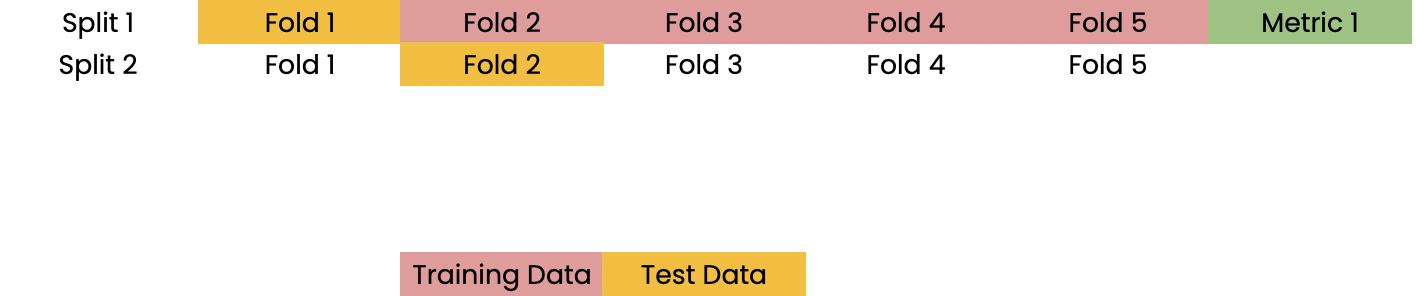

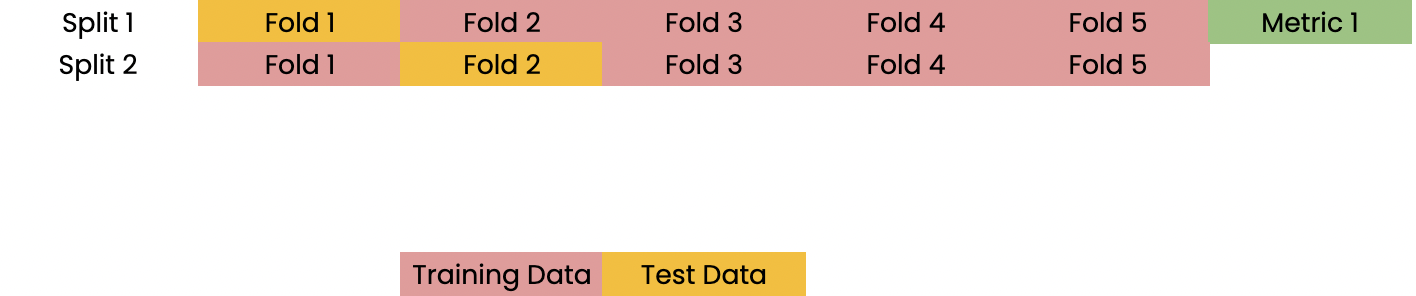

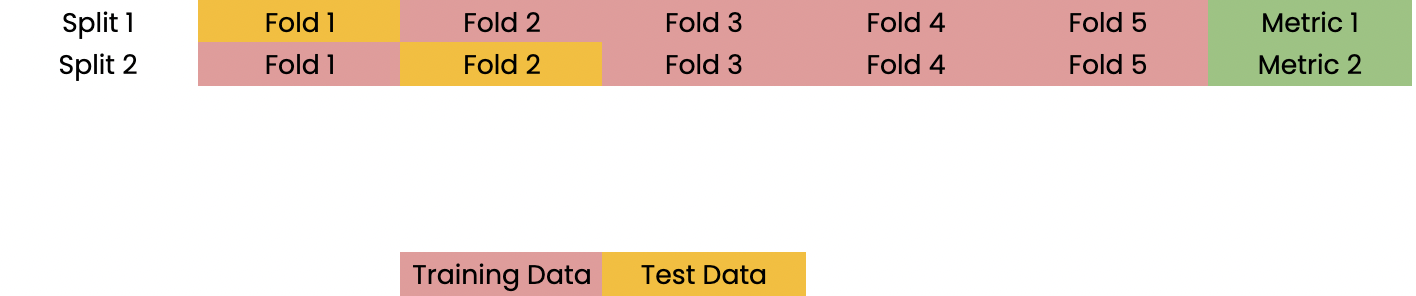

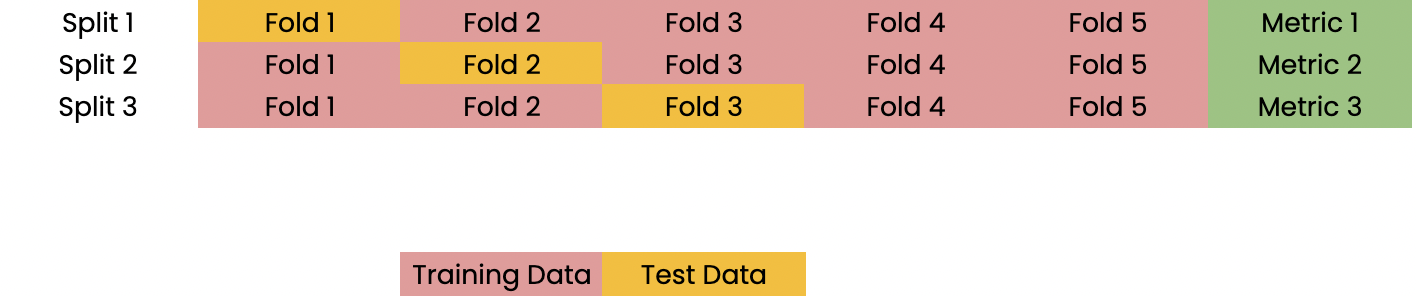

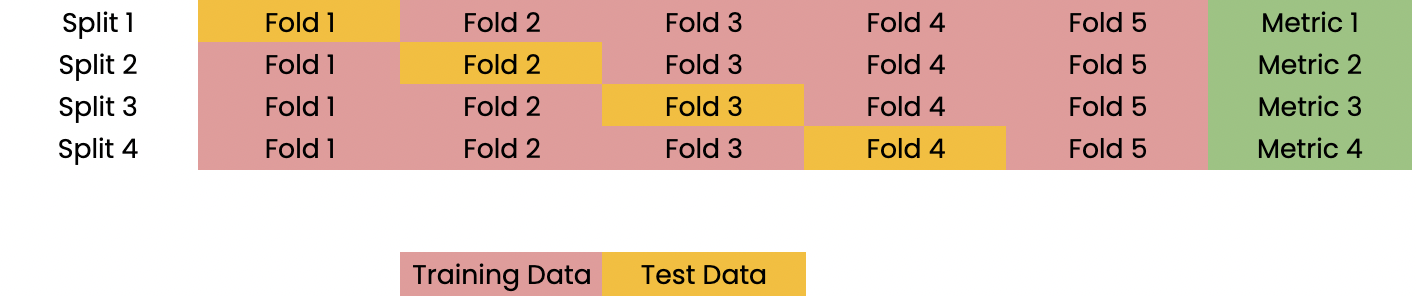

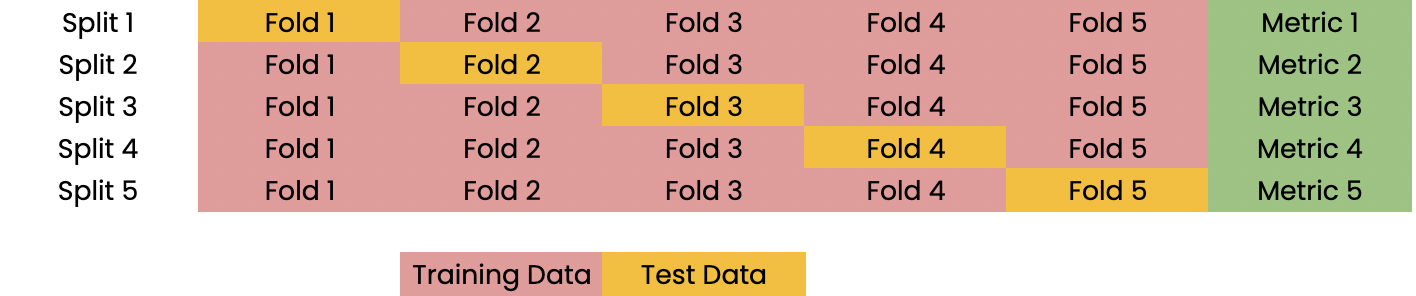

Cross-validation basics
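
The idea behind k-fold cross-validation can be sketched as a manual loop: `KFold` splits the row indices into k folds, and each fold is held out once as the test set while the model is fitted on the remaining k − 1 folds. This sketch assumes synthetic data from `make_regression` and 5 folds; the names `fold_scores` and `held_out` are illustrative.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold

# Synthetic data (assumption: stands in for the course's X, y)
X, y = make_regression(n_samples=60, n_features=3, noise=10.0, random_state=0)

kf = KFold(n_splits=5, shuffle=True, random_state=42)
fold_scores = []
held_out = []
for train_idx, test_idx in kf.split(X):
    # Fit on k-1 folds, evaluate on the single held-out fold
    reg = LinearRegression().fit(X[train_idx], y[train_idx])
    fold_scores.append(reg.score(X[test_idx], y[test_idx]))  # R^2 per fold
    held_out.extend(test_idx.tolist())

print(np.round(fold_scores, 3))
```

Every row ends up in the test set exactly once, so together the k scores cover the whole dataset.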


Cross-validation and model performance

5 folds = 5-fold CV

10 folds = 10-fold CV

k folds = k-fold CV

More folds = more computationally expensive
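
The cost grows because k-fold CV fits one model per fold, so 10-fold CV trains twice as many models as 5-fold CV, each on a larger share ((k − 1)/k) of the data. A minimal sketch, assuming synthetic data, that uses `cross_validate` with `return_estimator=True` to count the fitted models:

```python
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_validate

# Synthetic data (assumption: stands in for the course's X, y)
X, y = make_regression(n_samples=100, n_features=4, noise=15.0, random_state=0)

n_models = {}
for k in (5, 10):
    kf = KFold(n_splits=k, shuffle=True, random_state=42)
    # return_estimator=True keeps every model fitted during CV
    res = cross_validate(LinearRegression(), X, y, cv=kf, return_estimator=True)
    n_models[k] = len(res["estimator"])
    print(f"{k} folds -> {n_models[k]} fits, total fit time {res['fit_time'].sum():.4f}s")
```

Fit times for a linear model on toy data are tiny; the fit count is the part that scales with k.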

Cross-validation in scikit-learn

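import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import cross_val_score, KFold

# X, y are assumed to be defined earlier (features and target)
# 6 folds; rows are shuffled before splitting, with a fixed seed
kf = KFold(n_splits=6, shuffle=True, random_state=42)
reg = LinearRegression()
# One R^2 score per fold (the estimator's default score method)
cv_results = cross_val_score(reg, X, y, cv=kf)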
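
A self-contained version of the code above, assuming synthetic data from `make_regression` in place of the course's `X` and `y`. It also contrasts passing a `KFold` object with passing a plain integer: for a regressor, `cv=6` means `KFold(n_splits=6)` without shuffling, which is why the slide builds `kf` explicitly. Shuffling matters when rows are stored in some order (for example sorted by target or by date).

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import KFold, cross_val_score

# Synthetic data (assumption: stands in for the course's X, y)
X, y = make_regression(n_samples=120, n_features=4, noise=20.0, random_state=0)
reg = LinearRegression()

# Shuffled folds, as on the slide
kf = KFold(n_splits=6, shuffle=True, random_state=42)
shuffled = cross_val_score(reg, X, y, cv=kf)

# An integer cv for a regressor uses unshuffled KFold
unshuffled = cross_val_score(reg, X, y, cv=6)
explicit = cross_val_score(reg, X, y, cv=KFold(n_splits=6))

print(np.round(shuffled, 3))
print(np.allclose(unshuffled, explicit))  # same folds, so same scores
```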

Evaluating cross-validation performance

print(cv_results)

[0.70262578, 0.7659624, 0.75188205, 0.76914482, 0.72551151, 0.73608277]

print(np.mean(cv_results), np.std(cv_results))

0.7418682216666667 0.023330243960652888

print(np.quantile(cv_results, [0.025, 0.975]))

[0.7054865  0.76874702]
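
The summary statistics above can be reproduced directly from the six printed fold scores. `np.std` defaults to the population standard deviation (`ddof=0`), and `np.quantile` interpolates linearly by default. With only six folds, the 2.5% and 97.5% quantiles fall just inside the smallest and largest scores, so the interval reads best as a rough indicator of spread across folds.

```python
import numpy as np

# The six fold scores printed on the slide
cv_results = np.array([0.70262578, 0.7659624, 0.75188205,
                       0.76914482, 0.72551151, 0.73608277])

mean = np.mean(cv_results)
std = np.std(cv_results)  # population std (ddof=0)
# Empirical 2.5th and 97.5th percentiles, linear interpolation
interval = np.quantile(cv_results, [0.025, 0.975])

print(mean, std)
print(interval)
```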

Let's practice!