Dimension reduction with PCA

Unsupervised Learning in Python

Benjamin Wilson

Director of Research at lateral.io

Dimension reduction

- Represents the same data using fewer features.

- Important part of machine-learning pipelines.

- Can be performed using PCA.

Dimension reduction with PCA

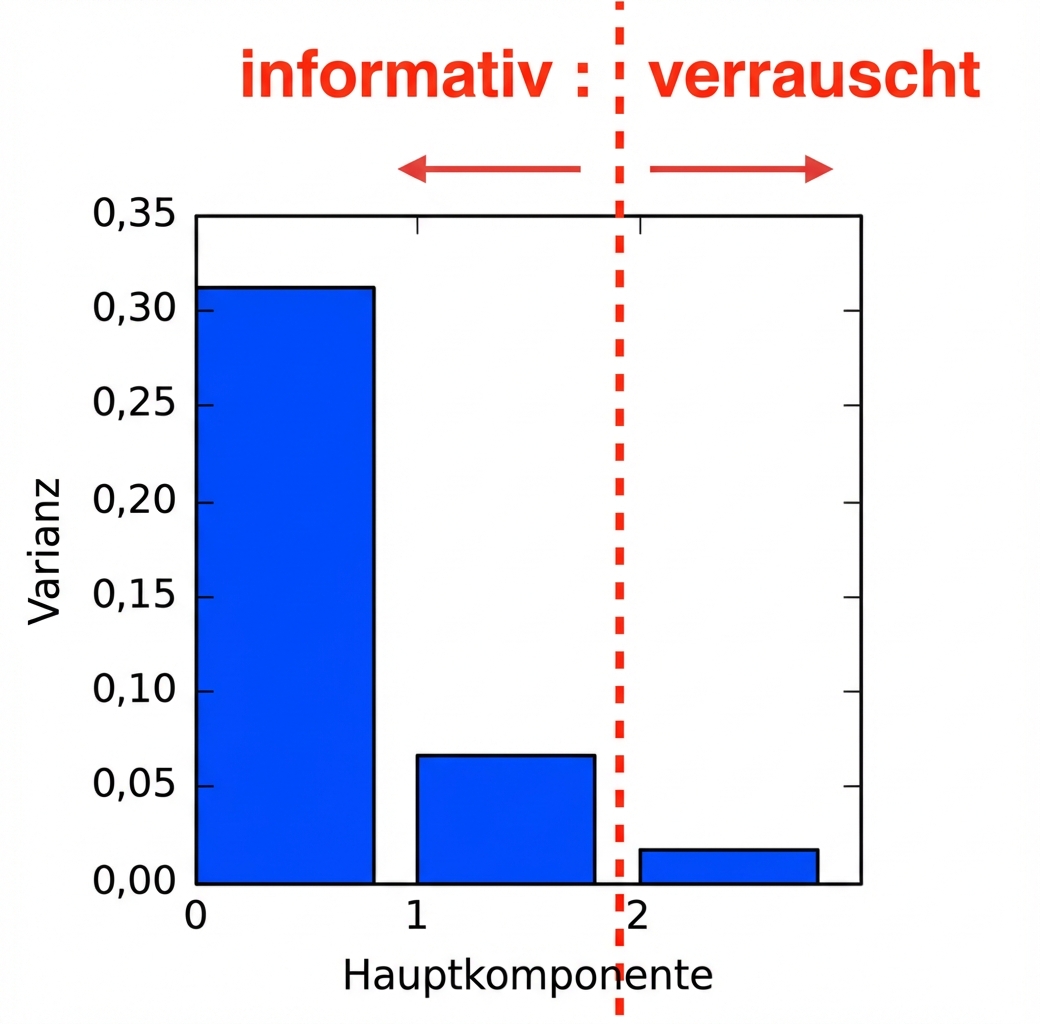

- The PCA features are sorted in decreasing order of variance.

- Assumes that the low-variance features are "noise".

- Assumes that the high-variance features are informative.
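The ordering by variance can be checked directly on a fitted model. The following is a minimal sketch on synthetic data (the data and the scaling factors are assumptions made for illustration, not part of the course material); it reads the per-feature variances from the `explained_variance_` attribute.

```python
import numpy as np
from sklearn.decomposition import PCA

# Synthetic data (an assumption for illustration): 200 samples, 3 features
# with deliberately different spreads.
rng = np.random.default_rng(0)
samples = rng.normal(size=(200, 3)) * np.array([5.0, 1.0, 0.1])

# Fit PCA without discarding any features.
pca = PCA()
pca.fit(samples)

# explained_variance_ holds the variance of each PCA feature,
# sorted from largest to smallest.
variances = pca.explained_variance_
print(variances)
```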

Dimension reduction with PCA

- Specify how many features to keep.

- For example, PCA(n_components=2) keeps the first two PCA features.

- The intrinsic dimension is a good choice.
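One possible way to pick `n_components` from the intrinsic dimension is sketched below. The data are hypothetical (two underlying factors spread over four measured features, plus a little noise), and the 1% variance threshold is an arbitrary choice for this sketch rather than a rule from the course.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(42)

# Hypothetical data: 2 underlying factors mixed into 4 measured features,
# plus a small amount of noise.
latent = rng.normal(size=(300, 2))
mixing = np.array([[1.0, 0.5, -0.3, 0.8],
                   [0.2, -1.0, 0.7, 0.4]])
samples = latent @ mixing + 0.01 * rng.normal(size=(300, 4))

# Fit PCA with all features to inspect how the variance is distributed.
pca = PCA().fit(samples)
ratios = pca.explained_variance_ratio_

# Count the PCA features that carry a non-negligible share of the variance.
# The 1% threshold is an assumption made for this sketch.
n_keep = int(np.sum(ratios > 0.01))

# Refit, keeping only that many PCA features.
reduced = PCA(n_components=n_keep).fit_transform(samples)
print(n_keep, reduced.shape)
```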

Dimension reduction of the Iris dataset

- samples = array of Iris measurements (four features)

- species = list of Iris species numbers

from sklearn.decomposition import PCA

pca = PCA(n_components=2)

pca.fit(samples)

PCA(n_components=2)

transformed = pca.transform(samples)

print(transformed.shape)

(150, 2)
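The slide does not show where `samples` and `species` come from. A self-contained sketch, assuming the data are taken from scikit-learn's bundled `load_iris` dataset, could look like this:

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

# Assumption: use scikit-learn's bundled copy of the Iris dataset.
iris = load_iris()
samples = iris.data     # 150 rows, 4 measurements per flower
species = iris.target   # species number (0, 1 or 2) for each row

# Keep only the first two PCA features.
pca = PCA(n_components=2)
transformed = pca.fit_transform(samples)  # fit and transform in one step

print(transformed.shape)
```

Here `fit_transform` combines the separate `fit` and `transform` calls shown on the slide.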

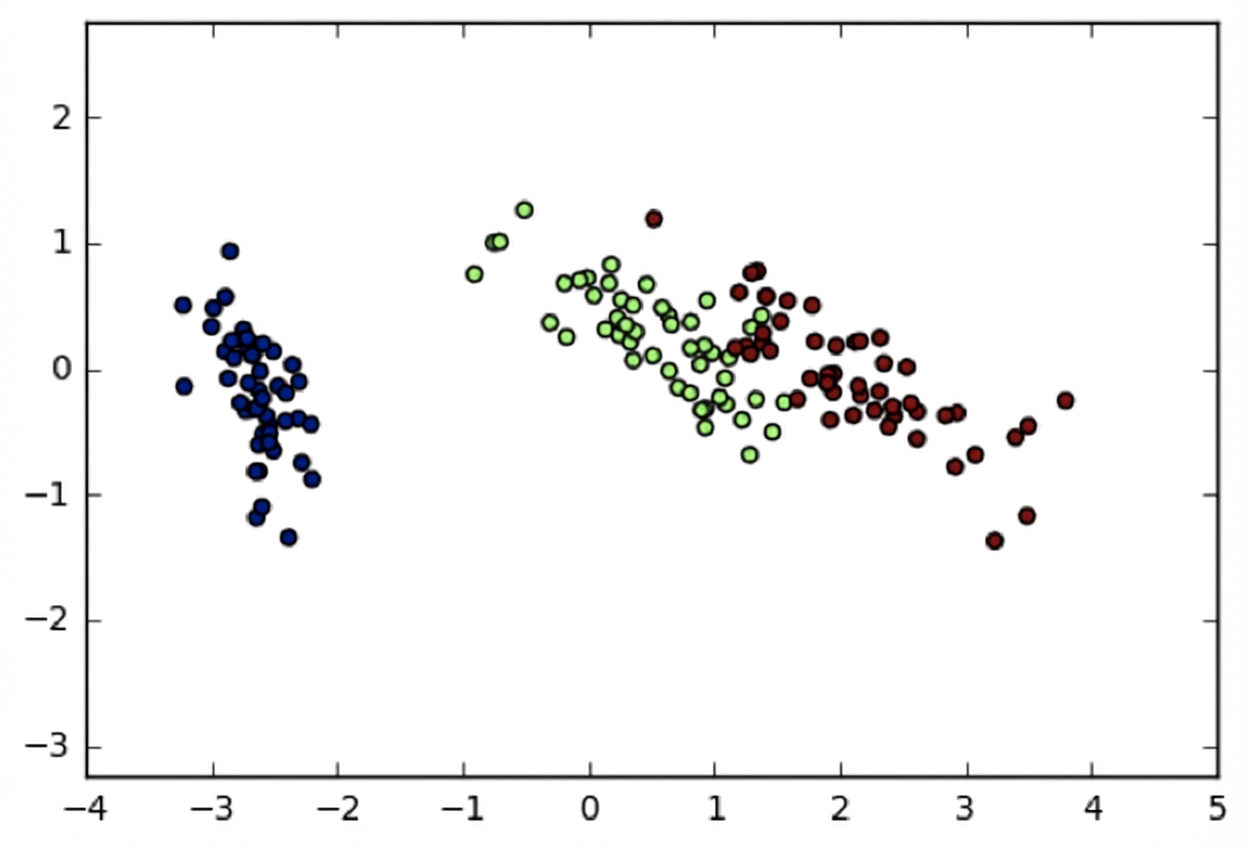

Iris dataset in two dimensions

- PCA has reduced the dimension to two.

- The two PCA features with the highest variance were retained.

- Important information is preserved: the species remain distinct.

import matplotlib.pyplot as plt

xs = transformed[:,0]

ys = transformed[:,1]

plt.scatter(xs, ys, c=species)

plt.show()

Dimension reduction with PCA

- Discards the low-variance PCA features.

- Assumes that the high-variance features are informative.

- This assumption typically holds in practice (e.g. for the Iris dataset).
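To get a feel for how much is discarded, the share of total variance carried by the retained PCA features can be inspected. This is a rough sketch, again assuming the Iris measurements come from scikit-learn's `load_iris`; it uses the `explained_variance_ratio_` attribute of a fitted `PCA`.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA

# Assumption: use scikit-learn's bundled copy of the Iris dataset.
samples = load_iris().data

# Fit PCA with all four features to see the full variance breakdown.
pca = PCA().fit(samples)
ratios = pca.explained_variance_ratio_

# Fraction of the total variance kept by the first two PCA features.
kept = ratios[:2].sum()
print(f"Variance retained by two PCA features: {kept:.1%}")
```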

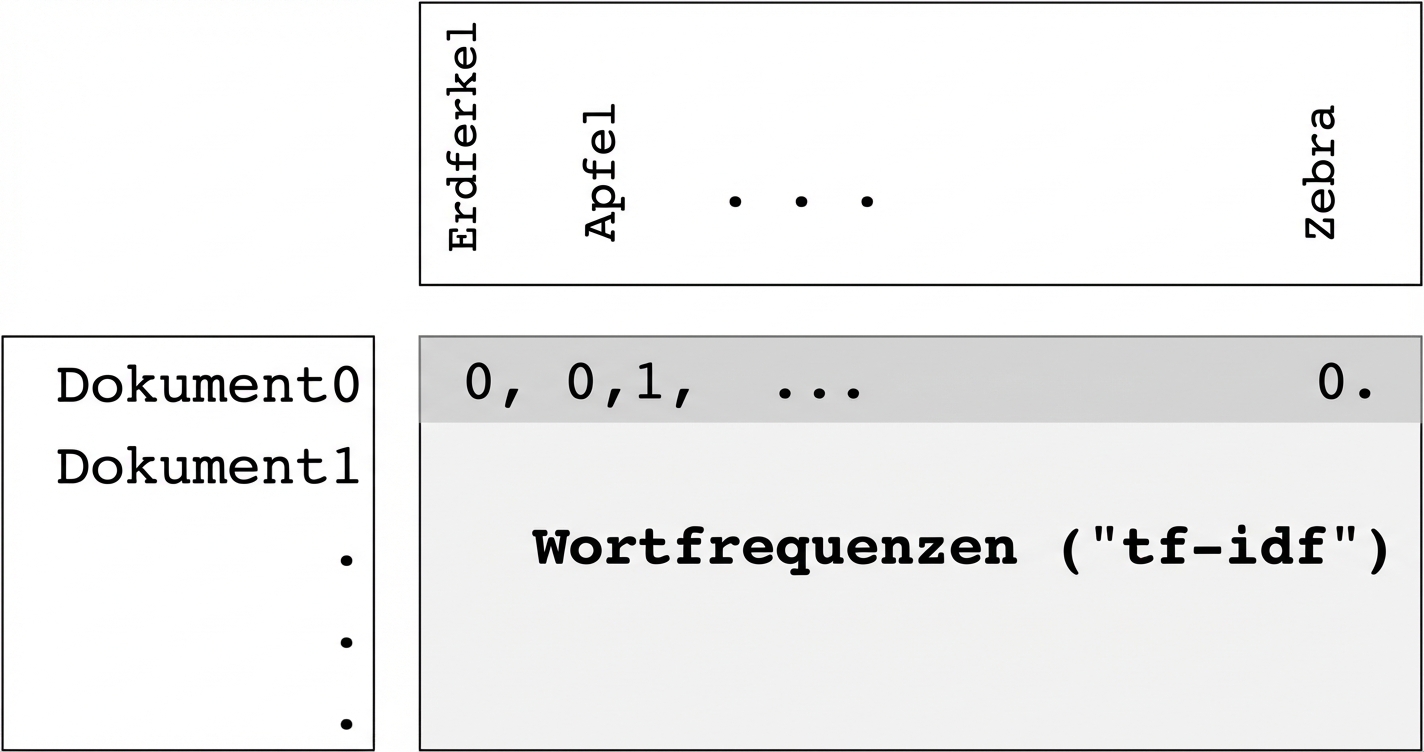

Word frequency arrays

- Rows represent documents, columns represent words.

- Entries measure the frequency of each word in each document.

- Measured using "tf-idf" (more on this later).
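As a small illustration of such an array, the sketch below builds one with scikit-learn's `TfidfVectorizer`. The three example documents are made up, and the choice of `TfidfVectorizer` is an assumption, since the slide does not say how the array is produced.

```python
from scipy.sparse import issparse
from sklearn.feature_extraction.text import TfidfVectorizer

# Made-up toy corpus: one string per document.
documents = ["cats say meow", "dogs say woof", "dogs chase cats"]

# Each row of the result is a document, each column a word.
tfidf = TfidfVectorizer()
word_frequencies = tfidf.fit_transform(documents)

print(word_frequencies.shape)    # (number of documents, number of words)
print(sorted(tfidf.vocabulary_)) # the words behind the columns
print(issparse(word_frequencies))
```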

Sparse arrays and csr_matrix

- "Sparse": most entries are zero.

- You can use scipy.sparse.csr_matrix instead of a NumPy array.

- csr_matrix remembers only the non-zero entries (saves space!).
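The space saving can be made concrete with a rough comparison. The array size and the three non-zero values below are arbitrary choices for this sketch; the sparse footprint is estimated from the three internal arrays of the CSR format.

```python
import numpy as np
from scipy.sparse import csr_matrix

# A mostly empty 1000 x 1000 array with only three non-zero entries
# (sizes and values chosen arbitrarily for illustration).
dense = np.zeros((1000, 1000))
dense[0, 1] = 3.0
dense[10, 20] = 1.5
dense[999, 0] = 2.0

# Convert to CSR format, which stores only the non-zero entries.
sparse = csr_matrix(dense)

dense_bytes = dense.nbytes
# CSR keeps three arrays: the values, their column indices, and row pointers.
sparse_bytes = sparse.data.nbytes + sparse.indices.nbytes + sparse.indptr.nbytes

print(sparse.nnz, dense_bytes, sparse_bytes)
```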

TruncatedSVD and csr_matrix

- Older versions of scikit-learn's PCA don't support csr_matrix.

- Use scikit-learn's TruncatedSVD instead.

- Performs the same transformation as PCA, except that it does not center the data first.

from sklearn.decomposition import TruncatedSVD

model = TruncatedSVD(n_components=3)

model.fit(documents) # documents is csr_matrix

transformed = model.transform(documents)
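The snippet above leaves `documents` undefined. A self-contained sketch, assuming a randomly generated sparse matrix as a stand-in for a real word frequency array, might look like this:

```python
from scipy.sparse import random as sparse_random
from sklearn.decomposition import TruncatedSVD

# Stand-in for a word frequency array (an assumption for this sketch):
# 100 "documents", 50 "words", about 5% of the entries non-zero.
documents = sparse_random(100, 50, density=0.05, format="csr", random_state=0)

# TruncatedSVD works directly on the sparse matrix.
model = TruncatedSVD(n_components=3, random_state=0)
transformed = model.fit_transform(documents)

# The result is an ordinary dense NumPy array with 3 columns.
print(transformed.shape)
```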

Let's practice!
