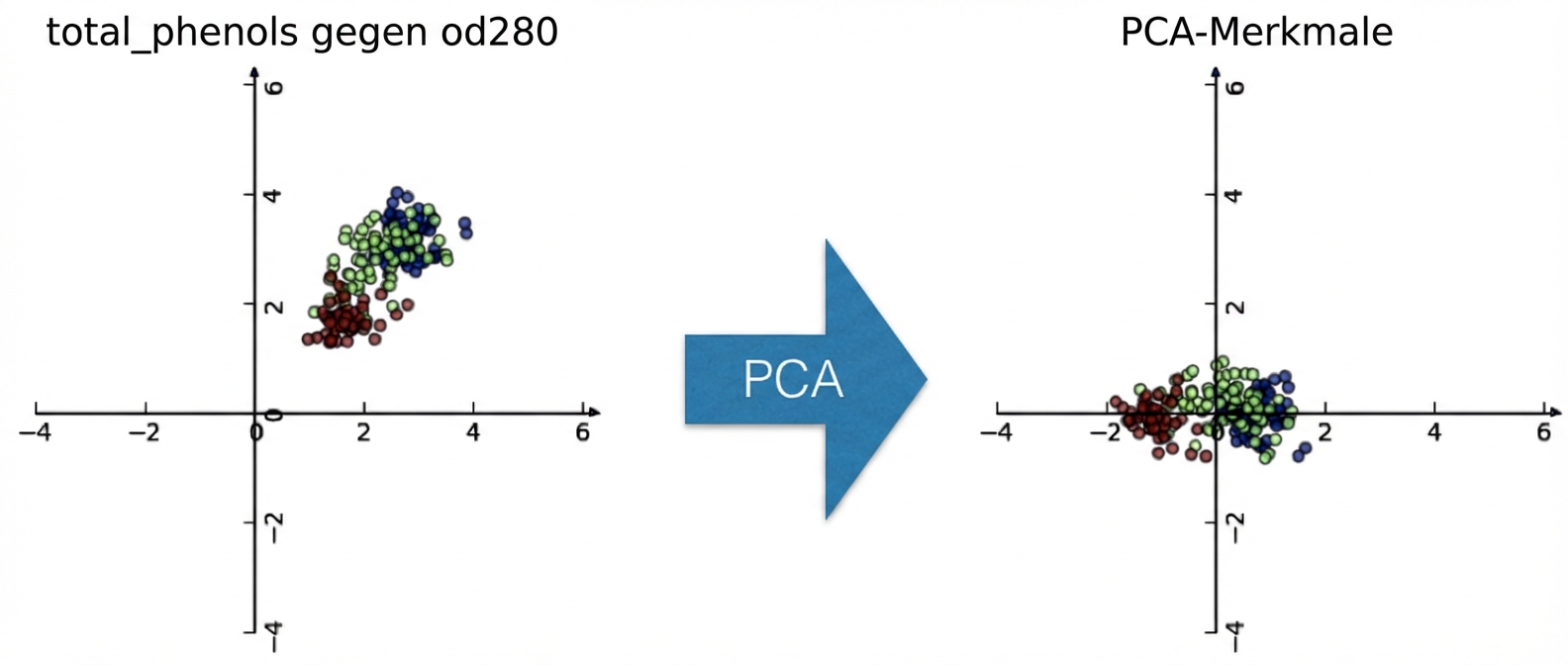

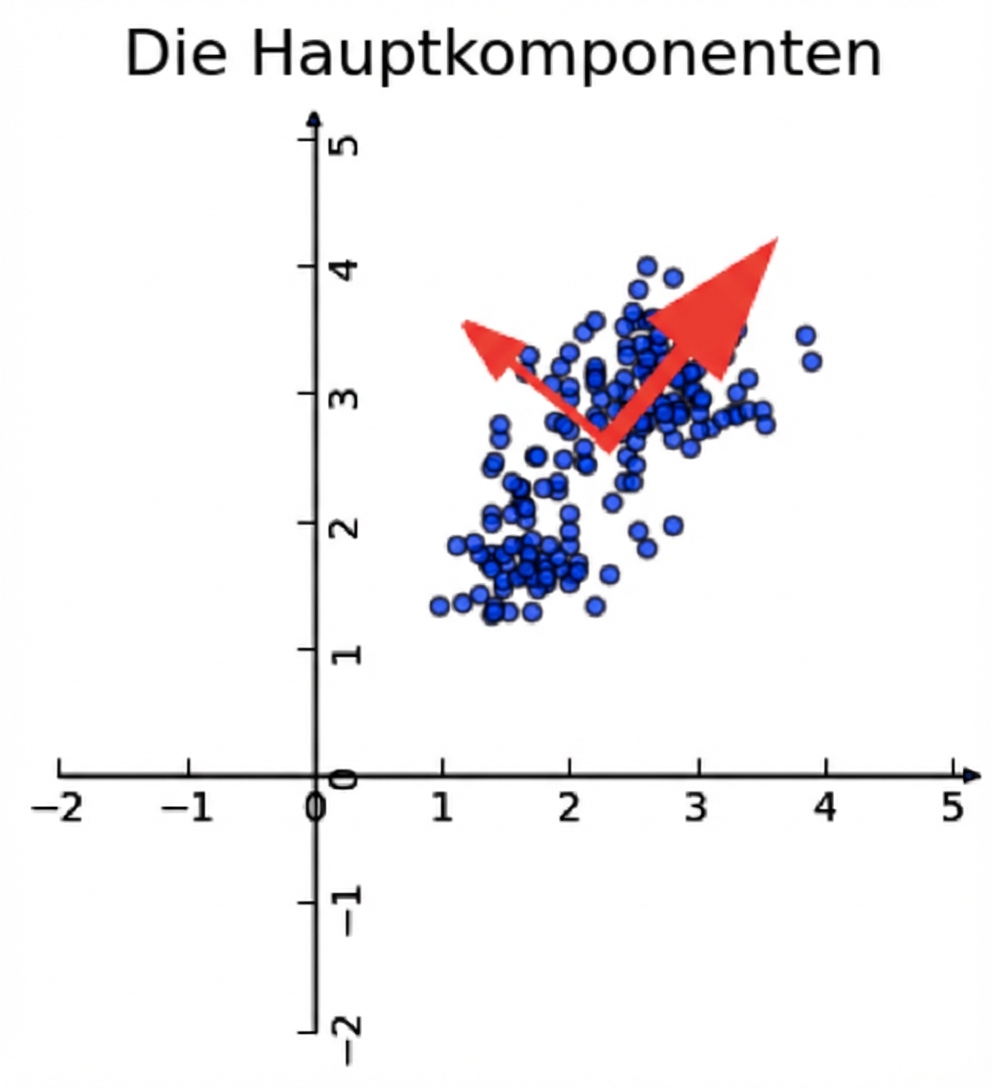

Visualizing the PCA transformation

Unsupervised Learning in Python

Benjamin Wilson

Director of Research at lateral.io

Dimension reduction

- More efficient storage and computation

- Removes less-informative "noise" features

- ... which cause problems for prediction tasks, e.g. classification and regression.

Principal Component Analysis

- PCA = "Principal Component Analysis"

- Fundamental dimension reduction technique

- First step: "decorrelation" (covered here)

- Second step: reduces the dimension (covered later)

PCA aligns data with axes

- Rotates the data samples so they are aligned with the coordinate axes.

- Shifts the data samples so they have mean 0.

- No information is lost.
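The two claims above (mean 0 after the shift, and no information lost) can be checked numerically. The following is a minimal sketch, assuming synthetic two-feature data as a stand-in for the course's samples; the variable names `col_means`, `restored`, and `max_error` are illustrative and not from the slides.

```python
import numpy as np
from sklearn.decomposition import PCA

# Synthetic stand-in data: two correlated features (assumption, not the course data)
rng = np.random.default_rng(0)
x = rng.normal(loc=2.5, scale=0.6, size=200)
y = 1.2 * x + rng.normal(scale=0.3, size=200)
samples = np.column_stack([x, y])

model = PCA()  # keeps all components, so nothing is discarded
transformed = model.fit_transform(samples)

# Shift: every PCA feature has mean 0 (up to floating-point error)
col_means = transformed.mean(axis=0)

# No information lost: the inverse transform recovers the original samples
restored = model.inverse_transform(transformed)
max_error = np.abs(restored - samples).max()

print(col_means)
print(max_error)
```

The reconstruction is only exact here because all components are kept; once the dimension is reduced, some information is discarded.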

PCA follows the fit/transform pattern

- PCA is a scikit-learn component like KMeans or StandardScaler.

- fit() learns the transformation from the given data.

- transform() applies the learned transformation.

- transform() can also be applied to new data.
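As a rough illustration of the fit/transform pattern, the sketch below fits PCA on one set of samples and then applies the same learned transformation to samples the model has not seen. The data is synthetic, and `make_samples` and `new_samples` are hypothetical names introduced only for this example.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(1)

def make_samples(n):
    """Hypothetical helper: n synthetic samples with two correlated features."""
    x = rng.normal(loc=2.5, scale=0.6, size=n)
    y = 1.2 * x + rng.normal(scale=0.3, size=n)
    return np.column_stack([x, y])

samples = make_samples(150)    # data used to learn the transformation
new_samples = make_samples(5)  # unseen data

model = PCA()
model.fit(samples)                              # learns mean and rotation from samples
transformed = model.transform(samples)          # applies the learned transformation
new_transformed = model.transform(new_samples)  # same transformation, new data

print(new_transformed.shape)
```

Note that `transform()` on new data reuses the mean learned during `fit()`, so the new PCA features are not guaranteed to have mean 0 themselves.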

Using scikit-learn PCA

samples = array of two features (total_phenols & od280)

[[ 2.8 3.92]

...

[ 2.05 1.6 ]]

from sklearn.decomposition import PCA

model = PCA()

model.fit(samples)

PCA()

transformed = model.transform(samples)

PCA features

- Rows of transformed correspond to samples.

- Columns of transformed are the "PCA features".

- Each row gives the PCA feature values of the corresponding sample.

print(transformed)

[[ 1.32771994e+00 4.51396070e-01]

[ 8.32496068e-01 2.33099664e-01]

...

[ -9.33526935e-01 -4.60559297e-01]]

PCA features are not correlated

- Features of a dataset are often correlated, e.g. total_phenols and od280.

- PCA aligns the data with the axes.

- The resulting PCA features are not linearly correlated ("decorrelation").

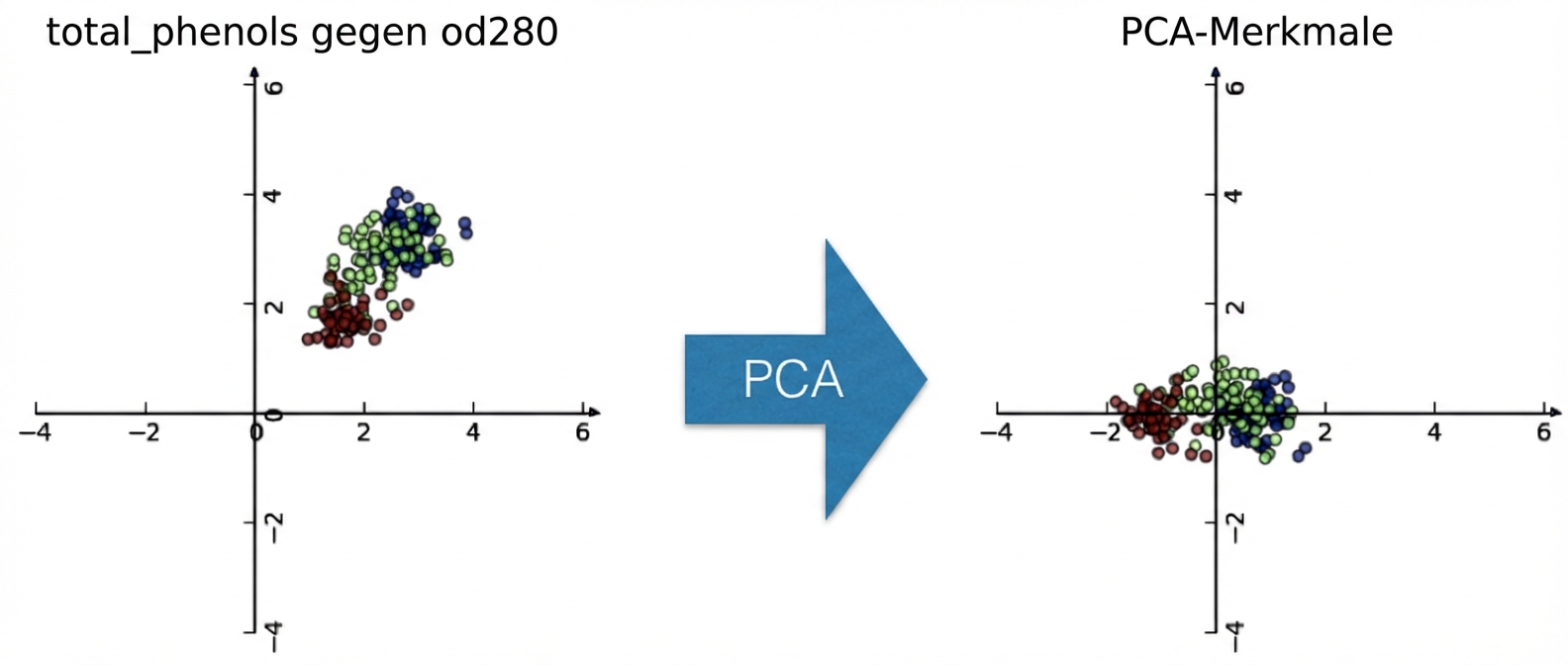

Pearson correlation

- Measures the linear correlation of features

- Value between -1 and 1

- A value of 0 means there is no linear correlation.

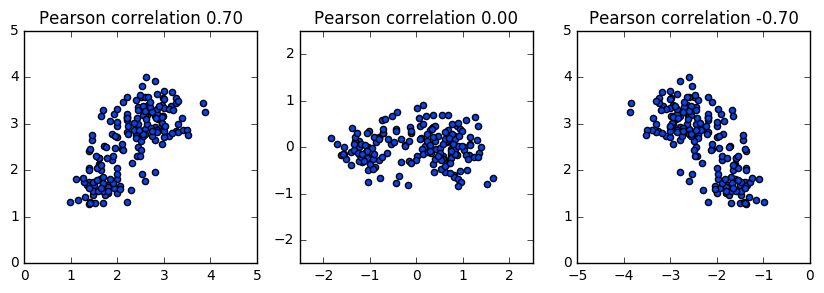
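Decorrelation can be checked by comparing the Pearson correlation before and after the transformation. This is a sketch under the assumption that SciPy is available; it uses `scipy.stats.pearsonr` on synthetic data standing in for total_phenols and od280.

```python
import numpy as np
from scipy.stats import pearsonr
from sklearn.decomposition import PCA

# Synthetic stand-in for two correlated features (assumption, not the course data)
rng = np.random.default_rng(2)
x = rng.normal(loc=2.5, scale=0.6, size=200)
y = 1.2 * x + rng.normal(scale=0.3, size=200)
samples = np.column_stack([x, y])

# Correlation of the original features
corr_before, _ = pearsonr(samples[:, 0], samples[:, 1])

# Correlation of the PCA features
transformed = PCA().fit_transform(samples)
corr_after, _ = pearsonr(transformed[:, 0], transformed[:, 1])

print(corr_before)  # clearly positive for this data
print(corr_after)   # zero up to floating-point error
```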

Principal components

- "Principal components" = directions of variance

- PCA aligns the principal components with the axes.

Principal components

- Available as the components_ attribute of the PCA object

- Each row defines a direction of displacement from the mean.

print(model.components_)

[[ 0.64116665 0.76740167]

[-0.76740167 0.64116665]]
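To make the role of `components_` more concrete, the sketch below checks three properties on synthetic data: the rows are orthogonal unit vectors, projecting the centered samples onto them reproduces `transform()`, and the first direction carries the most variance. The names `gram`, `manual`, and `variances` are illustrative only, and the manual projection assumes the default `whiten=False`.

```python
import numpy as np
from sklearn.decomposition import PCA

# Synthetic stand-in data (assumption, not the course data)
rng = np.random.default_rng(3)
x = rng.normal(loc=2.5, scale=0.6, size=200)
y = 1.2 * x + rng.normal(scale=0.3, size=200)
samples = np.column_stack([x, y])

model = PCA()
transformed = model.fit_transform(samples)

components = model.components_  # one principal component per row
mean = model.mean_              # per-feature mean learned in fit()

# Rows are unit length and mutually orthogonal, so this is the identity matrix
gram = components @ components.T

# transform() amounts to: subtract the mean, then project onto each component
manual = (samples - mean) @ components.T

# Components are ordered by the variance they capture, largest first
variances = transformed.var(axis=0, ddof=1)

print(gram)
print(variances)
```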

Let's practice!
