Splitting external data for retrieval

Developing LLM Applications with LangChain

Jonathan Bennion

AI Engineer & LangChain Contributor

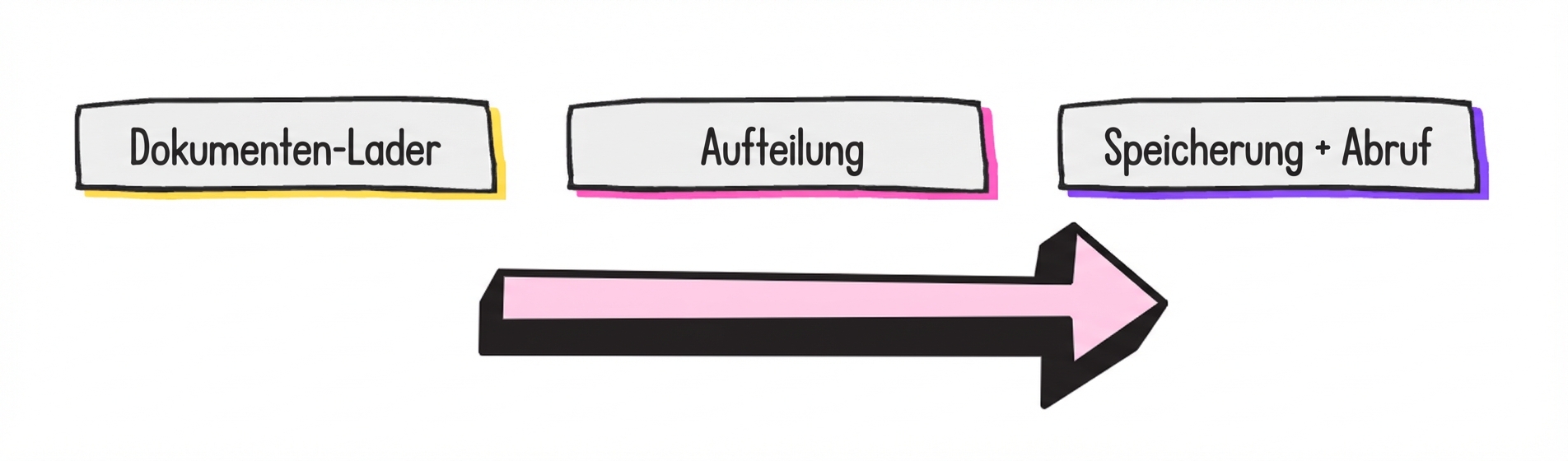

RAG development steps

- Document splitting: split the document into chunks

- Split documents so that they fit within an LLM's context window

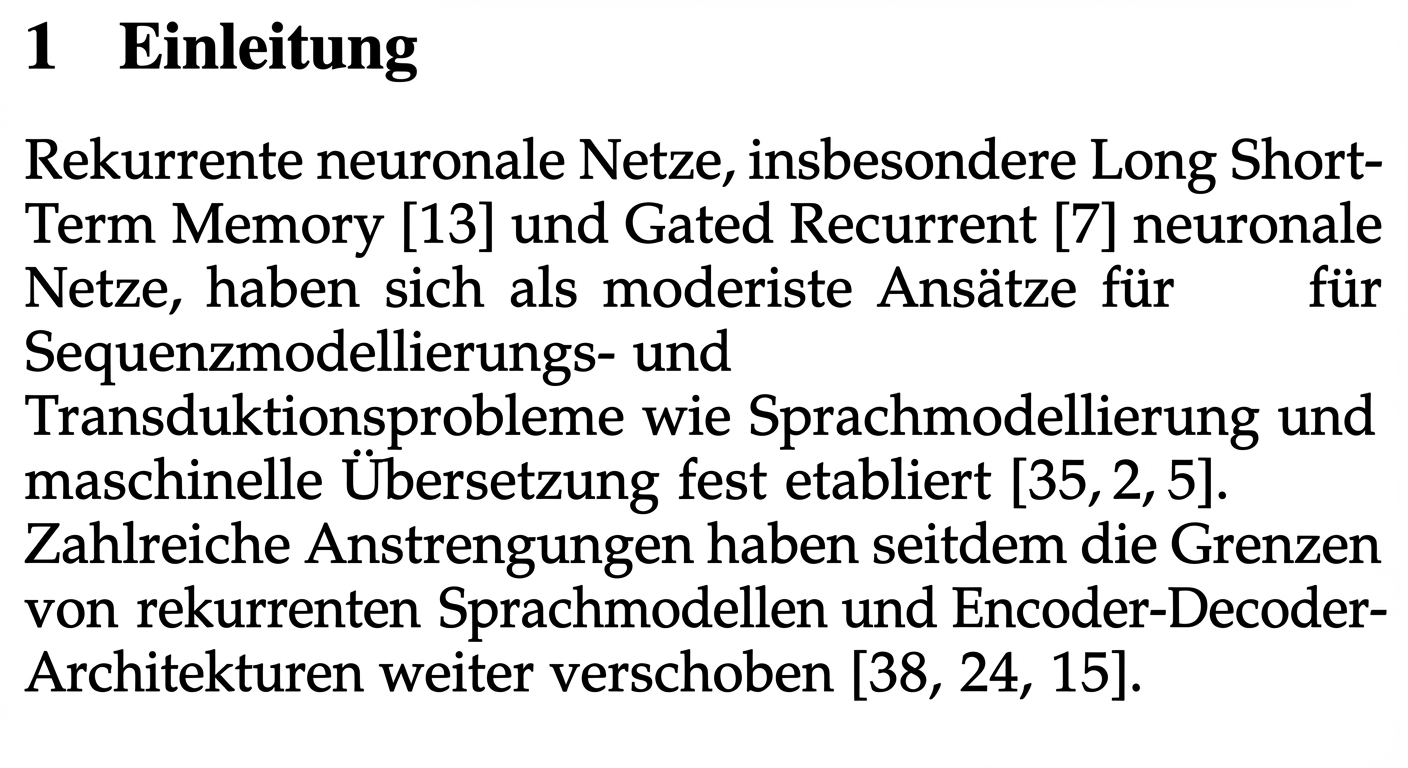

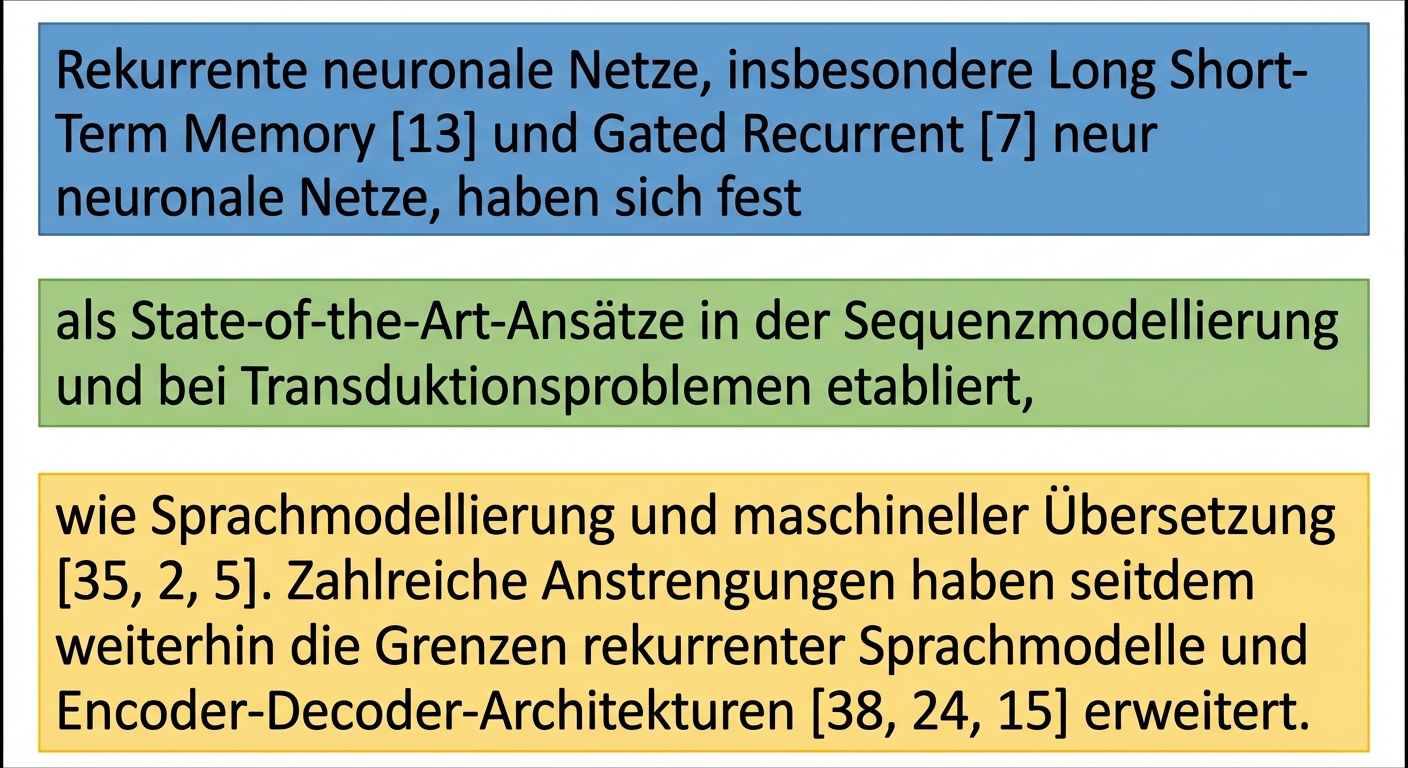

Thinking about splitting...

Line 1:

Recurrent neural networks, long short-term memory [13] and gated recurrent [7] neural networks

Line 2:

in particular, have been firmly established as state of the art approaches in sequence modeling and

1 https://arxiv.org/abs/1706.03762

Chunk overlap
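Overlap means that consecutive chunks share a few characters, so text cut at a chunk boundary still appears at the start of the next chunk. The following is a minimal sketch in plain Python, assuming simple character-based windows. `sliding_chunks` is a hypothetical helper written for illustration; it is not how LangChain's splitters are implemented.

```python
def sliding_chunks(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Cut text into windows of chunk_size characters that share chunk_overlap characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - chunk_overlap  # how far each window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


text = ("Recurrent neural networks, long short-term memory [13] "
        "and gated recurrent [7] neural networks")
chunks = sliding_chunks(text, chunk_size=24, chunk_overlap=3)

for chunk in chunks:
    print(repr(chunk))
```

Each printed chunk begins with the last three characters of the previous one. Note that these windows still cut through words, which is why separator-aware splitters are usually preferred.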

What is the best document splitting strategy?

- CharacterTextSplitter

- RecursiveCharacterTextSplitter

- Many others

1 Wikipedia Commons

quote = '''One machine can do the work of fifty ordinary humans.
No machine can do the work of one extraordinary human.'''

len(quote)

108

chunk_size = 24

chunk_overlap = 3

1 Elbert Hubbard

from langchain_text_splitters import CharacterTextSplitter

ct_splitter = CharacterTextSplitter(
    separator='.',
    chunk_size=chunk_size,
    chunk_overlap=chunk_overlap
)

docs = ct_splitter.split_text(quote)
print(docs)
print([len(doc) for doc in docs])

['One machine can do the work of fifty ordinary humans', 'No machine can do the work of one extraordinary human']

[52, 53]

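- Splits on the separator so that chunks stay below chunk_size, but this may not always succeed! Here both chunks (52 and 53 characters) exceed chunk_size = 24, because no separator occurs within that limit.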
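Why the limit can be exceeded is easier to see in a simplified model of separator-based splitting. The sketch below is an approximation in plain Python, not LangChain's actual code; `split_on_separator` is a hypothetical helper. It splits on one separator and greedily merges neighbouring pieces, but it has no way to shorten a single piece that is already longer than `chunk_size`.

```python
def split_on_separator(text: str, separator: str, chunk_size: int) -> list[str]:
    """Split on one separator, merging neighbouring pieces while they fit in chunk_size."""
    pieces = [p.strip() for p in text.split(separator) if p.strip()]
    chunks, current = [], ""
    for piece in pieces:
        candidate = piece if not current else current + separator + piece
        if len(candidate) <= chunk_size:
            current = candidate  # still fits: keep growing the current chunk
        else:
            if current:
                chunks.append(current)
            current = piece  # may itself be longer than chunk_size
    if current:
        chunks.append(current)
    return chunks


quote = ("One machine can do the work of fifty ordinary humans.\n"
         "No machine can do the work of one extraordinary human.")
chunk_size = 24

chunks = split_on_separator(quote, ".", chunk_size)
print(chunks)
print([len(chunk) for chunk in chunks])
```

With only `'.'` available as a separator, each sentence is an indivisible piece, so both chunks come out longer than 24 characters.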

from langchain_text_splitters import RecursiveCharacterTextSplitter

rc_splitter = RecursiveCharacterTextSplitter(
    separators=["\n\n", "\n", " ", ""],
    chunk_size=chunk_size,
    chunk_overlap=chunk_overlap
)

docs = rc_splitter.split_text(quote)
print(docs)

RecursiveCharacterTextSplitter

separators=["\n\n", "\n", " ", ""]

['One machine can do the',

'work of fifty ordinary',

'humans.',

'No machine can do the',

'work of one',

'extraordinary human.']
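The chunks above follow from trying each separator in turn and falling back to the next one only for pieces that are still too long. The sketch below models that idea in plain Python. It is a simplification under stated assumptions: `recursive_split` is a hypothetical helper, it ignores `chunk_overlap`, and it does not reproduce every detail of LangChain's implementation.

```python
def recursive_split(text: str, separators: list[str], chunk_size: int) -> list[str]:
    """Split text with the first separator; recurse with the rest on oversized pieces."""
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators or separators[0] == "":
        # Last resort: cut at fixed character positions
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    sep, rest = separators[0], separators[1:]
    if sep not in text:
        return recursive_split(text, rest, chunk_size)

    chunks, current = [], ""
    for piece in text.split(sep):
        if not piece:
            continue
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # merge small pieces while they fit
            continue
        if current:
            chunks.append(current)
            current = ""
        if len(piece) <= chunk_size:
            current = piece
        else:
            # Piece is still too long: fall back to the finer separators
            chunks.extend(recursive_split(piece, rest, chunk_size))
    if current:
        chunks.append(current)
    return chunks


quote = ("One machine can do the work of fifty ordinary humans.\n"
         "No machine can do the work of one extraordinary human.")

chunks = recursive_split(quote, ["\n\n", "\n", " ", ""], chunk_size=24)
for chunk in chunks:
    print(repr(chunk))
```

For this quote the sketch yields the same six chunks as shown above: the text contains no `"\n\n"`, the split on `"\n"` leaves two sentences that are each too long, and the split on `" "` then packs words into chunks of at most 24 characters.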

- Try splitting by paragraph: "\n\n"

- Try splitting by sentence: "\n"

- Try splitting by words: " "

RecursiveCharacterTextSplitter with HTML

from langchain_community.document_loaders import UnstructuredHTMLLoader
from langchain_text_splitters import RecursiveCharacterTextSplitter

loader = UnstructuredHTMLLoader("white_house_executive_order_nov_2023.html")
data = loader.load()

rc_splitter = RecursiveCharacterTextSplitter(
    chunk_size=chunk_size,
    chunk_overlap=chunk_overlap,
    separators=['.']
)

docs = rc_splitter.split_documents(data)
print(docs[0])

Document(page_content="To search this site, enter a search term [...]
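Unlike `split_text`, which takes a string and returns strings, `split_documents` takes loaded documents and returns documents, so each chunk keeps the metadata of the document it came from. The sketch below illustrates that behaviour in plain Python. `Doc`, `split_documents_sketch`, `split_on_period`, the sample text and the file name `example.html` are all hypothetical stand-ins, not LangChain classes or real data.

```python
from dataclasses import dataclass, field


@dataclass
class Doc:
    """Hypothetical stand-in for a document: text plus metadata."""
    page_content: str
    metadata: dict = field(default_factory=dict)


def split_on_period(text: str) -> list[str]:
    """Very simple text splitter used only for this illustration."""
    return [s.strip() for s in text.split(".") if s.strip()]


def split_documents_sketch(docs: list[Doc], split_text) -> list[Doc]:
    """Apply split_text to each document and copy its metadata onto every chunk."""
    chunks = []
    for doc in docs:
        for piece in split_text(doc.page_content):
            chunks.append(Doc(page_content=piece, metadata=dict(doc.metadata)))
    return chunks


data = [Doc("First sentence. Second sentence.", {"source": "example.html"})]
chunks = split_documents_sketch(data, split_on_period)

for chunk in chunks:
    print(chunk.page_content, chunk.metadata)
```

Keeping the source on every chunk is what later lets a retrieval step report where a retrieved passage came from.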

Let's practice!
