Data Pipelines on Kubernetes

Introduction to Kubernetes

Frank Heilmann

Platform Architect and Freelance Instructor

What are Data Pipelines?

- Set of processes to move, transform, or analyze data

- Typical steps:

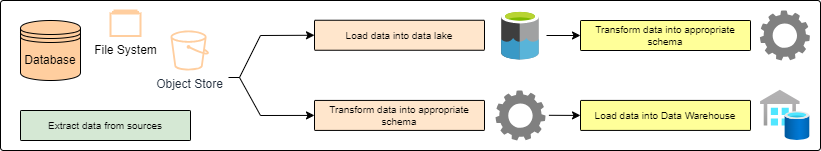

- ETL: Extract data from various data sources, then Transform it into a meaningful schema, and finally Load it into a target data sink (e.g., a data warehouse)

- ELT: Extract data from various data sources, then Load it into a target data sink (e.g., a data lake), and finally Transform it into a meaningful schema when needed
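The difference between ETL and ELT is only the order of the last two steps. A minimal sketch in Python, assuming a hypothetical in-memory source and using plain lists as stand-ins for the data warehouse and data lake (the function names `extract`, `transform`, `run_etl`, and `run_elt` are illustrative, not part of any library):

```python
# Hypothetical sketch: lists stand in for real data sinks.

def extract() -> list[dict]:
    """Pretend to read raw records from a data source (assumed sample data)."""
    return [{"name": " Ada ", "amount": "10"}, {"name": "Bob", "amount": "5"}]


def transform(records: list[dict]) -> list[dict]:
    """Bring raw records into a meaningful schema: trimmed names, integer amounts."""
    return [{"name": r["name"].strip(), "amount": int(r["amount"])} for r in records]


def run_etl(warehouse: list[dict]) -> None:
    """ETL: transform first, so the sink only ever holds cleaned records."""
    warehouse.extend(transform(extract()))


def run_elt(lake: list[dict]) -> list[dict]:
    """ELT: load raw records first, then transform them when they are needed."""
    lake.extend(extract())
    return transform(lake)


if __name__ == "__main__":
    warehouse: list[dict] = []
    run_etl(warehouse)
    print("warehouse:", warehouse)

    lake: list[dict] = []
    view = run_elt(lake)
    print("lake (raw):", lake)
    print("transformed view:", view)
```

In the ELT variant the sink keeps the raw records, so a different transformation can be applied later without extracting the data again.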

Data Pipelines on Kubernetes

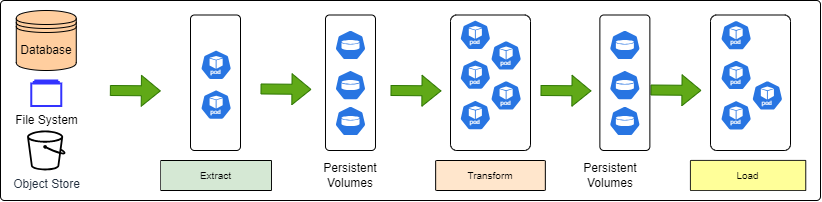

- The steps of a data pipeline map nicely to Kubernetes objects:

  - Extract, Transform, Load steps: Pods (Deployment or StatefulSet)

  - Extracted and Transformed Data: Persistent Volumes

- Kubernetes can scale out Deployments and storage as required, and thereby increase throughput
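The mapping above can be sketched as two Kubernetes manifests: a Deployment for a Transform step and a PersistentVolumeClaim for the data it reads and writes. The following Python sketch builds both as plain dictionaries and prints them as JSON, which `kubectl` accepts alongside YAML. The object names (`transform`, `pipeline-data`), the image `example.com/pipeline/transform:latest`, the mount path, and the sizes are all assumptions made for illustration:

```python
import json


def data_claim(size: str = "10Gi") -> dict:
    """PersistentVolumeClaim for extracted and transformed data (hypothetical name)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": "pipeline-data"},
        "spec": {
            # ReadWriteMany lets several Pods share the volume; whether it is
            # available depends on the storage backend of the cluster.
            "accessModes": ["ReadWriteMany"],
            "resources": {"requests": {"storage": size}},
        },
    }


def transform_deployment(replicas: int = 2) -> dict:
    """Deployment running the Transform step; replicas controls scale-out."""
    labels = {"app": "transform"}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "transform"},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": "transform",
                            # Placeholder image, not a real registry path.
                            "image": "example.com/pipeline/transform:latest",
                            "volumeMounts": [{"name": "data", "mountPath": "/data"}],
                        }
                    ],
                    "volumes": [
                        {
                            "name": "data",
                            "persistentVolumeClaim": {"claimName": "pipeline-data"},
                        }
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    # A "List" object bundles several manifests into one JSON document.
    bundle = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [data_claim(), transform_deployment(replicas=3)],
    }
    print(json.dumps(bundle, indent=2))
```

Assuming the script is saved as `manifests.py`, its output could be piped into `kubectl apply -f -`. Raising `replicas` is the scale-out knob referred to above; a StatefulSet would be the alternative when each Pod needs its own volume.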

Open-Source Tools for Data Pipelines

- Many open-source tools exist that are readily deployable on Kubernetes

- Some examples:

  - Extract: Apache NiFi, Apache Kafka with Kafka Connect

  - Transform: Apache Spark, Apache Kafka, PostgreSQL

  - Load: Apache Spark, Apache Kafka with KSQL, PostgreSQL

  - Storage on top of PVs: MinIO, Ceph

- This list is by no means complete

Let's practice!
