Explainability metrics

Explainable AI in Python

Fouad Trad

Machine Learning Engineer

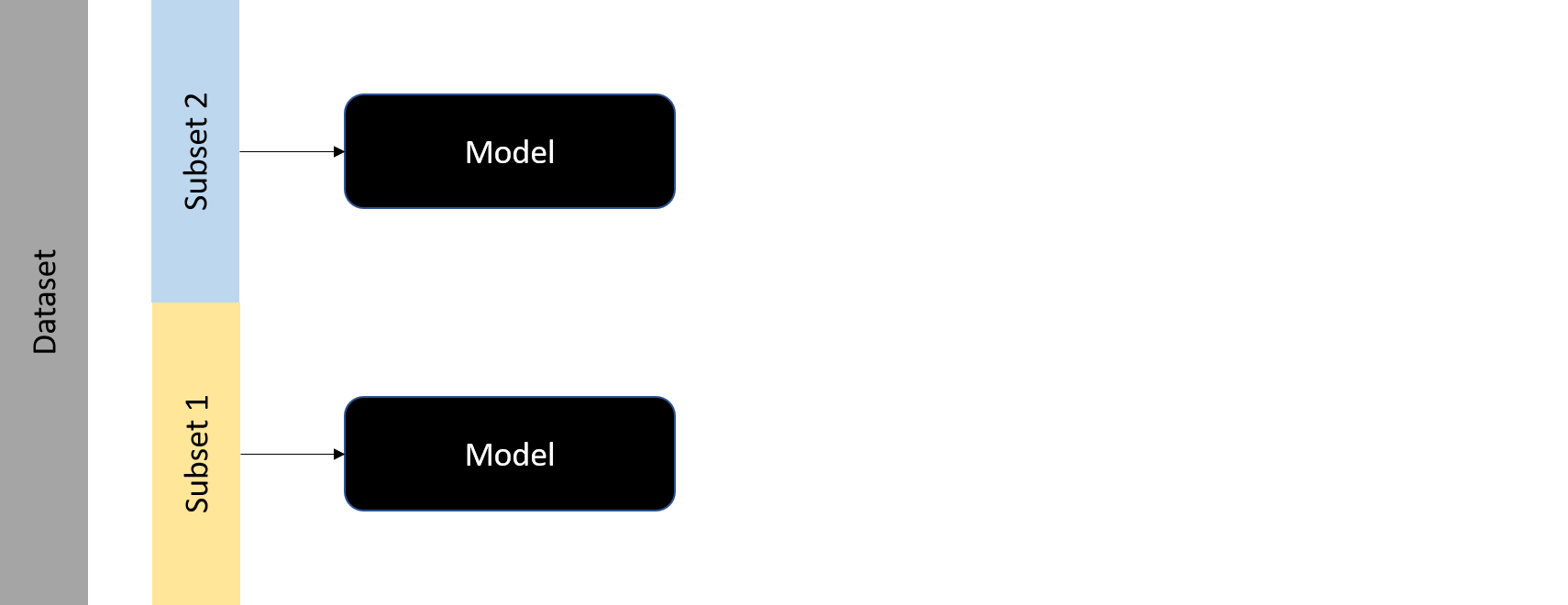

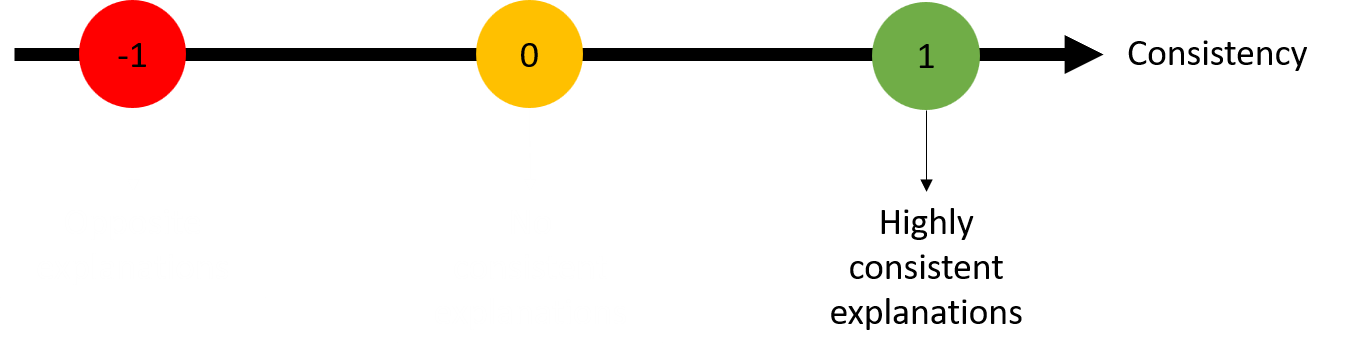

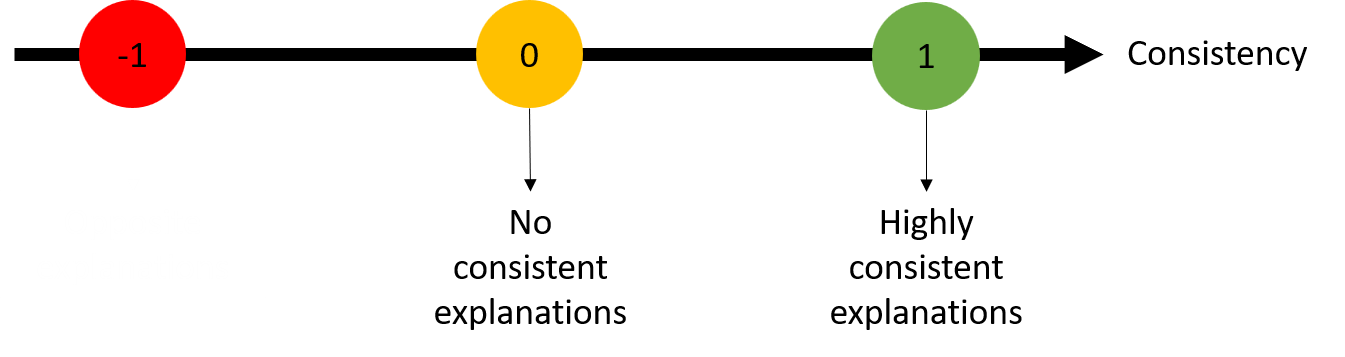

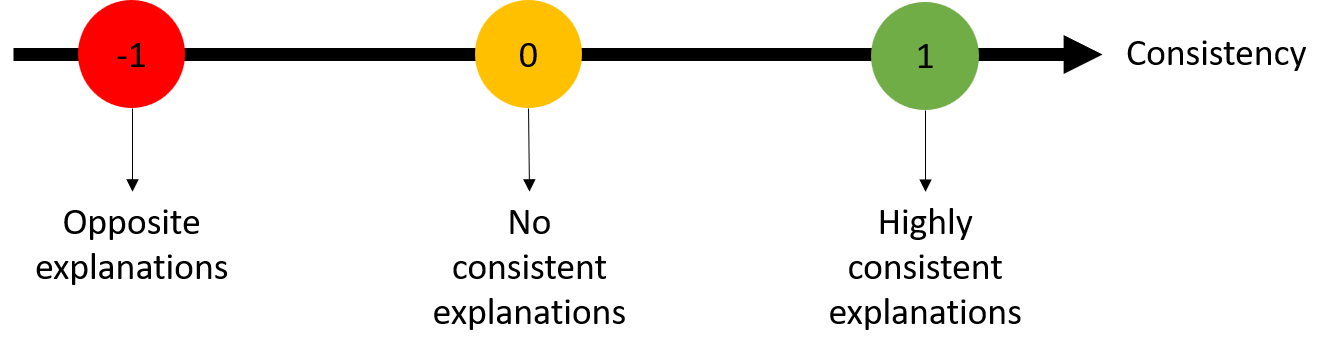

Consistency

- Assesses how stable explanations remain when the model is trained on different subsets of the data

- Low consistency → explanations are not robust

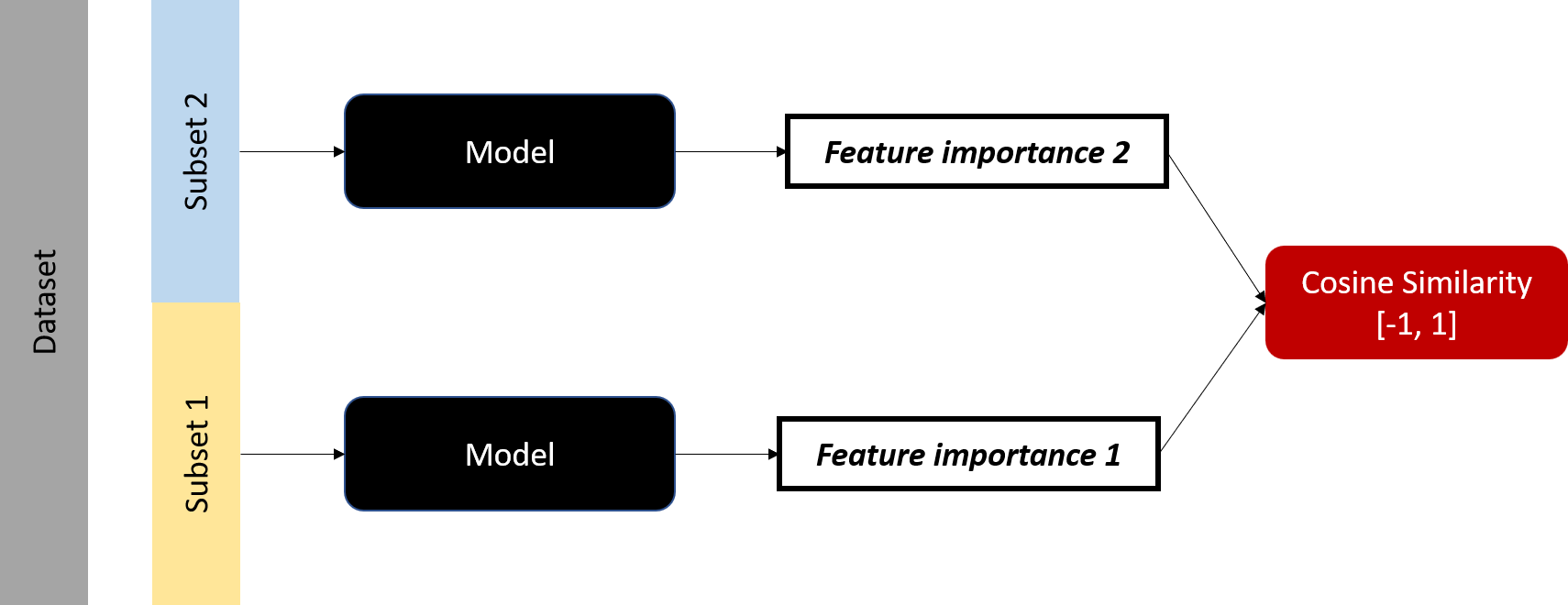

Cosine similarity to measure consistency
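Cosine similarity compares the direction of two vectors and ignores their scale: it is the dot product divided by the product of the two vector lengths. The sketch below is a dependency-free illustration of that idea; the helper `cosine_sim` and the importance values are hypothetical, chosen only to show the behaviour, and are not results from the admissions data.

```python
import math

def cosine_sim(a, b):
    """Cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical feature-importance vectors (illustrative values only)
importance_a = [0.30, 0.10, 0.05]
importance_b = [0.60, 0.20, 0.10]  # same proportions as a, twice the scale
importance_c = [0.05, 0.10, 0.30]  # importance ranking reversed

sim_same = cosine_sim(importance_a, importance_b)
sim_reversed = cosine_sim(importance_a, importance_c)
print(sim_same, sim_reversed)
```

Proportional vectors score 1 (up to floating-point rounding), while the reversed ranking scores noticeably lower. This is why a value close to 1 is read as two models agreeing on which features matter.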

Admissions dataset

| GRE Score | TOEFL Score | University Rating | SOP | LOR | CGPA | Chance of Admit |
|---|---|---|---|---|---|---|
| 337 | 118 | 4 | 4.5 | 4.5 | 9.65 | 0.92 |
| 324 | 107 | 4 | 4 | 4.5 | 8.87 | 0.76 |
| 316 | 104 | 3 | 3 | 3.5 | 8 | 0.72 |
| 322 | 110 | 3 | 3.5 | 2.5 | 8.67 | 0.8 |
| 314 | 103 | 2 | 2 | 3 | 8.21 | 0.45 |

- `X1`, `y1`: first part of the dataset

- `X2`, `y2`: second part of the dataset

- `model1`, `model2`: random forest regressors
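The slides do not show how the two halves and the two models are created. One possible setup is sketched below, assuming scikit-learn and NumPy are available. The data is synthetic (a stand-in for the admissions table), the target formula is invented for illustration, and the half-and-half row split and hyperparameters are assumptions rather than the course's actual choices.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

# Synthetic stand-in for the admissions data (assumption: the real data is loaded elsewhere)
rng = np.random.default_rng(0)
n = 200
feature_names = ["GRE Score", "TOEFL Score", "CGPA"]
X = np.column_stack([
    rng.integers(290, 341, size=n),   # GRE Score
    rng.integers(92, 121, size=n),    # TOEFL Score
    rng.uniform(6.8, 9.9, size=n),    # CGPA
])
# Hypothetical target: a noisy function of CGPA, clipped to [0, 1]
y = np.clip((X[:, 2] - 6.5) / 3.5 + rng.normal(0, 0.05, size=n), 0, 1)

# Split the rows into two disjoint halves
half = n // 2
X1, y1 = X[:half], y[:half]
X2, y2 = X[half:], y[half:]

# Train one random forest regressor per half
model1 = RandomForestRegressor(n_estimators=50, random_state=0).fit(X1, y1)
model2 = RandomForestRegressor(n_estimators=50, random_state=0).fit(X2, y2)
```

Keeping the two halves disjoint means any agreement between the models' explanations cannot come from shared training rows.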

Computing consistency

    import numpy as np
    import shap
    from sklearn.metrics.pairwise import cosine_similarity

    # One explainer per model
    explainer1 = shap.TreeExplainer(model1)
    explainer2 = shap.TreeExplainer(model2)

    # SHAP values for each model on its own subset
    shap_values1 = explainer1.shap_values(X1)
    shap_values2 = explainer2.shap_values(X2)

    # Global importance: mean absolute SHAP value per feature
    feature_importance1 = np.mean(np.abs(shap_values1), axis=0)
    feature_importance2 = np.mean(np.abs(shap_values2), axis=0)

    consistency = cosine_similarity([feature_importance1], [feature_importance2])
    print("Consistency between SHAP values:", consistency)

Consistency between SHAP values: [[0.99706516]]

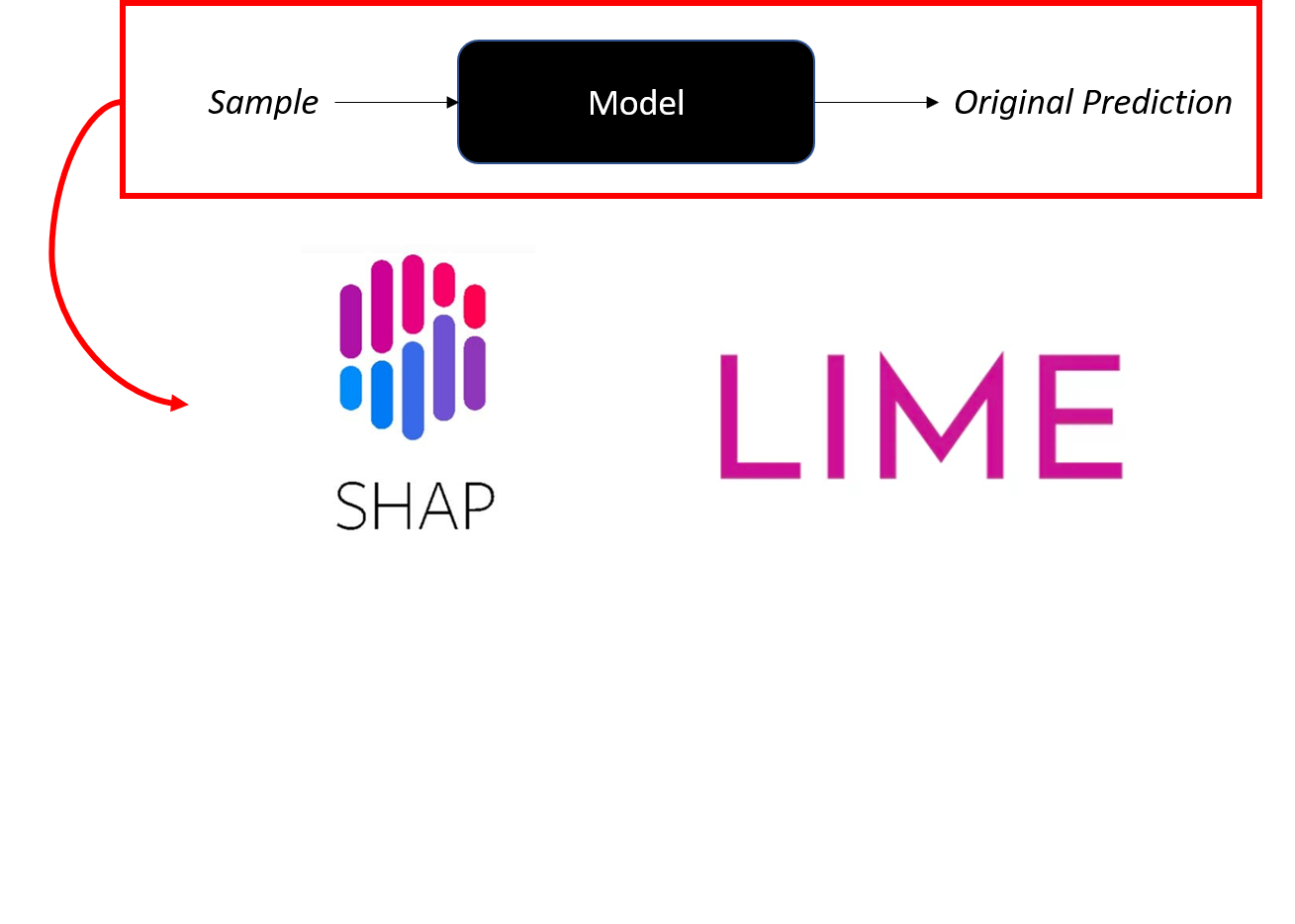

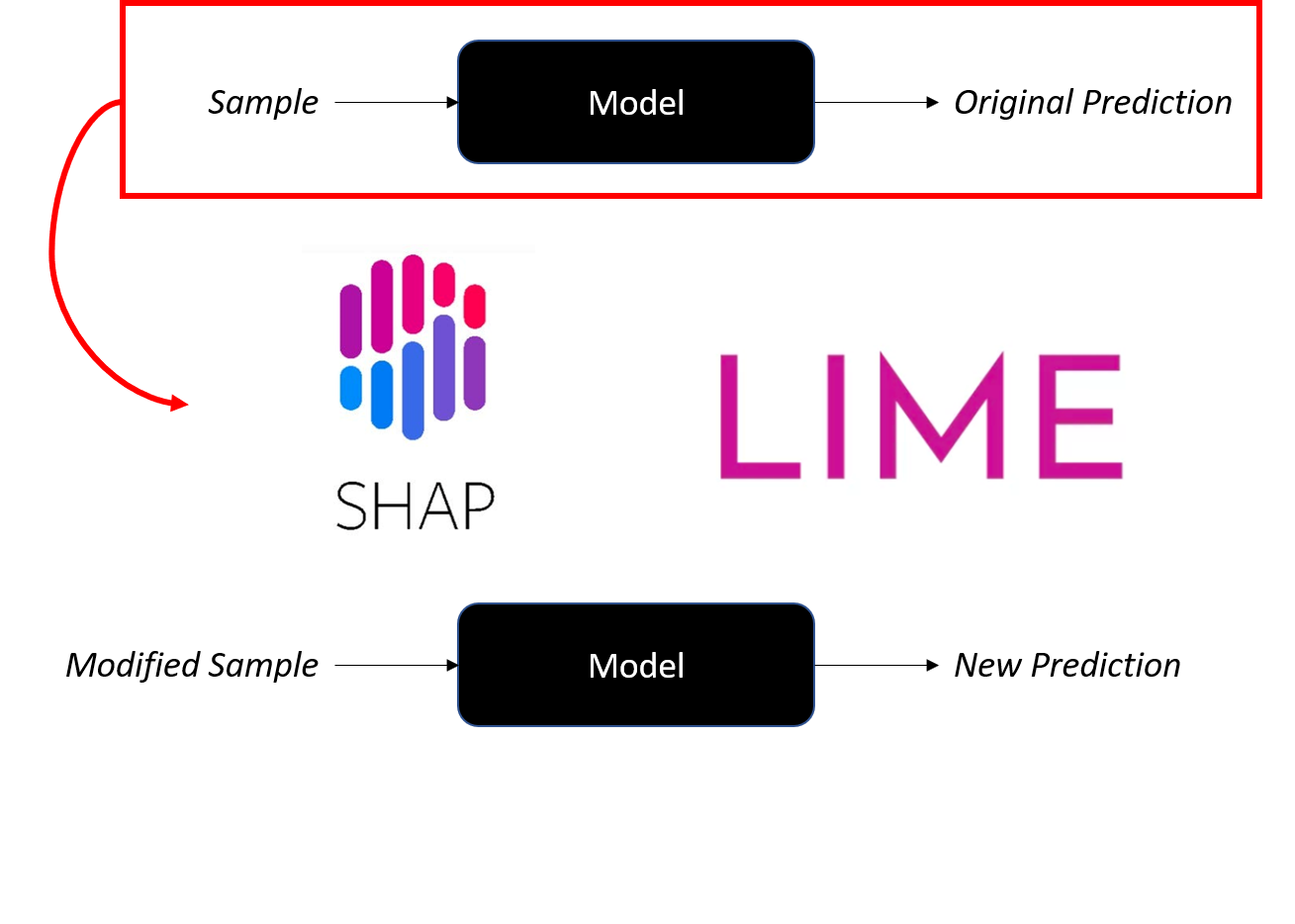

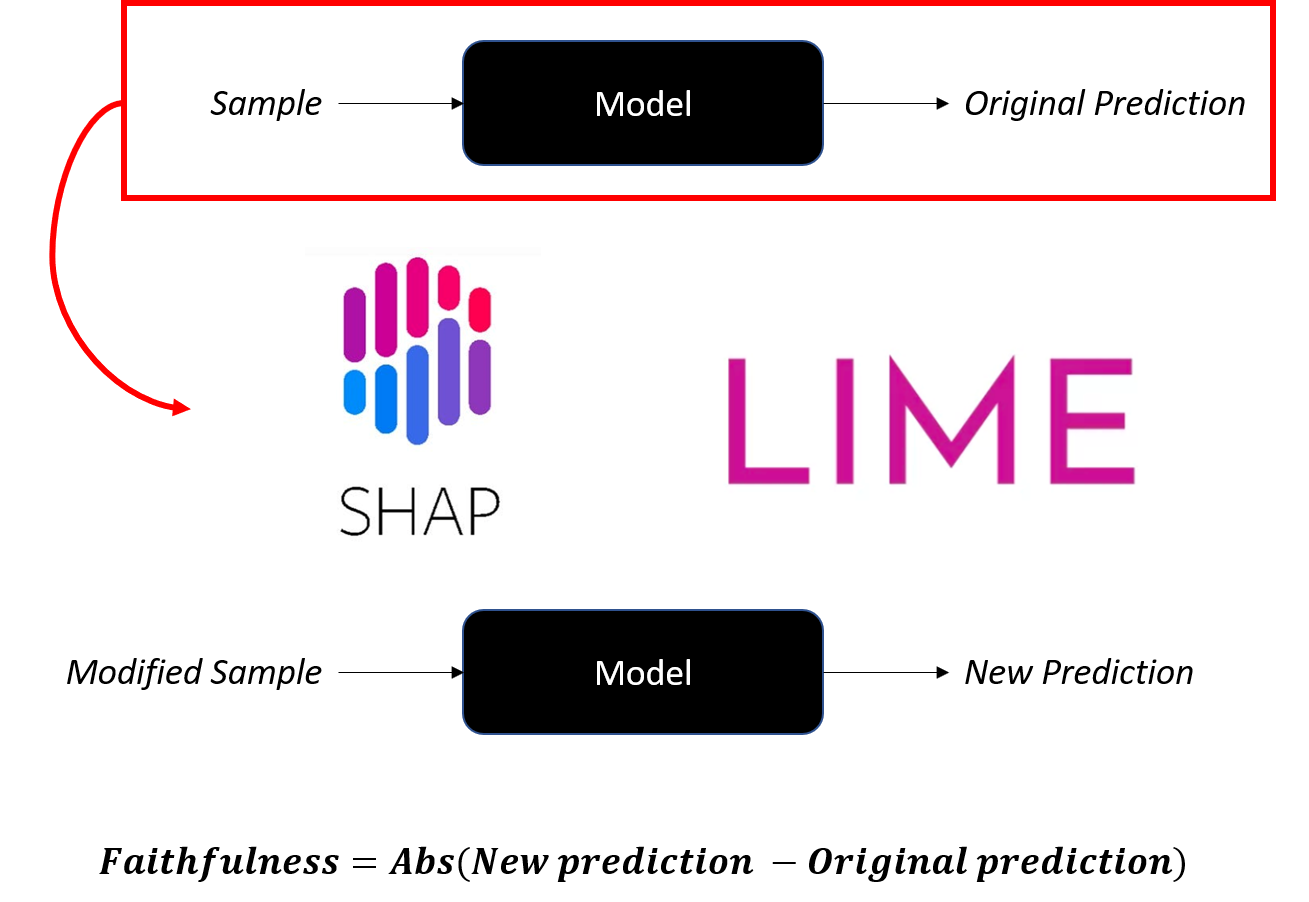

Faithfulness

- Evaluates whether the features an explanation marks as important actually influence the model's predictions

- Low faithfulness → explanations can create misplaced trust in the model's reasoning

- Useful in sensitive applications
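The idea behind a local faithfulness check can be sketched without any libraries: change one feature of a single instance and measure how far the prediction moves. Everything here is hypothetical and for illustration only: `toy_predict` is an invented model in which GRE Score dominates, and `local_faithfulness` is a helper name, not part of any library.

```python
import math

def toy_predict(instance):
    """Hypothetical classifier: admission probability driven mostly by GRE Score."""
    z = 0.08 * (instance["GRE Score"] - 315) + 0.001 * (instance["SOP"] - 3)
    return 1 / (1 + math.exp(-z))

def local_faithfulness(predict, instance, feature, new_value):
    """Absolute change in the prediction when one feature is set to new_value."""
    perturbed = dict(instance)  # copy so the original instance is left untouched
    perturbed[feature] = new_value
    return abs(predict(instance) - predict(perturbed))

applicant = {"GRE Score": 300, "SOP": 3.0}

# Perturb a feature the (hypothetical) explanation ranks high, then one it ranks low
score_important = local_faithfulness(toy_predict, applicant, "GRE Score", 330)
score_minor = local_faithfulness(toy_predict, applicant, "SOP", 5.0)
print(score_important, score_minor)
```

If an explanation ranks a feature as important, perturbing it should move the prediction far more than perturbing a low-ranked feature. A small gap between the two scores would suggest the explanation does not reflect what the model actually uses.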

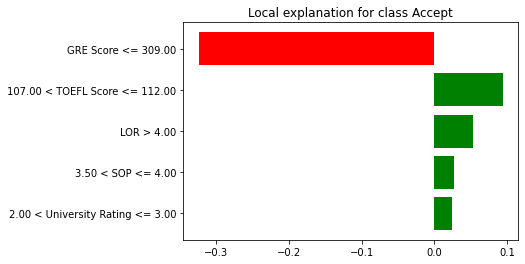

Computing faithfulness

    X_instance = X_test.iloc[[0]]
    original_prediction = model.predict_proba(X_instance)[0, 1]
    print(f"Original prediction: {original_prediction}")

Original prediction: 0.43


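    important_feature = 'GRE Score'

    # Perturb the important feature on a copy, so the original instance is unchanged
    X_perturbed = X_instance.copy()
    X_perturbed[important_feature] = 310
    new_prediction = model.predict_proba(X_perturbed)[0, 1]
    print(f"Prediction after perturbing {important_feature}: {new_prediction}")

    # Local faithfulness: absolute change in the predicted probability
    faithfulness_score = np.abs(original_prediction - new_prediction)
    print(f"Local Faithfulness Score: {faithfulness_score}")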

Prediction after perturbing GRE Score: 0.77

Local Faithfulness Score: 0.34

Let's practice!
