Visualizing SHAP explainability

Explainable AI in Python

Fouad Trad

Machine Learning Engineer

Dataset

| age | gender | bmi | children | smoker | charges |
|---|---|---|---|---|---|
| 19 | 0 | 27.900 | 0 | 1 | 16884.92 |
| 18 | 1 | 33.770 | 1 | 0 | 1725.55 |
| 28 | 1 | 33.000 | 3 | 0 | 4449.46 |
| 33 | 1 | 22.705 | 0 | 0 | 21984.47 |
| 32 | 1 | 28.880 | 0 | 0 | 3866.85 |

Model: a random forest regressor trained on the other five columns to predict charges

import shap

explainer = shap.TreeExplainer(model)

shap_values = explainer.shap_values(X)
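The call above returns one SHAP value per sample and feature. The sketch below computes the same kind of quantity by brute force, assuming a hypothetical three-feature toy_predict in place of the trained random forest and using rows of the table as background data. It is not how TreeExplainer works internally (that explainer uses a much faster algorithm specialised to trees), but it shows what a SHAP value measures: a feature's weighted average marginal contribution over all coalitions of the other features.

```python
from itertools import combinations
from math import factorial


def exact_shap(predict, x, background):
    """Exact Shapley values for one sample by enumerating every feature coalition.

    A coalition S is valued as the mean prediction over the background rows
    with the features in S fixed to the sample's values (an interventional
    expectation). The cost grows as 2**n_features, so this suits toy examples only.
    """
    n = len(x)

    def value(coalition):
        total = 0.0
        for row in background:
            # Features in the coalition come from x, the rest from the background row.
            mixed = [x[j] if j in coalition else row[j] for j in range(n)]
            total += predict(mixed)
        return total / len(background)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    base_value = value(set())  # expected prediction over the background rows
    return base_value, phi


# Hypothetical stand-in for the random forest: (age, bmi, smoker) -> charges.
def toy_predict(row):
    age, bmi, smoker = row
    return 250 * age + 300 * bmi + 20000 * smoker + 500 * bmi * smoker


# age, bmi and smoker columns from the dataset table, used as background data.
background = [
    [19, 27.900, 1],
    [18, 33.770, 0],
    [28, 33.000, 0],
    [33, 22.705, 0],
    [32, 28.880, 0],
]
x = background[0]
base, phi = exact_shap(toy_predict, x, background)
print(base + sum(phi), toy_predict(x))  # equal up to floating-point rounding
```

The printed pair illustrates the additivity property: the base value plus the sum of a sample's SHAP values reproduces that sample's prediction. In shap, the corresponding base value of a TreeExplainer is available as explainer.expected_value.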

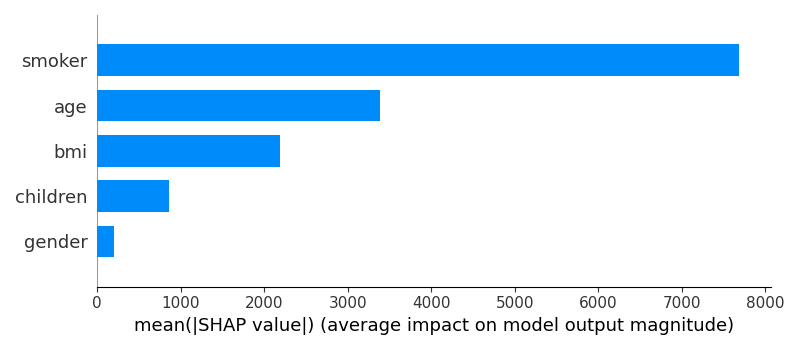

Feature importance plot

- Shows each feature's contribution to the model output, measured as the mean absolute SHAP value across samples

shap.summary_plot(shap_values, X, plot_type="bar")
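The bar plot ranks features by their mean absolute SHAP value. A minimal sketch of that aggregation, applied to a small made-up SHAP matrix (not output from the course model) with hypothetical feature names:

```python
def mean_abs_shap(shap_rows, feature_names):
    """Global importance: mean |SHAP value| per feature, sorted largest first."""
    n_samples = len(shap_rows)
    importance = {
        name: sum(abs(row[j]) for row in shap_rows) / n_samples
        for j, name in enumerate(feature_names)
    }
    return sorted(importance.items(), key=lambda item: item[1], reverse=True)


# Made-up SHAP values: one row per sample, one column per feature.
shap_rows = [
    [3.0, -1.0, 0.5],
    [-2.0, 1.0, 0.0],
    [1.0, 0.0, -0.5],
]
names = ["feature_a", "feature_b", "feature_c"]
for name, score in mean_abs_shap(shap_rows, names):
    print(f"{name}: {score:.3f}")
# feature_a: 2.000
# feature_b: 0.667
# feature_c: 0.333
```

Taking the absolute value before averaging keeps positive and negative contributions from cancelling, so a feature that pushes some predictions up and others down still ranks as important.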

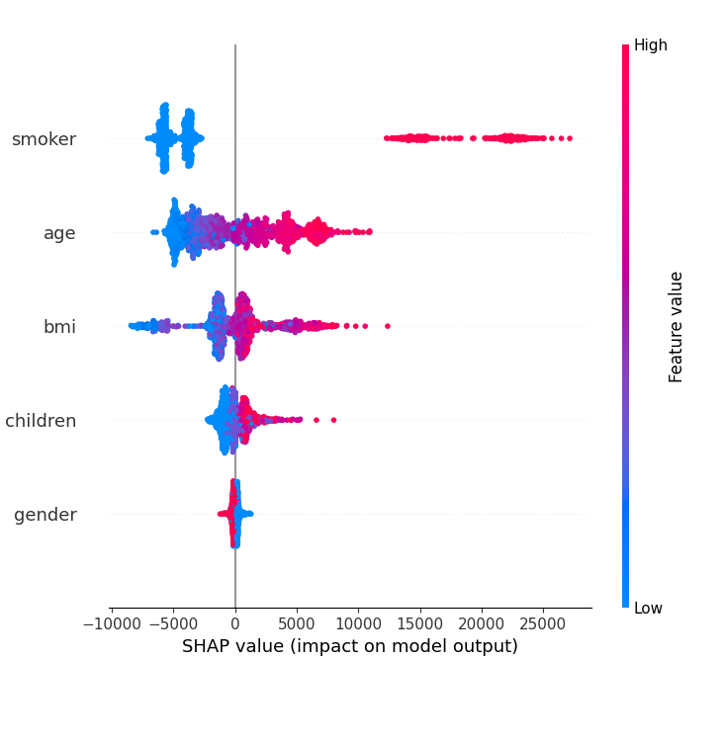

Beeswarm plot

- Shows the distribution of SHAP values for each feature

- Highlights the direction and magnitude of each feature's effect on the prediction

- Red color → high feature value

- Blue color → low feature value

- SHAP value > 0 → increases the predicted outcome

- SHAP value < 0 → decreases the predicted outcome
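Reading a beeswarm amounts to checking whether high (red) or low (blue) values of a feature sit on the positive or negative side of zero. The sketch below condenses that into two numbers per feature. The median split is a simplification chosen here for illustration (shap itself colours points on a continuous scale), and the SHAP values in the example are made up.

```python
from statistics import median


def _mean(values):
    return sum(values) / len(values) if values else float("nan")


def direction_summary(feature_values, shap_values):
    """Mean SHAP value for high versus low values of a single feature.

    'high' means above the feature's median (toward the red end of the colour
    scale); 'low' means at or below it (toward the blue end).
    """
    cut = median(feature_values)
    pairs = list(zip(feature_values, shap_values))
    high = [s for v, s in pairs if v > cut]
    low = [s for v, s in pairs if v <= cut]
    return {"high": _mean(high), "low": _mean(low)}


# A binary feature (like smoker in the table) with made-up SHAP values.
summary = direction_summary([1, 0, 0, 0, 0], [5.0, -1.0, -1.5, -0.5, -1.0])
print(summary)  # {'high': 5.0, 'low': -1.0}
```

A positive "high" mean paired with a negative "low" mean reads the same way as red points on the right and blue points on the left: higher values of the feature increase the predicted outcome.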

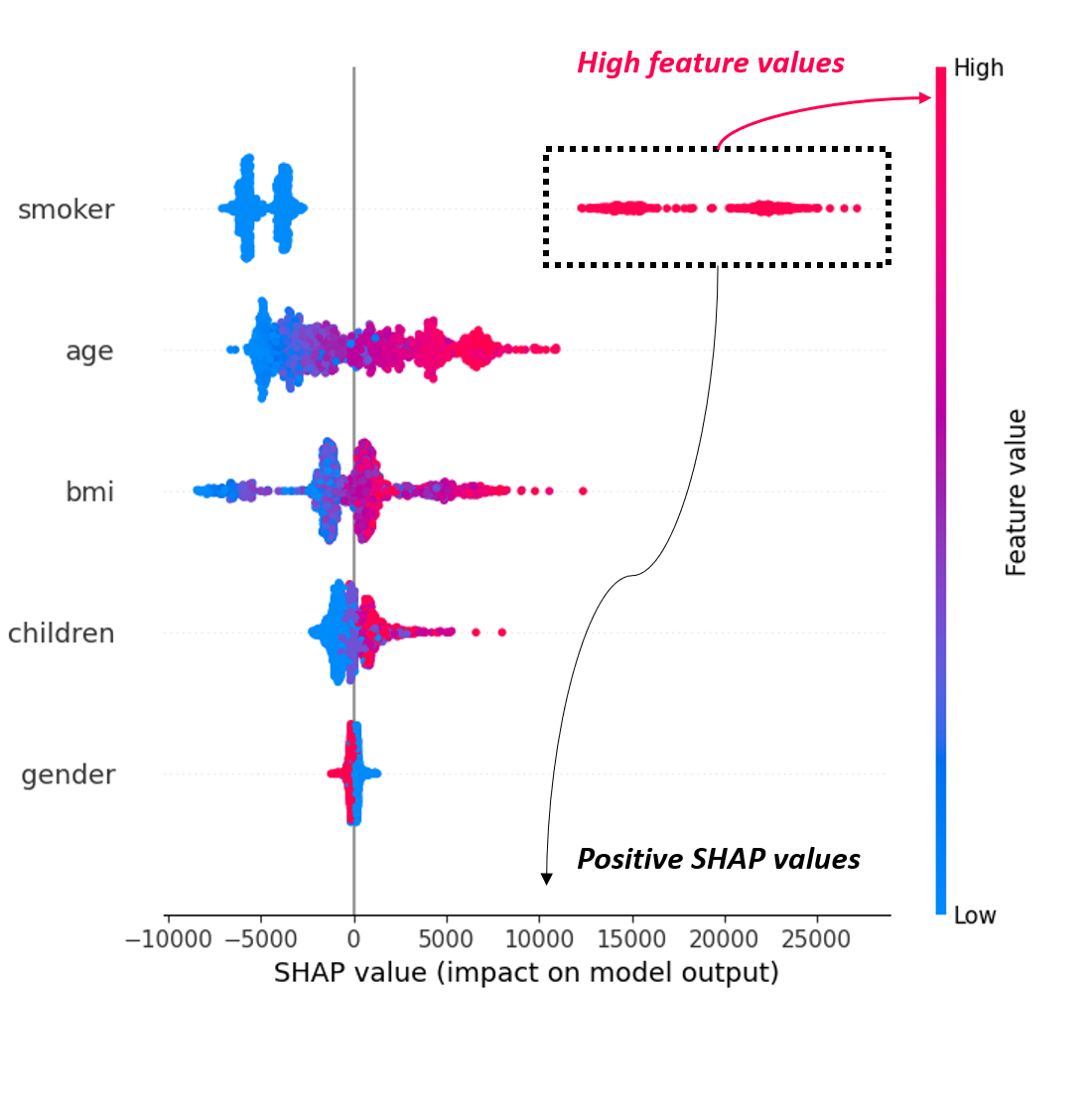

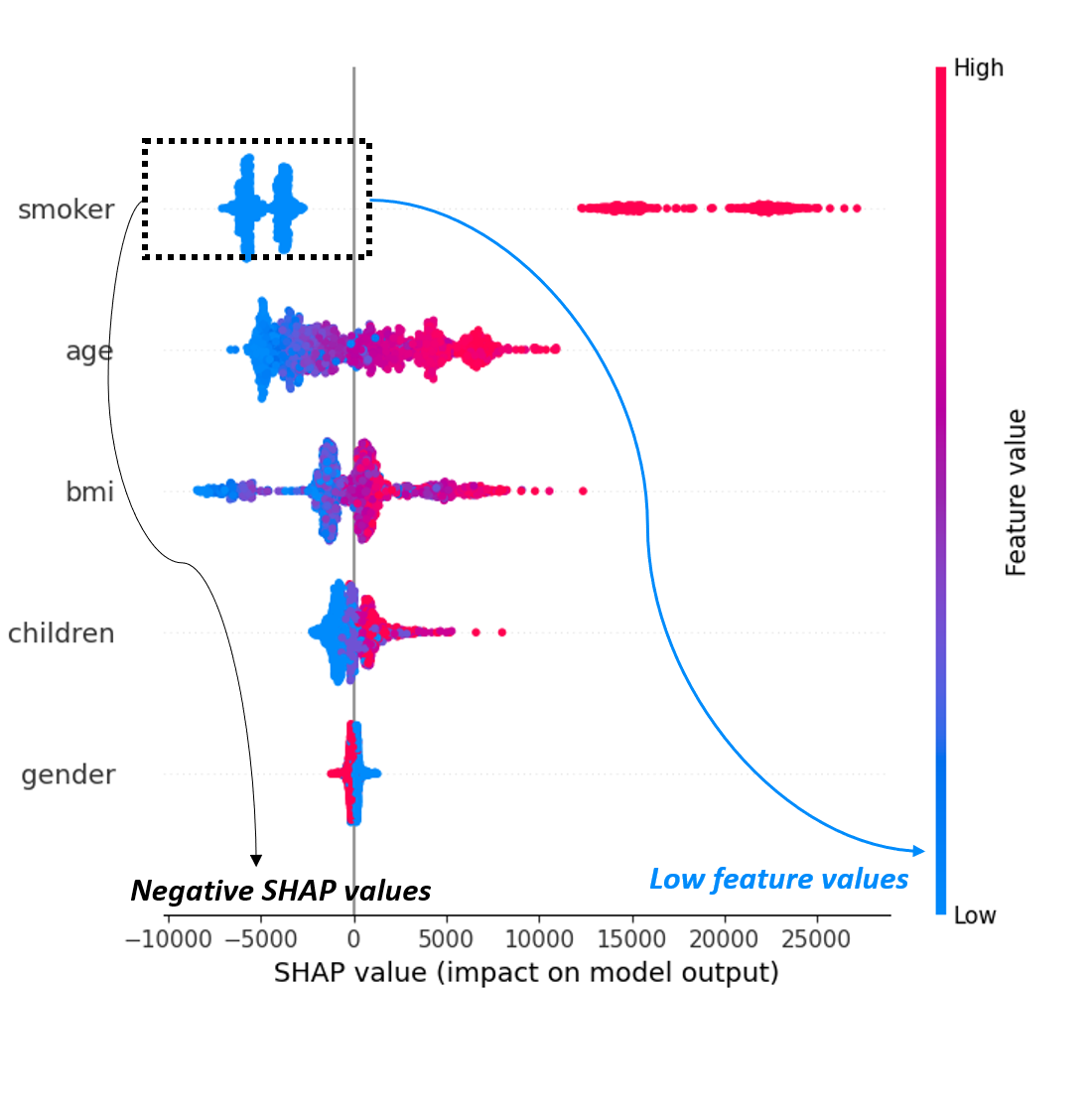

shap.summary_plot(shap_values, X, plot_type="dot")

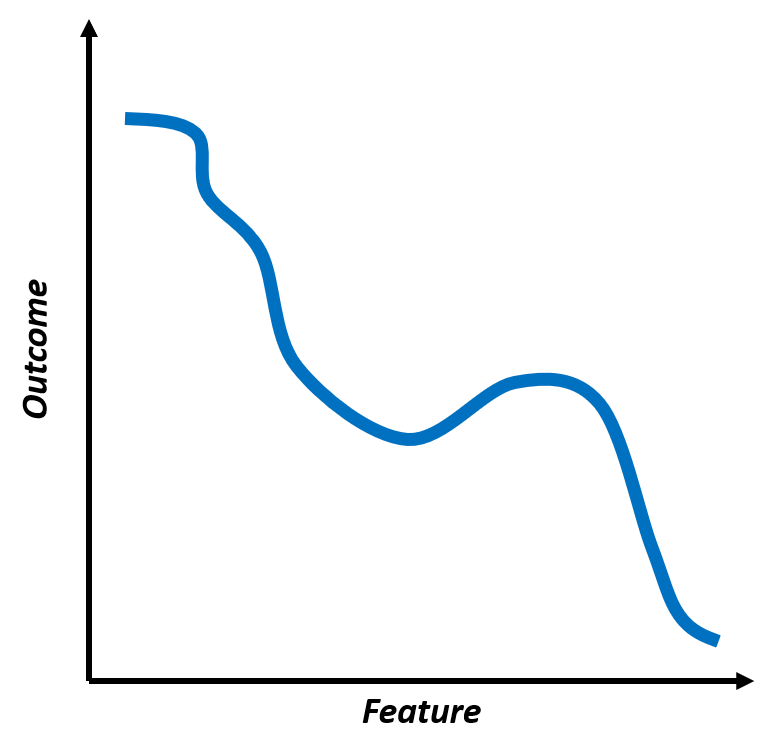

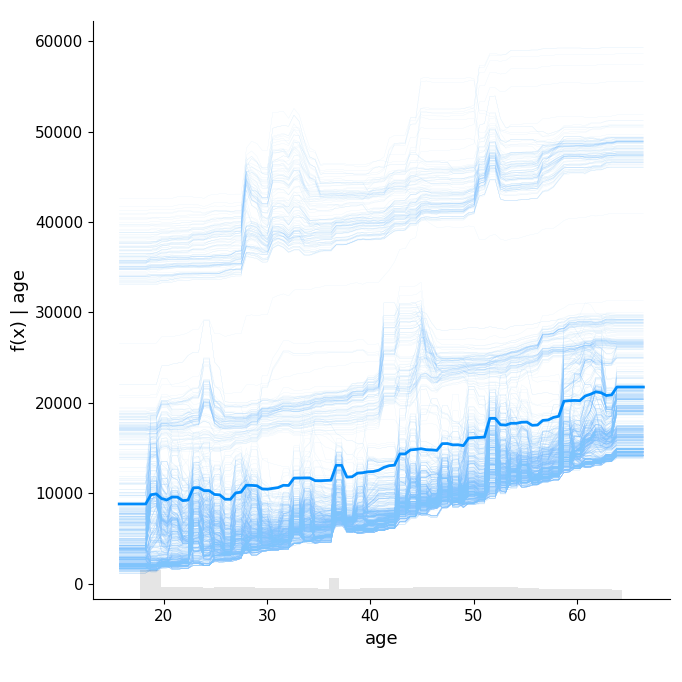

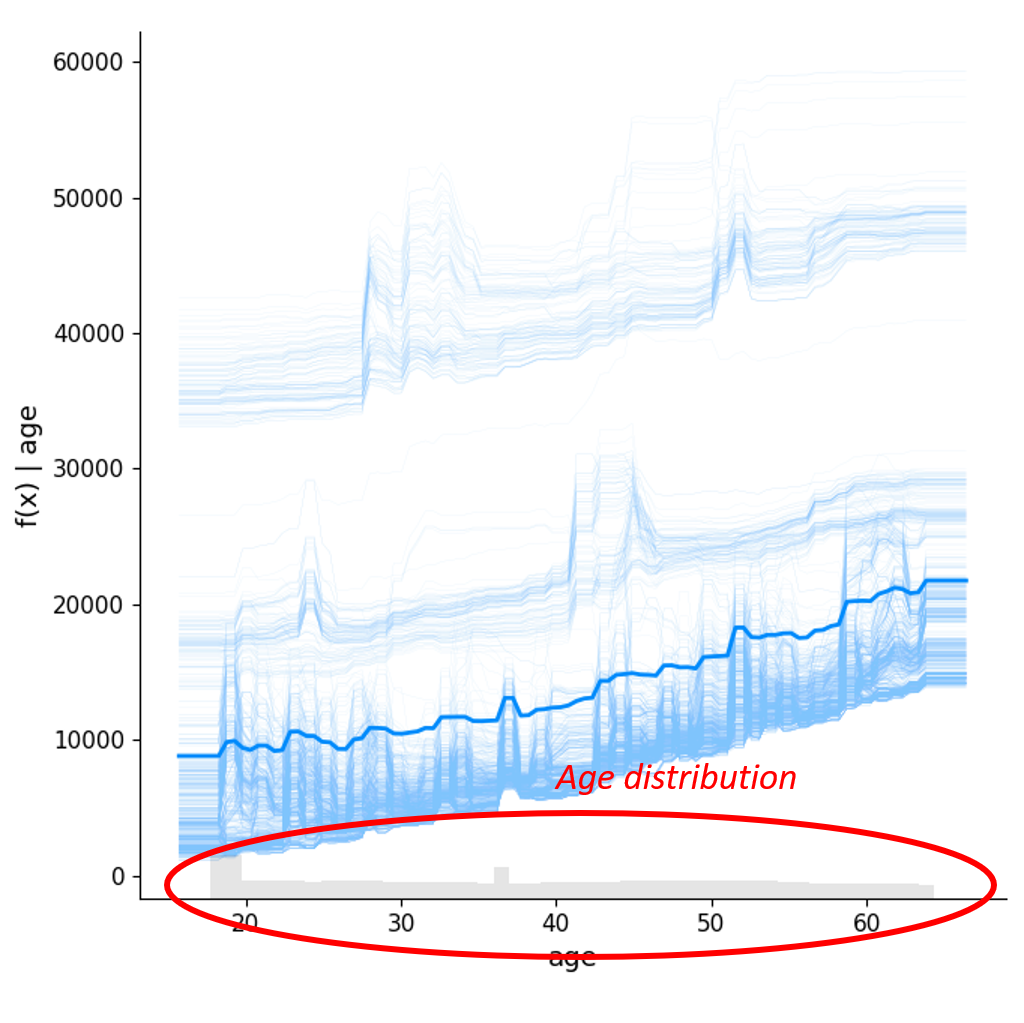

Partial dependence plot

- Shows the relationship between a feature and the predicted outcome

- Shows the feature's impact across its full range of values

- Helps verify whether the relationship matches expectations

Partial dependence plot

- For each sample:
  - Vary the value of the selected feature
  - Hold the other features constant
  - Predict the outcome
- Average the results across all samples

shap.partial_dependence_plot("age", model.predict, X)
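The partial dependence procedure described above can be sketched in plain Python. This assumes a predict function that scores one row at a time and a hypothetical linear toy_predict standing in for the random forest; shap.partial_dependence_plot instead receives model.predict, which scores a whole array of rows at once.

```python
def partial_dependence(predict, rows, feature_index, grid):
    """Average prediction when one feature is set to each value in grid.

    For each grid value, every sample gets that value for the chosen feature,
    its other features stay unchanged, and the predictions are averaged.
    """
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)  # copy so the original sample is untouched
            modified[feature_index] = value
            total += predict(modified)
        curve.append(total / len(rows))
    return curve


# Hypothetical stand-in model over (age, gender, bmi, children, smoker).
def toy_predict(row):
    age, _gender, bmi, children, smoker = row
    return 250 * age + 300 * bmi + 400 * children + 20000 * smoker


# Feature columns of the dataset table (charges is the target, so it is left out).
rows = [
    [19, 0, 27.900, 0, 1],
    [18, 1, 33.770, 1, 0],
    [28, 1, 33.000, 3, 0],
    [33, 1, 22.705, 0, 0],
    [32, 1, 28.880, 0, 0],
]
ages = [20, 40, 60]
curve = partial_dependence(toy_predict, rows, feature_index=0, grid=ages)
for age, avg in zip(ages, curve):
    print(age, round(avg, 2))
```

Because toy_predict is linear in age, this curve rises by 250 per year of age; with a real random forest the curve can bend, flatten, or step, which is the kind of shape the plot lets you check against expectations.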

Let's practice!
