Recurrent Neural Networks

Intermediate Deep Learning with PyTorch

Michal Oleszak

Machine Learning Engineer

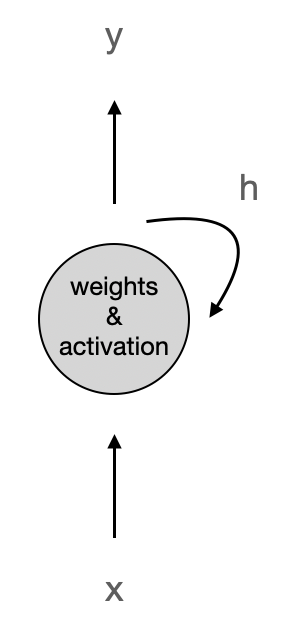

Recurrent neuron

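- Feed-forward networks: information flows in one direction only, from input to output

- RNNs: have connections pointing back, so the hidden state from one time step is fed back in at the next

- Recurrent neuron:
  - Input x
  - Output y
  - Hidden state h

- In PyTorch: `nn.RNN()`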
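For reference, PyTorch's documentation for `nn.RNN` (with its default tanh nonlinearity) gives the update for one time step below. In `nn.RNN` the output at each step is the new hidden state itself; mapping it to a task-specific output y is left to a separate layer, such as the linear layer used later in this section.

$$
h_t = \tanh\left(x_t W_{ih}^{\top} + b_{ih} + h_{t-1} W_{hh}^{\top} + b_{hh}\right), \qquad \text{RNN output at step } t = h_t
$$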

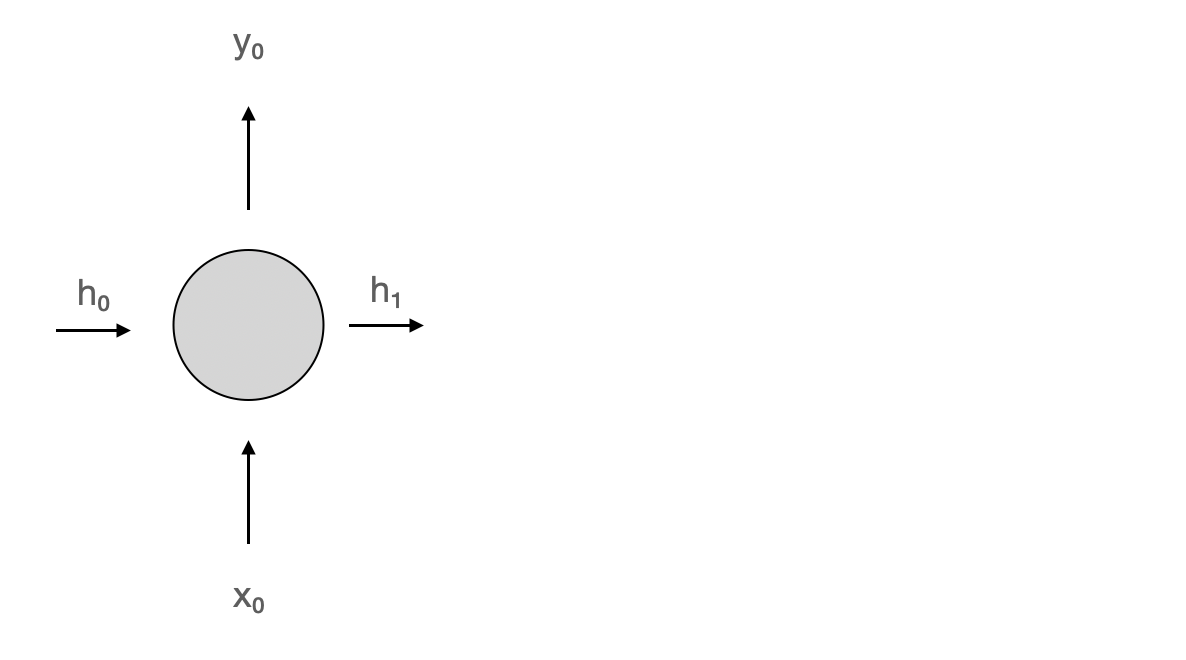

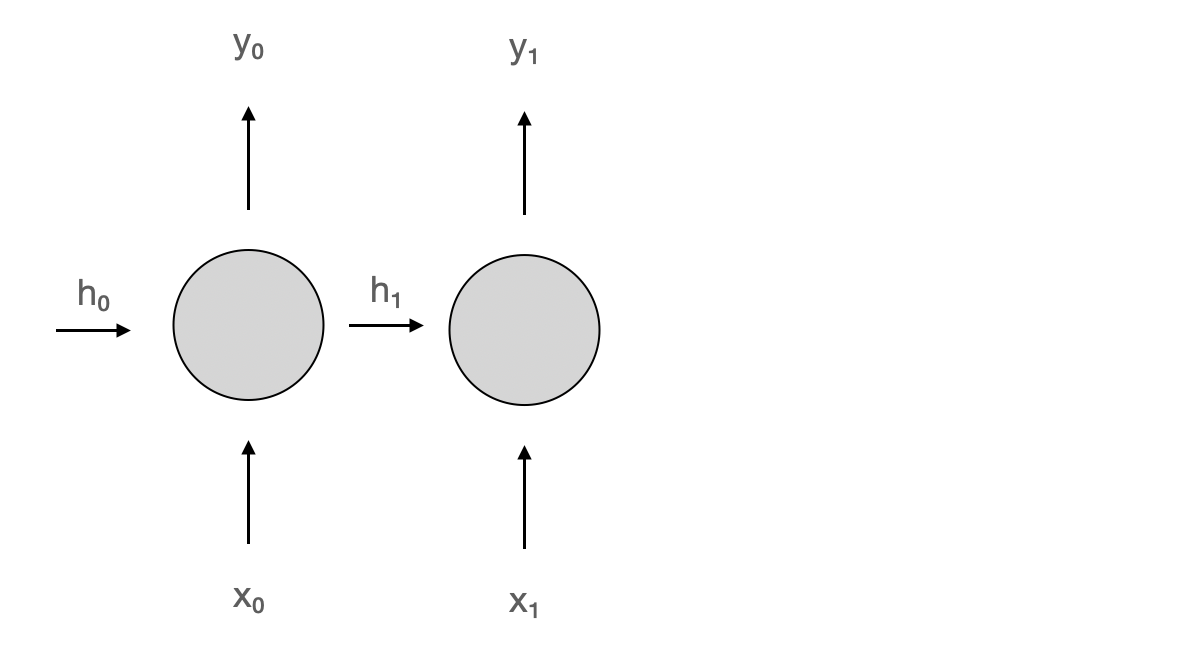

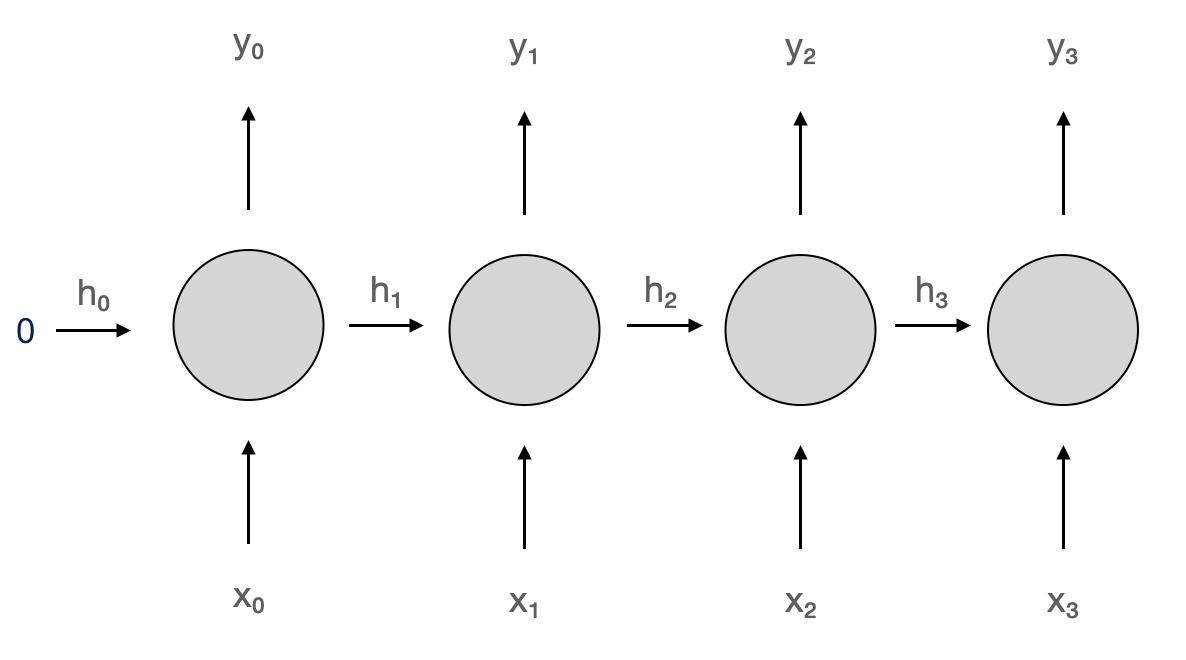

Unrolling recurrent neuron through time

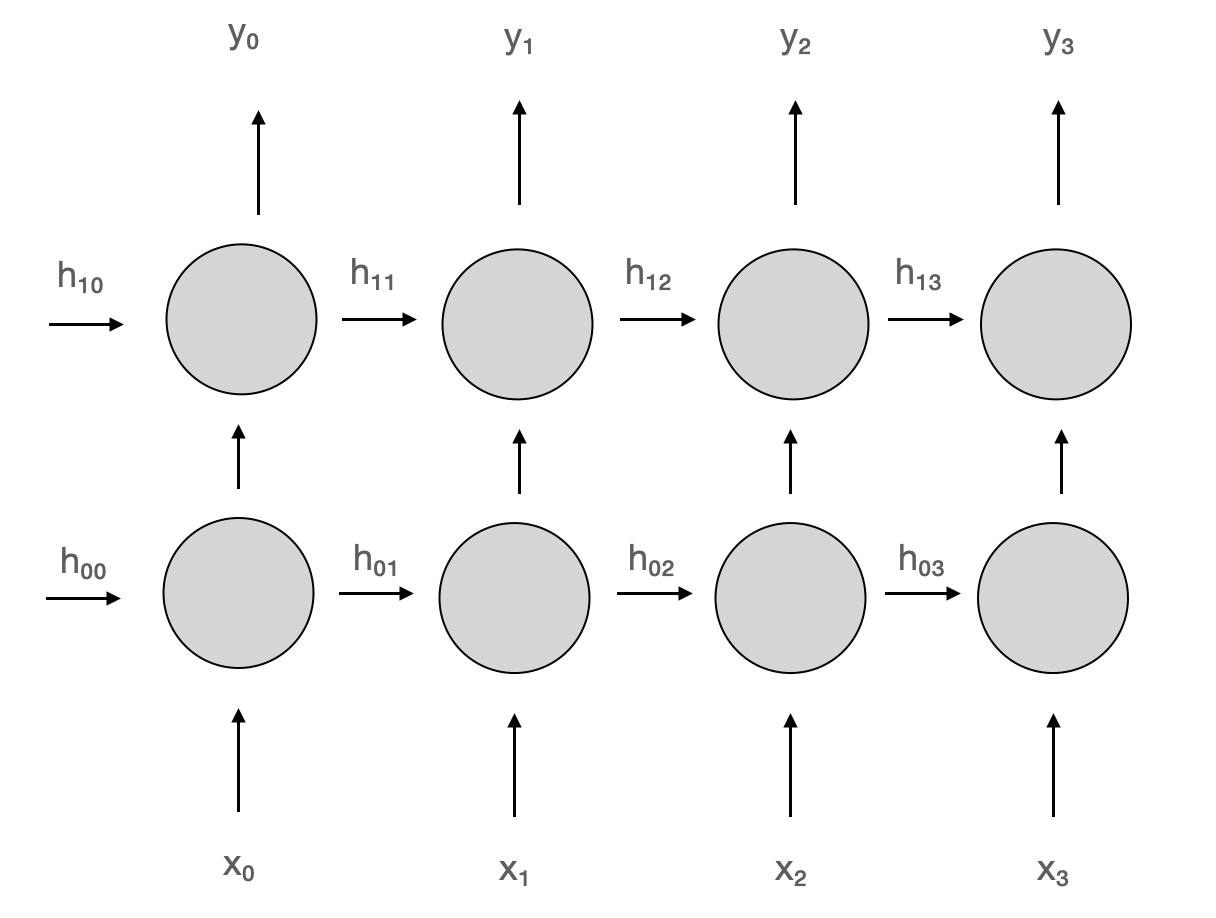
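Unrolling means applying the same recurrent neuron once per time step, carrying the hidden state forward. The sketch below unrolls a single-layer `nn.RNN` by hand with a Python loop over time steps, reusing the module's own weights (`weight_ih_l0`, `weight_hh_l0`, `bias_ih_l0`, `bias_hh_l0`), and checks that it matches the built-in forward pass. The sizes (4 hidden units, 5 time steps) are arbitrary choices for illustration.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Single-layer RNN; batch_first=True means input is (batch, seq_len, features)
rnn = nn.RNN(input_size=1, hidden_size=4, batch_first=True)
x = torch.randn(2, 5, 1)  # 2 sequences, 5 time steps, 1 feature each

# Built-in forward pass over the whole sequence (h0 defaults to zeros)
out, h_n = rnn(x)

# Manual unrolling: the SAME weights are reused at every time step
h = torch.zeros(2, 4)  # initial hidden state h_0: (batch, hidden_size)
outputs = []
for t in range(x.size(1)):
    x_t = x[:, t, :]  # input at time step t: (batch, features)
    h = torch.tanh(
        x_t @ rnn.weight_ih_l0.T + rnn.bias_ih_l0
        + h @ rnn.weight_hh_l0.T + rnn.bias_hh_l0
    )
    outputs.append(h)
manual_out = torch.stack(outputs, dim=1)  # (batch, seq_len, hidden_size)

print(torch.allclose(out, manual_out, atol=1e-6))  # True
```

The loop makes explicit what the unrolled diagram shows: one copy of the neuron per time step, all sharing parameters.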

Deep RNNs

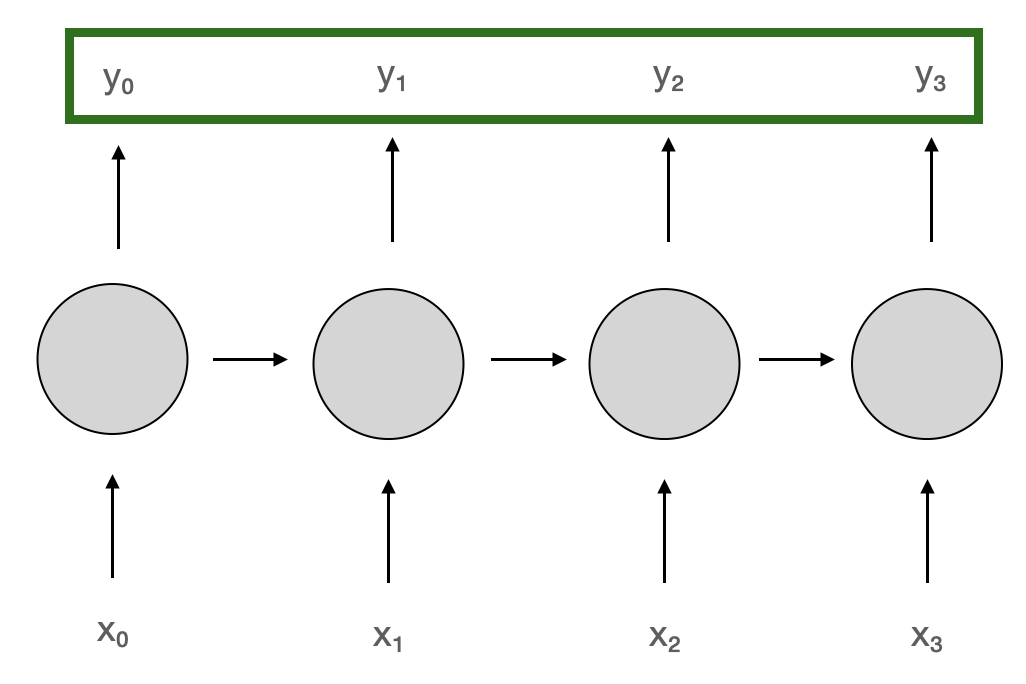
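A deep RNN stacks recurrent layers so that each layer reads the full output sequence of the layer below. As a sketch, the code below checks that `nn.RNN(..., num_layers=2)` behaves like two hand-stacked single-layer RNNs once the weights are copied across (`_l0` is layer 1, `_l1` is layer 2). The hidden size of 8 is an arbitrary assumption.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

# Two-layer RNN built as a single module
deep = nn.RNN(input_size=1, hidden_size=8, num_layers=2, batch_first=True)

# The same architecture assembled by hand from two single-layer RNNs
layer1 = nn.RNN(input_size=1, hidden_size=8, batch_first=True)
layer2 = nn.RNN(input_size=8, hidden_size=8, batch_first=True)

# Copy the stacked module's per-layer parameters into the hand-built layers
with torch.no_grad():
    for name in ["weight_ih", "weight_hh", "bias_ih", "bias_hh"]:
        getattr(layer1, f"{name}_l0").copy_(getattr(deep, f"{name}_l0"))
        getattr(layer2, f"{name}_l0").copy_(getattr(deep, f"{name}_l1"))

x = torch.randn(3, 6, 1)
out_deep, _ = deep(x)

# Layer 2 consumes the entire output sequence of layer 1
out1, _ = layer1(x)
out2, _ = layer2(out1)

print(torch.allclose(out_deep, out2, atol=1e-6))  # True
```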

Sequence-to-sequence architecture

- Pass sequence as input, use the entire output sequence

- Example: Real-time speech recognition

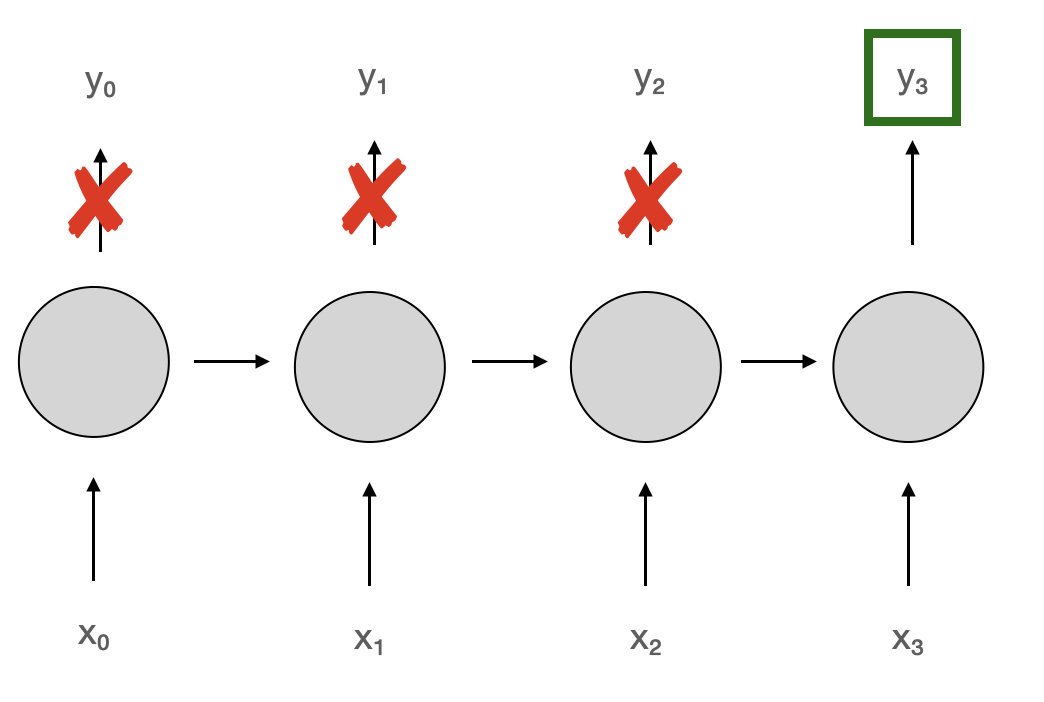
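In a sequence-to-sequence setup, every time step's output is used. A minimal sketch, assuming one prediction per step: `nn.Linear` acts on the last dimension, so applying it to the full RNN output yields one prediction per time step. The class name `Seq2SeqRNN` and its sizes are hypothetical.

```python
import torch
import torch.nn as nn

class Seq2SeqRNN(nn.Module):  # hypothetical example model
    def __init__(self, input_size=1, hidden_size=16, output_size=1):
        super().__init__()
        self.rnn = nn.RNN(input_size, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, output_size)

    def forward(self, x):
        out, _ = self.rnn(x)  # (batch, seq_len, hidden_size): one vector per step
        return self.fc(out)   # applied per step -> (batch, seq_len, output_size)

model = Seq2SeqRNN()
y = model(torch.randn(4, 10, 1))
print(y.shape)  # torch.Size([4, 10, 1])
```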

Sequence-to-vector architecture

- Pass sequence as input, use only the last output

- Example: Text topic classification

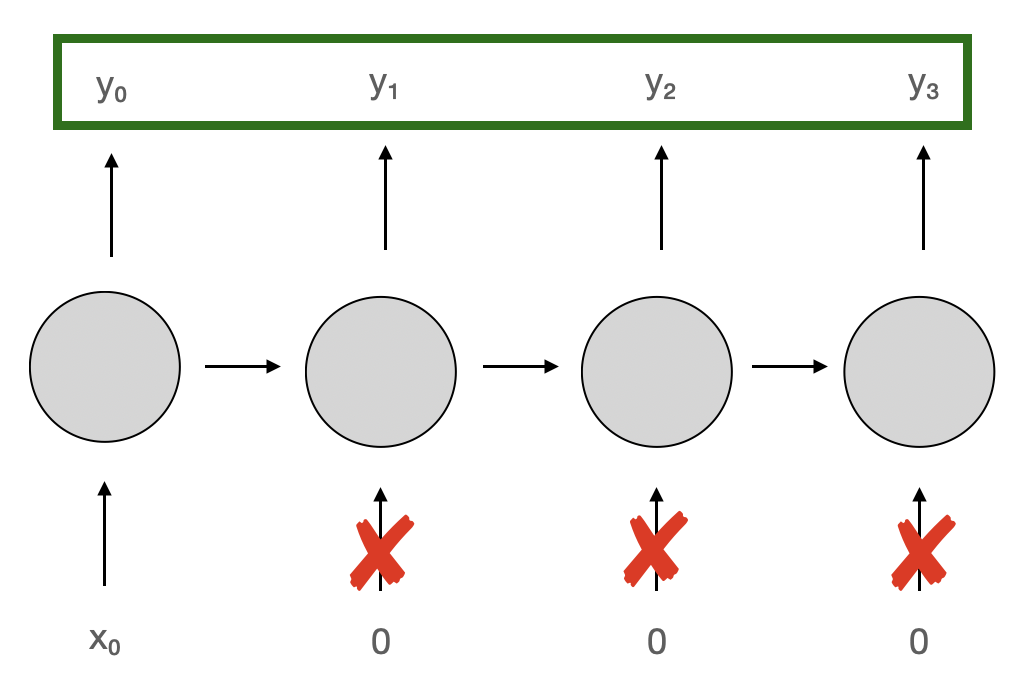
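For sequence-to-vector, only the last output is kept. The sketch below shows that, for a unidirectional RNN, `out[:, -1, :]` holds the same values as the top layer's final hidden state `h_n[-1]`. The 3 output classes and random input features are stand-ins; a real text classifier would typically embed tokens first.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

rnn = nn.RNN(input_size=1, hidden_size=16, num_layers=2, batch_first=True)
fc = nn.Linear(16, 3)  # 3 classes: an arbitrary choice for illustration

x = torch.randn(4, 10, 1)
out, h_n = rnn(x)  # out: (4, 10, 16); h_n: (num_layers=2, 4, 16)

last = out[:, -1, :]  # keep only the last time step -> (4, 16)
# For a unidirectional RNN this equals the top layer's final hidden state
print(torch.allclose(last, h_n[-1]))  # True

logits = fc(last)  # one vector of class scores per sequence
print(logits.shape)  # torch.Size([4, 3])
```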

Vector-to-sequence architecture

- Pass single input, use the entire output sequence

- Example: Text generation

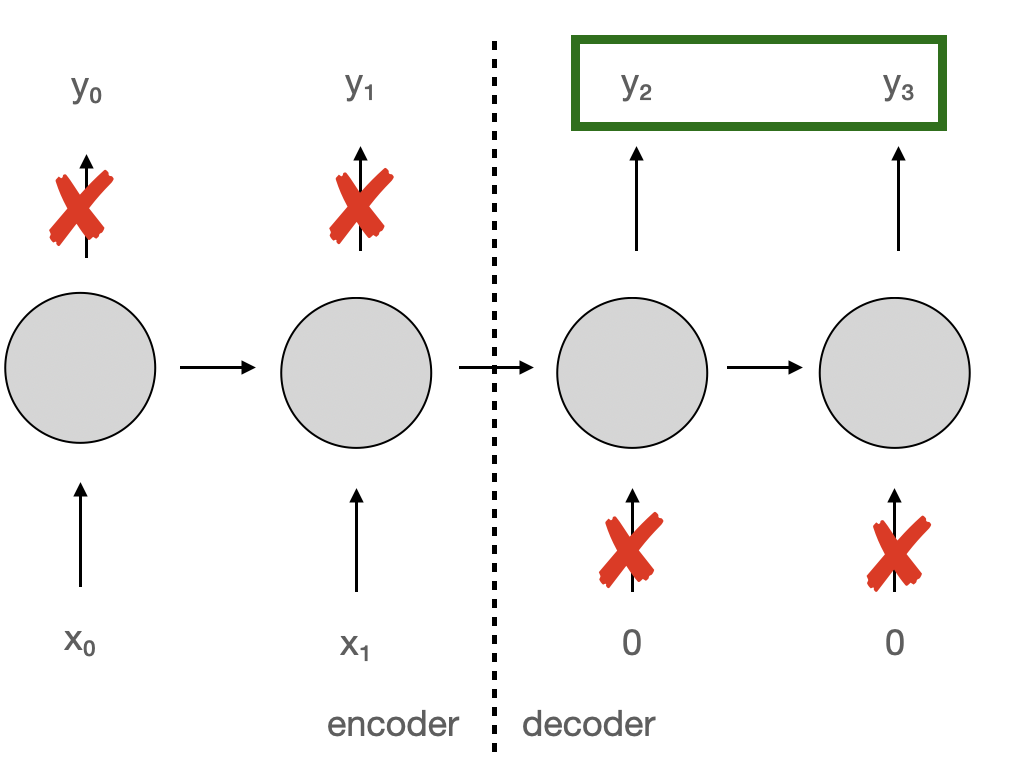
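For vector-to-sequence, one input starts the process and the network keeps producing outputs. A sketch using `nn.RNNCell`, which performs a single recurrent step, is shown below. Feeding each output back in as the next input is an assumption made here (a common choice for generation, not the only one), and the 5 generation steps are arbitrary.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

hidden_size, steps = 16, 5
cell = nn.RNNCell(input_size=1, hidden_size=hidden_size)  # one time step at a time
fc = nn.Linear(hidden_size, 1)

x = torch.randn(2, 1)            # a single input vector per example: (batch, features)
h = torch.zeros(2, hidden_size)  # initial hidden state
outputs = []
for _ in range(steps):
    h = cell(x, h)  # one recurrent step
    y = fc(h)       # output at this step: (batch, 1)
    outputs.append(y)
    x = y           # feed the output back in as the next input
seq = torch.stack(outputs, dim=1)  # (batch, steps, 1)
print(seq.shape)  # torch.Size([2, 5, 1])
```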

Encoder-decoder architecture

- Pass entire input sequence, only then start using output sequence

- Example: Machine translation
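An encoder-decoder can be sketched with two RNNs: the encoder reads the whole input sequence, and its final hidden state initializes the decoder, which only then produces outputs. This is a hypothetical minimal sketch, not a full translation model; the class name `EncoderDecoder`, the sizes, and the use of given decoder inputs (for example, shifted targets during training) are assumptions. Note that input and output lengths can differ.

```python
import torch
import torch.nn as nn

class EncoderDecoder(nn.Module):  # hypothetical sketch
    def __init__(self, in_size=1, out_size=1, hidden_size=16):
        super().__init__()
        self.encoder = nn.RNN(in_size, hidden_size, batch_first=True)
        self.decoder = nn.RNN(out_size, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, out_size)

    def forward(self, src, tgt_in):
        # 1) Read the entire input sequence; keep only the final hidden state
        _, h = self.encoder(src)          # h: (1, batch, hidden_size)
        # 2) Only now start producing outputs, starting from the encoder's summary
        out, _ = self.decoder(tgt_in, h)  # (batch, tgt_len, hidden_size)
        return self.fc(out)               # (batch, tgt_len, out_size)

model = EncoderDecoder()
src = torch.randn(2, 7, 1)     # input sequences of length 7
tgt_in = torch.randn(2, 4, 1)  # decoder inputs of length 4
pred = model(src, tgt_in)
print(pred.shape)  # torch.Size([2, 4, 1])
```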

RNN in PyTorch

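```python
import torch
import torch.nn as nn

class Net(nn.Module):
    def __init__(self):
        super().__init__()
        # Two stacked recurrent layers; batch_first=True -> input is (batch, seq_len, features)
        self.rnn = nn.RNN(
            input_size=1,
            hidden_size=32,
            num_layers=2,
            batch_first=True,
        )
        # Maps the last hidden vector (size 32) to a single prediction
        self.fc = nn.Linear(32, 1)

    def forward(self, x):
        # First hidden state: (num_layers, batch_size, hidden_size), all zeros
        h0 = torch.zeros(2, x.size(0), 32, device=x.device)
        out, _ = self.rnn(x, h0)
        # Keep only the output at the last time step
        out = self.fc(out[:, -1, :])
        return out
```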

- Define model class with `__init__` method

- Define recurrent layer, `self.rnn`

- Define linear layer, `self.fc`

- In `forward()`, initialize first hidden state `h0` to zeros, with shape (num_layers, batch_size, hidden_size)

- Pass input and first hidden state through RNN layer

- Select the RNN's output at the last time step, `out[:, -1, :]`, and pass it through linear layer
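To see how tensor shapes flow through the forward pass described above, the sketch below uses an RNN and linear layer configured like the model in this section (input size 1, hidden size 32, two layers). The batch size of 8 and sequence length of 12 are arbitrary assumptions. Note that `h0` and `h_n` are not batch-first even when `batch_first=True`.

```python
import torch
import torch.nn as nn

batch_size, seq_len = 8, 12
rnn = nn.RNN(input_size=1, hidden_size=32, num_layers=2, batch_first=True)
fc = nn.Linear(32, 1)

x = torch.randn(batch_size, seq_len, 1)  # (batch, seq_len, input_size)
h0 = torch.zeros(2, batch_size, 32)      # (num_layers, batch, hidden_size)
out, h_n = rnn(x, h0)

last = out[:, -1, :]
pred = fc(last)
print(out.shape)   # torch.Size([8, 12, 32]): top-layer output at every step
print(h_n.shape)   # torch.Size([2, 8, 32]): final hidden state of each layer
print(last.shape)  # torch.Size([8, 32]): last time step only
print(pred.shape)  # torch.Size([8, 1]): one prediction per sequence
```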

Let's practice!
