Convolutional Neural Networks

Intermediate Deep Learning with PyTorch

Michal Oleszak

Machine Learning Engineer

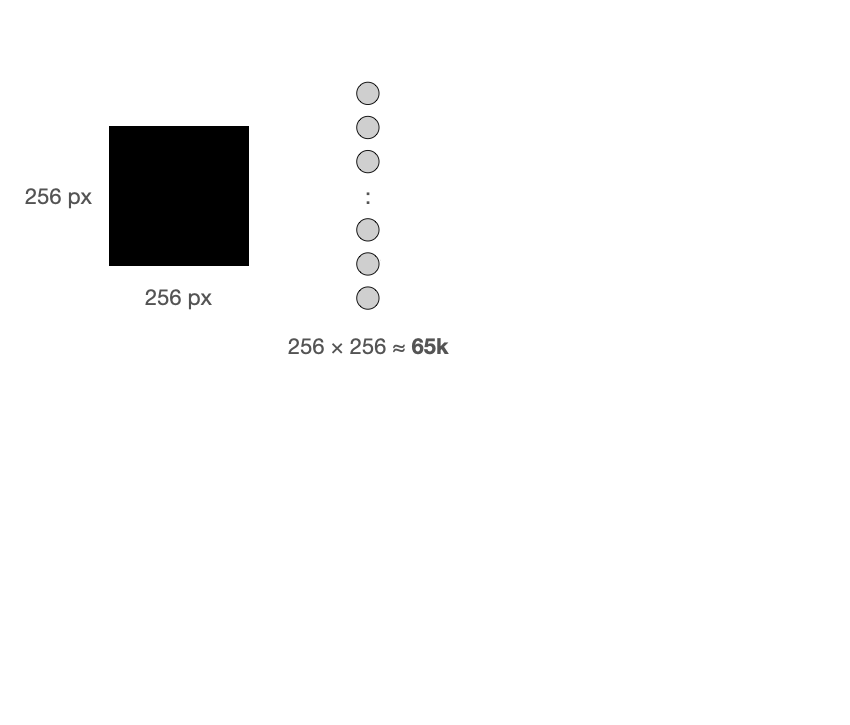

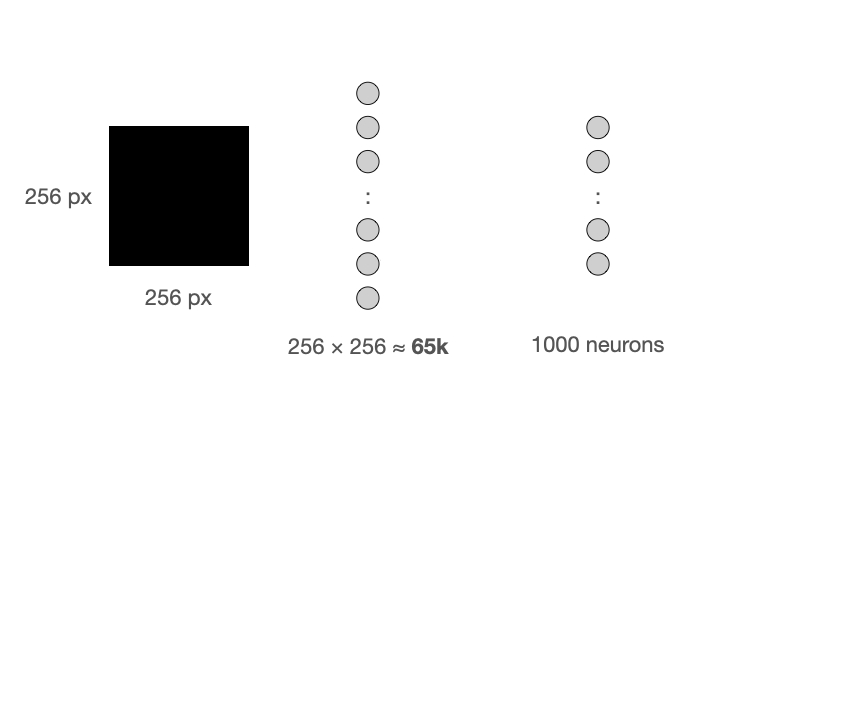

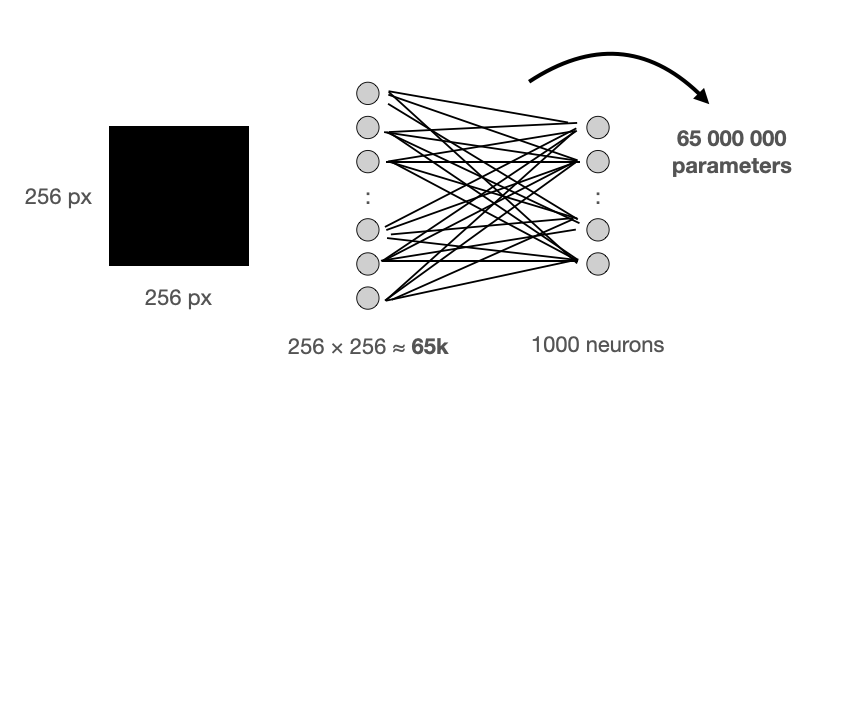

Why not use linear layers?

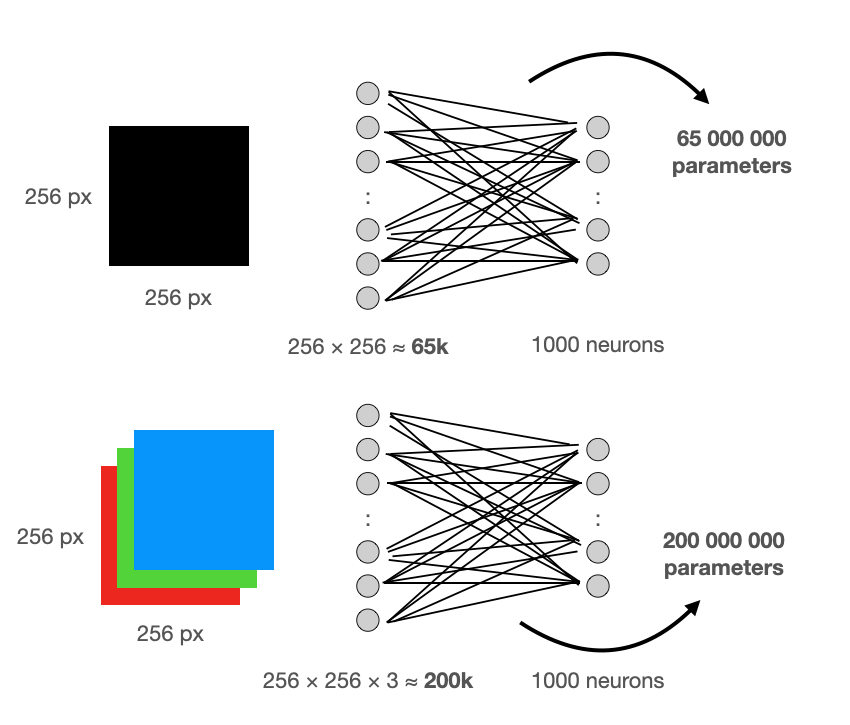

- Linear layers:

- Slow training

- Overfitting

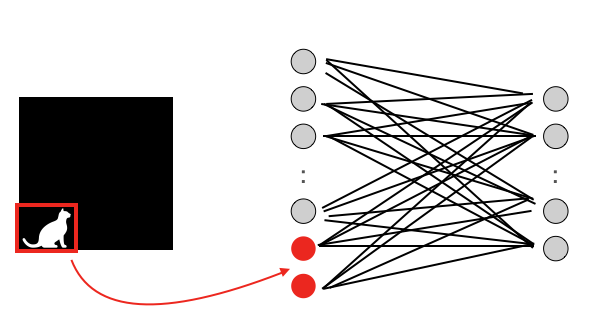

- Don't recognize spatial patterns

- A better alternative: convolutional layers!
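To make the parameter savings concrete, here is a minimal sketch comparing parameter counts. The 64×64 RGB input and the 1000-neuron linear layer are illustrative assumptions, not values from the slides; `count_params` is a hypothetical helper.

```python
import torch.nn as nn

# Assumed input: a 64x64 RGB image (3 channels); sizes are illustrative.
n_inputs = 3 * 64 * 64

# Hypothetical fully connected layer with 1000 output neurons.
linear = nn.Linear(n_inputs, 1000)
# Convolutional layer with 32 filters of size 3x3 over 3 input channels.
conv = nn.Conv2d(3, 32, kernel_size=3)


def count_params(module):
    """Total number of learnable parameters (weights and biases)."""
    return sum(p.numel() for p in module.parameters())


linear_params = count_params(linear)
conv_params = count_params(conv)
print(linear_params)  # 12289000 = 12288 * 1000 weights + 1000 biases
print(conv_params)    # 896 = 32 * 3 * 3 * 3 weights + 32 biases
```

The convolutional layer's parameter count does not depend on the image size, because the same filters are reused at every position.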

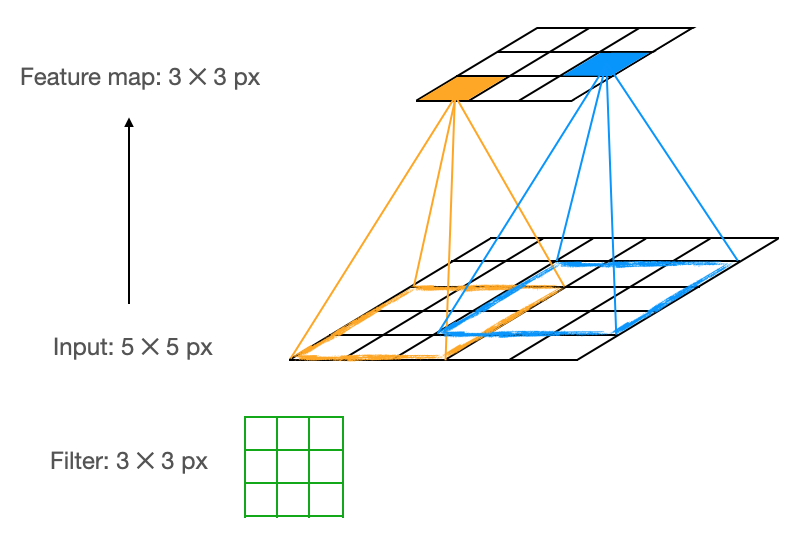

Convolutional layer

- Slide filter(s) of parameters over the input

- At each position, perform convolution

- Resulting feature map:

  - Preserves spatial patterns from input

  - Uses fewer parameters than linear layer

- One filter = one feature map

- Apply activations to feature maps

- All feature maps combined form the output

nn.Conv2d(3, 32, kernel_size=3)
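A short sketch of what this layer produces, assuming a batch with one 64×64 RGB image (the input size is an assumption for illustration). Each of the 32 filters yields one feature map, and the activation is applied to the result.

```python
import torch
import torch.nn as nn

conv = nn.Conv2d(3, 32, kernel_size=3)
activation = nn.ELU()

# Assumed input: batch of 1 image, 3 channels, 64x64 pixels.
images = torch.randn(1, 3, 64, 64)
feature_maps = activation(conv(images))

# 32 filters, each spanning 3 input channels with a 3x3 window.
print(conv.weight.shape)   # torch.Size([32, 3, 3, 3])
# One feature map per filter; without padding, each side shrinks by 2.
print(feature_maps.shape)  # torch.Size([1, 32, 62, 62])
```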

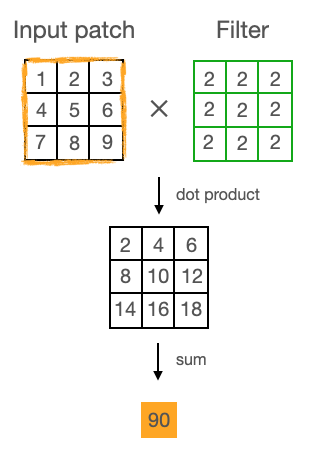

Convolution

- Compute dot product of input patch and filter

- Top-left field: 2 × 1 = 2

- Sum the result
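The computation above can be sketched by hand for a single position. The patch and filter values below are made up for illustration (only the top-left product, 2 × 1 = 2, mirrors the slide); the result is checked against `torch.nn.functional.conv2d`.

```python
import torch
import torch.nn.functional as F

# Made-up 3x3 input patch and 3x3 filter (illustrative values).
patch = torch.tensor([[2., 0., 1.],
                      [1., 3., 0.],
                      [0., 1., 2.]])
kernel = torch.tensor([[1., 0., -1.],
                       [0., 1., 0.],
                       [-1., 0., 1.]])

# Multiply element-wise (top-left field: 2 * 1 = 2), then sum the result.
manual = (patch * kernel).sum()
print(manual.item())  # 6.0

# The same value from PyTorch: shapes are (batch, channels, height, width)
# for the input and (out_channels, in_channels, height, width) for the filter.
builtin = F.conv2d(patch.view(1, 1, 3, 3), kernel.view(1, 1, 3, 3))
print(builtin.item())  # 6.0
```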

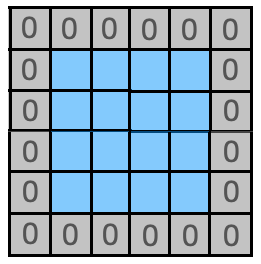

Zero-padding

- Add a frame of zeros around the convolutional layer's input

nn.Conv2d(3, 32, kernel_size=3, padding=1)

- Keeps the output's spatial dimensions equal to the input's (here: 3×3 kernel with padding=1)

- Ensures border pixels are treated equally to others
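A minimal sketch of the effect on shapes, assuming a 64×64 RGB input (the input size is an assumption for illustration):

```python
import torch
import torch.nn as nn

# Assumed input: batch of 1 image, 3 channels, 64x64 pixels.
images = torch.randn(1, 3, 64, 64)

no_pad = nn.Conv2d(3, 32, kernel_size=3)
padded = nn.Conv2d(3, 32, kernel_size=3, padding=1)

no_pad_shape = tuple(no_pad(images).shape)
padded_shape = tuple(padded(images).shape)

print(no_pad_shape)  # (1, 32, 62, 62): height and width shrink by 2
print(padded_shape)  # (1, 32, 64, 64): height and width are preserved
```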

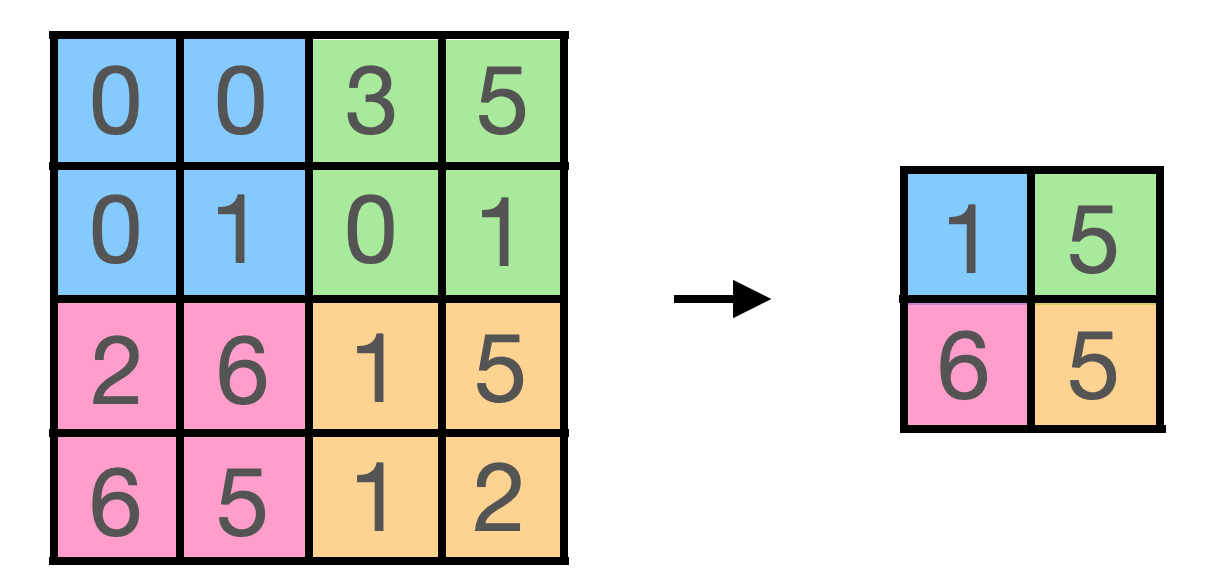

Max Pooling

- Slide non-overlapping window over input

- At each position, retain only the maximum value

- Used after convolutional layers to reduce spatial dimensions

nn.MaxPool2d(kernel_size=2)
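A small sketch of max pooling on a made-up 4×4 input (the values are illustrative). Each non-overlapping 2×2 window is reduced to its maximum, halving height and width.

```python
import torch
import torch.nn as nn

# Made-up single-channel 4x4 input, shaped (batch, channels, height, width).
x = torch.tensor([[1., 2., 5., 6.],
                  [3., 4., 7., 8.],
                  [9., 10., 13., 14.],
                  [11., 12., 15., 16.]]).view(1, 1, 4, 4)

pool = nn.MaxPool2d(kernel_size=2)
pooled = pool(x)

print(pooled.shape)  # torch.Size([1, 1, 2, 2])
print(pooled)        # maxima of the four 2x2 windows: 4, 8, 12, 16
```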

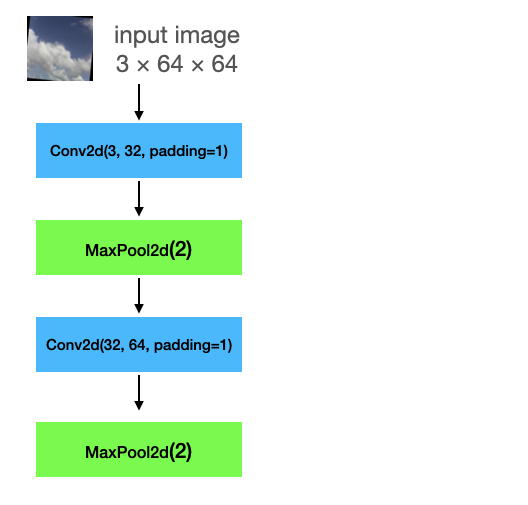

Convolutional Neural Network

    class Net(nn.Module):
        def __init__(self, num_classes):
            super().__init__()
            self.feature_extractor = nn.Sequential(
                nn.Conv2d(3, 32, kernel_size=3, padding=1),
                nn.ELU(),
                nn.MaxPool2d(kernel_size=2),
                nn.Conv2d(32, 64, kernel_size=3, padding=1),
                nn.ELU(),
                nn.MaxPool2d(kernel_size=2),
                nn.Flatten(),
            )
            self.classifier = nn.Linear(64*16*16, num_classes)

        def forward(self, x):
            x = self.feature_extractor(x)
            x = self.classifier(x)
            return x

- feature_extractor: (convolution, activation, pooling), repeated twice and flattened

- classifier: single linear layer

- forward(): pass input image through feature extractor and classifier
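As a usage sketch, the network can be instantiated and run on a dummy batch. The batch size of 4, the 64×64 input (the size implied by `64*16*16` in the classifier), and `num_classes=7` are assumptions for illustration.

```python
import torch
import torch.nn as nn


class Net(nn.Module):
    def __init__(self, num_classes):
        super().__init__()
        # (convolution, activation, pooling) twice, then flatten.
        self.feature_extractor = nn.Sequential(
            nn.Conv2d(3, 32, kernel_size=3, padding=1),
            nn.ELU(),
            nn.MaxPool2d(kernel_size=2),
            nn.Conv2d(32, 64, kernel_size=3, padding=1),
            nn.ELU(),
            nn.MaxPool2d(kernel_size=2),
            nn.Flatten(),
        )
        # Single linear layer mapping flattened features to class scores.
        self.classifier = nn.Linear(64 * 16 * 16, num_classes)

    def forward(self, x):
        x = self.feature_extractor(x)
        x = self.classifier(x)
        return x


# Hypothetical setup: 7 classes, batch of 4 RGB images of 64x64 pixels.
net = Net(num_classes=7)
batch = torch.randn(4, 3, 64, 64)
logits = net(batch)
print(logits.shape)  # torch.Size([4, 7]): one score per class per image
```

With a different input resolution the flattened size changes, so the `in_features` of the classifier would need to be adjusted.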

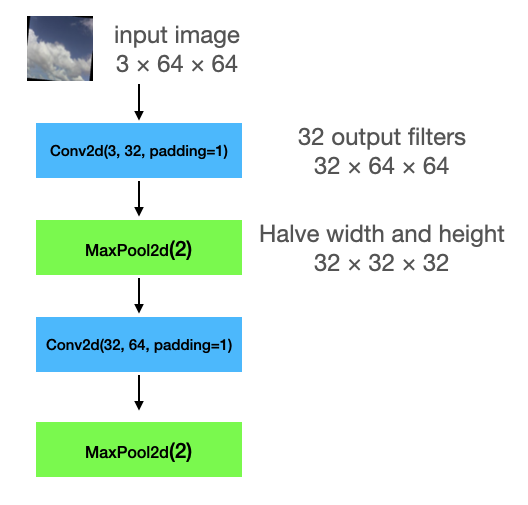

Feature extractor output size

    self.feature_extractor = nn.Sequential(
        nn.Conv2d(3, 32, kernel_size=3, padding=1),
        nn.ELU(),
        nn.MaxPool2d(kernel_size=2),
        nn.Conv2d(32, 64, kernel_size=3, padding=1),
        nn.ELU(),
        nn.MaxPool2d(kernel_size=2),
        nn.Flatten(),
    )
    self.classifier = nn.Linear(64*16*16, num_classes)
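The classifier's input size `64*16*16` can be checked by tracing a tensor through the feature extractor layer by layer. This sketch assumes a 3×64×64 input image, which is inferred from the classifier size rather than stated in the text.

```python
import torch
import torch.nn as nn

feature_extractor = nn.Sequential(
    nn.Conv2d(3, 32, kernel_size=3, padding=1),
    nn.ELU(),
    nn.MaxPool2d(kernel_size=2),
    nn.Conv2d(32, 64, kernel_size=3, padding=1),
    nn.ELU(),
    nn.MaxPool2d(kernel_size=2),
    nn.Flatten(),
)

# Assumed input: batch of 1 image, 3 channels, 64x64 pixels.
x = torch.randn(1, 3, 64, 64)
shapes = []
for layer in feature_extractor:
    x = layer(x)
    shapes.append((layer.__class__.__name__, tuple(x.shape)))
    print(shapes[-1])

# Padded convolutions keep height and width; each pooling halves them:
# 64x64 -> 32x32 -> 16x16, with 64 channels after the second convolution,
# so Flatten yields 64 * 16 * 16 = 16384 features per image.
```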

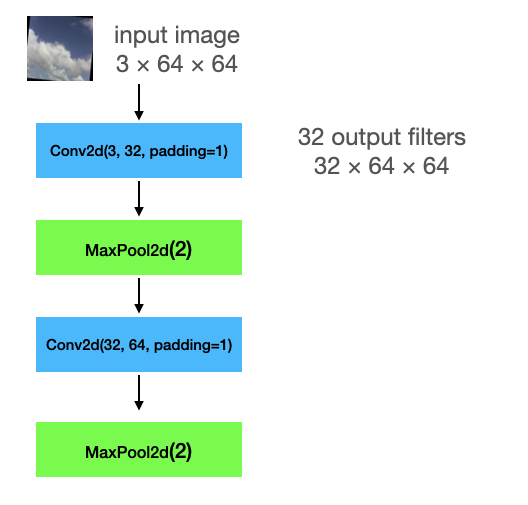

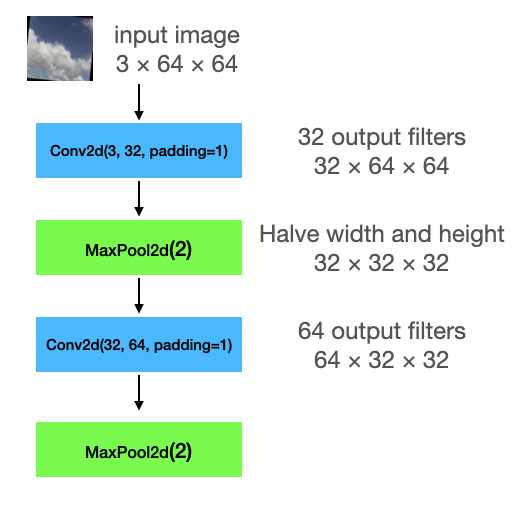

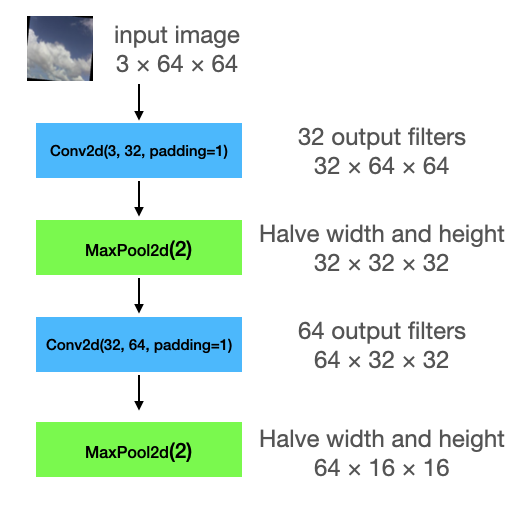

Let's practice!
