Response caching and usage quotas

Azure API Management

Fiodar Sazanavets

Senior Software Engineer at Microsoft

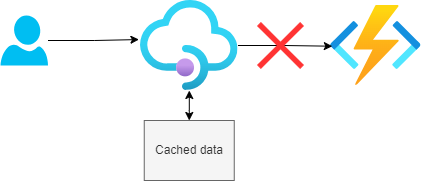

Setting up response caching

- When responses are cached, there's no need to go to the backend endpoint

- Caching is applied to popular endpoints that often return the same data

- Round-trips to backend endpoints are time-consuming and expensive

- Can be implemented in APIM via caching policies

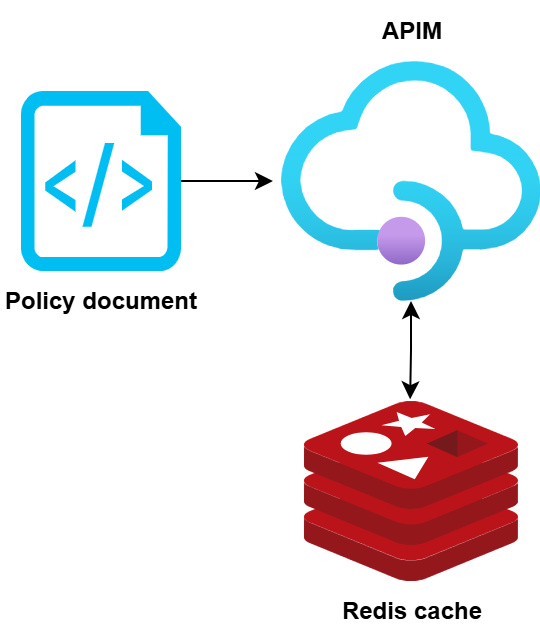

Configuring response caching

- Caching policy configuration can:

  - Specify cache duration

  - Vary by query string or headers

  - Set the location of the cache

- Cache location can be:

  - The edge, i.e. the API gateway

  - An external caching service, e.g. Redis
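To make the duration and vary-by settings concrete, here is a minimal in-memory sketch in Python. It is not how APIM implements its caching policies; the names `ResponseCache`, `fetch`, and the `category` parameter are hypothetical and chosen only for illustration.

```python
import time
from typing import Callable, Dict, Mapping, Optional, Tuple

CacheKey = Tuple[object, ...]


class ResponseCache:
    """Illustrative response cache with a fixed duration and vary-by-query keys."""

    def __init__(
        self,
        duration_seconds: float,
        vary_by_query: Tuple[str, ...] = (),
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.duration_seconds = duration_seconds
        self.vary_by_query = vary_by_query
        self._clock = clock
        self._entries: Dict[CacheKey, Tuple[float, str]] = {}

    def _key(self, path: str, query: Mapping[str, str]) -> CacheKey:
        # Only the listed query parameters take part in the cache key.
        varied = tuple((name, query.get(name)) for name in self.vary_by_query)
        return (path,) + varied

    def get(self, path: str, query: Mapping[str, str]) -> Optional[str]:
        key = self._key(path, query)
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, body = entry
        if self._clock() >= expires_at:
            # The entry outlived the cache duration, so drop it.
            del self._entries[key]
            return None
        return body

    def put(self, path: str, query: Mapping[str, str], body: str) -> None:
        key = self._key(path, query)
        self._entries[key] = (self._clock() + self.duration_seconds, body)


def fetch(
    cache: ResponseCache,
    path: str,
    query: Mapping[str, str],
    backend: Callable[[str, Mapping[str, str]], str],
) -> str:
    """Return the cached response if present, otherwise call the backend once."""
    cached = cache.get(path, query)
    if cached is not None:
        return cached
    body = backend(path, query)
    cache.put(path, query, body)
    return body
```

With `vary_by_query=("category",)`, requests that differ only in other query parameters share one cached entry, while each distinct `category` value gets its own.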

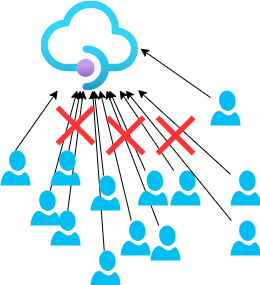

Protecting against request surges

- Even with caching, too many clients can overload APIs

  - Causes slower performance

  - Increases resource costs

  - Worst case: system outage (e.g., DDoS attack)

How usage quotas protect APIs

- Restrict number of calls over a period

- This guards against sudden spikes of requests

- Can help mitigate DDoS attacks

- Can save money by preventing overuse
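The idea of restricting calls over a period can be sketched as a fixed-window counter per client. This is a conceptual Python illustration only, not APIM's quota implementation; `FixedWindowQuota` and the client keys are hypothetical names.

```python
import time
from typing import Callable, Dict, Tuple


class FixedWindowQuota:
    """Illustrative per-client quota: at most max_calls per period_seconds."""

    def __init__(
        self,
        max_calls: int,
        period_seconds: float,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.max_calls = max_calls
        self.period_seconds = period_seconds
        self._clock = clock
        # client key -> (window start time, calls counted in that window)
        self._windows: Dict[str, Tuple[float, int]] = {}

    def allow(self, client_key: str) -> bool:
        now = self._clock()
        window_start, count = self._windows.get(client_key, (now, 0))
        if now - window_start >= self.period_seconds:
            # The previous window has ended, so start counting again.
            window_start, count = now, 0
        if count >= self.max_calls:
            # Quota exhausted: reject without counting the call.
            self._windows[client_key] = (window_start, count)
            return False
        self._windows[client_key] = (window_start, count + 1)
        return True
```

A gateway using this sketch would call `allow(client_key)` before forwarding a request and return an error response when it yields `False`, so a sudden spike from one client never reaches the backend.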

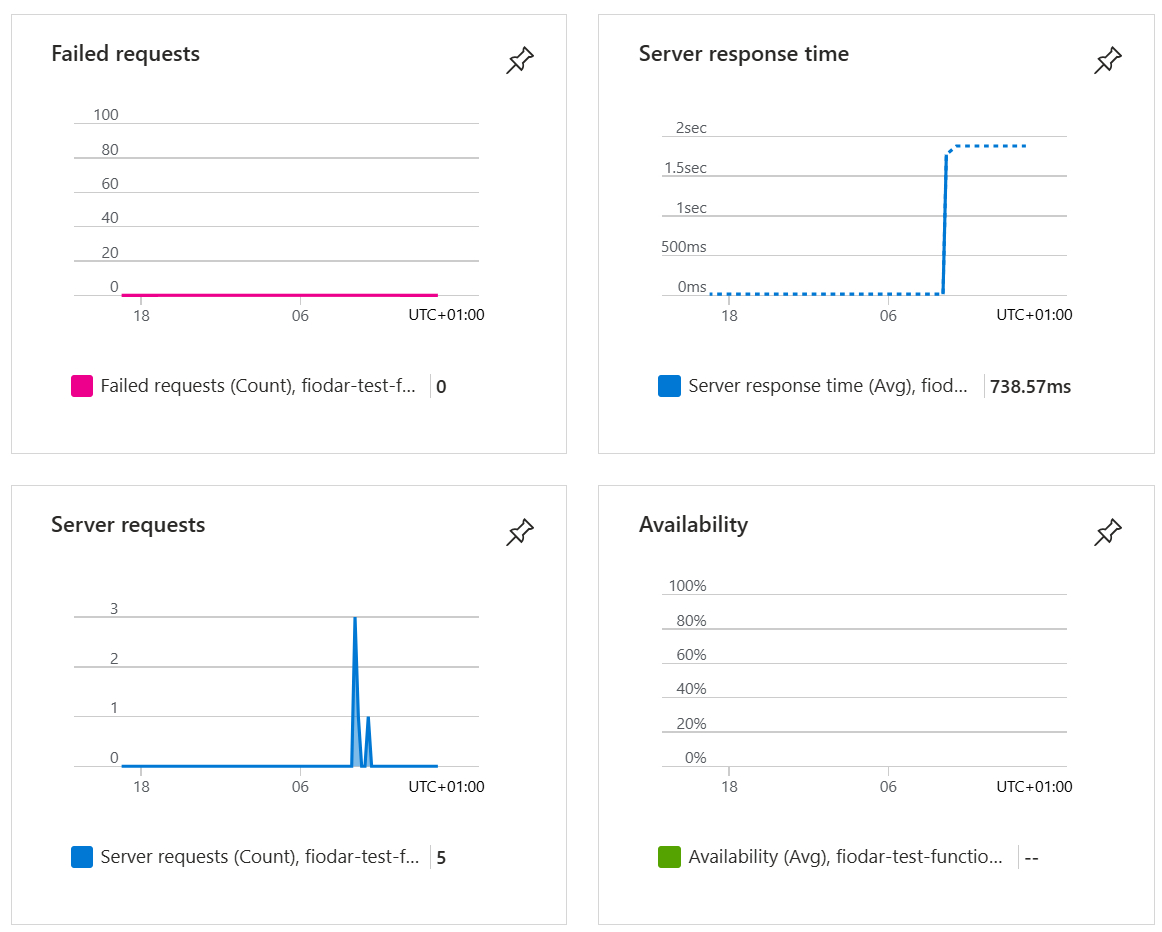

Measuring resource usage

You can't improve what you don't measure

- Enable Application Insights to track metrics (e.g., latency, outliers)

Example: 'GET Products' call

- Low cache hit ratio but high traffic

- Solution: increase cache duration (5 -> 30 minutes)
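One way to spot endpoints like this is to compute the cache hit ratio from hit and miss counts and flag busy endpoints where it is low. The Python sketch below assumes you have already exported such counts from your monitoring data; `EndpointStats`, `tuning_candidates`, and both thresholds are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class EndpointStats:
    name: str
    hits: int    # responses served from the cache
    misses: int  # responses that needed a backend round-trip

    @property
    def total(self) -> int:
        return self.hits + self.misses

    @property
    def hit_ratio(self) -> float:
        # Share of requests answered from the cache; 0.0 when there is no traffic.
        return self.hits / self.total if self.total else 0.0


def tuning_candidates(
    stats: Iterable[EndpointStats],
    min_requests: int = 1000,   # assumed threshold for "high traffic"
    max_hit_ratio: float = 0.5, # assumed threshold for "low hit ratio"
) -> List[str]:
    """Return names of busy endpoints whose cache hit ratio is below the threshold."""
    return [
        s.name
        for s in stats
        if s.total >= min_requests and s.hit_ratio < max_hit_ratio
    ]
```

Endpoints returned by `tuning_candidates` are the ones where a longer cache duration is worth trying first, since each avoided miss saves a backend round-trip.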

With insights, you can fine-tune:

- Cache durations

- Quota thresholds

- Service tiers for best cost-performance

Let's practice!
