Congratulations!

Transformer Models with PyTorch

James Chapman

Curriculum Manager, DataCamp

Chapter 1

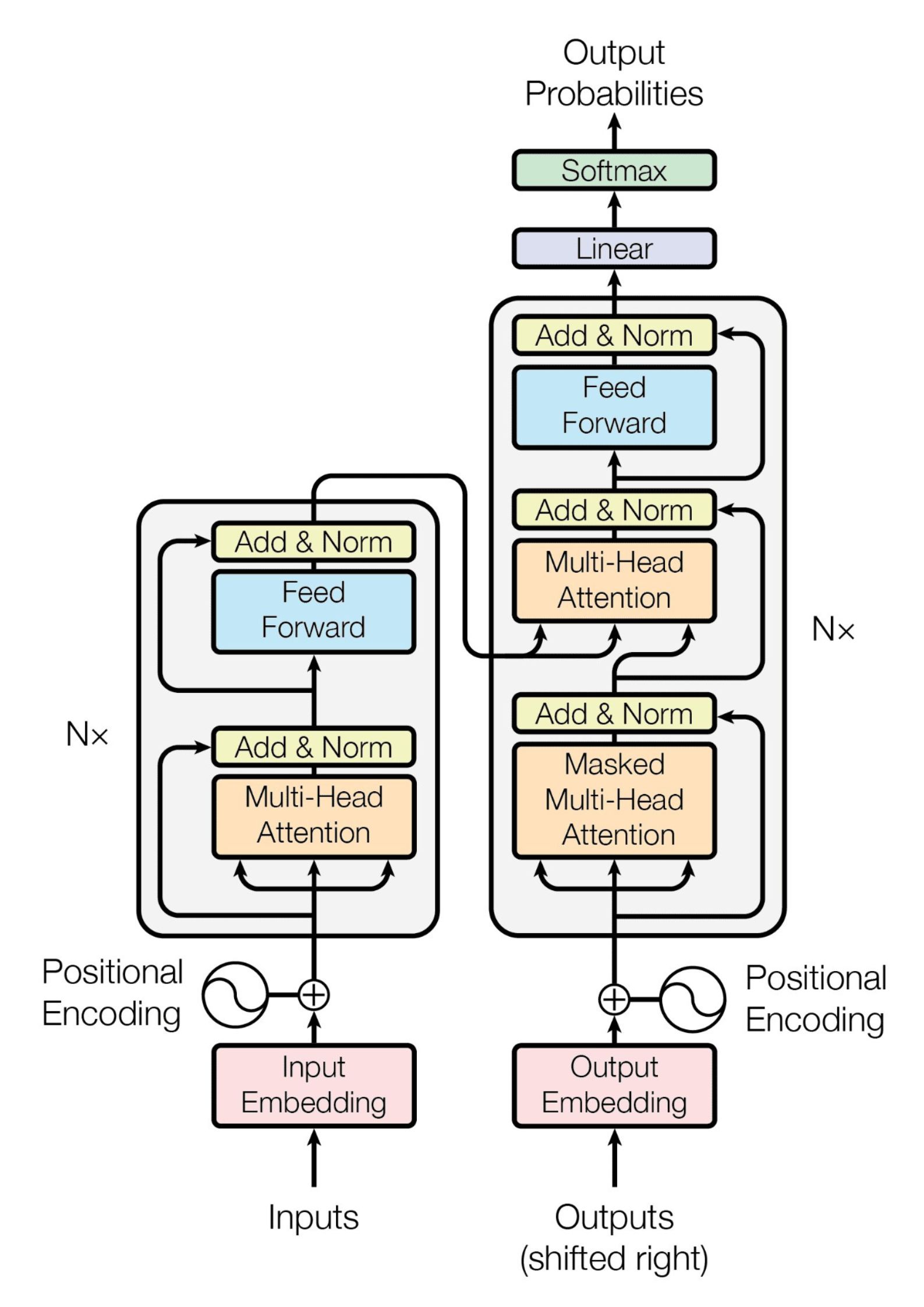

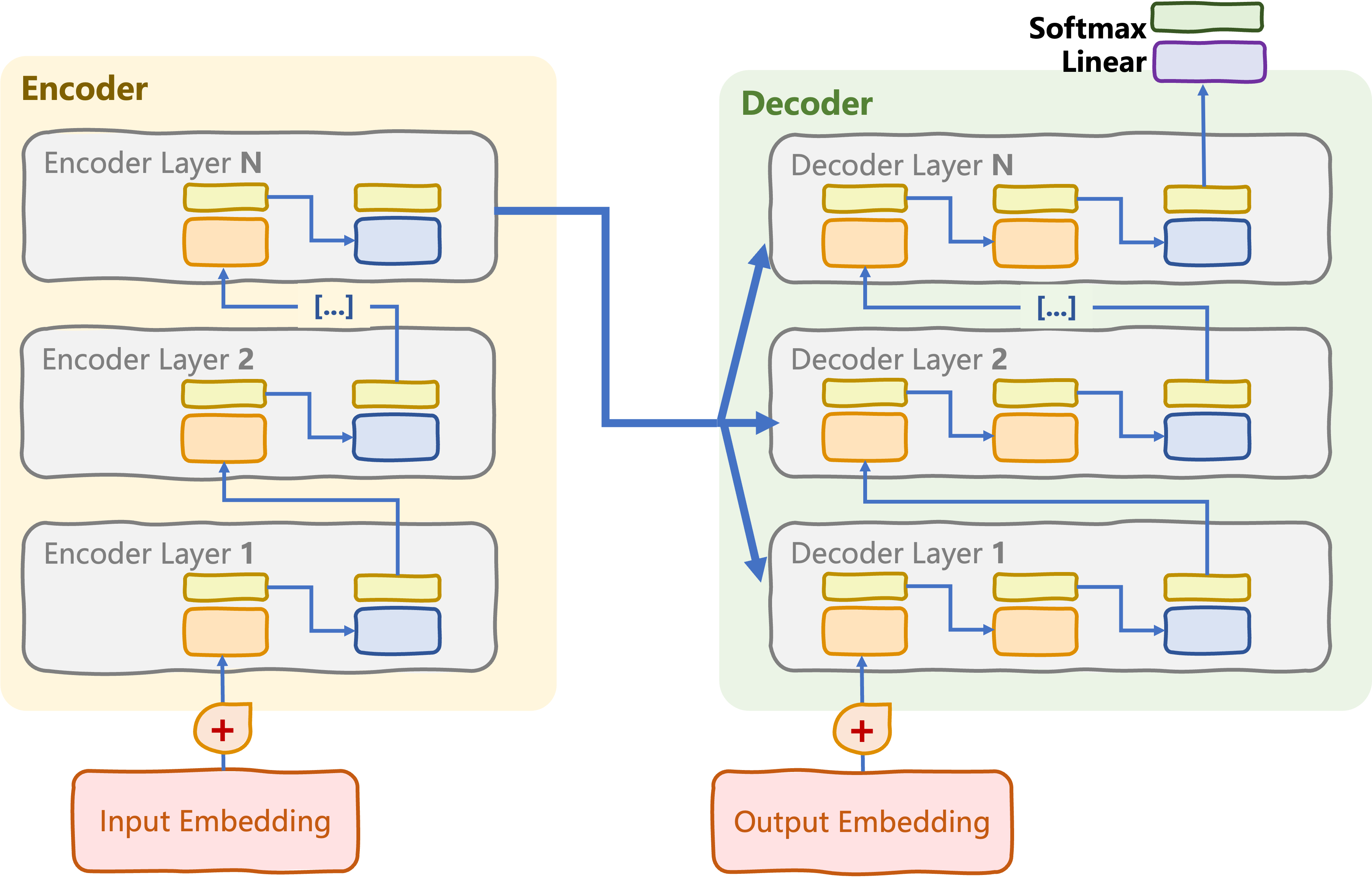

```python
import torch.nn as nn

model = nn.Transformer(
    d_model=1536,
    nhead=8,
    num_encoder_layers=6,
    num_decoder_layers=6,
)
```
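The `nn.Transformer` call above only builds the model. The minimal sketch below runs one forward pass through it. It uses smaller, hypothetical sizes (`d_model=64`, two encoder and two decoder layers) instead of 1536 and six layers so that it runs quickly on a CPU. It also assumes `batch_first=True`, so tensors are shaped (batch, sequence, feature).

```python
import torch
import torch.nn as nn

# Illustrative, smaller sizes (assumption) so the example runs quickly
model = nn.Transformer(
    d_model=64,
    nhead=8,
    num_encoder_layers=2,
    num_decoder_layers=2,
    batch_first=True,  # inputs and outputs are (batch, seq, feature)
)

src = torch.randn(2, 10, 64)  # (batch, source length, d_model)
tgt = torch.randn(2, 7, 64)   # (batch, target length, d_model)

# Causal mask: each target position may only attend to itself and earlier positions
tgt_mask = model.generate_square_subsequent_mask(tgt.size(1))

out = model(src, tgt, tgt_mask=tgt_mask)
print(out.shape)  # torch.Size([2, 7, 64])
```

The output has the target's shape. `nn.Transformer` expects inputs that are already embedded and includes no token embedding or positional encoding of its own, which is why those components are built separately.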

```python
import torch.nn as nn

class InputEmbeddings(nn.Module): ...

class PositionalEncoding(nn.Module): ...

class MultiHeadAttention(nn.Module): ...
```
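The stubs above leave out the class bodies. The following is a minimal sketch of one common way to fill them in. It assumes token-ID inputs and an even `d_model`, and it uses the sinusoidal positional encoding and the `sqrt(d_model)` embedding scaling from the original Transformer paper. The constructor arguments (`vocab_size`, `max_seq_length`, `num_heads`) are illustrative choices and not necessarily the signatures used in the course.

```python
import math
import torch
import torch.nn as nn

class InputEmbeddings(nn.Module):
    """Maps token IDs to dense vectors, scaled by sqrt(d_model)."""
    def __init__(self, vocab_size: int, d_model: int):
        super().__init__()
        self.embedding = nn.Embedding(vocab_size, d_model)
        self.d_model = d_model

    def forward(self, x):
        return self.embedding(x) * math.sqrt(self.d_model)

class PositionalEncoding(nn.Module):
    """Adds fixed sinusoidal position information (assumes even d_model)."""
    def __init__(self, d_model: int, max_seq_length: int):
        super().__init__()
        pe = torch.zeros(max_seq_length, d_model)
        position = torch.arange(max_seq_length, dtype=torch.float).unsqueeze(1)
        # 10000^(-2i/d_model) for each even dimension index 2i
        div_term = torch.exp(
            torch.arange(0, d_model, 2, dtype=torch.float) * -(math.log(10000.0) / d_model)
        )
        pe[:, 0::2] = torch.sin(position * div_term)
        pe[:, 1::2] = torch.cos(position * div_term)
        self.register_buffer("pe", pe.unsqueeze(0))  # (1, max_seq_length, d_model)

    def forward(self, x):
        return x + self.pe[:, : x.size(1)]

class MultiHeadAttention(nn.Module):
    """Scaled dot-product attention split across num_heads heads."""
    def __init__(self, d_model: int, num_heads: int):
        super().__init__()
        assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
        self.num_heads = num_heads
        self.head_dim = d_model // num_heads
        self.query_linear = nn.Linear(d_model, d_model)
        self.key_linear = nn.Linear(d_model, d_model)
        self.value_linear = nn.Linear(d_model, d_model)
        self.output_linear = nn.Linear(d_model, d_model)

    def split_heads(self, x):
        # (batch, seq, d_model) -> (batch, heads, seq, head_dim)
        batch_size, seq_length, _ = x.shape
        return x.view(batch_size, seq_length, self.num_heads, self.head_dim).transpose(1, 2)

    def forward(self, query, key, value, mask=None):
        batch_size = query.size(0)
        q = self.split_heads(self.query_linear(query))
        k = self.split_heads(self.key_linear(key))
        v = self.split_heads(self.value_linear(value))
        scores = q @ k.transpose(-2, -1) / math.sqrt(self.head_dim)
        if mask is not None:
            scores = scores.masked_fill(mask == 0, float("-inf"))
        weights = torch.softmax(scores, dim=-1)
        # Recombine heads: (batch, heads, seq, head_dim) -> (batch, seq, d_model)
        out = (weights @ v).transpose(1, 2).contiguous()
        out = out.view(batch_size, -1, self.num_heads * self.head_dim)
        return self.output_linear(out)

emb = InputEmbeddings(vocab_size=100, d_model=32)
pos = PositionalEncoding(d_model=32, max_seq_length=50)
mha = MultiHeadAttention(d_model=32, num_heads=4)

tokens = torch.randint(0, 100, (2, 12))  # (batch, seq) of token IDs
x = pos(emb(tokens))
y = mha(x, x, x)  # self-attention: query, key, and value are the same
print(y.shape)  # torch.Size([2, 12, 32])
```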

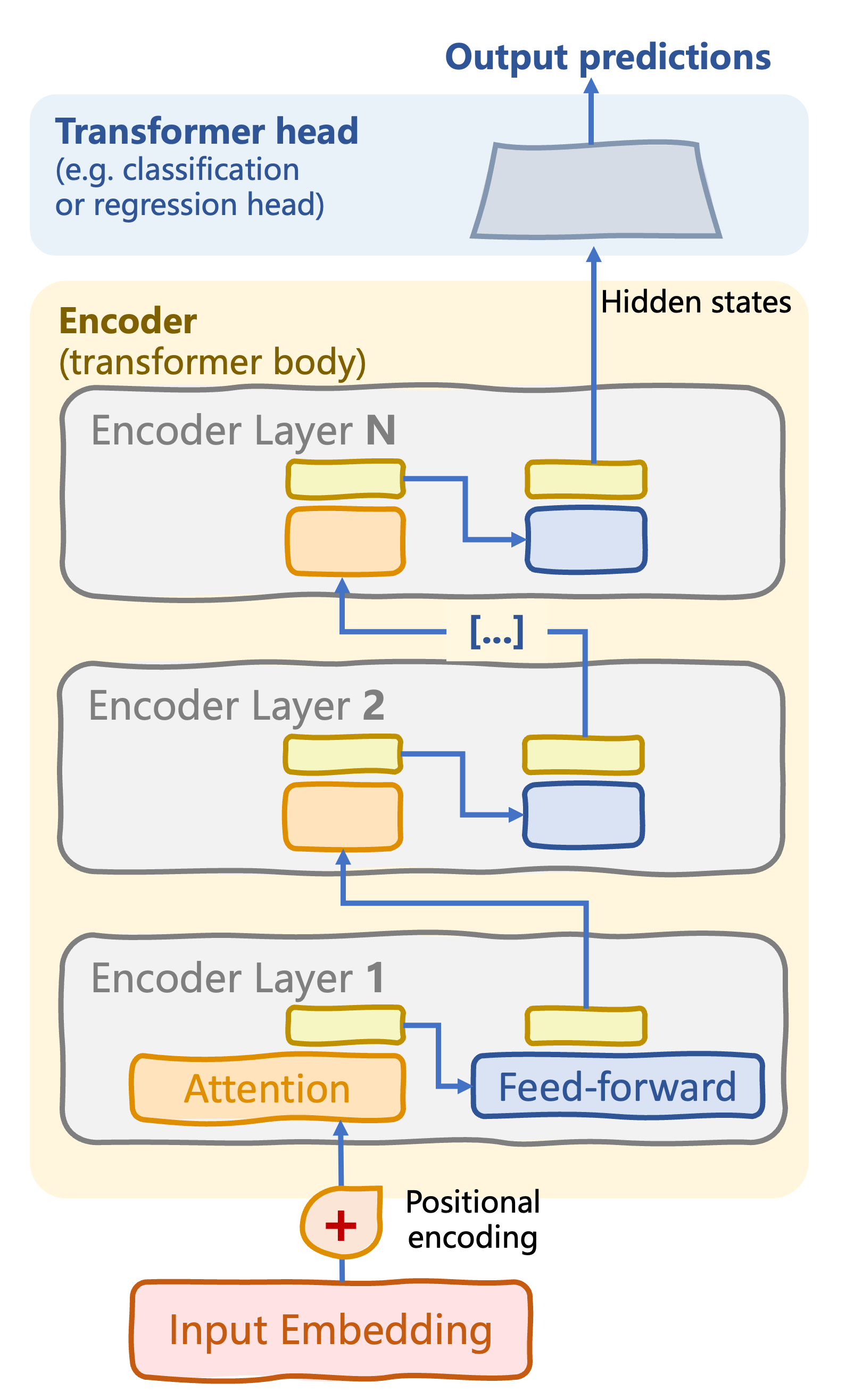

Encoder-only transformer
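An encoder-only model (BERT-style) keeps just the encoder stack and adds a task-specific head on top. The minimal sketch below uses PyTorch's built-in `nn.TransformerEncoder`. It assumes hypothetical sizes, an invented three-class classification task, and mean pooling over the sequence to get one vector per input. Positional encodings are omitted for brevity.

```python
import torch
import torch.nn as nn

vocab_size, d_model, num_classes = 1000, 64, 3  # hypothetical sizes

embedding = nn.Embedding(vocab_size, d_model)
encoder_layer = nn.TransformerEncoderLayer(d_model=d_model, nhead=4, batch_first=True)
encoder = nn.TransformerEncoder(encoder_layer, num_layers=2)
classifier = nn.Linear(d_model, num_classes)

tokens = torch.randint(0, vocab_size, (2, 16))  # (batch, seq)
hidden = encoder(embedding(tokens))      # (2, 16, 64); no mask, so attention is bidirectional
logits = classifier(hidden.mean(dim=1))  # mean-pool over seq -> (2, 3)
print(logits.shape)  # torch.Size([2, 3])
```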

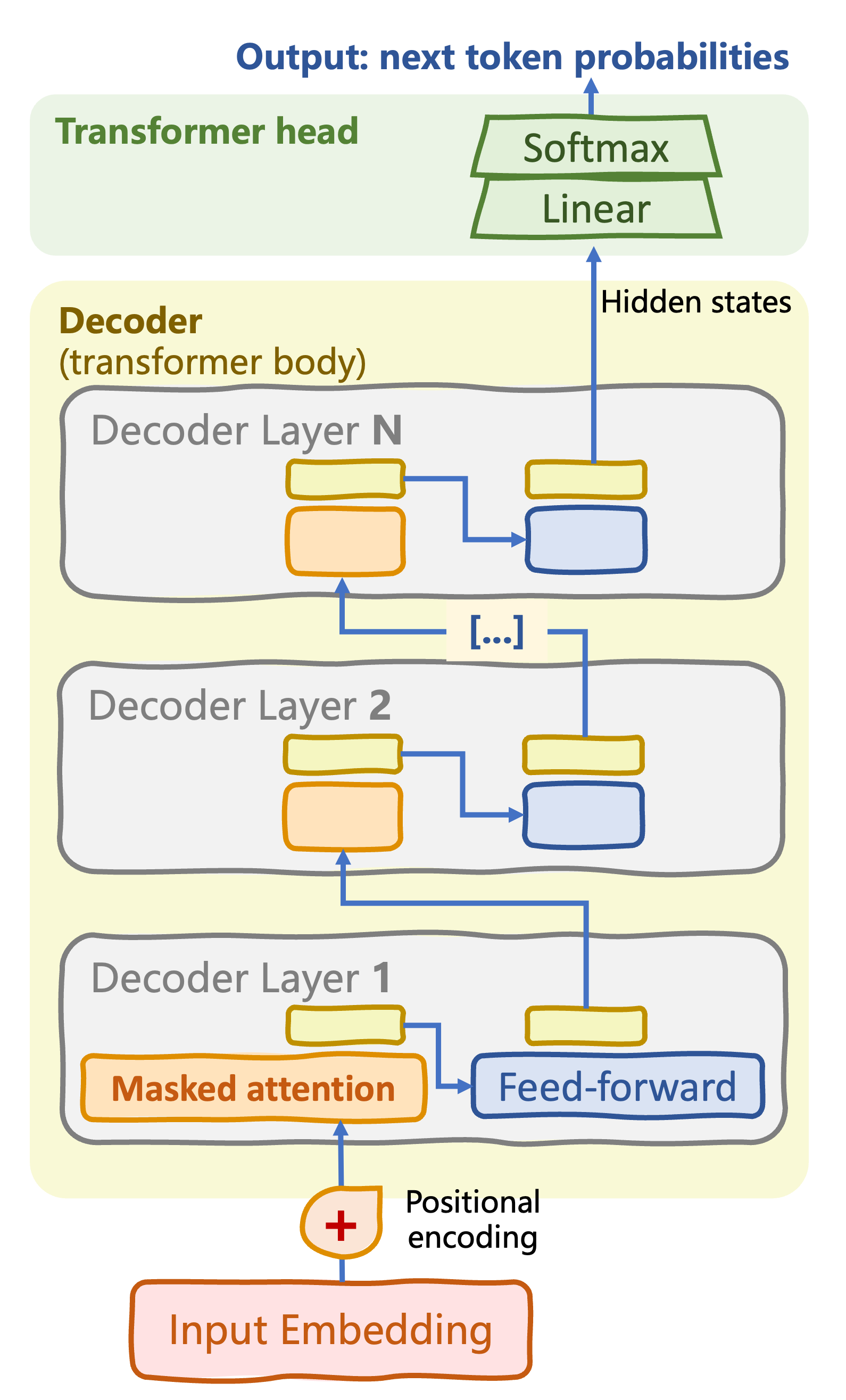

Decoder-only transformer
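A decoder-only model (GPT-style) has no encoder, so it has no cross-attention. What remains is masked self-attention that only looks backward, followed by a head that scores the next token. One common way to sketch this in PyTorch, assumed here rather than taken from the course, is to reuse `nn.TransformerEncoderLayer` and pass it a causal mask. Sizes are hypothetical and positional encodings are omitted.

```python
import torch
import torch.nn as nn

vocab_size, d_model, seq_len = 1000, 64, 16  # hypothetical sizes

embedding = nn.Embedding(vocab_size, d_model)
layer = nn.TransformerEncoderLayer(d_model=d_model, nhead=4, batch_first=True)
blocks = nn.TransformerEncoder(layer, num_layers=2)
lm_head = nn.Linear(d_model, vocab_size)

# Causal mask: -inf above the diagonal blocks attention to future positions
causal_mask = torch.triu(torch.full((seq_len, seq_len), float("-inf")), diagonal=1)

tokens = torch.randint(0, vocab_size, (2, seq_len))
hidden = blocks(embedding(tokens), mask=causal_mask)
logits = lm_head(hidden)  # (2, 16, 1000): next-token scores at every position
print(logits.shape)
```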

Chapter 2 - Encoder-decoder transformer

What next?

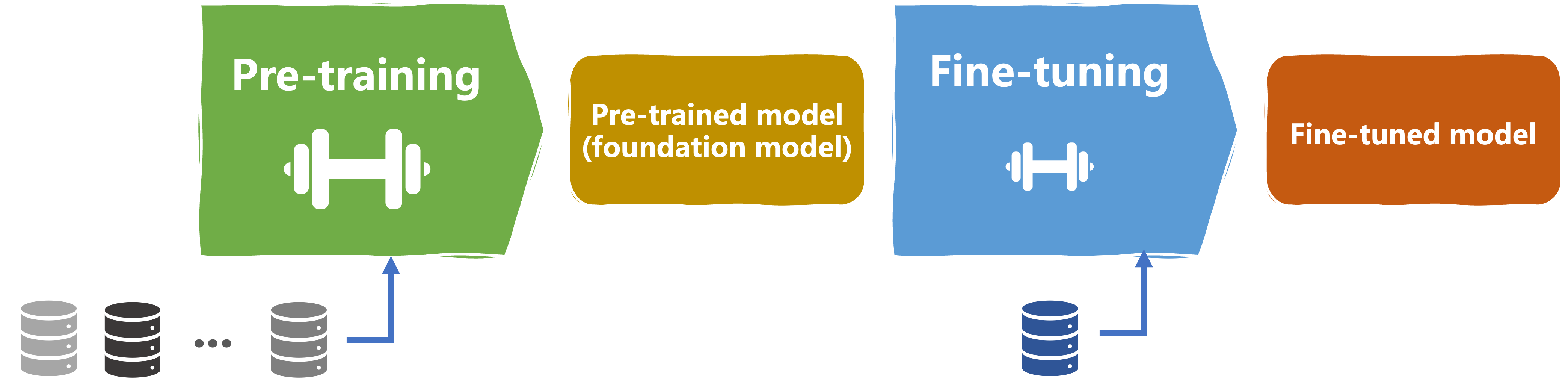

Pre-trained transformers

Let's practice!
