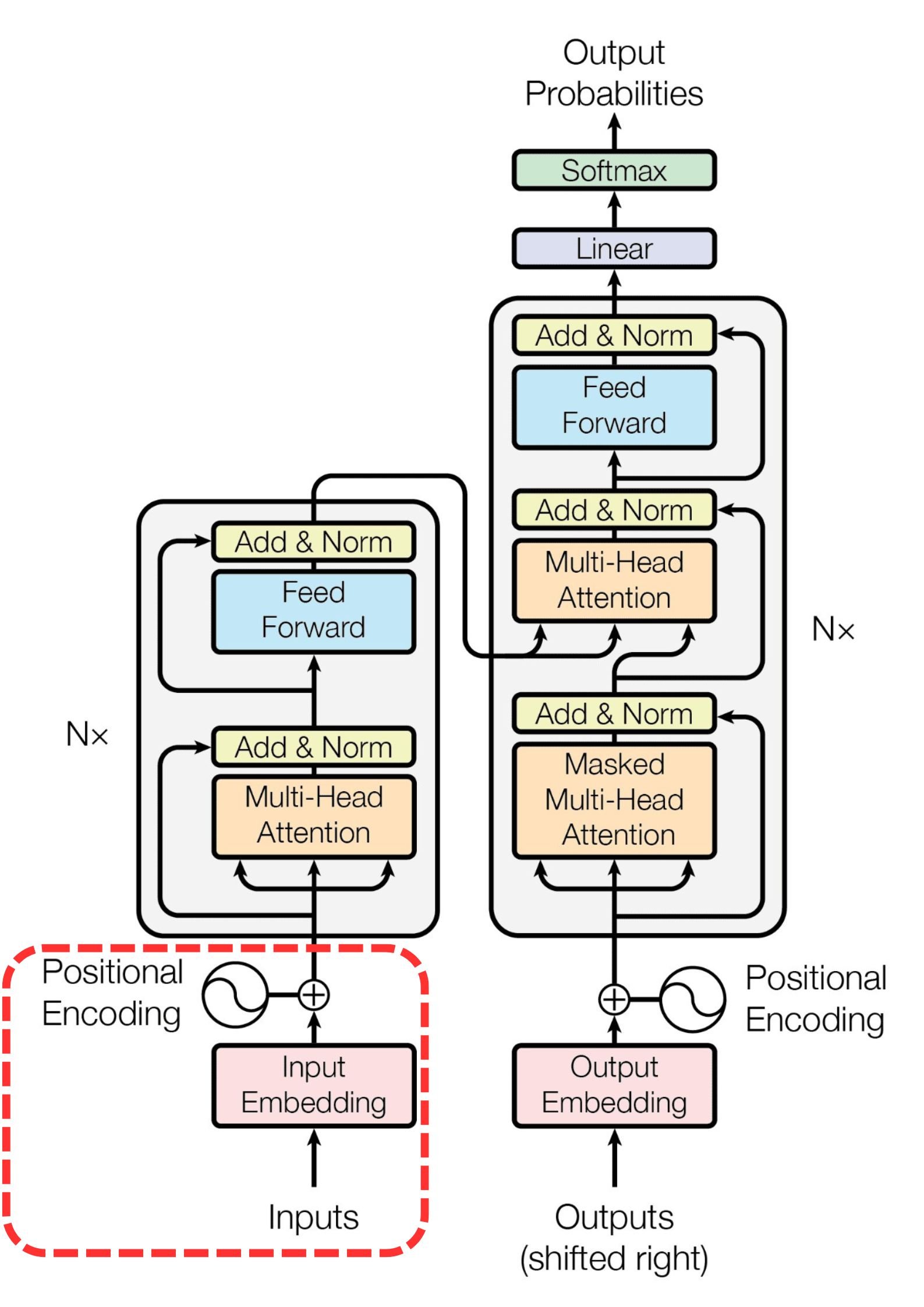

Embedding and positional encoding

Transformer Models with PyTorch

James Chapman

Curriculum Manager, DataCamp

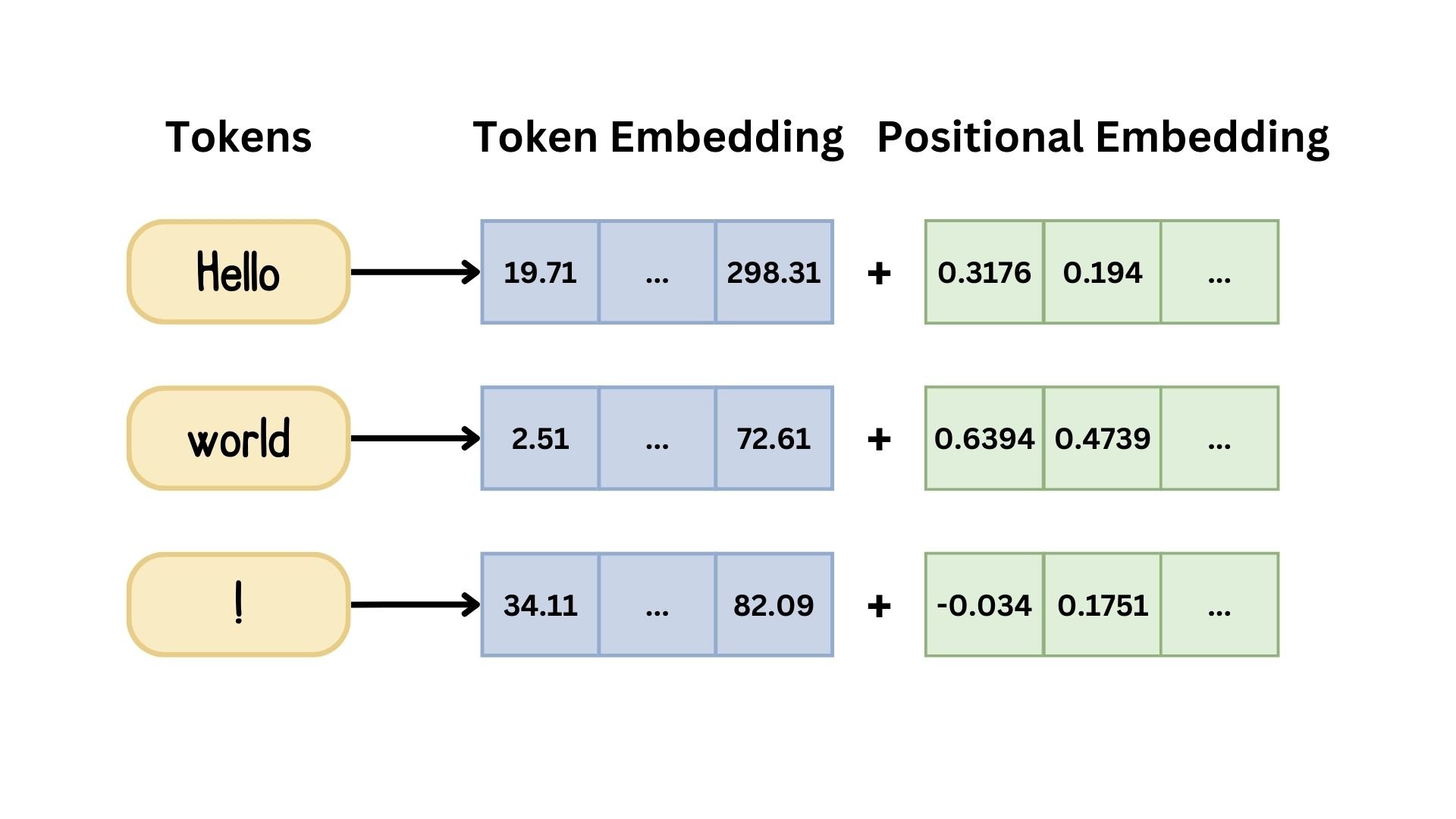

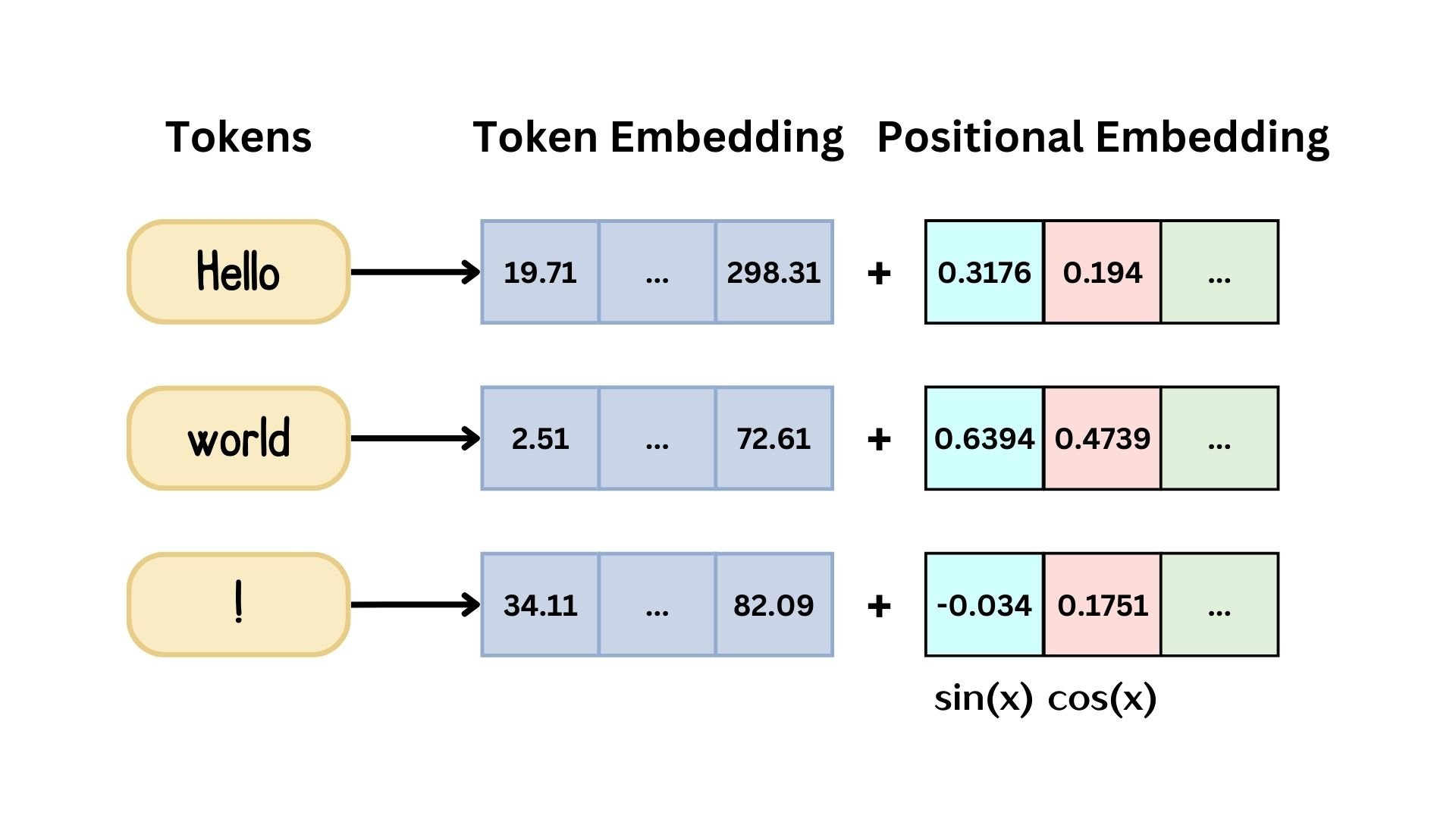

Embedding and positional encoding in transformers

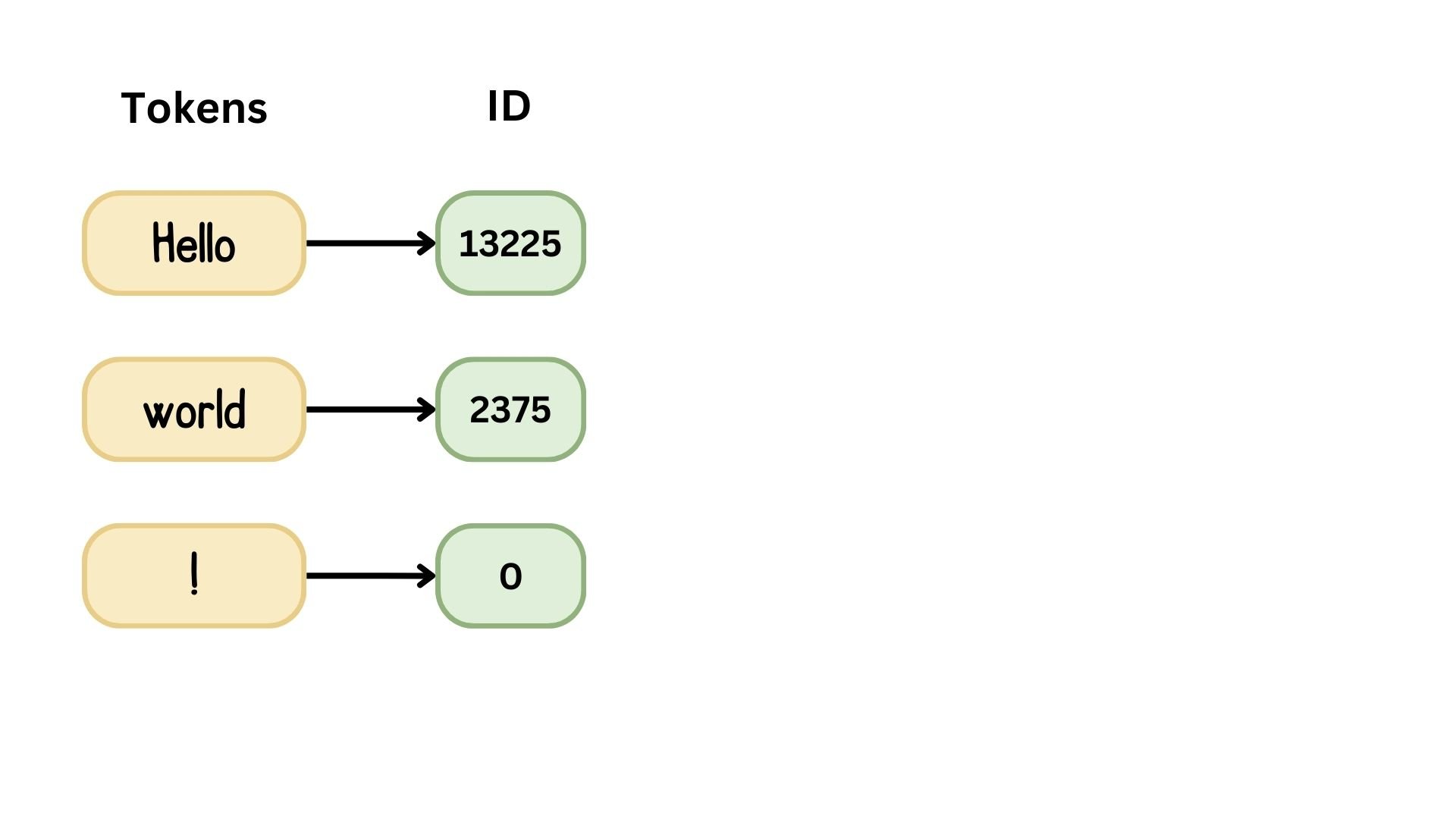

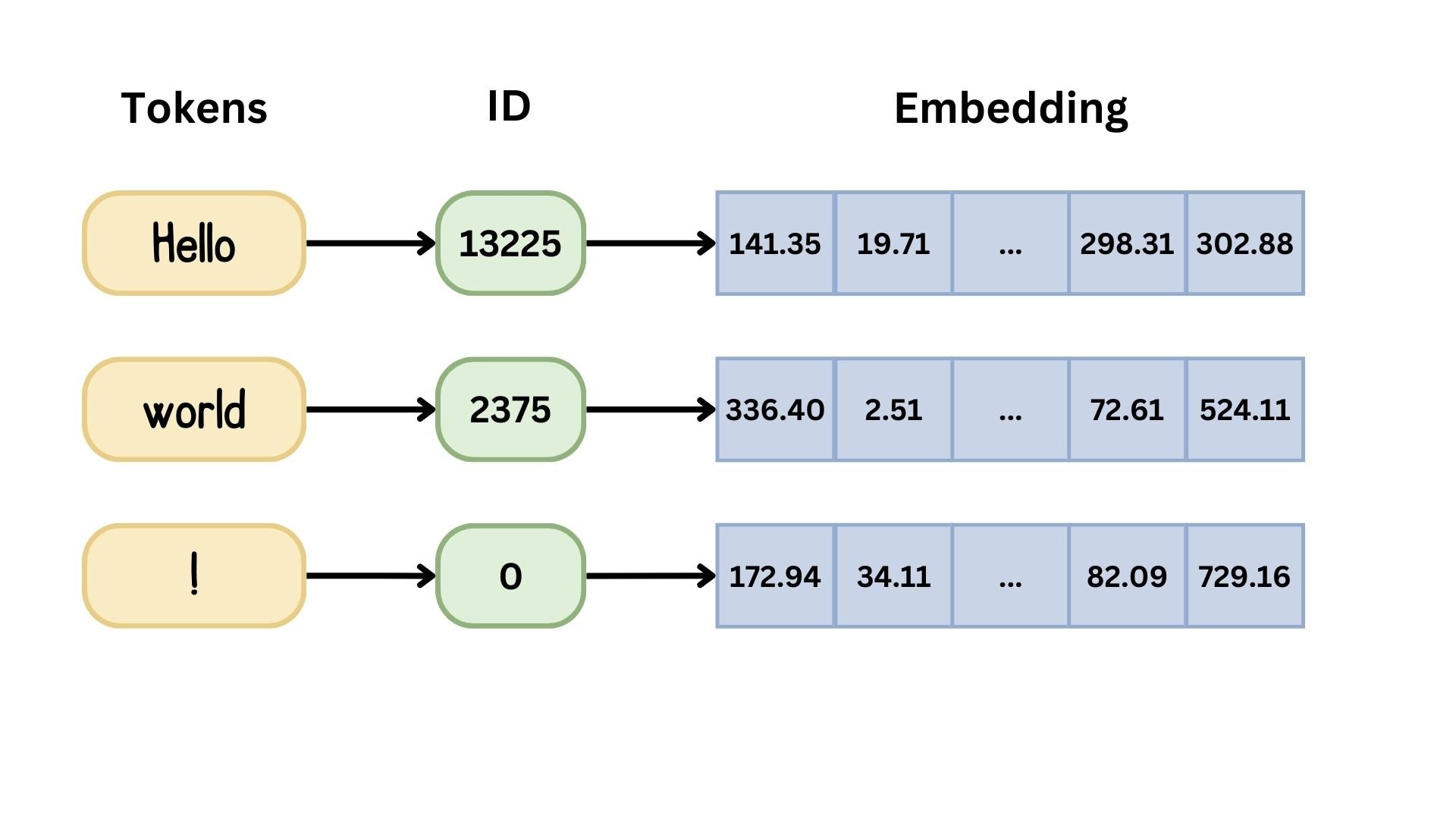

- Embedding: tokens → embedding vector

- Positional encoding: token position + embedding vector → position-aware embedding

Embedding sequences

    import torch
    import math
    import torch.nn as nn

    class InputEmbeddings(nn.Module):
        def __init__(self, vocab_size: int, d_model: int) -> None:
            super().__init__()
            self.d_model = d_model
            self.vocab_size = vocab_size
            self.embedding = nn.Embedding(vocab_size, d_model)

        def forward(self, x):
            return self.embedding(x) * math.sqrt(self.d_model)

- Standard practice: scale the embeddings by $\sqrt{d_{model}}$
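To make the lookup-and-scale step concrete, here is a minimal sketch in plain Python with no PyTorch dependency. The helper names `make_embedding_table` and `embed` are hypothetical, and the table holds random values rather than learned weights; the sketch only illustrates that an embedding is a row lookup followed by multiplication by $\sqrt{d_{model}}$.

```python
import math
import random


def make_embedding_table(vocab_size, d_model, seed=0):
    """Hypothetical stand-in for learned weights: one random row per token ID."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(d_model)] for _ in range(vocab_size)]


def embed(token_ids, table):
    """Look up each token's row, then scale it by sqrt(d_model)."""
    d_model = len(table[0])
    scale = math.sqrt(d_model)
    return [[value * scale for value in table[tok]] for tok in token_ids]


table = make_embedding_table(vocab_size=10, d_model=4)
vectors = embed([1, 2, 1], table)

print(len(vectors), len(vectors[0]))  # sequence length, d_model
print(vectors[0] == vectors[2])       # the same token ID gives the same vector
```

Because the lookup ignores position, the repeated token ID 1 produces identical vectors at both places in the sequence, which is why a separate positional signal is needed.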

Creating embeddings

    embedding_layer = InputEmbeddings(vocab_size=10_000, d_model=512)
    embedded_output = embedding_layer(torch.tensor([[1, 2, 3, 4], [5, 6, 7, 8]]))
    print(embedded_output.shape)

torch.Size([2, 4, 512])

Positional encoding

Even dimensions (sine):

$$ PE_{(pos, 2i)} = \sin\left(\frac{pos}{10000^{2i/d_{model}}}\right) $$

Odd dimensions (cosine):

$$ PE_{(pos, 2i+1)} = \cos\left(\frac{pos}{10000^{2i/d_{model}}}\right) $$
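The two formulas can be evaluated directly, one entry at a time. The following is a rough sketch in plain Python; the function name `positional_encoding` and the tiny sizes are assumptions chosen for illustration. The loop variable `even_dim` plays the role of $2i$ in the formulas.

```python
import math


def positional_encoding(max_seq_length, d_model):
    """Fill a max_seq_length x d_model table using the sine/cosine formulas."""
    pe = [[0.0] * d_model for _ in range(max_seq_length)]
    for pos in range(max_seq_length):
        for even_dim in range(0, d_model, 2):  # even_dim corresponds to 2i
            angle = pos / (10000 ** (even_dim / d_model))
            pe[pos][even_dim] = math.sin(angle)          # PE(pos, 2i)
            if even_dim + 1 < d_model:
                pe[pos][even_dim + 1] = math.cos(angle)  # PE(pos, 2i+1)
    return pe


pe = positional_encoding(max_seq_length=3, d_model=4)
for pos, row in enumerate(pe):
    print(pos, [round(value, 4) for value in row])
```

At position 0 every angle is zero, so the row alternates between 0 (sine) and 1 (cosine); later positions rotate each sine/cosine pair at a frequency that decreases with the dimension index.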

Building a positional encoder

    class PositionalEncoding(nn.Module):
        def __init__(self, d_model, max_seq_length):
            super().__init__()
            pe = torch.zeros(max_seq_length, d_model)
            position = torch.arange(0, max_seq_length, dtype=torch.float).unsqueeze(1)
            div_term = torch.exp(
                torch.arange(0, d_model, 2, dtype=torch.float) * -(math.log(10000.0) / d_model)
            )
            pe[:, 0::2] = torch.sin(position * div_term)
            pe[:, 1::2] = torch.cos(position * div_term)
            self.register_buffer('pe', pe.unsqueeze(0))

        def forward(self, x):
            return x + self.pe[:, :x.size(1)]
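The `div_term` expression in the class can look unrelated to the formula's denominator, but $\exp(-2i \cdot \ln(10000)/d_{model})$ is just $1/10000^{2i/d_{model}}$ written with an exponential and a logarithm. The sketch below checks this numerically in plain Python; `d_model = 8` is an arbitrary illustrative size.

```python
import math

d_model = 8  # small illustrative size, not the 512 used in the slides
even_dims = list(range(0, d_model, 2))  # the values taken by 2i

# Form used in the class: exp(2i * -(log(10000) / d_model))
via_exp = [math.exp(dim * -(math.log(10000.0) / d_model)) for dim in even_dims]

# Form written in the formula: 1 / 10000^(2i / d_model)
direct = [1.0 / (10000.0 ** (dim / d_model)) for dim in even_dims]

for dim, a, b in zip(even_dims, via_exp, direct):
    print(dim, a, b, math.isclose(a, b, rel_tol=1e-9))
```

Multiplying `position` by this term is therefore the same as dividing `pos` by $10000^{2i/d_{model}}$.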

Creating positional encodings

    pos_encoding_layer = PositionalEncoding(d_model=512, max_seq_length=4)
    pos_encoded_output = pos_encoding_layer(embedded_output)
    print(pos_encoded_output.shape)

torch.Size([2, 4, 512])
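The output shape matches the input because the stored encoding has a leading dimension of 1 and is broadcast across the batch. As a small sketch of that slicing and broadcasting step, the example below uses a random tensor as a stand-in for the registered `pe` buffer and a zero tensor as a stand-in for the embeddings; the sizes are assumptions chosen to keep the output readable.

```python
import torch

d_model, max_seq_length = 8, 6

# Stand-in for the registered buffer, shape (1, max_seq_length, d_model)
pe = torch.randn(1, max_seq_length, d_model)

# Stand-in for a batch of embeddings: 2 sequences of 4 tokens each
x = torch.zeros(2, 4, d_model)

# Same expression as in forward(): slice to the sequence length, then add
out = x + pe[:, :x.size(1)]

print(out.shape)
print(torch.equal(out[0], out[1]))  # both sequences receive the same encoding
```

The slice only shortens the buffer, so in this sketch `max_seq_length` needs to be at least as long as the input sequence for the addition to work.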

Let's practice!
