Querying AI models with Databricks

Databricks with the Python SDK

Avi Steinberg

Senior Software Engineer

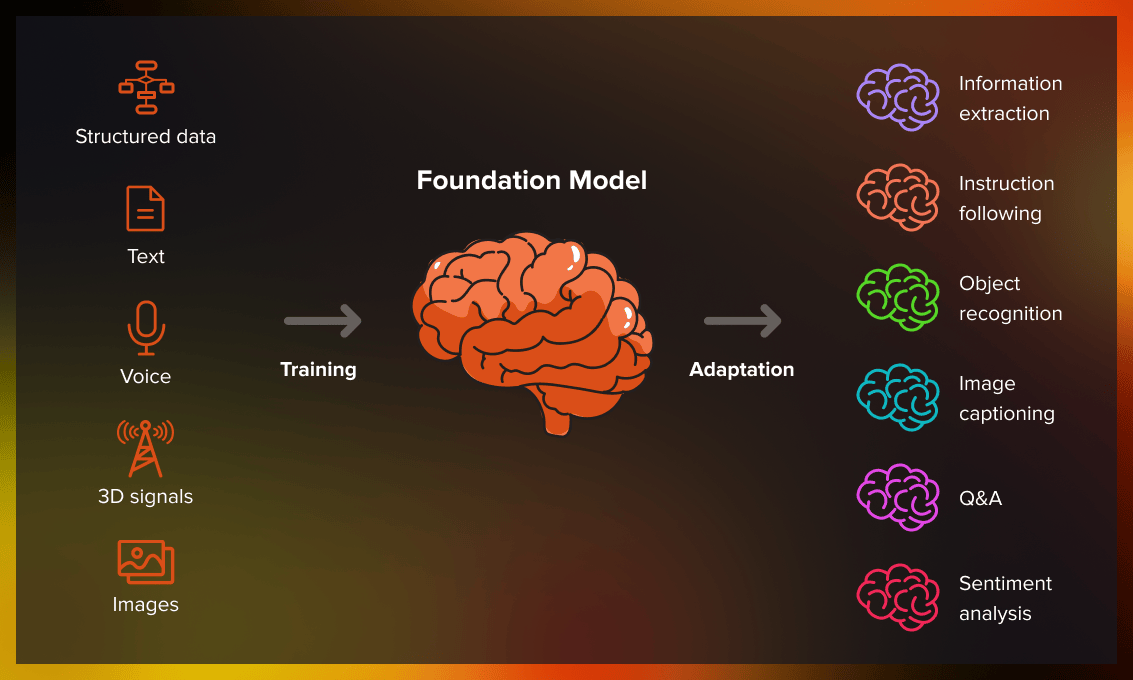

Foundation Models

- Large, pre-trained neural networks

- Trained on a broad range of large datasets

- Hosted on Databricks or outside Databricks

1 https://docs.databricks.com/aws/en/machine-learning/model-serving/foundation-model-overview

Popular chat completion models

Hosted outside of Databricks:

- OpenAI's GPT

- Anthropic's Claude

- Google Cloud's Gemini

Hosted on Databricks:

- Meta Llama

Databricks Meta Llama

- Meta partnered with Databricks to host Meta Llama on Databricks

- Large Language Model (LLM), built and trained by Meta

- Competitive with OpenAI's GPT models in terms of performance

1 https://docs.databricks.com/aws/en/machine-learning/foundation-model-apis/supported-models#meta-llama-3-3-70b-instruct

2 https://www.databricks.com/product/pricing/foundation-model-serving

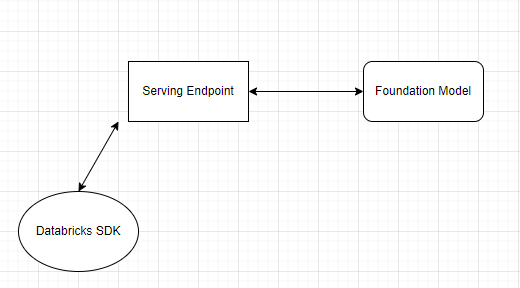

Serving endpoints

- Already exist for Foundation models hosted on Databricks

- Can be created for external and custom models

- Can be queried with the Databricks SDK to reach Foundation Models

1 https://docs.databricks.com/api/workspace/servingendpoints

Serving endpoints API

- Lets you query AI models by making API requests to the corresponding serving endpoint

- Accessed via the serving_endpoints attribute of the WorkspaceClient

1 https://docs.databricks.com/api/workspace/servingendpoints
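As a rough sketch of what "accessed via the serving_endpoints attribute" can look like in practice, the helper below collects the names of the serving endpoints a client can see. It assumes the databricks-sdk package is installed and that workspace authentication is already configured. `endpoint_names` and `list_workspace_endpoints` are hypothetical helper names used for illustration, not part of the SDK.

```python
def endpoint_names(client):
    """Return the sorted names of the serving endpoints visible to `client`.

    `client` only needs to expose `serving_endpoints.list()`, as
    WorkspaceClient does. (Hypothetical helper, not an SDK function.)
    """
    return sorted(endpoint.name for endpoint in client.serving_endpoints.list())


def list_workspace_endpoints():
    """Connect with default credentials and print each endpoint name."""
    # Imported lazily so endpoint_names() can be used without the SDK installed.
    from databricks.sdk import WorkspaceClient

    w = WorkspaceClient()  # assumes authentication is already configured
    for name in endpoint_names(w):
        print(name)
```

Calling `list_workspace_endpoints()` in a configured workspace should print the pre-provisioned Foundation Model endpoints alongside any external or custom ones you have created.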

Chat message roles

SYSTEM:

Instructions for how the model should respond to queries

Example prompt:

"You are a helpful Python coding assistant."

USER:

Queries sent to the model by the user

Example prompt:

"How do for loops work in Python?"

1 https://databricks-sdk-py.readthedocs.io/en/stable/dbdataclasses/serving.html#databricks.sdk.service.serving.ChatMessageRole
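To make the two roles concrete, here is a small sketch that pairs a system instruction with a user query. It keeps the messages as plain dicts first, then converts them to SDK objects. `build_messages` and `to_chat_messages` are hypothetical helper names. The conversion assumes the ChatMessageRole enum values are the lowercase strings "system" and "user".

```python
def build_messages(system_prompt, user_prompt):
    """Pair a system instruction with a user query as role/content dicts."""
    return [
        {"role": "system", "content": system_prompt},  # how the model should behave
        {"role": "user", "content": user_prompt},      # what the user is asking
    ]


def to_chat_messages(messages):
    """Convert role/content dicts into SDK ChatMessage objects."""
    # Imported lazily so build_messages() works without the SDK installed.
    from databricks.sdk.service.serving import ChatMessage, ChatMessageRole

    # Assumption: ChatMessageRole("system") resolves to ChatMessageRole.SYSTEM.
    return [
        ChatMessage(role=ChatMessageRole(m["role"]), content=m["content"])
        for m in messages
    ]


messages = build_messages(
    "You are a helpful Python coding assistant.",
    "How do for loops work in Python?",
)
```

The system message comes first so the model reads its instructions before the user's question.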

Querying chat models with Databricks

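    from databricks.sdk import WorkspaceClient
    from databricks.sdk.service.serving import ChatMessage, ChatMessageRole

    # Create a client using the default authentication configuration
    w = WorkspaceClient()

    # Send a system instruction and a user question to the Llama endpoint
    response = w.serving_endpoints.query(
        name="databricks-meta-llama-3-3-70b-instruct",
        messages=[
            ChatMessage(
                role=ChatMessageRole.SYSTEM, content="You are a helpful assistant."
            ),
            ChatMessage(role=ChatMessageRole.USER, content="<your-question>"),
        ],
        max_tokens=128,  # upper bound on the length of the generated reply
    )

    # The reply text is in the first returned choice
    print(f"RESPONSE:\n{response.choices[0].message.content}")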

1 https://docs.databricks.com/aws/en/machine-learning/foundation-model-apis/supported-models#meta-llama-33-70b-instruct
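If you query the model more than once, it may help to wrap the call and the response handling in small functions. The sketch below is one possible way to do that, not the only one. `first_reply` and `ask` are hypothetical helper names, and the default endpoint name is simply the one used in the query shown on this slide.

```python
def first_reply(response):
    """Return the text of the first choice, or None if there are no choices."""
    choices = getattr(response, "choices", None) or []
    if not choices:
        return None
    return choices[0].message.content


def ask(client, question,
        endpoint="databricks-meta-llama-3-3-70b-instruct",
        system_prompt="You are a helpful assistant.",
        max_tokens=128):
    """Send one question to a chat endpoint and return the reply text."""
    # Imported lazily so first_reply() can be used without the SDK installed.
    from databricks.sdk.service.serving import ChatMessage, ChatMessageRole

    response = client.serving_endpoints.query(
        name=endpoint,
        messages=[
            ChatMessage(role=ChatMessageRole.SYSTEM, content=system_prompt),
            ChatMessage(role=ChatMessageRole.USER, content=question),
        ],
        max_tokens=max_tokens,
    )
    return first_reply(response)
```

With a configured WorkspaceClient stored in `w`, a call such as `ask(w, "How do for loops work in Python?")` would return the reply as a string, or None if the response has no choices.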

Let's practice!
