The Llama fine-tuning libraries

Fine-Tuning with Llama 3

Francesca Donadoni

Curriculum Manager, DataCamp

When to use fine-tuning

- Pre-trained model

- Uses specialized data

- Improve accuracy

- Reduce bias

- Improve knowledge base

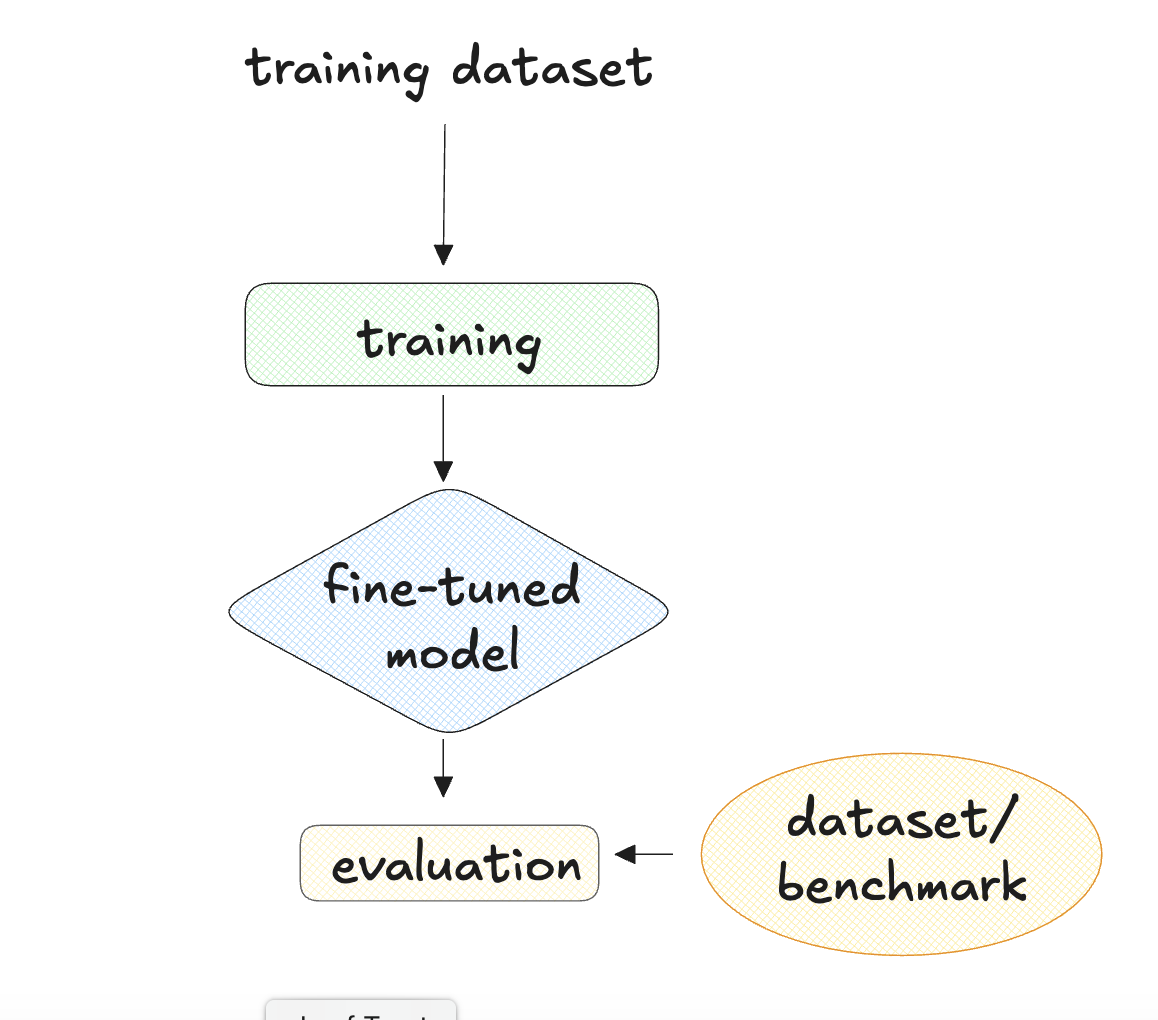

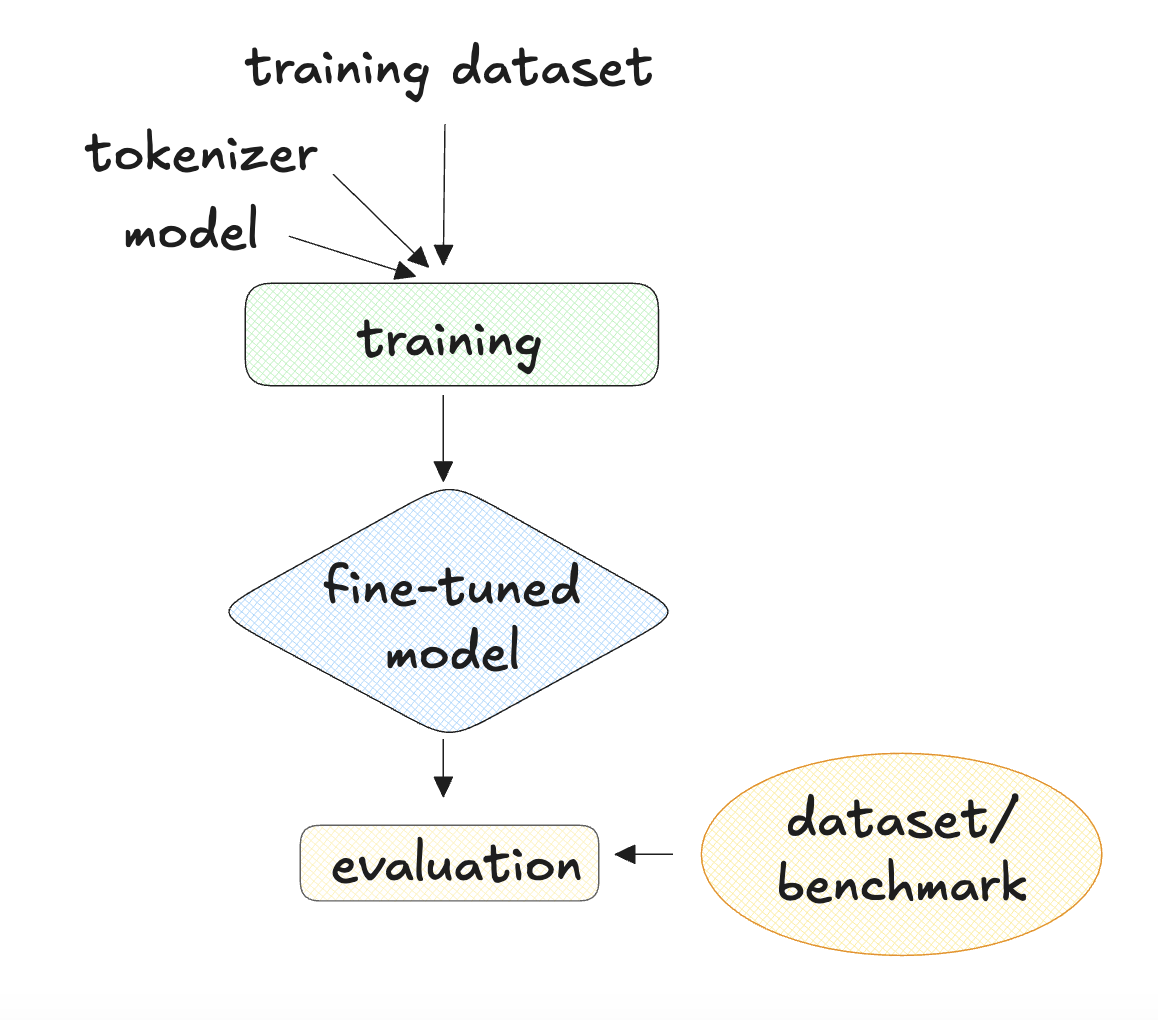

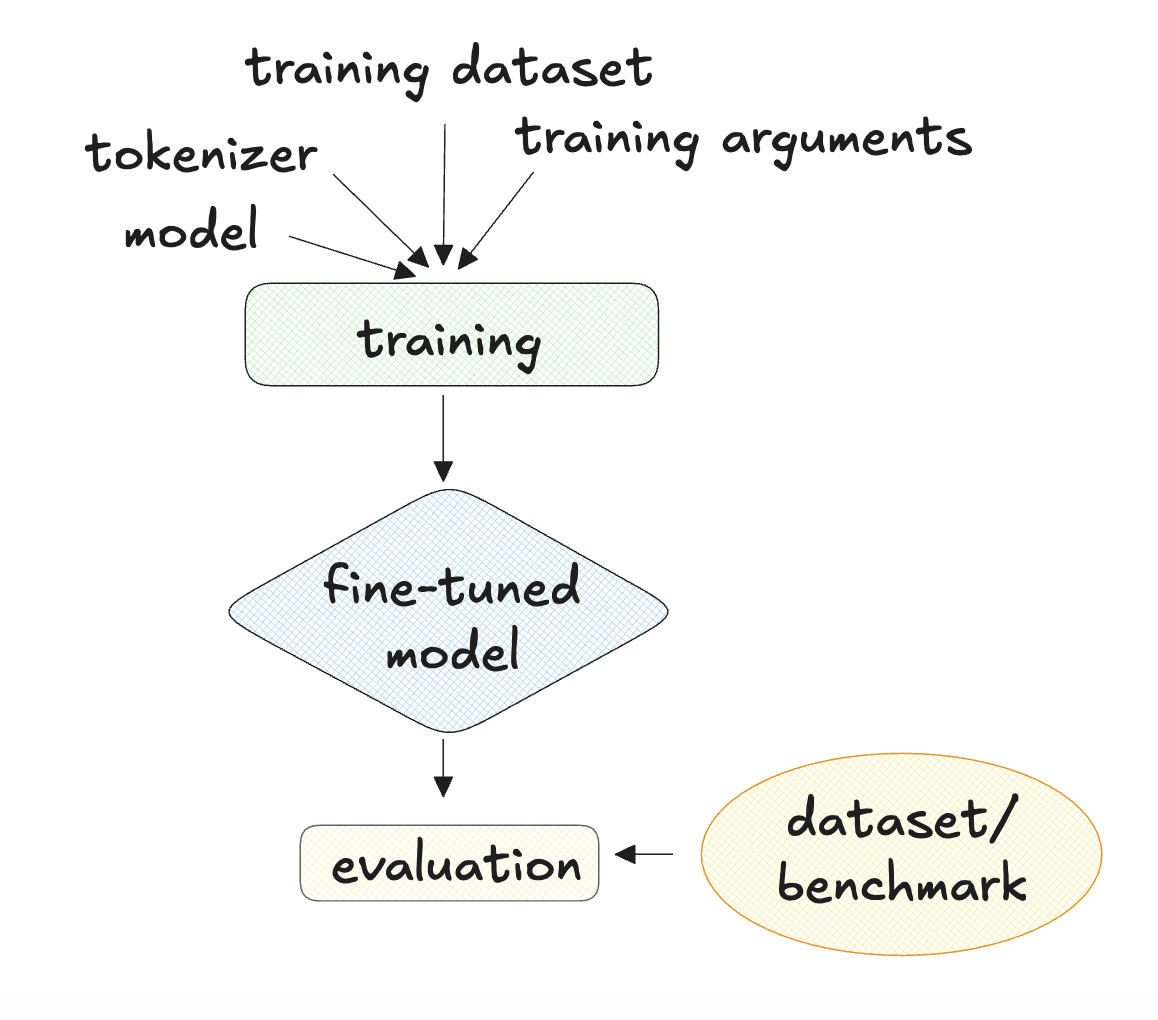

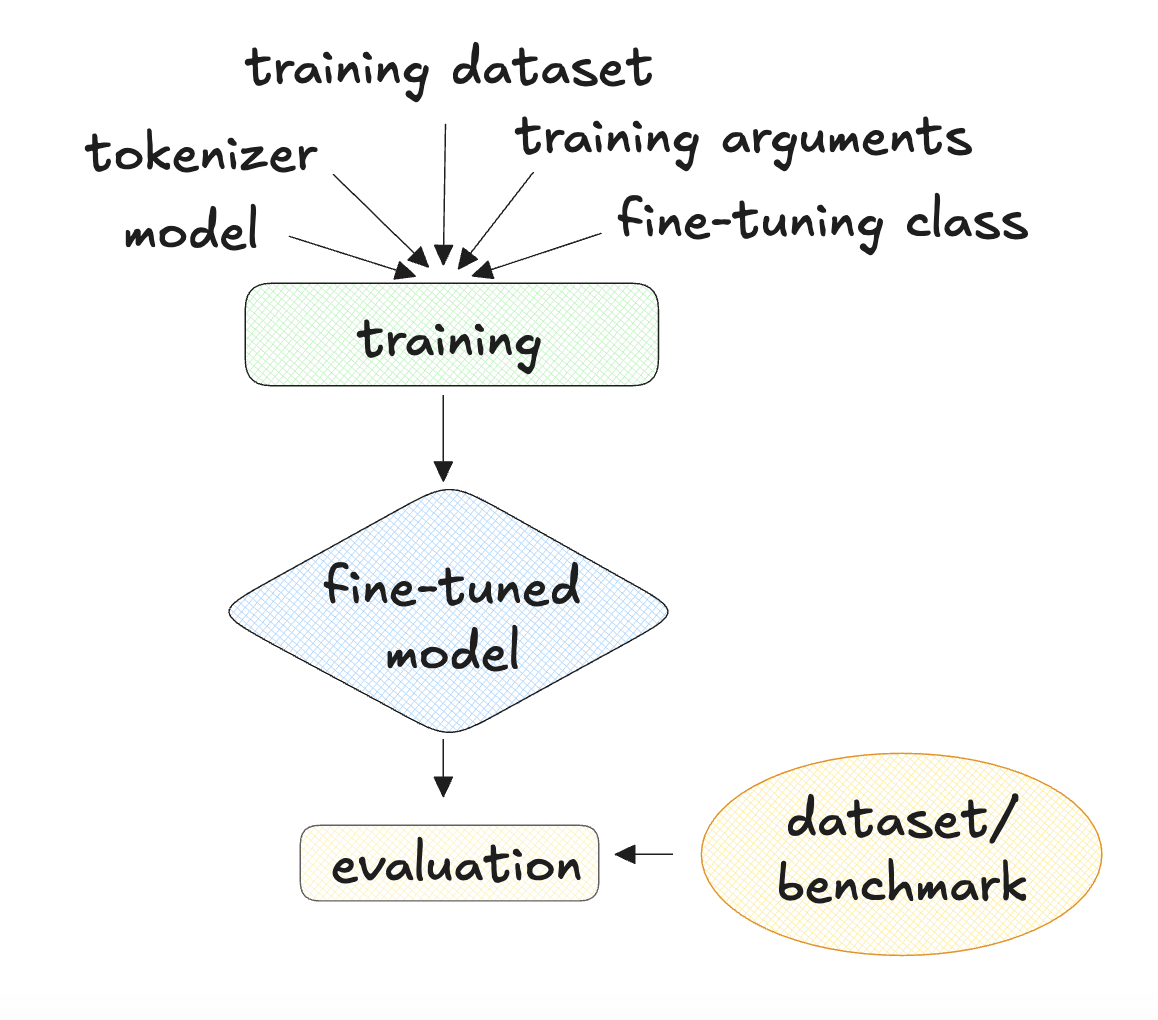

How to use fine-tuning

- Quality of the data

- Model's capacity

- Task definition

- Fine-tuning process

- New model

- Evaluation

The Llama fine-tuning libraries

- 📚 Several libraries for fine-tuning

- 🦙 TorchTune for Llama fine-tuning

- 🚀 Launching a fine-tuning task with TorchTune

Options for Llama fine-tuning

- TorchTune
  - Based on configurable templates
  - Ideal for: scaling quickly

- SFTTrainer from Hugging Face
  - Access to other LLMs
  - Ideal for: fine-tuning multiple models

- Unsloth
  - Efficient memory usage
  - Ideal for: limited hardware

- Axolotl
  - Modular approach
  - Ideal for: avoiding extensive reconfiguration

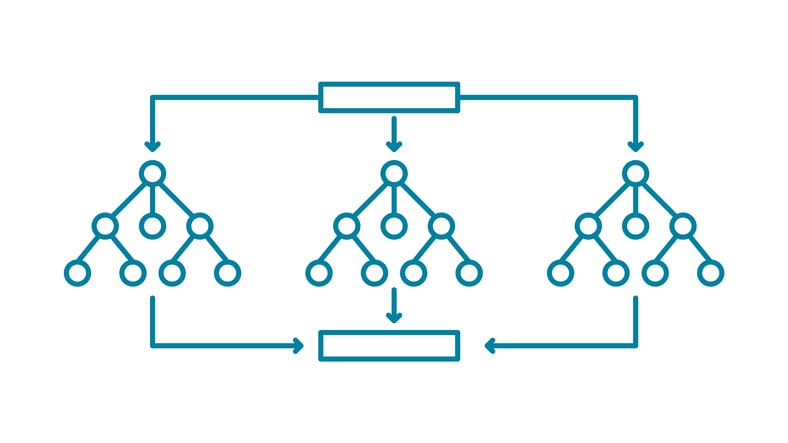

TorchTune and the recipes for fine-tuning

TorchTune recipes:

- Modular templates

- Configurable to be adapted to different projects

- Keep code organized

- Ensure reproducibility

TorchTune list

- Run from a terminal

- Environment with Python

- Install TorchTune

pip3 install torchtune

- List available recipes

tune ls

- Use ! if using IPython

!tune ls
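When the listing is needed from a script rather than a terminal, the same command can be called from Python. The following is a minimal sketch that assumes only the standard library; the helper name `list_recipes` is hypothetical and is not part of TorchTune.

```python
import shutil
import subprocess


def list_recipes(executable="tune"):
    """Return the text printed by `tune ls`, or None if the CLI is missing.

    `list_recipes` is a hypothetical helper for illustration only.
    """
    # shutil.which returns None when the executable is not on PATH,
    # which usually means `pip3 install torchtune` has not been run
    # in the active environment.
    if shutil.which(executable) is None:
        return None
    # Equivalent to typing `tune ls` in a terminal.
    result = subprocess.run([executable, "ls"], capture_output=True, text=True)
    return result.stdout
```

Calling `list_recipes()` returns the listing as a string when TorchTune is installed, and `None` otherwise, so a script can print an installation hint instead of failing.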

TorchTune list

!tune ls

- Output:

| RECIPE | CONFIG |
| --- | --- |
| full_finetune_single_device | llama3/8B_full_single_device |
| | llama3_1/8B_full_single_device |
| | llama3_2/1B_full_single_device |
| | llama3_2/3B_full_single_device |
| full_finetune_distributed | llama3/8B_full |
| | llama3_1/8B_full |
| | llama3_2/1B_full |
| ... | |
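The listing pairs each recipe with one or more configs. A rough sketch of how such a two-column listing could be turned into a dictionary is shown below. It assumes that recipe names start at the beginning of a line and that additional configs appear on indented continuation lines; the real layout may differ between TorchTune versions. `SAMPLE` reproduces only the excerpt shown above, and `parse_listing` is a hypothetical helper.

```python
# Excerpt of the listing shown above, laid out as two aligned columns.
SAMPLE = """\
RECIPE                         CONFIG
full_finetune_single_device    llama3/8B_full_single_device
                               llama3_1/8B_full_single_device
                               llama3_2/1B_full_single_device
                               llama3_2/3B_full_single_device
full_finetune_distributed      llama3/8B_full
                               llama3_1/8B_full
                               llama3_2/1B_full
"""


def parse_listing(text):
    """Map each recipe name to the list of configs listed for it.

    Hypothetical helper: assumes indented lines continue the previous recipe.
    """
    recipes = {}
    current = None
    for line in text.splitlines():
        parts = line.split()
        # Skip blank lines and the header row.
        if not parts or parts[0] == "RECIPE":
            continue
        if line[0].isspace():
            # Indented line: more configs for the current recipe.
            if current is not None:
                recipes[current].extend(parts)
        else:
            # Unindented line: a new recipe, optionally followed by a config.
            current = parts[0]
            recipes[current] = parts[1:]
    return recipes


recipes = parse_listing(SAMPLE)
print(sorted(recipes))
```

A lookup such as `recipes["full_finetune_distributed"]` then gives the configs that can be passed to that recipe.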

TorchTune run

- Use recipe + --config + configuration

- Run fine-tuning

tune run full_finetune_single_device --config llama3_1/8B_full_single_device

- Parameters

- device=cpu or device=cuda

- epochs=<int> (<int> is 0 or a positive integer)
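To make the pieces of the command explicit, here is a small sketch that assembles a `tune run` command line from a recipe, a config, and the two parameters listed above. It only builds and prints the command and does not launch anything. The function name `build_tune_command` is hypothetical, and appending the parameters as `key=value` after the config is an assumption based on the parameter list above.

```python
import shlex


def build_tune_command(recipe, config, device="cpu", epochs=0):
    """Build the argument list for `tune run` (hypothetical helper)."""
    # The parameters above allow only these two device values.
    if device not in ("cpu", "cuda"):
        raise ValueError("device must be 'cpu' or 'cuda'")
    # epochs must be 0 or a positive integer (booleans are rejected).
    if isinstance(epochs, bool) or not isinstance(epochs, int) or epochs < 0:
        raise ValueError("epochs must be 0 or a positive integer")
    return [
        "tune", "run", recipe,
        "--config", config,
        f"device={device}",
        f"epochs={epochs}",
    ]


# Recipe and config taken from the `tune ls` listing.
cmd = build_tune_command(
    "full_finetune_single_device",
    "llama3_1/8B_full_single_device",
    device="cpu",
    epochs=0,
)
print(shlex.join(cmd))
# prints: tune run full_finetune_single_device --config llama3_1/8B_full_single_device device=cpu epochs=0
```

The resulting list could be passed to `subprocess.run(cmd)` to start the job from Python, or the printed string can be pasted into a terminal.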

Let's practice!
