Preprocessing data for fine-tuning

Fine-Tuning with Llama 3

Francesca Donadoni

Curriculum Manager, DataCamp

Using datasets for fine-tuning

Quality of the data is key

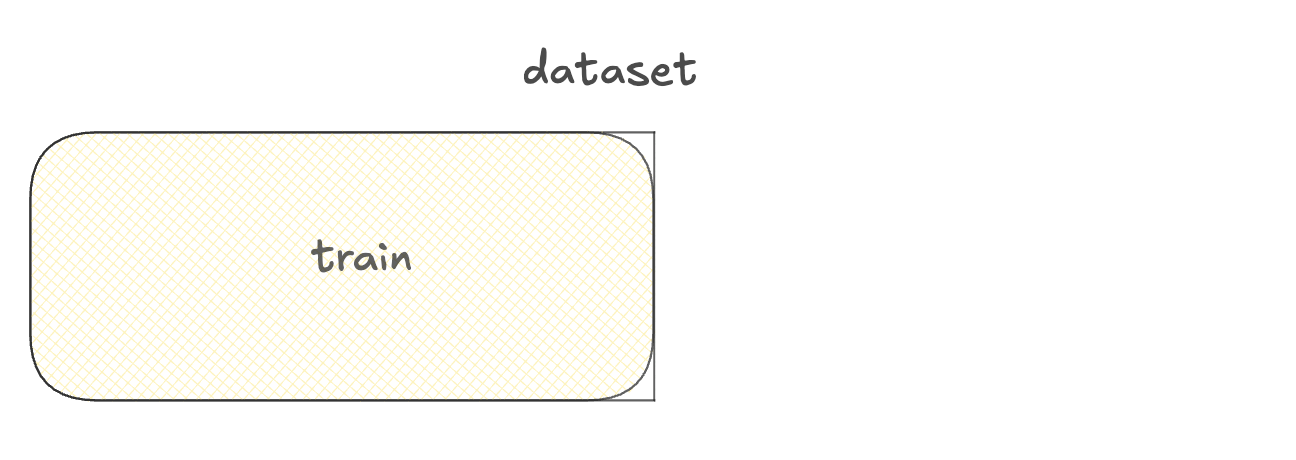

Training Set:

- For model training

- Majority of the data


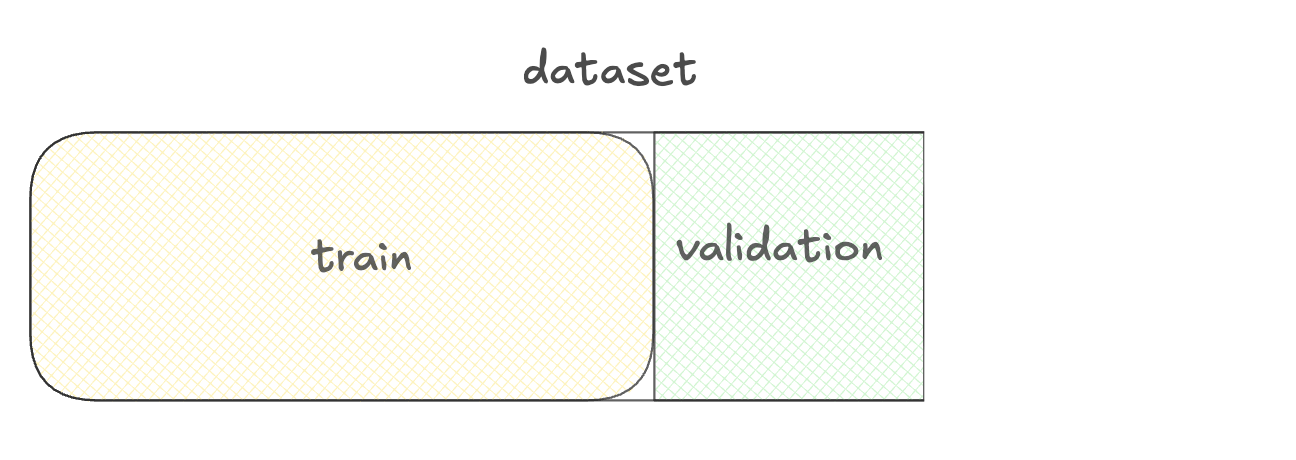

Validation Set:

- For selecting the best model version

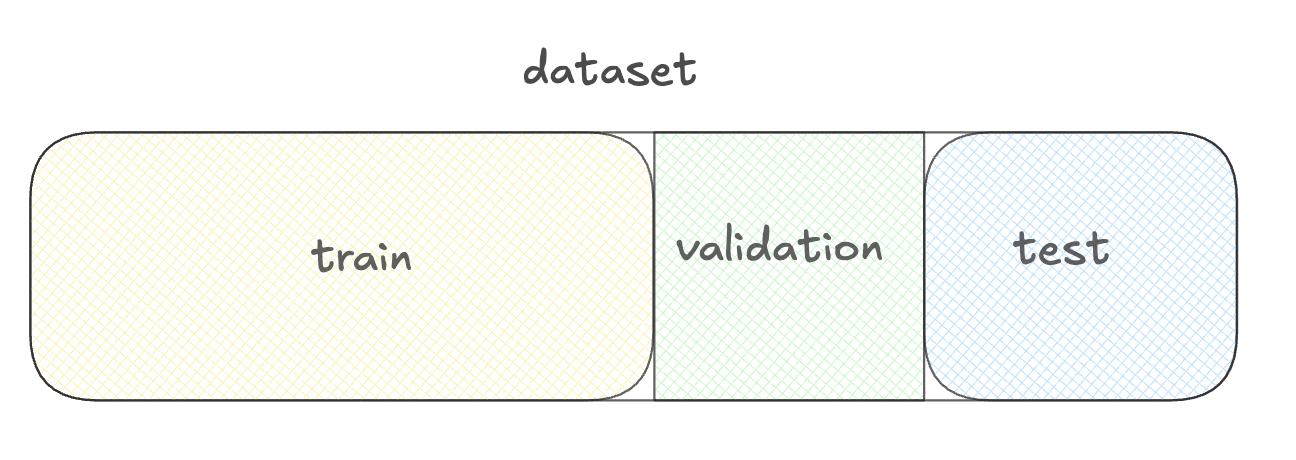


Test Set:

- For evaluating the final model's performance on unseen data

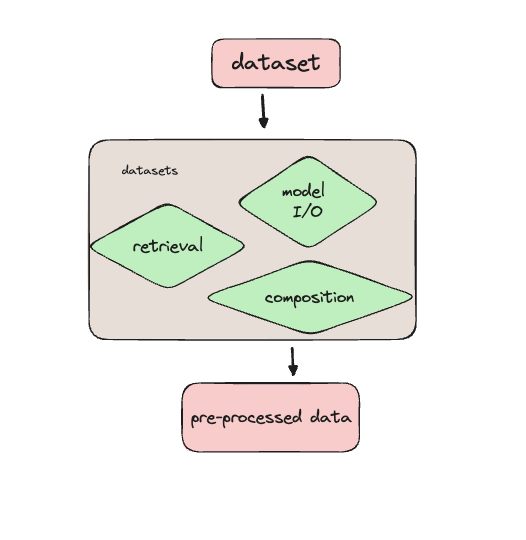

Preparing data using the datasets library

- The Hugging Face datasets library helps with:

  - Preprocessing

  - Splitting

  - Loading

  - Managing memory

Loading a customer service dataset

from datasets import load_dataset

ds = load_dataset(
    'bitext/Bitext-customer-support-llm-chatbot-training-dataset',
    split="train")
print(ds.column_names)

['flags', 'instruction', 'category', 'intent', 'response']

Peeking into the data

import pprint
pprint.pprint(ds[0])

{'category': 'ORDER',
 'flags': 'B',
 'instruction': 'question about cancelling order {{Order Number}}',
 'intent': 'cancel_order',
 'response': "I've understood you have a question regarding canceling order "
             "{{Order Number}}, and I'm here to provide you with the "
             'information you need. Please go ahead and ask your question, and '
             "I'll do my best to assist you."}

Filtering the dataset

from datasets import load_dataset, Dataset

ds = load_dataset(
    'bitext/Bitext-customer-support-llm-chatbot-training-dataset',
    split="train")
print(ds.shape)

(26872, 5)

# Slicing returns a dict of column lists; rebuild a Dataset from the first 1,000 rows
first_thousand_points = ds[:1000]
ds = Dataset.from_dict(first_thousand_points)

Preprocessing the dataset

def merge_example(row):
    # Combine the customer query and the agent response into one text field
    row['conversation'] = f"Query: {row['instruction']}\nResponse: {row['response']}"
    return row

ds = ds.map(merge_example)
print(ds[0]['conversation'])

Query: question about cancelling order {{Order Number}}
Response: I've understood you have a question regarding canceling order {{Order Number}}, and I'm here to provide you with the information you need. Please go ahead and ask your question, and I'll do my best to assist you.

Saving the preprocessed dataset

ds.save_to_disk("preprocessed_dataset")

Saving the dataset (1/1 shards): 100% 26872/26872 [00:00<00:00, 383823.33 examples/s]

from datasets import load_from_disk
ds_preprocessed = load_from_disk("preprocessed_dataset")

Using Hugging Face datasets with TorchTune

- TorchTune can use Hugging Face datasets

- Set the dataset path and configuration options on the command line

tune run full_finetune_single_device --config llama3/8B_full_single_device \
  dataset=preprocessed_dataset dataset.split=train
