Efficient fine-tuning with LoRA

Fine-Tuning with Llama 3

Francesca Donadoni

Curriculum Manager, DataCamp

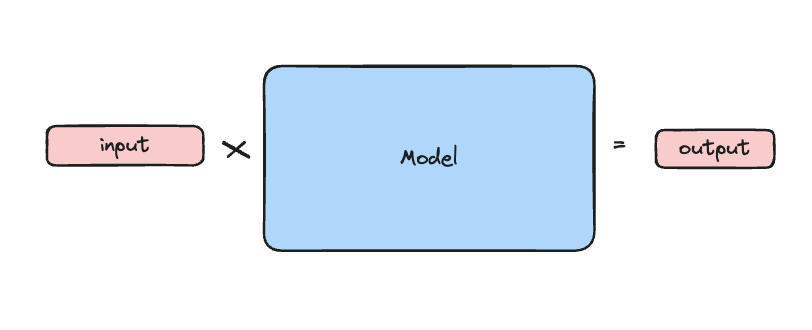

What happens when we train a model?

- Input tokens are converted into vectors

- The vectors are multiplied by the model's weight matrices

- The multiplication produces output vectors

- Errors in the output are used to update the model weights

- The larger the model, the more weights to update and the harder the training
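The steps above can be sketched with a toy example. This is a minimal illustration in plain Python, not how Llama is actually trained: a single 2x2 matrix stands in for the model, and the names `matvec`, `train_step`, the learning rate, and the data are all made up for the sketch.

```python
# Toy illustration: one weight matrix stands in for "the model".

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def train_step(W, x, target, lr=0.05):
    """One gradient-descent step on squared error; returns (new W, loss)."""
    y = matvec(W, x)                              # matrix multiplication -> output vector
    err = [yi - ti for yi, ti in zip(y, target)]  # error in the output
    loss = sum(e * e for e in err)
    # Gradient of the loss w.r.t. W[i][j] is 2 * err[i] * x[j];
    # every single weight receives an update.
    new_W = [[w - lr * 2 * e * xj for w, xj in zip(row, x)]
             for row, e in zip(W, err)]
    return new_W, loss

W = [[0.0, 0.0], [0.0, 0.0]]   # 2x2 "model" (4 weights)
x = [1.0, 2.0]                 # input vector (stands in for embedded tokens)
target = [1.0, -1.0]           # desired output vector

losses = []
for _ in range(20):
    W, loss = train_step(W, x, target)
    losses.append(loss)

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.2e}")
```

Here only 4 weights are updated per step. A model with billions of weights needs a gradient for each one, which is why model size drives training cost.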

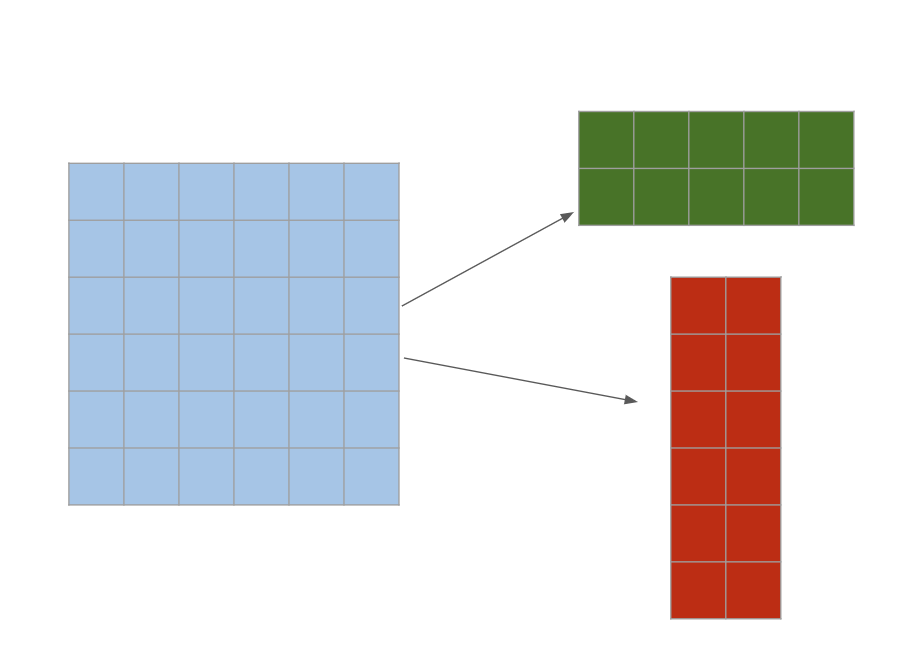

What is LoRA?

- Low-rank decomposition of the weight updates

- Reduces the number of trainable parameters

- Maintains performance

- Has a regularizing effect
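As a rough sketch of the low-rank idea: the original weight matrix W stays frozen, and a trainable update is expressed as the product of two small matrices, B and A, scaled by alpha / r. The sizes, the random values, and the helper names `matvec` and `lora_forward` below are assumptions chosen for illustration.

```python
import random

def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def lora_forward(W, A, B, x, alpha, r):
    """y = W x + (alpha / r) * B (A x); W is frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [b + scale * u for b, u in zip(base, update)]

random.seed(0)
d, r, alpha = 8, 2, 4   # illustrative sizes, far smaller than a real model

W = [[random.gauss(0, 1) for _ in range(d)] for _ in range(d)]     # frozen, d x d
A = [[random.gauss(0, 0.01) for _ in range(d)] for _ in range(r)]  # trainable, r x d
B = [[0.0] * r for _ in range(d)]                                  # trainable, d x r, starts at zero
x = [random.gauss(0, 1) for _ in range(d)]

y_base = matvec(W, x)
y_lora = lora_forward(W, A, B, x, alpha, r)

full_params = d * d           # weights updated by regular fine-tuning
lora_params = r * d + d * r   # weights updated by LoRA

print("same output before training:", y_lora == y_base)
print("trainable parameters:", lora_params, "instead of", full_params)
```

Because B starts at zero, the adapted model initially behaves exactly like the original one. The saving grows with the layer size: for a d x d layer, LoRA trains 2 * r * d values instead of d * d.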

How to implement LoRA using PEFT

    from peft import LoraConfig

    lora_config = LoraConfig(
        r=12,
        lora_alpha=32,
        lora_dropout=0.05,
        bias="none",
        task_type="CAUSAL_LM",
        target_modules=['q_proj', 'v_proj']
    )
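To get a feel for what `r=12` and `target_modules=['q_proj', 'v_proj']` imply, the number of added trainable parameters can be estimated by hand. The layer shapes and layer count below are assumptions for illustration only (they are not taken from the slides); read the real values from the model's config before relying on them. `lora_param_count` is a hypothetical helper.

```python
r = 12
lora_alpha = 32

# ASSUMED shapes (out_features, in_features) for the two targeted projections
# and an ASSUMED number of transformer layers; check the actual model config.
target_shapes = {"q_proj": (2048, 2048), "v_proj": (256, 2048)}
num_layers = 22

def lora_param_count(out_features, in_features, rank):
    """Parameters in one LoRA adapter: A is rank x in, B is out x rank."""
    return rank * in_features + out_features * rank

per_layer = sum(lora_param_count(o, i, r) for o, i in target_shapes.values())
total = per_layer * num_layers

# The low-rank update is multiplied by lora_alpha / r before being added.
scaling = lora_alpha / r

print(f"LoRA parameters per layer: {per_layer:,}")
print(f"LoRA parameters in total:  {total:,}")
print(f"scaling factor: {scaling:.3f}")
```

Under these assumed shapes the adapters add well under two million trainable parameters, a small fraction of a model with more than a billion weights.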

Integrating LoRA configuration in training

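    from trl import SFTTrainer

    # model, ds, tokenizer and training_arguments are defined earlier
    trainer = SFTTrainer(
        model=model,
        train_dataset=ds,
        max_seq_length=250,
        dataset_text_field='conversation',
        tokenizer=tokenizer,
        args=training_arguments,
        peft_config=lora_config,  # apply the LoRA configuration
    )
    trainer.train()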

LoRA vs regular fine-tuning

TinyLlama/TinyLlama-1.1B-Chat-v1.0

- 1.1 billion parameters

- 11k samples

- ~30 minutes

nvidia/Llama3-ChatQA-1.5-8B

- 8 billion parameters

- 11k samples

- ~30 minutes

Let's practice!
