Managing data with LightningDataModule

Scalable AI Models with PyTorch Lightning

Sergiy Tkachuk

Director, GenAI Productivity

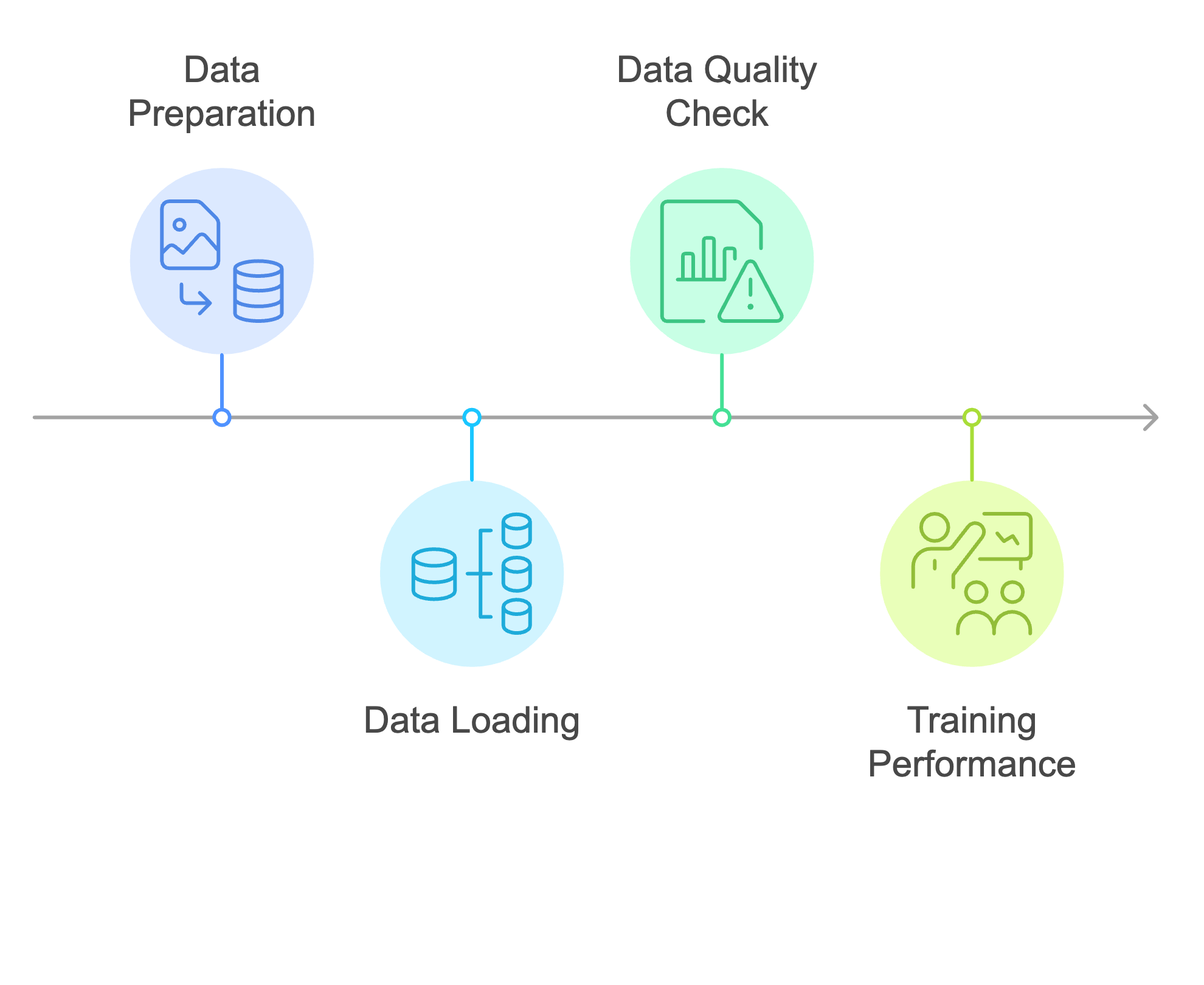

Data preparation for model training

- Poorly prepared data results in training issues:
  - Slow training speeds
  - Frequent interruptions
  - Convergence failure

Why use LightningDataModule?

- 📂 Centralizes dataset handling

- 📊 Standardizes data preparation workflows

- 🚀 Simplifies training and evaluation phases

Managing data with LightningDataModule

Key methods:

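- prepare_data: Download the dataset to disk. Lightning runs it in a single process, so it should only download or write data, not assign state such as self.train_data.

- setup: Split the data into training, validation, and test sets. Lightning calls it on every process (for example, once per GPU), passing a stage argument such as "fit" or "test".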

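```python
import pytorch_lightning as pl
from torch.utils.data import random_split
from torchvision import datasets, transforms

class ImageDataModule(pl.LightningDataModule):
    def __init__(self, data_dir="./data", batch_size=32):
        super().__init__()
        self.data_dir = data_dir
        self.batch_size = batch_size
        # Assumed minimal transform: convert PIL images to tensors
        self.transform = transforms.ToTensor()

    def prepare_data(self):
        # Download both splits once; setup() later loads them from disk
        datasets.MNIST(self.data_dir, train=True, download=True)
        datasets.MNIST(self.data_dir, train=False, download=True)

    def setup(self, stage=None):
        # Split the 60,000 MNIST training images into 55,000 train / 5,000 validation
        dataset = datasets.MNIST(self.data_dir, train=True, transform=self.transform)
        self.train_data, self.val_data = random_split(dataset, [55000, 5000])
        self.test_data = datasets.MNIST(self.data_dir, train=False, transform=self.transform)
```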

Creating the train DataLoader

- Supplies batches of training data

- Helps optimize GPU utilization

- Enables efficient iteration over large datasets

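```python
# Method of ImageDataModule (DataLoader comes from torch.utils.data)
def train_dataloader(self):
    # Shuffle so each epoch sees the training samples in a new order
    return DataLoader(self.train_data, batch_size=self.batch_size, shuffle=True)
```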
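Here is a minimal sketch that makes these bullets concrete, using a hypothetical 100-sample random dataset. It shows how batch_size determines the number of batches, with the last batch holding the remainder. It also marks two standard DataLoader options commonly used to keep a GPU fed: num_workers for background loading and pin_memory for faster host-to-GPU copies. The values chosen are assumptions, not tuned settings.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical dataset: 100 single-channel 28x28 images with labels 0-9
dataset = TensorDataset(torch.randn(100, 1, 28, 28), torch.randint(0, 10, (100,)))

loader = DataLoader(
    dataset,
    batch_size=32,
    shuffle=True,                          # reshuffle sample order every epoch
    num_workers=0,                         # raise (e.g. 4) to load batches in worker processes
    pin_memory=torch.cuda.is_available(),  # page-locked memory speeds host-to-GPU copies
)

print(len(loader))  # 4 batches: 32 + 32 + 32 + 4
for images, labels in loader:
    print(images.shape, labels.shape)
```

Passing drop_last=True would discard the final 4-sample batch instead.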

Creating the validation DataLoader

- Supplies data for model validation

- Helps monitor generalization performance

- Ensures consistency across evaluation runs by keeping a fixed order (no shuffling)

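```python
# Method of ImageDataModule (DataLoader comes from torch.utils.data)
def val_dataloader(self):
    # shuffle defaults to False, so every validation pass uses the same order
    return DataLoader(self.val_data, batch_size=self.batch_size)
```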
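A quick check with a hypothetical 50-sample validation set shows why the validation loader leaves shuffle at its default of False. Two passes yield the batches in exactly the same order, so per-batch values are comparable across epochs and runs.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical validation set: 50 samples with labels 0-9
val_data = TensorDataset(torch.randn(50, 1, 28, 28), torch.randint(0, 10, (50,)))

# shuffle defaults to False, so every pass visits samples in the same order
val_loader = DataLoader(val_data, batch_size=32)

first_pass = [labels for _, labels in val_loader]
second_pass = [labels for _, labels in val_loader]
same_order = all(torch.equal(a, b) for a, b in zip(first_pass, second_pass))
print(same_order)  # True
```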

Creating the test DataLoader

- Supplies data for final model evaluation after training is complete

- Simulates real-world performance assessment

- Ensures unbiased performance measurement

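```python
# Method of ImageDataModule (DataLoader comes from torch.utils.data)
def test_dataloader(self):
    # Held-out test set, iterated in a fixed order
    return DataLoader(self.test_data, batch_size=self.batch_size)
```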
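Unbiased measurement also depends on scoring every test sample exactly once. The sketch below assumes a hypothetical 100-sample test set and an untrained placeholder model. It accumulates correct predictions across all batches (no shuffling, no drop_last) before dividing, so the smaller final batch is weighted correctly rather than counted as a full batch.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical held-out test set and an untrained placeholder model
test_data = TensorDataset(torch.randn(100, 1, 28, 28), torch.randint(0, 10, (100,)))
test_loader = DataLoader(test_data, batch_size=32)  # no shuffle, no drop_last
model = nn.Sequential(nn.Flatten(), nn.Linear(28 * 28, 10))

model.eval()
correct, seen = 0, 0
with torch.no_grad():  # evaluation only: no gradients needed
    for images, labels in test_loader:
        preds = model(images).argmax(dim=1)
        correct += (preds == labels).sum().item()
        seen += labels.numel()

# Every test sample is scored exactly once, so accuracy covers the whole set
accuracy = correct / seen
print(seen)  # 100
```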

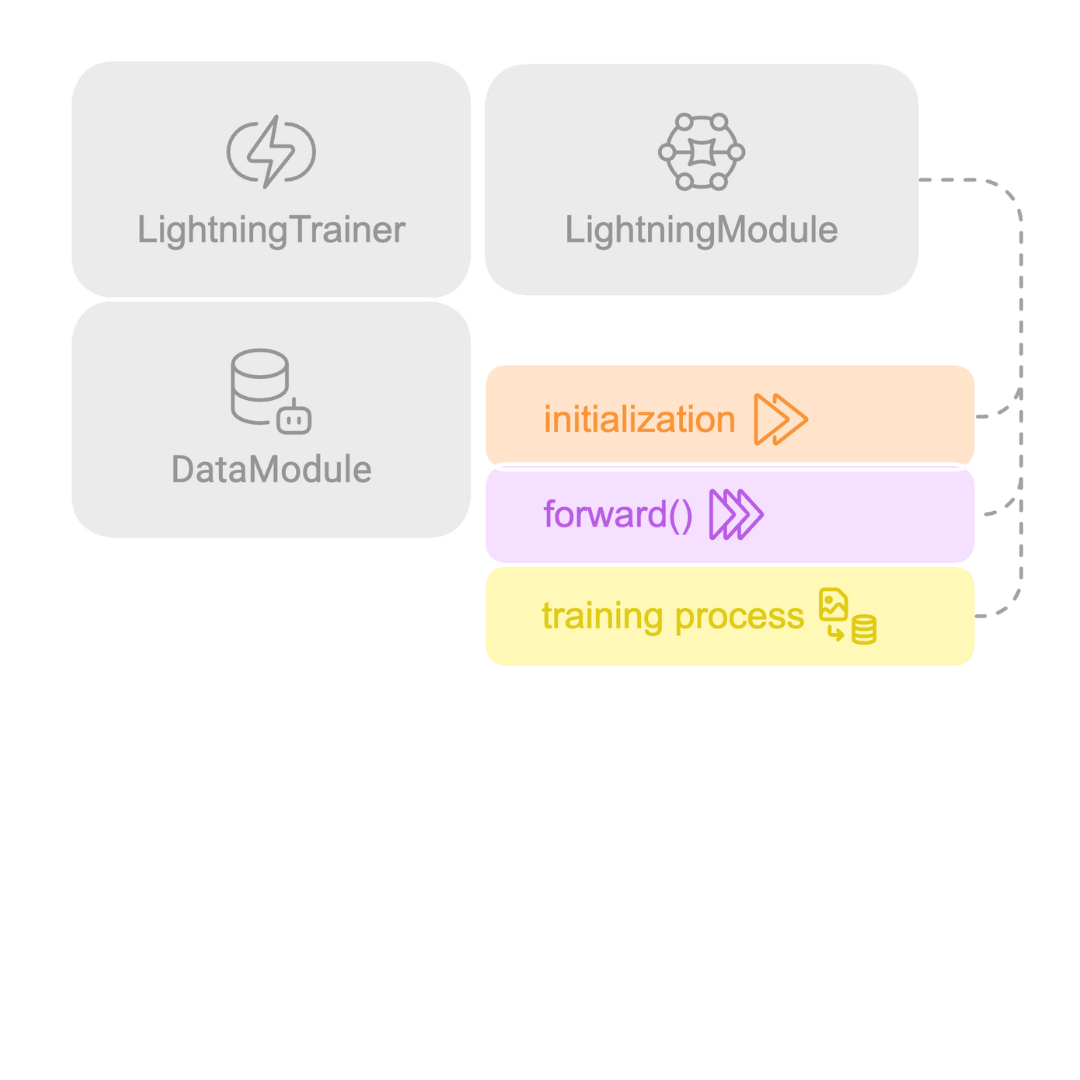

Connecting DataModule to LightningModule

- Modular design separates data and model logic

- LightningDataModule pairs with LightningModule: the Trainer receives both, as in trainer.fit(model, datamodule=dm)

- Standardized workflow enhances reproducibility

Let's practice!