Introduction to PyTorch Lightning

Scalable AI Models with PyTorch Lightning

Sergiy Tkachuk

Director, GenAI Productivity

PyTorch & PyTorch Lightning


Standard PyTorch:

- Requires significant manual effort:

  - Writing explicit training loops

  - Handling GPUs/TPUs, logging, and checkpointing
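
For comparison, here is a minimal plain-PyTorch sketch of that manual effort. It assumes a hypothetical toy dataset (random features with a label derived from them) and a small MLP. The loop, device placement, logging, and checkpointing are all written by hand. The file name `checkpoint.pt` is an arbitrary choice.

```python
import torch
from torch import nn
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)

# Hypothetical toy data: 256 samples, 20 features,
# label = index of the largest of the first 3 features
X = torch.randn(256, 20)
y = X[:, :3].argmax(dim=1)
loader = DataLoader(TensorDataset(X, y), batch_size=32, shuffle=True)

# Device selection and placement are done by hand
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
model = nn.Sequential(nn.Linear(20, 64), nn.ReLU(), nn.Linear(64, 3)).to(device)
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(model.parameters(), lr=1e-2)

epoch_losses = []
for epoch in range(3):  # explicit training loop
    model.train()
    total = 0.0
    for xb, yb in loader:
        xb, yb = xb.to(device), yb.to(device)  # move every batch manually
        optimizer.zero_grad()
        loss = criterion(model(xb), yb)
        loss.backward()
        optimizer.step()
        total += loss.item() * xb.size(0)
    epoch_losses.append(total / len(loader.dataset))
    print(f"epoch {epoch}: train loss {epoch_losses[-1]:.4f}")  # hand-rolled logging

# Hand-rolled checkpointing
torch.save(
    {"epoch": epoch, "model": model.state_dict(), "optimizer": optimizer.state_dict()},
    "checkpoint.pt",
)
```

Each of these hand-written pieces is something Lightning takes over.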

PyTorch Lightning:

- Built on top of PyTorch

- Automates:

  - Training

  - Checkpointing

  - Logging

- Reduces boilerplate

- Improves scalability and reproducibility

Overview of PyTorch Lightning

- Example: a global e-commerce company streamlining its workflows

  - Visual search model development

  - Automated training loops

  - Rapid iteration with minimal boilerplate


- Core components: `LightningModule` and `Trainer`

```python
from lightning.pytorch import LightningModule
from lightning.pytorch import Trainer
```

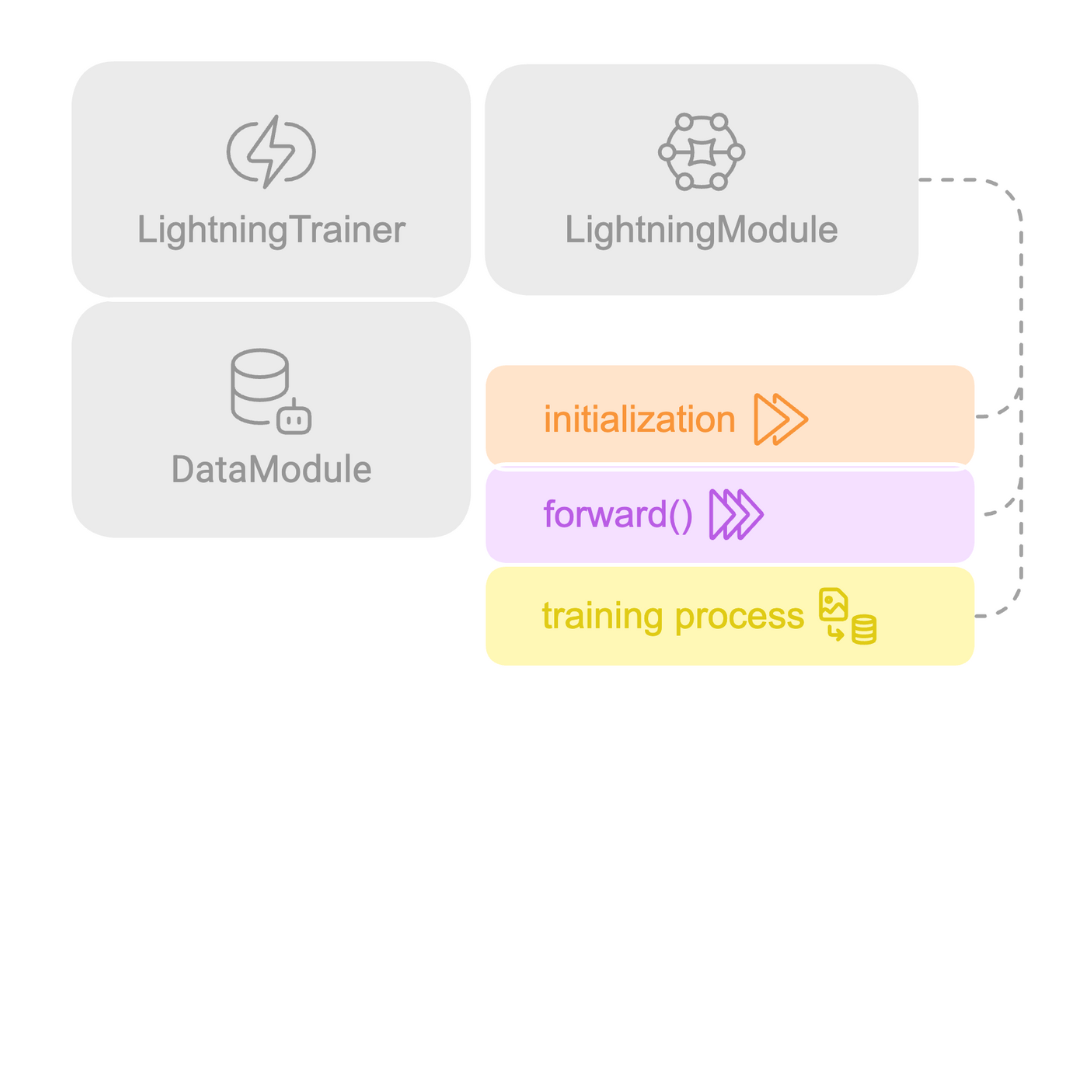

Lightning structure

Key components:

- LightningModule: core model logic

- Lightning Trainer: orchestrates training

- DataModule: organizes data pipelines

- Callbacks: automate actions at specific points in training

- Logger: tracks experiments

LightningModule in action

Key points:

- `__init__()`: defines the model architecture

- `forward()`: passes data through the model

- `training_step()`: defines the training logic for a single batch

- Additional hooks are available for customization

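```python
import lightning.pytorch as pl


class LightClassifier(pl.LightningModule):
    def __init__(self, model, criterion, optimizer):
        super().__init__()
        self.model = model          # the wrapped torch.nn.Module
        self.criterion = criterion  # loss function
        self.optimizer = optimizer  # optimizer built over model.parameters()

    def forward(self, x):
        # Pass data through the wrapped model
        return self.model(x)

    def training_step(self, batch, batch_idx):
        x, y = batch
        logits = self(x)
        loss = self.criterion(logits, y)
        return loss  # the Trainer runs backward() and the optimizer step

    def configure_optimizers(self):
        # Required by the Trainer; reuse the optimizer passed in
        return self.optimizer
```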

Lightning Trainer in action

Key points:

- Manages training loop

- Supports distributed training

- Handles callbacks & logging

- Optimizes resource usage

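```python
from lightning.pytorch import Trainer

# net, criterion, optimizer and the two dataloaders are assumed to exist already
model = LightClassifier(net, criterion, optimizer)
trainer = Trainer(max_epochs=10, accelerator="gpu", devices=1)
trainer.fit(model, train_dataloader, val_dataloader)
```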

Introducing the Afro-MNIST dataset

A set of synthetic MNIST-style datasets for four orthographies used in Afro-Asiatic and Niger-Congo languages: Ge'ez (Ethiopic), Vai, Osmanya, and N'Ko.
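
Since the datasets are MNIST-style, a classifier for them can be sized like an MNIST model. This sketch assumes 28×28 single-channel images and 10 classes per script, following the usual MNIST layout, which this slide does not state. The small CNN (`net`) and `NUM_CLASSES` are hypothetical. Together with a loss and an optimizer, they are the kind of objects that could be passed to `LightClassifier`.

```python
import torch
from torch import nn

NUM_CLASSES = 10  # assumption: MNIST-style 10-class labels

# Hypothetical small CNN for 1x28x28 inputs
net = nn.Sequential(
    nn.Conv2d(1, 16, kernel_size=3, padding=1),   # -> 16x28x28
    nn.ReLU(),
    nn.MaxPool2d(2),                              # -> 16x14x14
    nn.Conv2d(16, 32, kernel_size=3, padding=1),  # -> 32x14x14
    nn.ReLU(),
    nn.MaxPool2d(2),                              # -> 32x7x7
    nn.Flatten(),                                 # -> 1568 features
    nn.Linear(32 * 7 * 7, NUM_CLASSES),
)
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(net.parameters(), lr=1e-3)

# Shape check on a dummy batch of 8 images
logits = net(torch.randn(8, 1, 28, 28))
print(logits.shape)  # torch.Size([8, 10])
```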

1 Wu, Daniel J., Andrew C. Yang, and Vinay U. Prabhu. "Afro-MNIST: Synthetic generation of MNIST-style datasets for low-resource languages." arXiv preprint arXiv:2009.13509 (2020).

PyTorch Lightning recap

Let's practice!
